Abstract

The W3C Multimodal Interaction Working Group aims to develop

specifications to enable access to the Web using multimodal

interaction. This document is part of a set of specifications for

multimodal systems, and provides details of an XML markup language

for containing and annotating the interpretation of user input and production of system output.

Examples of interpretation of user input are a transcription into

words of a raw signal, for instance derived from speech, pen or

keystroke input, a set of attribute/value pairs describing their

meaning, or a set of attribute/value pairs describing a gesture.

The interpretation of the user's input is expected to be generated

by signal interpretation processes, such as speech and ink

recognition, semantic interpreters, and other types of processors

for use by components that act on the user's inputs such as

interaction managers. Examples of stages in the production of a system output, are creation of a semantic representation, an assignment of that representation to a particular modality or modalities, and a surface string for realization by, for example, a text-to-speech engine. The production of the system's output is expected to be generated by output production processes, such as a dialog manager, multimodal presentation planner, content planner, and other types of processors such as surface generation.

Status of this Document

This section describes the status of this document at the

time of its publication. Other documents may supersede this

document. A list of current W3C publications and the latest

revision of this technical report can be found in the W3C technical reports index at

http://www.w3.org/TR/.

This is the @@ June 2015 Working Draft of "EMMA:

Extensible MultiModal Annotation markup language Version 2.0".

It has been produced by the

Multimodal Interaction Working Group,

which is part of the

Multimodal Interaction Activity.

This specification describes markup for representing

interpretations of user input (speech, keystrokes, pen input etc.) and productions of system output together with annotations for confidence scores, timestamps, medium etc., and forms part of the proposals for the W3C Multimodal Interaction

Framework.

The EMMA: Extensible Multimodal Annotation 1.0 specification was published as a W3C Recommendation in February 2009. Since then there have been numerous implementations of the standard and extensive feedback has come in regarding desired new features and clarifications requested for existing features. The W3C Multimodal Interaction Working Group examined a range of different use cases for extensions of the EMMA specification and published a W3C Note on Use Cases for Possible Future EMMA Features [EMMA Use Cases]. In this working draft of EMMA 2.0, we have developed a set of new features based on feedback from implementers and have also added clarification text in a number of places throughout the specification. The new features include: support for adding human annotations (emma:annotation, emma:annotated-tokens), support for inline specification of process parameters (emma:parameters, emma:parameter, emma:parameter-ref), support for specification of models used in processing beyond grammars (emma:process-model, emma:process-model-ref), extensions to emma:grammar to enable inline specification of grammars, a new mechanism for indicating which grammars are active (emma:grammar-active, emma:active), support for non-XML semantic payloads (emma:result-format), support for multiple emma:info elements and reference to the emma:info relevant to an interpretation (emma:info-ref), and a new attribute to complement the emma:medium and emma:mode attributes that enables specification of the modality used to express an input (emma:expressed-through).

In addition we have extended the specification to handle the production of system output, by adding the new element, emma:output and added a series of annotations enabling the use of EMMA for incremental results (Section 4.2.24).

Not addressed in this draft, but planned for a later Working Draft of EMMA 2.0, is a JSON serialization of EMMA documents for use in contexts were JSON is better suited than XML for representing user inputs and system outputs.

Changes from the last working draft

This working draft also adds a new emma:location element for specification of the location of the device or sensor which captured the input. The ref attribute was added to a number of elements allowing for shorter EMMA documents which use URIs to point to content stored outside of the document: emma:one-of, emma:sequence, emma:group, emma:info, emma:parameters, emma:lattice. A new attribute emma:partial-content is introduced which indicates whether the content in an element with ref, is the full content or whether it is partial and more can be retrieved by following the URI in ref. The emma:emma element is extended with doc-ref and prev-doc attributes that indicate where the document can be retrieved from and where the previous document in a sequence of inputs can be retrieved from. The application of emma:lattice is also extended so that an EMMA document can contain both a N-best and a lattice side-by-side. A new Section 3.3 includes an initial proposal for the extension of EMMA to output and the new element emma:output. A new Section 4.2.24 describes new attributes that extend EMMA so that it support incremental results.

A diff-marked version from EMMA 1.1

is available for comparison purposes.

Also changes from EMMA 1.0 can be found in Appendix F.

Comments are welcome on www-multimodal@w3.org

(archive).

See W3C mailing list and archive

usage guidelines.

This document was produced by a group operating under the

5

February 2004 W3C Patent Policy. W3C maintains a public list of any

patent disclosures made in connection with the deliverables of

the group; that page also includes instructions for disclosing a

patent. An individual who has actual knowledge of a patent which

the individual believes contains

Essential Claim(s) must disclose the information in accordance

with

section 6 of the W3C Patent Policy.

The sections in the main body of this document are normative unless

otherwise specified. The appendices in this document are informative

unless otherwise indicated explicitly.

Conventions of this Document

All sections in this specification are normative, unless

otherwise indicated. The informative parts of this specification

are identified by "Informative" labels within sections.

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL

NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL"

in this document are to be interpreted as described in [RFC2119].

Table of Contents

- 1. Introduction

- 2. Structure of EMMA documents

- 3. EMMA structural elements

- 4 EMMA annotations

- 4.1 EMMA annotation elements

- 4.2 EMMA annotation attributes

- 4.2.1 Tokens of input:

emma:tokens, emma:token-type and emma:token-score attributes

- 4.2.2 Reference to processing:

emma:process attribute

- 4.2.3 Lack of input:

emma:no-input attribute

- 4.2.4 Uninterpreted input:

emma:uninterpreted attribute

- 4.2.5 Human language of input:

emma:lang attribute

- 4.2.6 Reference to signal:

emma:signal and

emma:signal-size attributes

- 4.2.7 Media type:

emma:media-type attribute

- 4.2.8 Confidence scores:

emma:confidence attribute

- 4.2.9 Input source:

emma:source

attribute

- 4.2.10 Timestamps

- 4.2.11 Medium, mode, and function of user

inputs:

emma:medium, emma:mode,

emma:function, emma:verbal,emma:device-type, and emma:expressed-through

attributes

- 4.2.12 Composite multimodality:

emma:hook attribute

- 4.2.13 Cost:

emma:cost

attribute

- 4.2.14 Endpoint properties:

emma:endpoint-role,

emma:endpoint-address, emma:port-type,

emma:port-num, emma:message-id,

emma:service-name, emma:endpoint-pair-ref,

emma:endpoint-info-ref

attributes

- 4.2.15 Reference to

emma:grammar element: emma:grammar-ref

attribute

- 4.2.16 Reference to

emma:model

element: emma:model-ref attribute

- 4.2.17 Dialog turns:

emma:dialog-turn attribute

- 4.2.18 Semantic representation type:

emma:output-format attribute

- 4.2.19 Reference to

emma:info element: emma:info-ref attribute

- 4.2.20 Reference to

emma:process-model element: emma:process-model-ref attribute

- 4.2.21 Reference to

emma:parameters

element: emma:parameter-ref attribute

- 4.2.22 Human transcription: the

emma:annotated-tokens attribute

- 4.2.23 Partial content:

emma:partial-content

- 4.2.24 Incremental results:

emma:stream-id, emma:stream-seq-num, emma:stream-status, emma:stream-full-result, emma:stream-token-span, emma:stream-token-span-full, emma:stream-token-immortals, emma:stream-immortal-vertex

-

- 4.3 Scope of EMMA annotations

- 5.Conformance

- 6.Integration of EMMA with other Standards Related to Output

- Appendices

1. Introduction

This section is Informative.

This document presents an XML specification for EMMA, an

Extensible MultiModal Annotation markup language, responding to the

requirements documented in Requirements for EMMA

[EMMA Requirements]. This

markup language is intended for use by systems that provide

semantic interpretations for a variety of inputs and representations for a variety of system outputs. Possible inputs include but are not

necessarily limited to, speech, natural language text, GUI and ink Possible outputs include speech, text, GUI, vibration, and gestures made by embodied agents or robots.

It is expected that this markup will be used primarily as a

standard data interchange format between the components of a

multimodal system; in particular, it will normally be automatically

generated by interpretation components to represent the semantics

of users' inputs and by production components to represent system output, not directly authored by developers.

The language is focused on representing and annotating inputs from users and system generated outputs,

which may be either in a single mode or a composite input

combining information from multiple modes, as opposed to

information that might have been collected over multiple turns of a

dialog. The language provides a set of elements and attributes that

are focused on enabling annotations on user inputs and system outputs and

interpretations of those inputs and representation of those outputs.

An EMMA document can be considered to hold three types of

data:

-

instance data

Application-specific markup corresponding to input or output information

which is meaningful to the consumer of an EMMA document. Instances

are application-specific and built by input and output processors at runtime.

Given that utterances may be ambiguous with respect to input

values, an EMMA document may hold more than one instance. Similarly for output, there may be more than one possible realization of the system output (e.g. different renderings of a semantic representation into a string) and so an EMMA document for output may also hold more than one instance.

-

data model

Constraints on structure and content of an instance. The data

model is typically pre-established by an application, and may be

implicit, that is, unspecified.

-

metadata

Annotations associated with the data contained in the instance.

Annotation values are added by input processors and output generators at runtime. In EMMA 2.0 1.1 annotations may also result from transcription and other activities by human annotators.

Given the assumptions above about the nature of data represented

in an EMMA document, the following general principles apply to the

design of EMMA:

- The main prescriptive content of the EMMA specification will

consist of metadata: EMMA will provide a means to express the

metadata annotations which require standardization. (Notice,

however, that such annotations may express the relationship among

all the types of data within an EMMA document.)

- The instance and its data model are assumed to be specified in XML by default, but the instance may be specified in other formats as defined by the

emma:result-format attribute. EMMA will remain agnostic to the specific details of the format (If it is XML, the instance data is assumed to be sufficiently structured to enable the association of annotative data.)

- The extensibility of EMMA lies in the ability for additional

kinds of metadata to be included in application specific

vocabularies. EMMA itself can be extended with application and

vendor specific annotations contained within the

emma:info element (Section

4.1.5).

The annotations of EMMA should be considered 'normative' in the

sense that if an EMMA component produces annotations as described

in Section 3 and Section

4, these annotations must be represented using the EMMA

syntax. The Multimodal Interaction Working Group may address in

later drafts the issues of modularization and profiling; that is,

which sets of annotations are to be supported by which classes of

EMMA component.

1.1 Uses of EMMA

The general purpose of EMMA is to represent the stages of processing of the inputs and outputs of an automated system. In the case of input this is information

automatically extracted from a user's input by an interpretation

component, where input is to be taken in the general sense of a

meaningful user input in any modality supported by the platform. In the case of output, EMMA represents the stages in the production of a system output.

The reader should refer to the sample architecture in W3C

Multimodal Interaction Framework [MMI

Framework], which shows EMMA conveying content between

user input modality components and an interaction manager. EMMA is one potential transport for system output from an interaction manager to system output modality components.

Input processing components that generate EMMA markup:

- Speech recognizers

- Handwriting recognizers

- Natural language understanding engines

- Other input media interpreters (e.g. DTMF, pointing,

keyboard)

- Multimodal integration components

Components that use EMMA representations of input include:

- Interaction manager

- Multimodal integration component

Output production components that may generate EMMA markup:

- Dialog/interaction manager

- Multimodal presentation planning component

- Natural language generation component

Components that use EMMA representations of output include:

- Text-to-speech engine (audio and or video)

- Graphical presentation components (e.g. HTML, SVG renderer or browser)

- Media presentation components (e.g. video player)

- Robot/embodied agent motion planning or rendering component

1.2 Terminology

- anchor point

- When referencing an input or output interval with

emma:time-ref-uri,

emma:time-ref-anchor-point allows you to specify

whether the referenced anchor is the start or end of the

interval.

- annotation

- Information about the interpreted input or produced output, for example,

timestamps, confidence scores, links to the raw signal, etc.

- composite input

- An input formed from several pieces, often in different modes,

for example, a combination of speech and pen gesture, such as

saying "zoom in here" and circling a region on a map.

- confidence

- A numerical score describing the degree of certainty in a

particular interpretation of user input or the relative quality of a production of system output.

- data model

- For EMMA, a data model defines a set of constraints on possible

interpretations of user input or representations of system output.

- derivation

- Interpretations of user input are said to be derived from that

input, and higher level interpretations may be derived from lower

level ones. EMMA allows you to reference the user input or

interpretation a given interpretation was derived from, see

semantic

interpretation. A system output is said to be derived from a semantic representation produced by the system. There may be multiple stages to the production of a system output and EMMA allows you to reference the previous stage that it was derived from.

- dialog

- For EMMA, dialog can be considered as a sequence of

interactions between

a user and the application.

- endpoint

- In EMMA, this refers to a network location which is the source

or recipient of an EMMA document. It should be noted that the usage

of the term "endpoint" in this context is different from the way

that the term is used in speech processing, where it refers to the

end of a speech input.

- gestures

- In multimodal applications gestures are communicative acts made

by the user or application. An example is circling an area on a map

to indicate a region of interest. Users may be able to gesture with

a pen, keystrokes, hand movements, head

movements, or sound. Gestures often form part of composite input. Application

gestures are typically animations and/or sound effects. Gestures may also be made by a system, e.g. highlighting on a graphical display or physical arm/hand motions by an embodied virtual agent or physical robotic agent.

- grammar

- A set of rules that describe a sequence of tokens expected in a

given input or output. These can be used by speech and handwriting

recognizers to increase recognition accuracy and by natural language generation components to produce well formed output.

- handwriting recognition

- The process of converting pen strokes into text.

- ink recognition

- This includes the recognition of handwriting and pen

gestures.

- input cost

- In EMMA, this refers to a numerical measure indicating the

weight or processing cost associated with a user's input or part of

their input.

- input device

- The device providing a particular input, for example, a

microphone, a pen, a mouse, a camera, or a keyboard.

- input function

- In EMMA, this refers to the use a particular input

is serving, for example, as part of a recording or transcription,

as part of a dialog, or as a means to verify the user's

identity.

- input medium

- Whether the input is acoustic, visual, or tactile, for

instance, a spoken utterance is an example of an acoustic input, a

hand gesture as seen by a camera is an example of a visual input,

pointing with a mouse or pen is an example of a tactile input.

- input mode

- This distinguishes a particular means of providing an input

within a general input medium, for example, speech, DTMF, ink, key

strokes, video, photograph, etc.

- input source

- This is the device that provided the input, for example a

particular microphone or camera. EMMA allows you to identify these

with a URI.

- input tokens

- In EMMA, this refers to a sequence of characters, words or

other discrete units of input.

- instance data

- A representation in XML of an interpretation of user

input.

- interaction manager

- A processor that determines how an application interacts with a

user. This can be at multiple levels of abstraction, for example,

at a detailed level, determining what prompts to present to the

user and what actions to take in response to user input, versus a

higher level treatment in terms of goals and tasks for achieving

those goals. Interaction managers are frequently event driven.

- interpretation

- In EMMA, an interpretation of user input refers to information

derived from the user input that is meaningful to the

application.

- keystroke input

- Input provided by the user pressing on a sequence of keys

(buttons), such as a computer keyboard or keypad.

- lattice

- A set of nodes interconnected with directed arcs such that by

following an arc, you can never find yourself back at a node you

have already visited (i.e. a directed acyclic graph). Lattices

provide a flexible means to represent the results of speech and

handwriting recognition, in terms of arcs representing words or

character sequences. Different arcs from the same node represent

different local hypotheses as to what the user said or wrote.

- metadata

- Information describing another set of data, for instance, a

library catalog card with information on the author, title and

location of a book. EMMA is designed to support input and output processors in

providing metadata for interpretations of user input and system output.

- multimodal integration

- The process of combining inputs from different modes to create

an interpretation of composite input. This is also sometimes

referred to as multimodal fusion.

- multimodal interaction

- The means for a user to interact with an application using more

than one mode of interaction, for instance, offering the user the

choice of speaking or typing, or in some cases, allowing the user

to provide a composite input involving multiple modes.

- multimodal presentation planning

- The process of generation multiple, possibly coordinated, outputs in different models to present information to the user. This is also sometimes referred to as multimodal output generation or multimodal fission.

- natural language

understanding

- The process of interpreting text in terms that are useful for

an application.

- N-best list

- An N-best list is a list of the most likely hypotheses for what

the user actually said or wrote, where N stands for an integral

number such as 5 for the 5 most likely hypotheses. N-best lists can also be used for multiple different possible renderings of system output.

-

-

- output device

- The device producing a particular output, for example, a loudspeaker, display screen, robot.

- output medium

- Whether the output is acoustic, visual, or tactile. For example, a spoken text to speech output is acoustic, a presentation of a table of information on a screen is visual, while haptic feedback is tactile.

- output mode

- This distinguishes a particular means of providing an output within a general output medium, for example, speech, non-speech audio (earcons, alerts), graphics, gesture.

- output tokens

- In EMMA, this refers to a sequence of characters, words, gestures, or other discrete units of output.

- raw signal

- An uninterpreted input, such as an audio waveform captured from

a microphone.

- semantic interpretation

- A normalized representation of the meaning of a user input, for

instance, mapping the speech for "San Francisco" into the airport

code "SFO".

- semantic processor

- In EMMA, this refers to systems that can derive interpretations

of user input, for instance, mapping the speech for "San Francisco"

into the airport code "SFO".

- signal interpretation

- The process of mapping a discrete or continuous signal into a

symbolic representation that can be used by an application, for

instance, transforming the audio waveform corresponding to someone

saying "2005" into the number 2005.

- speech recognition

- The process of determining the textual transcription of a piece

of speech.

- speech synthesis

- The process of rendering a piece of text into the corresponding

speech, i.e. synthesizing speech from text.

- text to speech

- The process of rendering a piece of text into the corresponding

speech.

- time stamp

- The time that a particular input or part of an input began or

ended.

- URI: Uniform Resource Identifier

- A URI is a unifying syntax for the expression of names and

addresses of objects on the network as used in the World Wide Web.

Within this specification, the term URI refers to a Universal

Resource Identifier as defined in [RFC3986]

and extended in [RFC3987] with the new name

IRI. The term URI has been retained in preference to IRI to avoid

introducing new names for concepts such as "Base URI" that are

defined or referenced across the whole family of XML

specifications. A URI is defined as any legal

anyURI primitive as defined in XML Schema Part 2:

Datatypes Second Edition Section 3.2.17 [SCHEMA2].

- user input

- An input provided by a user as opposed to something generated

automatically.

- system output

- An output produced by an automated interactive system.

2. Structure of EMMA documents

This section is Informative.

As noted above, the main components of an interpreted user input

or produced system output in EMMA are the instance data, an optional data model, and the

metadata annotations that may be applied to that input or output. The

realization of these components in EMMA is as follows:

- instance data is contained within an EMMA

interpretationfor input and an EMMA output for output

- the data model is optionally specified as an annotation

of that instance

- EMMA annotations may be applied at different levels of

an EMMA document.

An EMMA interpretation is the primary unit for holding

user input as interpreted by an EMMA processor. As will be seen

below, multiple interpretations of a single input are possible.

An EMMA output is the primary unit for holding system output as generated by an EMMA processor. As will be seen below, multiple possible alternative system outputs are possible.

EMMA provides a simple structural syntax for the organization of

interpretations and instances, and an annotative syntax to apply

the annotation to the input data and output data at different levels.

An outline of the structural syntax and annotations found in

EMMA documents is as follows. A fuller definition may be found in

the description of individual elements and attributes in Section 3 and Section 4.

- EMMA structural

elements (Section 3)

- Root element: The root node of an

EMMA document, the

emma:emma element, holds EMMA

version and namespace information, and provides a container for one

or more of the following interpretation and container elements

(Section 3.1)

- Interpretation element: The

emma:interpretation element contains a given

interpretation of the input and holds application specific markup

(Section 3.2)

- Output element: The

emma:output element contains a given instantiation of system output and holds application specific markup. (Section 3.3)

- Container elements:

emma:one-of is a container for one or more

interpretation elements, output elements, or container elements and denotes that

these are mutually exclusive interpretations or possible system outputs (Section 3.4.1)emma:group is a general container for one or more

interpretation elements, output elements, or container elements. It can be associated

with arbitrary grouping criteria (Section

3.4.2).emma:sequence is a container for one or more

interpretation elements, output elements, or container elements and denotes that

these are sequential in time (Section

3.4.3).

- Lattice element: The

emma:lattice element is used to contain a series of

emma:arc and emma:node elements that

define a lattice of words, gestures, meanings or other symbols. The

emma:lattice element appears within the

emma:interpretation element (Section

3.4) or within the emma:output element.

- Literal element: The

emma:literal element is used as a wrapper when the

application semantics is a string literal. (Section

3.5)

- EMMA annotations (Section 4)

- EMMA annotation elements: These are

EMMA annotations such as

emma:derived-from,

emma:endpoint-info, and emma:info which

are represented as elements so that they can occur more than once

within an element and can contain internal structure. (Section 4.1)

- EMMA annotation attributes: These

are EMMA annotations such as

emma:start,

emma:end , emma:confidence, and

emma:tokens which are represented as attributes. They

can appear on emma:interpretation elements or emma:output elements.

Some can appear on container elements, lattice elements, and

elements in the application-specific markup. (Section 4.2)

From the defined root node emma:emma the structure

of an EMMA document consists of a tree of EMMA container elements

(emma:one-of, emma:sequence,

emma:group) terminating in a number of interpretation

elements (emma:interpretation) or output elements (emma:output). The

emma:interpretation elements serve as wrappers for

either application namespace markup describing the interpretation

of the users input or an emma:lattice element or

emma:literal element . The

emma:output elements serve as wrappers for system output. A single emma:interpretation or a single emma:output may also appear directly under the

root node.

The EMMA elements

emma:emma,

emma:interpretation,

emma:one-of,

and emma:literal

and the EMMA attributes

emma:no-input,

emma:uninterpreted,

emma:medium,

and emma:mode

are required of all

implementations. The remaining elements and attributes are optional

and may be used in some implementations and not other depending on the

specific modalities and processing being represented.

To illustrate this, here is an example of

an EMMA document representing input

to a flight reservation application. In this example there are two

speech recognition results and associated semantic representations

of the input. The system is uncertain whether the user meant

"flights from Boston to Denver" or "flights from Austin to Denver".

The annotations to be captured are timestamps and confidence scores

for the two inputs.

Example:

<emma:emma version="2.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:start="1087995961542" emma:end="1087995963542"

emma:medium="acoustic" emma:mode="voice">

<emma:interpretation id="int1" emma:confidence="0.75"

emma:tokens="flights from boston to denver">

<origin>Boston</origin>

<destination>Denver</destination>

</emma:interpretation>

<emma:interpretation id="int2" emma:confidence="0.68"

emma:tokens="flights from austin to denver">

<origin>Austin</origin>

<destination>Denver</destination>

</emma:interpretation>

</emma:one-of>

</emma:emma>

Attributes on the root emma:emma element indicate

the version and namespace. The emma:emma element

contains an emma:one-of element which contains a

disjunctive list of possible interpretations of the input. The

actual semantic representation of each interpretation is within the

application namespace. In the example here the application specific

semantics involves elements origin and

destination indicating the origin and destination

cities for looking up a flight. The timestamp is the same for both

interpretations and it is annotated using values in milliseconds in

the emma:start and emma:end attributes on

the emma:one-of. The confidence scores and tokens

associated with each of the inputs are annotated using the EMMA

annotation attributes emma:confidence and

emma:tokens on each of the

emma:interpretation elements.

Attributes in EMMA cascade from a containing emma:one-of element to the individual interpretations. In the example above, the emma:start, emma:end, emma:medium, and emma:mode attributes are all specified once on emma:one-of but apply to both of the contained emma:interpretation elements. This is an important mechanism as it limits the need to repeat annotations. More details on the scope of annotations among EMMA structural elements, and also on the scope of annotations within derivations, where multiple different processing stages apply to an input, can be found in Section 4.3.

The core of an EMMA document representing system output is emma:output. Like emma:interpretation, emma:output can appear within container elements. In the following example emma:output elements appear within emma:one-of indicating alternative text-to-speech prompts and this structure is captured with emma:group indicating a graphical table to be presented. The emma:medium, emma:mode, emma:verbal, emma:function, emma:result-format, and emma:lang are the same for both TTS prompts and so they can be specified once on the emma:one-of and are assumed to apply to both prompts.

<emma:emma version="2.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:group

emma:process="http://example.com/multimodal_presentation_planner">

<emma:one-of id="ooo1"

emma:medium="acoustic"

emma:mode="voice"

emma:verbal="true"

emma:function="dialog"

emma:result-format="application/ssml+xml"

emma:lang="en=US"

emma:process="http://example.com/nlg">

<emma:output emma:confidence="0.8" id="tts1">

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis"

xml:lang="en-US">

I found three flights from Boston to Denver.

</speak>

</emma:output>

<emma:output emma:confidence="0.7" id="tts2">

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis"

xml:lang="en-US">

There are flights to Boston from Denver on United, American, and Delta.

</speak>

</emma:output>

</emma:one-of>

<emma:output id="gui1"

emma:medium="visual"

emma:mode="gui"

emma:result-format="text/html"

emma:lang="en-US"

emma:function="dialog"

emma:process="http://example.com/gui_gen">

<html xmlns="http://www.w3.org/1999/xhtml">

<body>

<table>

<tr><td>United</td><td>5.30pm</td></tr>

<tr><td>American</td><td>6.10pm</td></

<tr><td>Delta</td><td>7pm</td></tr>

</table>

</body>

</html>

</emma:output>

</emma:group>

</emma:emma>

Many EMMA elements allow for content to be specified either inline or by reference using the ref attribute. This is an important mechanism as it allows for EMMA documents to be less verbose and yet allows the EMMA consumer to access content from an external document, possibly on a remote server. For example, in the case of emma:grammar a grammar can either be specified inline within the element or the ref attribute on emma:grammar can indicate the location where the grammar document can be retrieved. Similarly with emma:model a data model can be specified inline or by reference through the ref attribute. A ref attribute can also be used on the EMMA container elements emma:sequence, emma:one-of, emma:group, and emma:lattice. In these cases, the ref attribute provides a pointer to a portion of an external EMMA document, possibly on a remote server. This can be achieved using URI ID references to pick out a particular element within the external EMMA document. One use case for ref with the container elements is to allow for inline content to be partial and for the ref to provide access to the full content. For example, in the case of emma:one-of, an EMMA document delivered to an EMMA consumer could contain an abbreviated list of interpretations, e.g. the top 3, while an emma:one-of element accessible through the URI in ref to include a more inclusive list of 20 emma:intepretation elements. The emma:partial-content attribute MUST be used on the partially specified element if the ref refers to a more fully specified element. The emma:ref attribute can also be used on emma:info, emma:parameters, and emma:annotation. The use of ref on specific elements is described and exemplified in the specific section describing each element.

2.1 Data model

An EMMA data model expresses the constraints on the structure

and content of instance data, for the purposes of validation. As

such, the data model may be considered as a particular kind of

annotation (although, unlike other EMMA annotations, it is not a

feature pertaining to a specific user input or system output at a

specific moment in time, it is rather a static and, by its very

definition, application-specific structure). The

specification of a data model in EMMA is optional.

Since Web applications today use different formats to specify

data models, e.g. XML Schema Part 1: Structures Second

Edition [XML Schema

Structures], XForms 1.0 (Second

Edition) [XFORMS], RELAX NG

Specification [RELAX-NG], etc., EMMA

itself is agnostic to the format of data model used.

Data model definition and reference is defined in Section 4.1.1.

2.2 EMMA namespace prefixes

An EMMA attribute is qualified with the EMMA namespace prefix if

the attribute can also be used as an in-line annotation on elements

in the application's namespace. Most of the EMMA annotation

attributes in Section 4.2 are in this category.

An EMMA attribute is not qualified with the EMMA namespace prefix

if the attribute only appears on an EMMA element. This rule ensures

consistent usage of the attributes across all examples.

Attributes from other namespaces are permissible on all EMMA

elements. As an example xml:lang may be used to

annotate the human language of character data content.

3. EMMA structural elements

This section defines elements in the EMMA namespace which

provide the structural syntax of EMMA documents.

3.1 Root element: emma:emma

| Annotation |

emma:emma |

| Definition |

The root element of an EMMA document. |

| Children |

The emma:emma element MUST immediately contain a

single emma:interpretation element or emma:output element or EMMA container

element: emma:one-of, emma:group,

emma:sequence. It MAY also contain an optional single

emma:derivation element. It MAY also contain

multiple optional emma:grammar elements,

emma:model elements, and

emma:endpoint-info elements, emma:info elements, emma:process-model elements, emma:parameters elements, and emma:annotation elements. It may also contain a single emma:location element. |

| Attributes |

- Required:

version: the version of EMMA used for the

interpretation(s). Interpretations expressed using this

specification MUST use 1.1 for the value.- Namespace declaration for EMMA, see below.

- Optional:

- any other namespace declarations for application specific

namespaces.

doc-ref: an attribute of type xsd:anyURI providing a URI indicating the location on a server where the EMMA document with emma:emma as root can be retrieved from.prev-doc: an attribute of type xsd:anyURI providing a URI indicating the location on a server where the EMMA document previous to this EMMA document in the sequence of interaction can be retrieved from.

|

| Applies to |

None |

The root element of an EMMA document is named

emma:emma. It holds a single

emma:interpretation or emma:output element, or an EMMA container element

(emma:one-of, emma:sequence,

emma:group). It MAY also contain a single

emma:derivation element containing earlier stages of

the processing of the input (See Section

4.1.2). It MAY also contain multiple optional

emma:grammar, emma:model, and

emma:endpoint-info , emma:info, emma:process-model, emma:parameters, and emma:annotation elements.

It MAY hold attributes for information pertaining to EMMA

itself, along with any namespaces which are declared for the entire

document, and any other EMMA annotative data. The

emma:emma element and other elements and attributes

defined in this specification belong to the XML namespace

identified by the URI "http://www.w3.org/2003/04/emma". In the

examples, the EMMA namespace is generally declared using the

attribute xmlns:emma on the root

emma:emma element. EMMA processors MUST support the

full range of ways of declaring XML namespaces as defined by the

Namespaces in XML 1.1 (Second Edition) [XMLNS]. Application markup MUST be declared either in an

explicit application namespace, or an undefined namespace

by setting xmlns="".

For example:

<emma:emma version="1.1" xmlns:emma="http://www.w3.org/2003/04/emma">

....

</emma:emma>

or

<emma version="1.1" xmlns="http://www.w3.org/2003/04/emma">

....

</emma>

The optional attributes doc-ref and prev-doc MAY be used on emma:emma in order to indicate the location where the EMMA document comprising that emma:emma element can be retrieved from, and the location of the previous EMMA document in a sequence of interactions. One important use case for doc-ref is for client side logging. A client receiving an EMMA document can record the URI found in doc-ref in a log file instead of a local copy of the whole EMMA document. The prev-doc attribute provides a mechanism for tracking a sequence of EMMA documents representing the results of processing distinct turns of interaction by an EMMA processor.

In the following example, doc-ref on EMMA provides a URI which indicates where the EMMA document embodied in this emma:emma can be retrieved from.

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example"

doc-ref="http://example.com/trainapp/user123/emma0727080512.xml">

<emma:interpretation id="int1"

emma:medium="acoustic"

emma:mode="voice"

emma:function="dialog"

emma:verbal="true"

emma:signal="http://example.com/audio/input678.amr"

emma:process="http://example.com/asr/params.xml"

emma:tokens="trains to london tomorrow">

<destination>London</destination>

<date>tomorrow</date>

</emma:interpretation>

</emma:emma>

In the following example, again doc-ref indicates where the EMMA document can be retrieved from but in addition prev-doc indicates where the previous EMMA document can be retrieved from.

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example"

doc-ref="http://example.com/trainapp/user123/emma0730080512.xml"

prev-doc="http://example.com/trainapp/user123/emma0727080512.xml">

<emma:interpretation id="int1"

emma:medium="acoustic"

emma:mode="voice"

emma:function="dialog"

emma:verbal="true"

emma:signal="http://example.com/audio/input679.amr"

emma:process="http://example.com/asr/params.xml"

emma:tokens="from cambridge">

<origin>Cambridge</origin>

</emma:interpretation>

</emma:emma>

EMMA processors may be use a number of different techniques to determine the prev-doc. It may, for example, be determined based on the session. In a session of interaction a server processing requests for processing can track the previous EMMA result for a client and indicate that in prev-doc. Alternatively, the URI of the last EMMA result could be passed in as a parameter in a request to an EMMA processor and returned in the prev-doc with the next result.

3.2 Interpretation element: emma:interpretation

| Annotation |

emma:interpretation |

| Definition |

The emma:interpretation element acts as a wrapper

for application instance data or lattices. |

| Children |

The emma:interpretation element MUST immediately

contain either application instance data, or a single

emma:lattice element, or a single

emma:literal element, or in the case of uninterpreted

input or no input emma:interpretation

MUST be empty. It MAY also contain multiple

optional emma:derived-from

elements and an optional single

emma:info element multiple

optional emma:info elements. It MAY also contain multiple optional emma:annotation elements. It MAY also contain multiple emma:parameters elements. It MAY also contain a single optional emma:grammar-active element. It may also contain a single emma:location element. |

| Attributes |

- Required: Attribute

id of type

xsd:ID that uniquely identifies the interpretation

within the EMMA document.

- Optional: The annotation attributes:

emma:tokens, emma:process,

emma:no-input, emma:uninterpreted,

emma:lang, emma:signal,

emma:signal-size,

emma:media-type, emma:confidence,

emma:source, emma:start,

emma:end, emma:time-ref-uri,

emma:time-ref-anchor-point,

emma:offset-to-start, emma:duration,

emma:medium, emma:mode,

emma:function, emma:verbal,

emma:cost, emma:grammar-ref,

emma:endpoint-info-ref, emma:model-ref,

emma:dialog-turn, emma:info-ref, emma:parameter-ref, emma:process-model-ref, emma:annotated-tokens, emma:result-format, emma:expressed-through, emma:device-type, emma:stream-id, emma:stream-seq-num, emma:stream-status, emma:stream-full-result, emma:stream-token-span, emma:stream-token-span-full, emma:stream-token-immortals, emma:stream-immortal-vertex

|

| Applies to |

The emma:interpretation element is legal only as a

child of emma:emma, emma:group,

emma:one-of, emma:sequence, or

emma:derivation. |

The emma:interpretation element holds a single

interpretation represented in application specific markup, or a

single emma:lattice element, or a single

emma:literal element.

The emma:interpretation element MUST be empty if it

is marked with emma:no-input="true" (Section 4.2.3). The

emma:interpretation element MUST be empty

if it has been annotated with

emma:uninterpreted="true" (Section 4.2.4) or

emma:function="recording" (Section 4.2.11).

Attributes:

- id a REQUIRED

xsd:ID value that uniquely

identifies the interpretation within the EMMA document.

<emma:emma version="1.1" xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:interpretation id="r1" emma:medium="acoustic" emma:mode="voice">

...

</emma:interpretation>

</emma:emma>

While emma:medium and emma:mode are

optional on emma:interpretation, note that all EMMA

interpretations must be annotated for emma:medium and

emma:mode, so either these attributes must appear

directly on emma:interpretation or they must appear on

an ancestor emma:one-of node or they must appear on an

earlier stage of the derivation listed in

emma:derivation.

3.3 Output element: emma:output

| Annotation |

emma:output |

| Definition |

The emma:output element acts as a wrapper for application instance data specifying a system output. |

| Children |

The emma:output element MUST immediately

contain either application instance data or a single emma:literal element. It MAY also contain multiple

optional emma:derived-from elements and multiple optional emma:info elements. It MAY also contain multiple emma:parameters elements. It may also contain a single emma:location element. |

| Attributes |

- Required: Attribute

id of type xsd:ID that uniquely identifies the output

within the EMMA document.

- Optional: The annotation attributes:

emma:tokens, emma:process, emma:lang, emma:signal, emma:signal-size, emma:media-type, emma:confidence, emma:source, emma:start, emma:end, emma:time-ref-uri, emma:time-ref-anchor-point, emma:offset-to-start, emma:duration, emma:medium, emma:mode, emma:function, emma:verbal, emma:cost, emma:endpoint-info-ref, emma:model-ref, emma:dialog-turn, emma:info-ref, emma:parameter-ref, emma:process-model-ref, emma:output-format,emma:expressed-through.

|

| Applies to |

The emma:output element is legal only as a

child of emma:emma, emma:group, emma:one-of, emma:sequence, or emma:derivation. |

The emma:output element is used as a container for system output represented in EMMA. As such is the outside twin of emma:interpretation. For example, a dialog or interaction manager might generate an emma:output containing application specific semantic representation markup describing a system action. For example, to convey the availability of flights in a travel application.

<emma:emma version="2.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:output emma:confidence="0.9" id=sem1"

emma:medium="acoustic"

emma:mode="voice"

emma:verbal="true"

emma:function="dialog"

emma:media-type=""

emma:start=""

emma:end=""

emma:lang="en-US"

emma:process="http://example.com/dialog_engine">

<inform>

<flights>

<src>DEN</src>

<dest>BOS</dest>

<airlines>

<airline>United</airline>

<airline>American</airline>

<airline>Delta</airline>

</airlines>

</flights>

</inform>

</emma:output>

</emma:emma>

The semantics of annotations on emma:output differs from emma:interpretation, since here we are seeing the stages of the planning of an intended output by the system. Future drafts will address issues of planned vs. actual output.

This representation would then be received by a natural language generation component with generates a text string for the system to speak, here captured in SSML.

<emma:emma version="2.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example"

xmlns="http://www.w3.org/2001/10/synthesis>

<emma:output emma:confidence="0.8" id="tts1">

emma:medium="acoustic"

emma:mode="voice"

emma:verbal="true"

emma:function="dialog"

emma:output-format="application/ssml+xml"

emma:media-type=""

emma:start=""

emma:end=""

emma:lang="en-US"

emma:process="http://example.com/nlg">

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis"

xml:lang="en-US">

There are flights to Boston from Denver on United, American, and Delta.

</speak>

</emma:output>

</emma:emma>

While emma:medium and emma:mode are

optional on emma:output, note that all EMMA

outputs must be annotated for emma:medium and emma:mode, so either these attributes must appear

directly on emma:output or they must appear on

an ancestor emma:one-of node or they must appear on an

earlier stage of the derivation of the output listed in emma:derivation.

3.4 Container elements

3.4.1 emma:one-of element

| Annotation |

emma:one-of |

| Definition |

A container element indicating a disjunction among a collection

of mutually exclusive interpretations of the input. |

| Children |

The emma:one-of element MUST immediately contain a

collection of one or more emma:interpretation elements or one or more emma:output elements or container elements: emma:one-of,

emma:group, emma:sequence UNLESS it is annotated with ref. emma:output elements and emma:interpretation elements MAY NOT be mixed under the same emma:one-of element. It MAY also

contain multiple optional

emma:derived-from elements and multiple emma:info

elements. It MAY also contain multiple optional emma:annotation elements. It MAY also contain multiple optional emma:parameters elements. It MAY also contain multiple optional emma: elements. It MAY also contain a single optional emma:grammar-active element. It MAY also contain a single emma:lattice element containing the lattice result for the same input. It may also contain a single emma:location element. |

| Attributes |

- Required:

- Attribute

id of type xsd:ID

- The attribute

disjunction-type MUST be present if

emma:one-of is embedded within

emma:one-of. The possible values of

disjunction-type are {recognition,

understanding, multi-device, and

multi-process}.

- Optional:

- On a single non-embedded

emma:one-of the attribute

disjunction-type is optional.

- A single

ref attribute of type xsd:anyURI providing a reference to a location where the content of the element can be retrieved from

- An

emma:partial-content attribute of type xsd:boolean indicating whether the content inside the element is partial and more can be retrieved from an external document through ref

- The following annotation attributes are optional:

emma:tokens, emma:process,

emma:lang, emma:signal,

emma:signal-size,

emma:media-type, emma:confidence,

emma:source, emma:start,

emma:end, emma:time-ref-uri,

emma:time-ref-anchor-point,

emma:offset-to-start, emma:duration,

emma:medium, emma:mode,

emma:function, emma:verbal,

emma:cost, emma:grammar-ref,

emma:endpoint-info-ref, emma:model-ref,

emma:dialog-turn,emma:info-ref, emma:parameter-ref, emma:process-model-ref, emma:annotated-tokens, emma:result-format, emma:expressed-through, emma:device-type, emma:stream-id, emma:stream-seq-num, emma:stream-status, emma:stream-full-result

|

| Applies to |

The emma:one-of element MAY only appear as a child

of emma:emma, emma:one-of,

emma:group, emma:sequence, or

emma:derivation. |

The emma:one-of element acts as a container for a

collection of one or more interpretation

(emma:interpretation) or container elements

(emma:one-of, emma:group,

emma:sequence), and denotes that these are mutually

exclusive interpretations.

An N-best list of choices in EMMA MUST be represented as a set

of emma:interpretation elements contained within an

emma:one-of element. For instance, a series of

different recognition results in speech recognition might be

represented in this way.

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:medium="acoustic" emma:mode="voice"

ref="http://www.example.com/i156/emma.xml#r1>

<emma:interpretation id="int1">

<origin>Boston</origin>

<destination>Denver</destination>

<date>03112003</date>

</emma:interpretation>

<emma:interpretation id="int2">

<origin>Austin</origin>

<destination>Denver</destination>

<date>03112003</date>

</emma:interpretation>

</emma:one-of>

</emma:emma>

The function of the emma:one-of element is to

represent a disjunctive list of possible interpretations of a user

input. A disjunction of possible interpretations of an input can be

the result of different kinds of processing or ambiguity. One

source is multiple results from a recognition technology such as

speech or handwriting recognition. Multiple results can also occur

from parsing or understanding natural language. Another possible

source of ambiguity is from the application of multiple different

kinds of recognition or understanding components to the same input

signal. For example, an single ink input signal might be processed

by both handwriting recognition and gesture recognition. Another is

the use of more than one recording device for the same input

(multiple microphones).

The optional ref attribute indicates a location where a copy of the content within the emma:one-of element can be retrieved from an external document, possibly located on a remote server.

In order to make explicit these different kinds of multiple

interpretations and allow for concise statement of the annotations

associated with each, the emma:one-of element MAY

appear within another emma:one-of element. If

emma:one-of elements are nested then they MUST

indicate the kind of disjunction using the attribute

disjunction-type. The values of

disjunction-type are {recognition,

understanding, multi-device, and multi-process}. For the

most common use case, where there are multiple recognition results

and some of them have multiple interpretations, the top-level

emma:one-of is

disjunction-type="recognition" and the embedded

emma:one-of has the attribute

disjunction-type="understanding".

As an example, in an interactive flight reservation application,

recognition yielded 'Boston' or 'Austin' and each had a semantic

interpretation as either the assertion of city name or the

specification of a flight query with the city as the destination,

this would be represented as follows in EMMA:

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of disjunction-type="recognition"

start="12457990" end="12457995"

emma:medium="acoustic" emma:mode="voice">

<emma:one-of disjunction-type="understanding"

emma:tokens="boston">

<emma:interpretation>

<assert><city>boston</city></assert>

</emma:interpretation>

<emma:interpretation>

<flight><dest><city>boston</city></dest></flight>

</emma:interpretation>

</emma:one-of>

<emma:one-of disjunction-type="understanding"

emma:tokens="austin">

<emma:interpretation>

<assert><city>austin</city></assert>

</emma:interpretation>

<emma:interpretation>

<flight><dest><city>austin</city></dest></flight>

</emma:interpretation>

</emma:one-of>

</emma:one-of>

</emma:emma>

In the following example, emma:one-of contains multiple emma:output elements containing different alternative spoken system outputs.

<emma:emma version="2.0"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="ooo1"

emma:medium="acoustic"

emma:mode="voice"

emma:verbal="true"

emma:function="dialog"

emma:result-format="application/ssml+xml"

emma:lang="en=US"

emma:process="http://example.com/nlg">

<emma:output emma:confidence="0.8" id="tts1">

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis"

xml:lang="en-US">

I found three flights from Boston to Denver.

</speak>

</emma:output>

<emma:output emma:confidence="0.7" id="tts2">

<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis"

xml:lang="en-US">

There are flights to Boston from Denver on United, American, and Delta.

</speak>

</emma:output>

</emma:one-of>

</emma:emma>

EMMA MAY explicitly represent ambiguity resulting from different

processes, devices, or sources using embedded

emma:one-of and the disjunction-type

attribute. Multiple different interpretations resulting from

different factors MAY also be listed within a single unstructured

emma:one-of though in this case it is more complex or

impossible to uncover the sources of the ambiguity if required by

later stages of processing. If there is no embedding in

emma:one-of, then the disjunction-type

attribute is not required. If the disjunction-type

attribute is missing then by default the source of disjunction is

unspecified.

The example case above could also be represented as:

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of start="12457990" end="12457995"

emma:medium="acoustic" emma:mode="voice">

<emma:interpretation emma:tokens="boston">

<assert><city>boston</city></assert>

</emma:interpretation>

<emma:interpretation >

<flight><dest><city>boston</city></dest></flight>

</emma:interpretation>

<emma:interpretation emma:tokens="austin">

<assert><city>austin</city></assert>

</emma:interpretation>

<emma:interpretation emma:tokens="austin">

<flight><dest><city>austin</city></dest></flight>

</emma:interpretation>

</emma:one-of>

</emma:emma>

But in this case information about which interpretations

resulted from speech recognition and which resulted from language

understanding is lost.

A list of emma:interpretation elements within an

emma:one-of MUST be sorted best-first by some measure

of quality. The quality measure is emma:confidence if

present, otherwise, the quality metric is platform-specific.

With embedded emma:one-of structures there is no

requirement for the confidence scores within different

emma:one-of to be on the same scale. For example, the

scores assigned by handwriting recognition might not be comparable

to those assigned by gesture recognition. Similarly, if multiple

recognizers are used there is no guarantee that their confidence

scores will be comparable. For this reason the ordering requirement

on emma:interpretation within emma:one-of

only applies locally to sister emma:interpretation

elements within each emma:one-of. There is no

requirement on the ordering of embedded emma:one-of

elements within a higher emma:one-of element.

While emma:medium and emma:mode are

optional on emma:one-of, note that all EMMA

interpretations must be annotated for emma:medium and

emma:mode, so either these annotations must appear

directly on all of the contained emma:interpretation

elements within the emma:one-of, or they must appear

on the emma:one-of element itself, or they must appear

on an ancestor emma:one-of element, or they must

appear on an earlier stage of the derivation listed in

emma:derivation.

An important use case for ref on emma:one-of is to allow an EMMA processor to return an abbreviated list of container elements such as emma:interpretation within an emma:one-of and use the ref attribute to provide a reference to a more fully specified set. In these cases, the emma:one-of MUST be annotated with the emma:partial-content="true" attribute.

In the following example the EMMA document received has the two interpretations within emma:one-of. The emma:partial-content="true" provides an indication that there are more interpretations and those can be retrieved by accessing the URI in ref : "http://www.example.com/emma_021210_10.xml#r1".

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:medium="acoustic" emma:mode="voice"

ref="http://www.example.com/emma_021210_10.xml#r1

emma:partial-content="true">

<emma:interpretation id="int1"

emma:tokens="from boston to denver"

emma:confidence="0.9">

<origin>Boston</origin>

<destination>Denver</destination>

</emma:interpretation>

<emma:interpretation id="int2"

emma:tokens="from austin to denver"

emma:confidence="0.7">

<origin>Austin</origin>

<destination>Denver</destination>

</emma:interpretation>

</emma:one-of>

</emma:emma>

Where the document at "http://www.example.com/emma_021210_10.xml" is as follows, and

there are two more interpretations within the emma:one-of with id "r1".

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:medium="acoustic" emma:mode="voice"

emma:partial-content="false">

<emma:interpretation id="int1"

emma:tokens="from boston to denver"

emma:confidence="0.9">

<origin>Boston</origin>

<destination>Denver</destination>

</emma:interpretation>

<emma:interpretation id="int2"

emma:tokens="from austin to denver"

emma:confidence="0.7">

<origin>Austin</origin>

<destination>Denver</destination>

</emma:interpretation>

<emma:interpretation id="int3"

emma:tokens="from tustin to denver"

emma:confidence="0.3">

<origin>Tustin</origin>

<destination>Denver</destination>

</emma:interpretation>

<emma:interpretation id="int4"

emma:tokens="from tustin to dallas"

emma:confidence="0.1">

<origin>Tustin</origin>

<destination>Dallas</destination>

</emma:interpretation>

</emma:one-of>

</emma:emma>

It is also possible to specify a lattice of results alongside an N-best list of interpretations in emma:one-of. A single emma:lattice element can appear as a child of emma:one-of and contains a lattice representation of the processing of the same input resulting in the interpretations that appear within the emma:one-of.

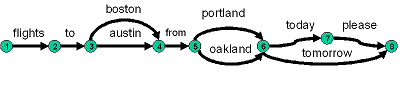

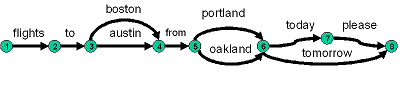

In this example, there are two N-best results and the emma:lattice enumerates two more as it includes arcs for "tomorrow" vs "today".

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:one-of id="r1" emma:medium="acoustic" emma:mode="voice">

<emma:interpretation id="int1" emma:tokens="flights from boston to denver tomorrow">

<origin>Boston</origin>

<destination>Denver</destination>

<date>tomorrow</date>

</emma:interpretation>

<emma:interpretation id="int2" emma:tokens="flights from austin to denver tomorrow">

<origin>Austin</origin>

<destination>Denver</destination>

<date>tomorrow</date>

</emma:interpretation>

<emma:lattice initial="1" final="7">

<emma:arc from="1" to="2">flights</emma:arc>

<emma:arc from="2" to="3">from</emma:arc>

<emma:arc from="3" to="4">boston</emma:arc>

<emma:arc from="3" to="4">austin</emma:arc>

<emma:arc from="4" to="5">to</emma:arc>

<emma:arc from="5" to="6">denver</emma:arc>

<emma:arc from="6" to="7">today</emma:arc>

<emma:arc from="6" to="7">tomorrow</emma:arc>

</emma:lattice>

</emma:one-of>

</emma:emma>

3.4.2 emma:group element

| Annotation |

emma:group |

| Definition |

A container element indicating that a number of interpretations

of distinct user inputs or system outputs are grouped according to some

criteria. |

| Children |

The emma:group element MUST immediately contain a

collection of one or more emma:interpretation elements

or container elements: emma:one-of,

emma:group, emma:sequence . It MAY also

contain an optional single

emma:group-info element. It MAY also contain

multiple optional emma:derived-from

elements and multiple emma:info elements. It MAY also contain multiple optional emma:annotation elements. It MAY also contain multiple optional emma:parameters elements. It MAY also contain a single optional emma:grammar-active element. It may also contain a single emma:location element. |

| Attributes |

- Required: Attribute

id of type

xsd:ID

- Optional:

- A single

ref attribute of type xsd:anyURI providing a reference to a location where the content of the element can be retrieved from

- An

emma:partial-content attribute of type xsd:boolean indicating whether the content inside the element is partial and more can be retrieved from an external document through ref

- The annotation attributes:

emma:tokens, emma:process,

emma:lang, emma:signal,

emma:signal-size,

emma:media-type, emma:confidence,

emma:source, emma:start,

emma:end, emma:time-ref-uri,

emma:time-ref-anchor-point,

emma:offset-to-start, emma:duration,

emma:medium, emma:mode,

emma:function, emma:verbal,

emma:cost, emma:grammar-ref,

emma:endpoint-info-ref, emma:model-ref,

emma:dialog-turn,emma:info-ref, emma:parameter-ref, emma:process-model-ref, emma:annotated-tokens, emma:result-format, emma:expressed-through, emma:device-type.

|

| Applies to |

The emma:group element is legal only as a child of

emma:emma, emma:one-of,

emma:group, emma:sequence, or

emma:derivation. |

The emma:group element is used to indicate that the

contained interpretations are from distinct user inputs that are

related in some manner. emma:group MUST NOT be used

for containing the multiple stages of processing of a single user

input. Those MUST be contained in the emma:derivation

element instead (Section 4.1.2).

For groups of inputs in temporal order the more specialized

container emma:sequence MUST be used (Section 3.3.3). The following example shows

three interpretations derived from the speech input "Move this

ambulance here" and the tactile input related to two consecutive

points on a map.

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example">

<emma:group id="grp"

emma:start="1087995961542"

emma:end="1087995964542">

<emma:interpretation id="int1"

emma:medium="acoustic" emma:mode="voice">

<action>move</action>

<object>ambulance</object>

<destination>here</destination>

</emma:interpretation>

<emma:interpretation id="int2"

emma:medium="tactile" emma:mode="ink">

<x>0.253</x>

<y>0.124</y>

</emma:interpretation>

<emma:interpretation id="int3"

emma:medium="tactile" emma:mode="ink">

<x>0.866</x>

<y>0.724</y>

</emma:interpretation>

</emma:group>

</emma:emma>

The emma:one-of and emma:group

containers MAY be nested arbitrarily.

Like emma:one-of the contents for emma:group may be partial, indicated by emma:partial-content="true" and the full set of group members retrieved by accessing the element referenced in ref.

emma:group may also be used with multiple emma:output as children, for example to represent multimodal output where each emma:output contains content from a particular modality. For example, emma:group can contain a visual table in html grouped with a text-to-speech prompt in SSML, as in the example above (Section 2).

3.4.2.1 Indirect grouping criteria:

emma:group-info element

| Annotation |

emma:group-info |

| Definition |

The emma:group-info element contains or references

criteria used in establishing the grouping of interpretations in an

emma:group element. |

| Children |

The emma:group-info element MUST either

immediately contain inline instance data specifying grouping

criteria or have the attribute ref referencing the

criteria. |

| Attributes |

- Optional:

ref of type

xsd:anyURI referencing the grouping criteria;

alternatively the criteria MAY be provided inline as the content of

the emma:group-info element.

|

| Applies to |

The emma:group-info element is legal only as a

child of emma:group. |

Sometimes it may be convenient to indirectly associate a given

group with information, such as grouping criteria. The

emma:group-info element might be used to make explicit

the criteria by which members of a group are associated. In the

following example, a group of two points is associated with a

description of grouping criteria based upon a sliding temporal

window of two seconds duration.

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example"

xmlns:ex="http://www.example.com/ns/group">

<emma:group id="grp">

<emma:group-info>

<ex:mode>temporal</ex:mode>

<ex:duration>2s</ex:duration>

</emma:group-info>

<emma:interpretation id="int1"

emma:medium="tactile" emma:mode="ink">

<x>0.253</x>

<y>0.124</y>

</emma:interpretation>

<emma:interpretation id="int2"

emma:medium="tactile" emma:mode="ink">

<x>0.866</x>

<y>0.724</y>

</emma:interpretation>

</emma:group>

</emma:emma>

You might also use emma:group-info to refer to a

named grouping criterion using external reference, for

instance:

<emma:emma version="1.1"

xmlns:emma="http://www.w3.org/2003/04/emma"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.w3.org/2003/04/emma

http://www.w3.org/TR/2009/REC-emma-20090210/emma.xsd"

xmlns="http://www.example.com/example"

xmlns:ex="http://www.example.com/ns/group">

<emma:group id="grp">

<emma:group-info ref="http://www.example.com/criterion42"/>

<emma:interpretation id="int1"

emma:medium="tactile" emma:mode="ink">

<x>0.253</x>

<y>0.124</y>

</emma:interpretation>

<emma:interpretation id="int2"

emma:medium="tactile" emma:mode="ink">

<x>0.866</x>

<y>0.724</y>

</emma:interpretation>

</emma:group>

</emma:emma>

3.4.3 emma:sequence element

| Annotation |

emma:sequence |

| Definition |

A container element indicating that a number of interpretations

of distinct user inputs are in temporal sequence. |

| Children |

The emma:sequence element MUST immediately contain

a collection of one or more emma:interpretation

elements or container elements: emma:one-of,

emma:group, emma:sequence . It MAY also

contain multiple optional

emma:derived-from elements and multiple emma:info elements. It MAY also contain multiple optional emma:annotation elements. It MAY also contain multiple optional emma:parameters elements. It MAY also contain a single optional emma:grammar-active element. It may also contain a single emma:location element. |

| Attributes |

- Required: Attribute

id of type

xsd:ID

- Optional:

- A single

ref attribute of type xsd:anyURI providing a reference to a location where the content of the element can be retrieved from

- An

emma:partial-content attribute of type xsd:boolean indicating whether the content inside the element is partial and more can be retrieved from the server through ref

- The annotation attributes:

emma:tokens, emma:process,

emma:lang, emma:signal,