1. Introduction

Depth cameras are increasingly being integrated into devices such as phones, tablets, and laptops. Depth cameras provide a depth map, which conveys the distance between points on an object's surface and the camera. With depth information, web content and applications can be enhanced by, for example, using hand gestures as an input mechanism, or by creating 3D models of real-world objects that can interact and integrate with the web platform. Concrete applications of this technology include more immersive gaming experiences, more accessible 3D video conferences, and augmented reality, to name a few.

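To bring depth capability to the web platform, this specification extends the MediaStream and MediaStreamTrack interfaces so that a MediaStream can also contain depth-based MediaStreamTrack objects, as defined in the Terminology section.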

Depth cameras usually produce 16-bit depth values per pixel. However, neither the canvas drawing surface used to draw and manipulate 2D graphics on the web platform nor the ImageData interface used to represent image data can hold these 16-bit values directly.

The Media Capture Stream with Worker specification [MEDIACAPTURE-WORKER], which complements this specification, enables processing of 16-bit depth values per pixel directly in a worker environment and makes the <video> and <canvas> indirection and the depth-to-grayscale conversion unnecessary. Because this alternative pipeline supports greater bit depth and avoids the performance penalty of the indirection and conversion, it enables more advanced use cases.
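The following non-normative sketch illustrates why a depth-to-grayscale conversion loses information. It assumes raw depth values arrive as a Uint16Array and maps them linearly onto 256 gray levels in an RGBA buffer laid out like ImageData.data. The helper names depthToGrayscale and distinctGrayLevels are hypothetical, and the linear mapping is an illustrative choice, not a conversion defined by this specification.

```typescript
// Hypothetical helper: linearly maps 16-bit depth values (0..65535) to
// 8-bit gray levels (0..255) and writes them into an RGBA buffer laid out
// like ImageData.data (4 bytes per pixel).
function depthToGrayscale(depth: Uint16Array): Uint8ClampedArray {
  const rgba = new Uint8ClampedArray(depth.length * 4);
  for (let i = 0; i < depth.length; i++) {
    // 65535 / 255 = 257, so each gray level stands for ~257 depth values.
    const gray = Math.round(depth[i] / 257);
    rgba[i * 4] = gray;     // R
    rgba[i * 4 + 1] = gray; // G
    rgba[i * 4 + 2] = gray; // B
    rgba[i * 4 + 3] = 255;  // A: fully opaque
  }
  return rgba;
}

// Hypothetical helper: counts how many distinct gray levels remain after
// converting the given depth values.
function distinctGrayLevels(depth: Uint16Array): number {
  const rgba = depthToGrayscale(depth);
  const seen = new Set<number>();
  for (let i = 0; i < rgba.length; i += 4) {
    seen.add(rgba[i]); // R, G, and B are equal, so R suffices
  }
  return seen.size;
}
```

With this mapping, the 257 distinct depth values 1000 through 1256 collapse into just two gray levels (4 and 5), which is the precision loss that processing 16-bit values directly avoids.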

2. Use cases and requirements

This specification attempts to address the Use Cases and Requirements for accessing a depth stream from a depth camera. See also the Examples section for concrete usage examples.

5. Terminology

The term depth+video stream means a MediaStream object that contains one or more MediaStreamTrack objects of kind "depth" (depth stream tracks) and one or more MediaStreamTrack objects of kind "video" (video stream tracks).

The term depth-only stream means a MediaStream object that contains one or more MediaStreamTrack objects of kind "depth" (depth stream tracks) and no other tracks.

The term video-only stream means a MediaStream object that contains one or more MediaStreamTrack objects of kind "video" (video stream tracks), optionally together with MediaStreamTrack objects of kind "audio", and no depth stream tracks.

The term depth stream track means a MediaStreamTrack object whose kind is "depth". It represents a media stream track whose source is a depth camera.

The term video stream track means a MediaStreamTrack object whose kind is "video". It represents a media stream track whose source is a video camera.
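As a non-normative illustration, the terms above can be expressed as a classification over the kind attribute of a stream's tracks. The sketch uses a minimal TrackLike stand-in for MediaStreamTrack so that it runs outside a browser; the names TrackLike, StreamCategory, and classifyStream are hypothetical, and the "other" category is a catch-all added for the sketch.

```typescript
// Hypothetical stand-in for MediaStreamTrack: only `kind` matters here.
interface TrackLike {
  readonly kind: string;
}

type StreamCategory = "depth+video" | "depth-only" | "video-only" | "other";

// Classifies a set of tracks following the terminology definitions:
// - depth+video: at least one "depth" track and at least one "video" track
// - depth-only:  one or more tracks, all of kind "depth"
// - video-only:  at least one "video" track, no "depth" tracks, and
//                otherwise only (optional) "audio" tracks
function classifyStream(tracks: readonly TrackLike[]): StreamCategory {
  const count = (kind: string): number =>
    tracks.filter((t) => t.kind === kind).length;
  const depth = count("depth");
  const video = count("video");
  const audio = count("audio");

  if (depth > 0 && video > 0) return "depth+video";
  if (depth > 0 && depth === tracks.length) return "depth-only";
  if (video > 0 && depth === 0 && video + audio === tracks.length) {
    return "video-only";
  }
  return "other";
}
```

In a browser, the function could be called as classifyStream(stream.getTracks()), since getTracks() returns the stream's MediaStreamTrack objects, each of which has a kind attribute.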

5.1 Depth map

A depth map is an abstract representation of a frame of a depth stream track. It is an image that contains information about the distance of the surfaces of scene objects from a viewpoint.

A depth map has an associated focal length which is a double. It represents the focal length of the camera in millimeters.

A depth map has an associated horizontal field of view which is a double. It represents the horizontal angle of view in degrees.

A depth map has an associated vertical field of view which is a double. It represents the vertical angle of view in degrees.
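The field-of-view values are enough to map a pixel of a depth map back to a point in camera space. The following non-normative sketch assumes an ideal pinhole camera with no lens distortion and the principal point at the image center; the helper name deproject and its parameters are hypothetical. It derives focal lengths in pixels from the fields of view rather than using the focal length attribute, which is expressed in millimeters and would additionally require the physical sensor size.

```typescript
interface Point3 {
  x: number;
  y: number;
  z: number;
}

const degToRad = (deg: number): number => (deg * Math.PI) / 180;

// Hypothetical helper: back-projects pixel (u, v) with depth value `depth`
// into camera space, assuming an ideal pinhole camera whose principal
// point is the image center and whose lens has no distortion.
function deproject(
  u: number,
  v: number,
  depth: number,
  width: number,
  height: number,
  horizontalFovDeg: number,
  verticalFovDeg: number,
): Point3 {
  // Focal lengths in pixels: half the image size divided by tan(fov / 2).
  const fx = width / 2 / Math.tan(degToRad(horizontalFovDeg) / 2);
  const fy = height / 2 / Math.tan(degToRad(verticalFovDeg) / 2);
  // Assumed principal point: the image center.
  const cx = width / 2;
  const cy = height / 2;
  return {
    x: ((u - cx) * depth) / fx,
    y: ((v - cy) * depth) / fy,
    z: depth,
  };
}
```

The returned coordinates share the unit of depth, that is, the active depth map unit. For a 640-pixel-wide map with a 90-degree horizontal field of view, fx is 320 pixels, so a point on the right edge at depth 1000 lies at approximately x = 1000.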

A depth map has an associated unit which is a string. It represents the active depth map unit.

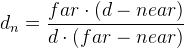

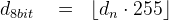

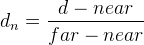

A depth map has an associated near value which is a double. It represents the minimum range in active depth map units.

A depth map has an associated far value which is a double. It represents the maximum range in active depth map units.
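Because the near and far values bound the range of meaningful depth values, a consumer may want to express a raw value relative to that range. The following non-normative sketch does so; the helper name normalizeDepth is hypothetical, and treating values outside [near, far] as out of range (returning null) is an assumption of the sketch, not behavior this specification requires.

```typescript
// Hypothetical helper: maps a depth value (in active depth map units) into
// [0, 1] relative to the depth map's near and far values. Values outside
// [near, far] are reported as null instead of being clamped.
function normalizeDepth(value: number, near: number, far: number): number | null {
  // A range with far <= near cannot be normalized.
  if (!(far > near)) {
    throw new RangeError("far must be greater than near");
  }
  if (value < near || value > far) return null;
  return (value - near) / (far - near);
}
```

For example, with near = 0 and far = 1000, a value of 500 normalizes to 0.5, while 1500 yields null.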

6. Extensions

6.8 WebGLRenderingContext interface

A. Acknowledgements

Thanks to everyone who contributed to the Use Cases and Requirements or sent feedback and comments. Special thanks to Ningxin Hu for experimental implementations, as well as to the Project Tango team for their experiments.