Multimodal Interaction Working Group Charter

The mission of the Multimodal Interaction Working Group is to develop open standards that enable the following vision:

- Extending the Web to allow multiple modes of interaction:
  - GUI, Speech, Vision, Pen, Gestures, Haptic interfaces, ...

- Anyone, Anywhere, Any device, Any time

- Accessible through the user's preferred modes of interaction with services that adapt to the device, user and environmental conditions

| Start date | [dd monthname yyyy] (date of the "Call for Participation", when the charter is approved) |
|---|---|
| End date | 29 February 2020 |
| Charter extension | See Charter History. |
| Chairs | Debbie Dahl |
| Team Contacts | Kazuyuki Ashimura (0.1 FTE) |
| Meeting Schedule | Teleconferences: weekly (one main group call and one task force call). Face-to-face: we will meet during the W3C's annual Technical Plenary week; additional face-to-face meetings may be scheduled by consent of the participants, usually no more than 3 per year. |

Note: The W3C Process Document (2015) requires a charter to state “The level of confidentiality of the group's proceedings and deliverables”; however, it does not mandate where this appears. Since all W3C Working Groups should be chartered as public, this notice has been moved from the essentials table to the Communication section.

Scope

Brief background of landscape, technology, and relationship to the Web, users, developers, implementers, and industry.

Note: should be shorter?

Background

The primary goal of this group is to develop W3C Recommendations that enable multimodal interaction with a variety of devices, including desktop PCs, mobile phones, and less traditional platforms such as cars and intelligent home environments, including digital and connected TVs. The standards should be scalable to enable richer capabilities for subsequent generations of multimodal devices.

Users will be able to provide input via speech, handwriting, motion or keystrokes, with output presented via displays, pre-recorded and synthetic speech, audio, and tactile mechanisms such as mobile phone vibrators and Braille strips. Application developers will be able to provide an effective user interface for whichever modes the user selects. To encourage rapid adoption, the same content can be designed for use on both old and new devices. Multimodal access capabilities depend on the devices used. For example, users of multimodal devices that include not only keypads but also touch panels, microphones and motion sensors can enjoy all the possible modalities, while users of devices with restricted capabilities may prefer simpler and lighter modalities like keypads and voice. Extensibility to new modes of interaction is important, especially in the context of the current proliferation of sensor input types, for example, in biometric identification and medical applications.

Motivation

As technology evolves, the ability to process modalities grows. The office computer is an example: from punch cards to keyboards, mice and touch screens, the machine has seen a variety of modalities come and go. The mediums that enable interaction with a piece of technology continue to expand as increasingly sophisticated hardware is introduced into computing devices. The relationship between mediums and modes is many-to-many: as mediums are introduced into a system, they may introduce new modes or leverage existing ones. For example, a system may have a tactile medium of touch and a visual medium of eye tracking, and these two mediums may share the same mode, such as ink. Devices are increasingly able to weave mediums and modes together, and Modality Components do not necessarily have to be devices. Given the increasing pervasiveness of computing, the need to handle diverse sets of interactions calls for a scalable multimodal architecture that allows for rich human interaction. Systems currently leveraging the multimodal architecture include TV, healthcare, automotive technology, head-up displays and personal assistants. MMI enables interactive, interoperable, smart systems.

Possible new topics

- MMI use case contributions to WoT IG and/or WG
  - Would it be possible for that work to be taken up in WoT?

- Modality Components Discovery and Registration
  - Does that work have to happen in an MMI WG? Perhaps. Would a CG work, or does it need to be a WG for Rec-track considerations?

- MMI architecture to integrate user interface modalities for accessibility into the WoT architecture
  - Handle jointly between MMI and WoT, if MMI still exists?
  - Accessible interfaces between WoT devices

- MMI and Accessibility Note
  - Could this be taken up by the APA WG in an update to MAUR (Media Accessibility User Requirements), using knowledge from the MMI WG?
  - Possibly part of the updated Accessibility User Requirements, or perhaps directly in the Web Technology Accessibility Guidelines, depending on the nature of the guidance needed here.

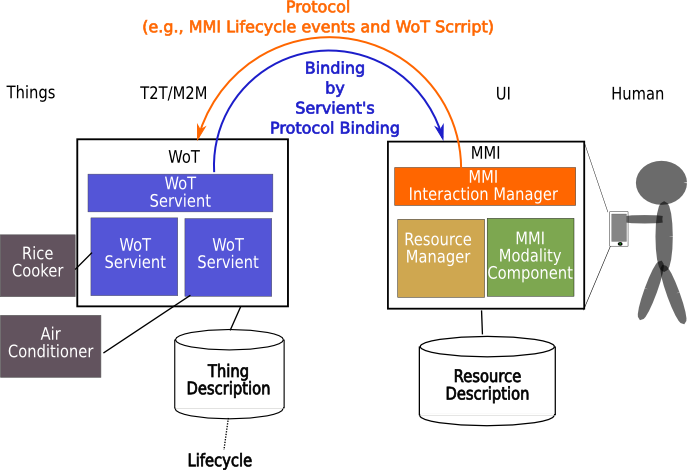

Relationship between WoT and MMI

MMI Ecosystem Example

The diagram below shows an example of an ecosystem of interacting Multimodal Architecture components. In this example, components are located in the home, the car, a smartphone, and the Web. User interaction with these components is mediated by devices such as smartphones or wearables. The MMI Architecture, along with EMMA, provides a layer that virtualizes user interaction on top of generic Internet protocols such as WebSockets and HTTP, or on top of more specific protocols such as ECHONET.

As an example, a user in this ecosystem might be driving home in the car and want to start their rice cooker. The user could say something like "start the rice cooker" or "I want to turn on the rice cooker". MMI Life-Cycle events flowing among the smartphone, the cloud and the home network result in a command being sent to the rice cooker to turn on. To confirm that the rice cooker has turned on, voice output would be selected as the best feedback mechanism for a user who is driving. Similar scenarios could be constructed for interaction with a wide variety of other devices in the home, the car, or other environments.
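To make the flow of life-cycle events more concrete, here is a minimal Python sketch of the StartRequest that an Interaction Manager in the cloud might send to the rice cooker's Modality Component. It assumes the MMI Architecture namespace `http://www.w3.org/2008/04/mmi-arch` and the `Source`, `Target`, `Context` and `RequestID` attributes; the component identifiers and the `<command>` payload are hypothetical. The MMI Architecture Recommendation remains the normative reference for event syntax.

```python
import xml.etree.ElementTree as ET

# Assumed namespace of the MMI Architecture life-cycle events.
MMI_NS = "http://www.w3.org/2008/04/mmi-arch"
ET.register_namespace("mmi", MMI_NS)


def q(name: str) -> str:
    """Qualify a local name with the MMI namespace (ElementTree '{ns}name' form)."""
    return f"{{{MMI_NS}}}{name}"


def start_request(source: str, target: str, context: str,
                  request_id: str, command: str) -> str:
    """Serialize a StartRequest from the Interaction Manager to a component.

    The <command> element inside mmi:Data is a hypothetical,
    application-specific payload; the MMI Architecture does not define it.
    """
    root = ET.Element(q("mmi"), {"version": "1.0"})
    event = ET.SubElement(root, q("StartRequest"), {
        q("Source"): source,         # sender, here the Interaction Manager
        q("Target"): target,         # receiving Modality Component
        q("Context"): context,       # groups all events of one interaction
        q("RequestID"): request_id,  # echoed back in the StartResponse
    })
    data = ET.SubElement(event, q("Data"))
    ET.SubElement(data, "command").text = command
    return ET.tostring(root, encoding="unicode")


# Hypothetical identifiers for the cloud IM and the rice cooker component.
print(start_request("cloud/im", "home/rice-cooker", "ctx-42", "req-1", "power-on"))
```

In a complete exchange the component would reply with a StartResponse carrying the same Context and RequestID, which is how the Interaction Manager correlates replies with its requests.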

Note that precise synchronization of all the related entities is becoming increasingly important: network connections are becoming very fast, and it is now possible to monitor sensors on the other side of the world or even on an artificial satellite.

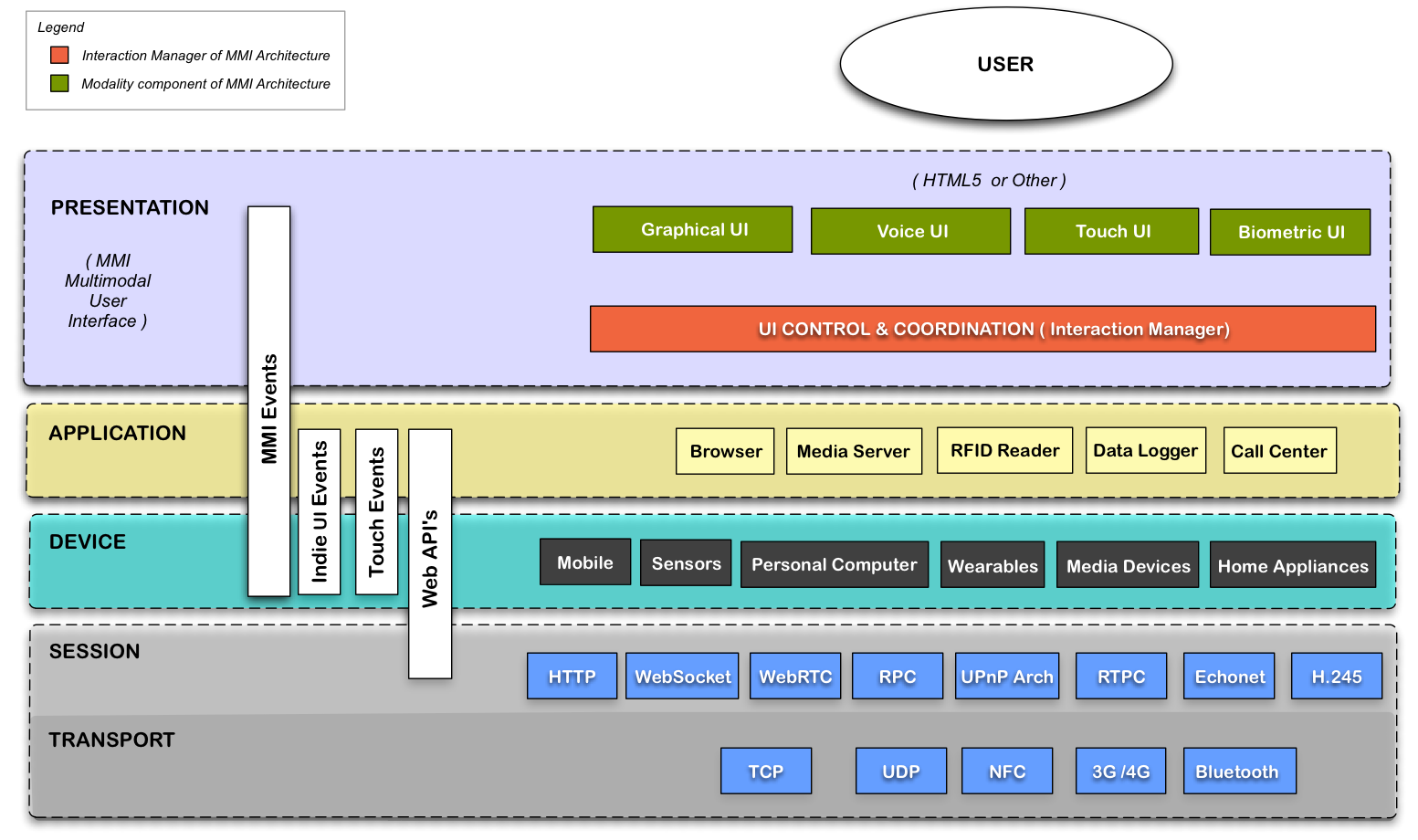

Possible Architecture of Advanced MMI-based Web Applications

The diagram below shows how the MMI-based user interface relates to other Web technologies across the following layers, which communicate through various mechanisms, e.g., MMI Events, IndieUI Events, Touch Events and Web APIs:

- Presentation

- Application

- Device

- Session/Transport

Note that MMI is a generic mechanism for exchanging events among multiple distributed entities in advanced Web applications.

Layer Cake of MMI-based Web Applications

Target Audience

The target audience of the Multimodal Interaction Working Group is vendors and service providers of multimodal applications, which should include a range of organizations in different industry sectors, such as:

- Mobile and hand-held devices
  - As a result of increasingly capable networks, devices, and speech recognition technology, the number of multimodal applications, especially mobile applications, is growing rapidly.

- Home appliances, e.g., TV, and home networks
  - Multimodal interfaces are expected to add value to remote control of home entertainment systems, as well as to find a role in other systems around the home. Companies involved in developing embedded systems and consumer electronics should be interested in W3C's work on multimodal interaction.

- Enterprise office applications and devices
  - Multimodal interaction benefits desktops, wall-mounted interactive displays, multi-function copiers and other office equipment, offering a richer user experience and the chance to add modalities like speech and pens to existing modalities like keyboards and mice. W3C's standardization work in this area should be of interest to companies that develop client software and application authoring technologies and that wish to ensure that the resulting standards live up to their needs.

- Intelligent IT-ready cars
  - With the emergence of dashboard-integrated, high-resolution color displays for navigation, communication and entertainment services, W3C's work on open standards for multimodal interaction should be of interest to companies developing the next generation of in-car systems.

- Medical applications
  - Mobile healthcare professionals and practitioners of telemedicine will benefit from multimodal standards for interactions with remote patients as well as for collaboration with distant colleagues.

Speech is a very practical way for users in those industry sectors to interact with smaller devices, allowing one-handed and hands-free operation. Users benefit from being able to choose which modalities they find convenient in any situation. General-purpose personal assistant applications such as Apple Siri, Google Now, Google Glass, Anboto Sherpa, XOWi, Speaktoit for Android, Indigo for Android and Cluzee for Android are becoming widespread. These applications feature interfaces that include speech input and output, graphical input and output, natural language understanding, and back-end integration with other software. More recently, enterprise personal assistant platforms such as Nuance Nina, Angel Lexee and Openstream Cue-me have also been introduced. In addition to multimodal interfaces, enterprise personal assistant applications frequently include deep integration with enterprise functionality. Personal assistant functionality is merging with earlier multimodal voice search applications, which have been implemented by a number of companies, including Google, Microsoft, Yahoo, Novauris, AT&T, Vocalia, and SoundHound. The Working Group should be of interest to companies developing smartphones and personal digital assistants, or that are interested in providing tools and technology to support the delivery of multimodal services to such devices.

Work to do

To solicit industrial expertise for the expected multimodal interaction ecosystem, the group held the following Workshop and two Webinars:

- W3C Workshop on "Rich Multimodal Application Development" on 22-23 July 2013

- W3C Webinar on "Discovery in Distributed Multimodal Interaction" on 24 September 2013

- W3C Webinar on "Developing Portable Mobile Applications with Compelling User Experience using the W3C MMI Architecture" on 31 January 2014

These Webinars and the Workshop were aimed at Web developers who may find it daunting to incorporate innovative input and output methods such as speech, touch, gesture and swipe into their applications, given the diversity of devices and programming techniques available today. The events produced extensive discussion of rich multimodal Web applications and identified many new use cases and requirements for enhanced multimodal user experiences; for example, distributed and dynamic applications depend on the ability of devices and environments to find each other and learn what modalities they support. Target industry areas include health care, financial services, broadcasting, automotive, gaming and consumer devices. Example use cases include:

- Advanced TV Applications/Services:
  - Authenticated, authorized users can watch TV from any device
  - Multiple devices in sync with each other, as a delivery mechanism for supplementary content
  - Invoking a personalized TV home screen using facial recognition, with a customized program guide, content and applications

- Appliances and Cars:
  - Appliances connected to the cloud, e.g., rice cookers, fridges, microwaves and medical devices
  - Devices connected using NFC-enabled smartphones
  - Cars connected to the cloud and road infrastructure, providing traffic information, recommendations and music

- Interaction with Intelligent Sensors and Devices:
  - Devices dynamically become part of a group in the workplace
  - Sensor/camera input integrated with input from other modalities
  - Voice-enabled personal assistant
  - Browsers take multiple/various inputs: GUI, gesture and sensor input

The main target of "Multimodal Interaction" used to be the interaction between computer systems and humans, so the group has been working on human-machine interfaces, e.g., GUI, speech and touch. However, these events made it clear that the MMI Architecture and EMMA are useful not only for human-machine interfaces but also for integrating machine input and output. Therefore there is a need to consider how to integrate existing non-Web-based embedded systems with Web-based systems, and the group intends to address this issue by undertaking the following standardization work:

- Multimodal Architecture and Interfaces (MMI Architecture)


During the previous charter period the group brought the Multimodal Architecture and Interfaces (MMI Architecture) specification to Recommendation. The group will continue maintaining the specification.

The group may expand the Multimodal Architecture and Interfaces specification to incorporate the description from the Modality Components Registration & Discovery Working Group Note, so that the specification better fits the needs of industry sectors.

- Modality Components Registration & Discovery

The group has been investigating how the Modality Components in the MMI Architecture, which are responsible for controlling the input and output modalities on various devices, can register their existence and capabilities with the Interaction Manager. Examples of registration information include capabilities such as supported languages, ink capture and playback, media capture and playback (audio, video, images, sensor data), speech recognition, text to speech, SVG, geolocation and emotion.

The group's proposal for the description of multimodal properties focuses on the use of semantic Web services technologies over well-known network layers such as UPnP, DLNA, Zeroconf or HTTP. Intended as an extension of the current MMI Architecture and Interfaces Recommendation, the goal is to enhance the discovery of services provided by Modality Components on devices, using well-defined descriptions from a multimodal perspective. We believe that this approach will enhance the autonomous behavior of multimodal applications, providing a robust perception of user-system needs and exchanges, and helping control the semantic integration of human-computer interaction from the perspective of context and functioning environment. Accordingly, the challenge of discovery is addressed using description tools from the field of Web services, such as WSDL 2.0 documents annotated with semantic data (SAWSDL) in OWL or OWL-S, using the modelReference attribute. This extension adds functionality for discovering and registering Modality Components by using semantic WSDL descriptions of the contextual preconditions (QoS, price and other non-functional information), processes and results provided by these components. These documents, following the WSDL 2.0 structure, can be expressed either in XML or in the lighter JSON or YAML formats.

The group published a Working Group Note on Registration & Discovery. Based on that document, the group will produce a document that defines in detail the format and process for registering Modality Components, as well as the process by which the Interaction Manager discovers the existence and current capabilities of Modality Components. This document is expected to become a Recommendation-track document.
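The register-then-discover flow can be pictured with a deliberately simplified Python sketch. It does not implement the WSDL/SAWSDL descriptions proposed above. Instead, it assumes that each Modality Component registers a plain set of capability labels (hypothetical strings such as "speech-recognition") plus optional languages, and that the Interaction Manager discovers components by checking whether the requested capabilities are a subset of the registered ones. All class, method and component names here are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModalityComponent:
    """Registration record for one Modality Component (hypothetical model)."""
    uri: str
    capabilities: set[str]
    languages: set[str] = field(default_factory=set)


class Registry:
    """In-memory registry that an Interaction Manager could query."""

    def __init__(self) -> None:
        self._components: dict[str, ModalityComponent] = {}

    def register(self, mc: ModalityComponent) -> None:
        # Registering an existing URI again replaces its record, so a
        # component can announce changes to its current capabilities.
        self._components[mc.uri] = mc

    def unregister(self, uri: str) -> None:
        self._components.pop(uri, None)

    def discover(self, required: set[str],
                 language: str | None = None) -> list[str]:
        """Return sorted URIs of components offering all required capabilities."""
        return sorted(
            mc.uri for mc in self._components.values()
            if required <= mc.capabilities
            and (language is None or language in mc.languages)
        )


reg = Registry()
reg.register(ModalityComponent("car/asr", {"speech-recognition"}, {"en-US", "ja-JP"}))
reg.register(ModalityComponent("car/tts", {"text-to-speech"}, {"en-US"}))
reg.register(ModalityComponent("phone/ink", {"ink-capture", "ink-playback"}))
print(reg.discover({"speech-recognition"}, language="ja-JP"))  # ['car/asr']
```

Letting a re-registration overwrite the earlier record is one simple way to reflect the "current capabilities" mentioned above; a real design would also need expiry or heartbeat handling for components that disappear without unregistering.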

- Multimodal Authoring


The group will investigate and recommend how various W3C languages can be extended for use in a multimodal environment using the multimodal Life-Cycle Events. The group may prepare W3C Notes on how the following languages can participate in multimodal specifications by incorporating the life cycle events from the multimodal architecture: XHTML, VoiceXML, MathML, SMIL, SVG, InkML and other languages that can be used in a multimodal environment. The working group is also interested in investigating how CSS and Delivery Context: Client Interfaces (DCCI) can be used to support Multimodal Interaction applications, and if appropriate, may write a W3C Note.

- Extensible Multi-Modal Annotations (EMMA) 2.0

EMMA is a data exchange format for the interface between different levels of input processing and interaction management in multimodal and voice-enabled systems. Whereas the MMI Architecture defines communication among components of multimodal systems, including distributed and dynamic systems, EMMA represents the information exchanged in communication with users. For example, EMMA provides the means for input processing components, such as speech recognizers, to annotate application-specific data with information such as confidence scores, time stamps, and input mode classification (e.g., keystrokes, touch, speech, or pen). It also provides mechanisms for representing alternative recognition hypotheses, including lattices, as well as groups and sequences of inputs. EMMA supersedes earlier work on the Natural Language Semantics Markup Language (NLSML) in the Voice Browser Activity.
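As an illustration of these annotations, the Python sketch below wraps two speech recognition hypotheses in an EMMA `one-of` container, with medium, mode and timestamps on the container and a confidence score and token string on each interpretation. It assumes the EMMA 1.0 namespace `http://www.w3.org/2003/04/emma` and the `emma:` attribute names shown; the `action` and `device` elements stand in for hypothetical application-specific semantics. This is a sketch, not a validated EMMA document generator.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

# Assumed EMMA 1.0 namespace; check it against the Recommendation.
EMMA_NS = "http://www.w3.org/2003/04/emma"
ET.register_namespace("emma", EMMA_NS)


def e(name: str) -> str:
    """Qualify a local name with the EMMA namespace."""
    return f"{{{EMMA_NS}}}{name}"


def nbest_to_emma(hypotheses: list[tuple[str, float, dict[str, str]]],
                  start_ms: int, end_ms: int) -> str:
    """Wrap recognizer hypotheses in an emma:one-of container.

    Each hypothesis is (tokens, confidence, semantic slots). Annotations
    shared by all hypotheses (medium, mode, timestamps) go on one-of;
    per-hypothesis confidence and tokens go on each interpretation.
    """
    root = ET.Element(e("emma"), {"version": "1.0"})
    one_of = ET.SubElement(root, e("one-of"), {
        "id": "r1",
        e("medium"): "acoustic",
        e("mode"): "voice",
        e("start"): str(start_ms),  # absolute timestamps in milliseconds
        e("end"): str(end_ms),
    })
    for i, (tokens, confidence, slots) in enumerate(hypotheses, start=1):
        interp = ET.SubElement(one_of, e("interpretation"), {
            "id": f"int{i}",
            e("confidence"): f"{confidence:.2f}",
            e("tokens"): tokens,
        })
        # Application-specific semantics; these element names are hypothetical.
        for slot, value in slots.items():
            ET.SubElement(interp, slot).text = value
    return ET.tostring(root, encoding="unicode")


hyps = [
    ("start the rice cooker", 0.82, {"action": "start", "device": "rice-cooker"}),
    ("start the rice cook", 0.41, {"action": "start", "device": "unknown"}),
]
print(nbest_to_emma(hyps, 1241035886246, 1241035888306))
```

Placing shared annotations on the container keeps them from being repeated on every alternative, while the Interaction Manager can still compare the per-hypothesis confidence scores.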

During the previous charter periods, the group brought EMMA Version 1.0 to Recommendation. The group also published Working Drafts of the EMMA Version 1.1 specification based on the feedback from the MMI Webinars and the MMI Workshop in 2013.

In the period defined by this charter, the group will publish EMMA 2.0, a new version of the specification that incorporates new features addressing issues raised during the development of EMMA 1.1. The group will not do any further work on EMMA Version 1.1; instead, the work done on EMMA 1.1 will be folded into EMMA Version 2.0.

Note that the original EMMA 1.0 specification focused on representing multimodal user inputs; EMMA 2.0 will add representation of multimodal outputs directed to users.

New features for EMMA 2.0 include, but are not limited to, the following:

- Broader range of semantic representation formats, e.g., JSON and RDF

- Handling machine output

- Incremental recognition results, e.g., speech and gesture

- Semantic Interpretation for Multimodal Interaction

During the MMI Webinars and the MMI Workshop, there was discussion of a possible mechanism for semantic interpretation in multimodal interaction, e.g., for gesture recognition, analogous to Semantic Interpretation for Speech Recognition (SISR) for speech.

The group will begin investigating how SISR could be applied to semantic interpretation for non-speech Modality Components, and will discuss concrete use cases and requirements. However, the group will not produce a new version of the SISR specification.

- Maintenance work


The group will be maintaining the following specifications:

The group will also consider publishing additional versions of the specifications depending on feedback from the user community and industry sectors.

Out of Scope

The following features are out of scope, and will not be addressed by this (Working|Interest) group.

Success Criteria

In order to advance to Proposed Recommendation, each specification is expected to have at least two independent implementations of each feature defined in the specification.

Each specification should contain a section detailing any known security or privacy implications for implementers, Web authors, and end users.

For specifications of technologies that directly impact user experience, such as content technologies, as well as protocols and APIs which impact content: Each specification should contain a section describing known impacts on accessibility to users with disabilities, ways the specification features address them, and recommendations for minimizing accessibility problems in implementation.

For each document to advance to Proposed Recommendation, the group will produce a technical report with at least two independent and interoperable implementations for each required feature. The Working Group anticipates two interoperable implementations for each optional feature but may reduce the criteria for specific optional features.
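As a rough illustration of this exit criterion, the following Python sketch checks a hypothetical implementation report. The row format, feature names and implementation names are invented for the example, and the code only counts passing implementations; judging whether implementations are truly independent and interoperable is assumed to happen elsewhere.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureResult:
    """Implementation-report row for one feature (hypothetical format)."""
    name: str
    required: bool
    # Names of independent implementations that pass this feature's tests;
    # independence and interoperability are assumed to be vetted elsewhere.
    passing: frozenset[str]


def exit_gaps(results: list[FeatureResult], minimum: int = 2) -> dict[str, list[str]]:
    """List features with fewer than `minimum` passing implementations.

    Required-feature gaps block the transition; optional-feature gaps are
    reported separately because the group may relax criteria for them.
    """
    gaps: dict[str, list[str]] = {"required": [], "optional": []}
    for r in results:
        if len(r.passing) < minimum:
            gaps["required" if r.required else "optional"].append(r.name)
    return gaps


report = [
    FeatureResult("one-of", True, frozenset({"ImplA", "ImplB"})),
    FeatureResult("lattice", True, frozenset({"ImplA"})),
    FeatureResult("incremental-results", False, frozenset()),
]
print(exit_gaps(report))
# {'required': ['lattice'], 'optional': ['incremental-results']}
```

Keeping required and optional gaps apart mirrors the criterion above, where only optional features may have their thresholds reduced.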

Deliverables

Issue: I've removed the milestones table, which is always wildly inaccurate, and included the milestones as projected completion dates in the description template for each deliverable. Up-to-date milestones tables should be maintained on the group page and linked to from this charter.

More detailed milestones and updated publication schedules are available on the group publication status page.

Draft state indicates the state of the deliverable at the time of the charter approval. Expected completion indicates when the deliverable is projected to become a Recommendation, or otherwise reach a stable state.

Normative Specifications

The (Working|Interest) Group will deliver the following W3C normative specifications:

- Web [spec name]


This specification defines [concrete description].

Draft state: [No draft | Use Cases and Requirements | Editor's Draft | Member Submission | Adopted from WG/CG Foo | Working Draft]

Expected completion: [Q1–4 yyyy]

The following documents are expected to become W3C Recommendations:

- EMMA: Extensible MultiModal Annotation markup language Version 2.0 (based on the current 1.1 Working Draft)

- Modality Components Registration & Discovery

The following documents are Working Group Notes and are not expected to advance toward Recommendation:

- Authoring Examples

- Multimodality and Accessibility

- Semantic Interpretation for Multimodality

Other Deliverables

Other non-normative documents may be created such as:

- Use case and requirement documents;

- Test suite and implementation report for the specification;

- Primer or Best Practice documents to support web developers when designing applications.

Timeline

Put here a timeline view of all deliverables.

- Month YYYY: First teleconference

- Month YYYY: First face-to-face meeting

- Month YYYY: Requirements and Use Cases for FooML

- Month YYYY: FPWD for FooML

- Month YYYY: Requirements and Use Cases for BarML

- Month YYYY: FPWD FooML Primer

Coordination

For all specifications, this (Working|Interest) Group will seek horizontal review for accessibility, internationalization, performance, privacy, and security with the relevant Working and Interest Groups, and with the TAG. An invitation for review must be issued during each major standards-track document transition, including FPWD and CR, and should be issued when major changes occur in a specification.

Issue: The paragraph above replaces line-item liaisons and dependencies with individual horizontal groups. There's been a suggestion to name and individually link the specific horizontal groups inline, instead of pointing to a page that lists them.

Issue: The requirement for an invitation to review after FPWD and before CR may seem a bit overly restrictive, but it only requires an invitation, not a review, a commitment to review, or even a response from the horizontal group. This compromise offers early notification without introducing a bottleneck.

Additional technical coordination with the following Groups will be made, per the W3C Process Document:

In addition to the above catch-all reference to horizontal review which includes accessibility review, please check with chairs and staff contacts of the Accessible Platform Architectures Working Group to determine if an additional liaison statement with more specific information about concrete review issues is needed in the list below.

W3C Groups

- [other name] Working Group

- [specific nature of liaison]

These are W3C activities that may be asked to review documents produced by the Multimodal Interaction Working Group, or which may be involved in closer collaboration as appropriate to achieving the goals of the Charter.

- Audio WG
  - advanced sound and music capabilities through client-side script APIs

- Automotive and Web Platform BG
  - Web APIs for vehicle data

- CSS WG
  - styling for multimodal applications

- Device APIs WG
  - client-side APIs for developing Web Applications and Web Widgets that interact with device services

- Forms
  - separating forms into data models, logic and presentation

- Geolocation WG
  - handling geolocation information of devices

- HTML WG
  - HTML5 browser as Graphical User Interface

- Internationalization (I18N) WG
  - support for human languages across the world

- Independent User Interface (IndieUI) WG
  - event models for APIs that facilitate interaction in Web applications that are input-method independent and accessible to people with disabilities

- Math WG
  - InkML includes a subset of MathML functionality through the <mapping> element

- SVG WG
  - graphical user interfaces

- Privacy IG
  - support of privacy in Web standards

- Semantic Web Health Care and Life Sciences IG
  - Semantic Web technologies across health care, life sciences, clinical research and translational medicine

- Semantic Web IG
  - the role of metadata

- Speech API CG
  - integrating speech technology in HTML5

- System Applications WG
  - runtime environment, security model and associated APIs for building Web applications with capabilities comparable to native applications

- Timed Text WG
  - synchronized text and video

- Voice Browsers WG
  - voice interfaces

- WAI Protocols and Formats WG
  - ensuring accessibility for Multimodal systems

- WAI User Agent Accessibility Guidelines WG
  - accessibility of user interfaces to Multimodal systems

- Web and TV IG
  - TV and other CE devices as Modality Components for Multimodal systems

- Web and Mobile IG
  - browsing the Web from mobile devices

- Web Annotations WG
  - generic data model for annotations and the basic infrastructural elements to make it deployable in browsers and reading systems

- Web Applications WG
  - specifications for webapps, including standard APIs for client-side development, the Document Object Model (DOM) and a packaging format for installable webapps

- Web of Things CG
  - adoption of Web technologies as a basis for enabling services for the Internet of Things

- WebRTC WG
  - client-side APIs to enable Real-Time Communications in Web browsers

- Web Security IG
  - improving standards and implementations to advance the security of the Web

Furthermore, the Multimodal Interaction Working Group expects to follow these W3C Recommendations:

- QA Framework: Specification Guidelines.

- Character Model for the World Wide Web 1.0: Fundamentals

- Architecture of the World Wide Web, Volume I

Note: Do not list horizontal groups here, only specific WGs relevant to your work.

External Organizations

- [other name] Working Group

- [specific nature of liaison]

This is an indication of external groups with goals complementary to those of the Multimodal Interaction activity. W3C has formal liaison agreements with some of them, e.g., the VoiceXML Forum. The group will also coordinate with other related organizations as needed.

- 3GPP
  - protocols and codecs relevant to multimodal applications

- DLNA
  - open standards and widely available industry specifications for entertainment devices and home networks

- Echonet Consortium
  - standard communication protocol and data format for smart homes

- ETSI
  - work on human factors and command vocabularies

- IETF
  - the SpeechSC working group has a dependency on EMMA for the MRCP v2 specification

- ITU-T (SG13)
  - generic architecture for device integration

- ISO/IEC JTC 1/SC 37 Biometrics
  - user authentication in multimodal applications

- OASIS BIAS Integration TC
  - defining methods for using biometric identity assurance in transactional Web services and SOAs; initial discussion of how EMMA could be used as a data format for biometrics

- UPnP Forum
  - networking protocols that permit networked devices, such as personal computers, printers, Internet gateways, Wi-Fi access points and mobile devices, to seamlessly discover each other's presence on the network and establish functional network services

- VoiceXML Forum
  - an industry association for VoiceXML

Participation

To be successful, this (Working|Interest) Group is expected to have 6 or more active participants for its duration, including representatives from the key implementors of this specification, and active Editors and Test Leads for each specification. The Chairs, specification Editors, and Test Leads are expected to contribute half of a day per week towards the (Working|Interest) Group. There is no minimum requirement for other Participants.

The group encourages questions, comments and issues on its public mailing lists and document repositories, as described in Communication.

The group also welcomes non-Members to contribute technical submissions for consideration upon their agreement to the terms of the W3C Patent Policy.

Communication

Technical discussions for this (Working|Interest) Group are conducted in public: the meeting minutes from teleconference and face-to-face meetings will be archived for public review, and technical discussions and issue tracking will be conducted in a manner that can be both read and written to by the general public. Working Drafts and Editor's Drafts of specifications will be developed on a public repository, and may permit direct public contribution requests. The meetings themselves are not open to public participation, however.

Information about the group (including details about deliverables, issues, actions, status, participants, and meetings) will be available from the [name] (Working|Interest) Group home page.

Most [name] (Working|Interest) Group teleconferences will focus on discussion of particular specifications, and will be conducted on an as-needed basis.

This group primarily conducts its technical work pick one, or both, as appropriate: on the public mailing list public-[email-list]@w3.org (archive) or on GitHub issues. The public is invited to review, discuss and contribute to this work.

The group may use a Member-confidential mailing list for administrative purposes and, at the discretion of the Chairs and members of the group, for member-only discussions in special cases when a participant requests such a discussion.

Decision Policy

This group will seek to make decisions through consensus and due process, per the W3C Process Document (section 3.3). Typically, an editor or other participant makes an initial proposal, which is then refined in discussion with members of the group and other reviewers, and consensus emerges with little formal voting being required.

However, if a decision is necessary for timely progress, but consensus is not achieved after careful consideration of the range of views presented, the Chairs may call for a group vote, and record a decision along with any objections.

To afford asynchronous decisions and organizational deliberation, any resolution (including publication decisions) taken in a face-to-face meeting or teleconference will be considered provisional. A call for consensus (CfC) will be issued for all resolutions (for example, via email and/or web-based survey), with a response period from one week to 10 working days, depending on the chair's evaluation of the group consensus on the issue. If no objections are raised on the mailing list by the end of the response period, the resolution will be considered to have consensus as a resolution of the (Working|Interest) Group.

All decisions made by the group should be considered resolved unless and until new information becomes available, or unless reopened at the discretion of the Chairs or the Director.

This charter is written in accordance with the W3C Process Document (Section 3.4, Votes), and includes no voting procedures beyond what the Process Document requires.

[Keep 'Patent Policy' for a Working Group, 'Patent Disclosures' for an Interest Group]

Patent Policy

This Working Group operates under the W3C Patent Policy (5 February 2004 Version). To promote the widest adoption of Web standards, W3C seeks to issue Recommendations that can be implemented, according to this policy, on a Royalty-Free basis. For more information about disclosure obligations for this group, please see the W3C Patent Policy Implementation.

Patent Disclosures

The Interest Group provides an opportunity to share perspectives on the topic addressed by this charter. W3C reminds Interest Group participants of their obligation to comply with patent disclosure obligations as set out in Section 6 of the W3C Patent Policy. While the Interest Group does not produce Recommendation-track documents, when Interest Group participants review Recommendation-track specifications from Working Groups, the patent disclosure obligations do apply. For more information about disclosure obligations for this group, please see the W3C Patent Policy Implementation.

Licensing

This (Working|Interest) Group will use the [pick a license, one of:] W3C Document license | W3C Software and Document license for all its deliverables.

About this Charter

This charter has been created according to section 5.2 of the Process Document. In the event of a conflict between this document or the provisions of any charter and the W3C Process, the W3C Process shall take precedence.

Charter History

Note: Display this table and update it when appropriate. Requirements for charter extension history are documented in the Charter Guidebook (section 4).

The following table lists details of all changes from the initial charter, per the W3C Process Document (section 5.2.3):

| Charter Period | Start Date | End Date | Changes |
|---|---|---|---|
| Initial Charter | [dd monthname yyyy] | [dd monthname yyyy] | none |
| Charter Extension | [dd monthname yyyy] | [dd monthname yyyy] | none |
| Rechartered | [dd monthname yyyy] | [dd monthname yyyy] | [description of change to charter, with link to new deliverable item in charter] |


The most important changes from the previous charter are:

- 1.1 Background
  - added a description of the background, including the motivation, an example of a Multimodal Interaction ecosystem, and a possible architecture of advanced MMI-based Web applications.

- 1.2 Target Audience
  - updated the description to reflect the current situation.

- 1.3 Work to do
  - added a description of new requirements for the MMI Architecture and related specifications from the participants in the MMI Webinars and the MMI Workshop held in 2013.
  - updated the description of expected deliverables based on the feedback from the Webinars and the Workshop.
  - removed "Device Handling Capability", and added "Multimodal Authoring" and "Semantic Interpretation for Multimodal Interaction" to the deliverables based on the feedback from the Webinars and the Workshop.
  - added "EMMA 2.0" to the deliverables based on the work on "EMMA 1.1".
  - removed "EmotionML" from the deliverables because it has become a W3C Recommendation.

- 2. Deliverables
  - changed "Registration & Discovery" from a WG Note to a Recommendation-track document based on the feedback from the Webinars and the Workshop.

- 3. Dependencies
  - updated the lists of related internal and external groups.