WebPerf WG @ TPAC 2020

bit.ly/webperf-tpac20

Logistics

When

October 19-22 2020 - 9am-12pm PST

Registering

- Register!

- Free for WG Members and Invited Experts

- If you’re not one and want to join, ping the chairs to discuss

Calling in

Video call link

Attendees

- Yoav Weiss (Google)

- Nic Jansma (Akamai)

- Nicolás Peña Moreno (Google)

- Michal Mocny (Google)

- Cliff Crocker (SpeedCurve)

- Ian Clelland (Google)

- Benjamin De Kosnik (Mozilla)

- Sean Feng (Mozilla)

- Noam Rosenthal (Invited expert)

- Steven Bougon(Salesforce)

- Noam Helfman (Microsoft)

- Andrew Comminos (Facebook)

- Nathan Schloss (Facebook)

- Nicolas Dubus (Facebook)

- Patrick Hulce (Invited expert)

- Scott Haseley (Google)

- Nicole Sullivan (Google)

- Matt Falkenhagen (observer) (Google)

- Andrew Galloni (Cloudflare)

- Ulan Degenbaev (Google)

- Kinuko Yasuda (Google)

- Patrick Meenan (Invited expert)

- Carine Bournez (W3C)

- Subrata Ashe (Salesforce)

- Alex Christensen (Apple)

- Thomas McCabe (observer) (Squarespace)

- Nolan Lawson (Salesforce)

- Dinko Bajric (Salesforce)

- Noah Lemen (Facebook)

- Tim Dresser (Google)

- Gilles Dubuc (Wikimedia Foundation)

- Jeremy Roman (observer) (Google)

- Ian Vollick (observer) (Google)

- Utkarsh Goel (Akamai)

- Ziran sun (Igalia)

- Domenic Denicola (Google)

- Boris Schapira (invited expert, Dareboost)

- Arno Renevier (Facebook)

- Alex Russell (Google, observer)

- Ryosuke Niwa (Apple)

- Camille Lamy (Google)

Agenda

Times in PT

Monday - October 19

Recording: part 1, part 2

Tuesday - October 20

Recording: part 1, part 2, part 3

Wednesday - October 21

Recording: part 1, part 2

Thursday - October 22

Recording: part 1, part 2

Secondary Scribes

Session summary

Video, minutes

Video, minutes

- In this session we outlined the goals for a potential future Smoothness / Frame Timing API for Animations (including scrolling) on the web.

- We introduced the idea of focusing on “Missed opportunities to show expected animation updates” (aka “dropped frames”) which is a more user focused measure of impact, vs just focusing on overall FPS broadly. We also discussed briefly what exactly would constitute an animation, “dropped frame”, and how smoothness relates to responsiveness.

- We also outlined several different options for surfacing the data via API (i.e.: per animation, per frame, or as a single page-level summary)

- Some topics discussed:

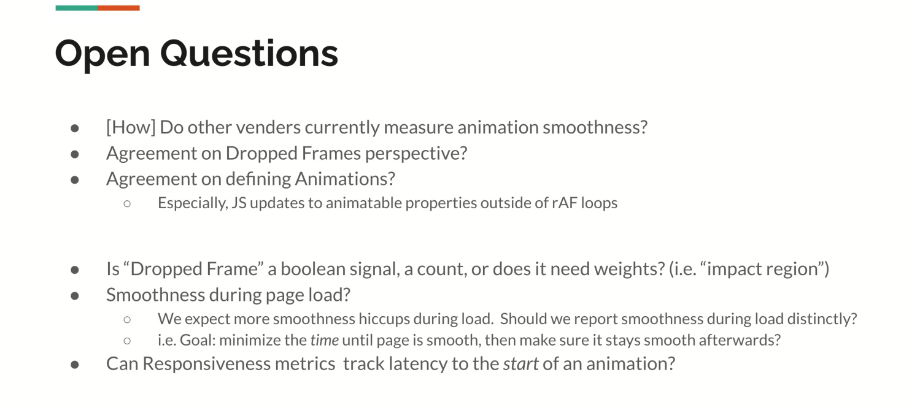

- “variable refresh rate” monitors, where the decision to increase screen refresh rate could be driven by the performance of animations on the page in the first place. How should we balance optimizing for “expected frames”?

- Should we have smoothness targets? Perhaps some applications want to reach the maximum supported device refresh rate, while others this is less critical.

- Learning from the video gaming industry.

- Existing metrics RUM providers track: #rAF’s over time (however, not super useful right now)

- Address questions raised (especially around variable refresh rates)

- Move proposal to github for more feedback, and open a WICG issue

Video, minutes

- In this session we discussed the challenges and opportunities for Core Web Vitals, including how we can work to normalize metrics across synthetic and RUM that are skewed due to the measurement of the page lifecycle.

- We also discussed issues with reporting the performance of third-parties, specifically ads and the urgency of several proposed issues related to measurement of same origin and cross-origin frames.

- The topic of server timing was also briefly raised, specifically how we should be encouraging others to adopt this (CDNs as well as developers) in an effort to provide a better understanding of what is impacting TTFB.

- Next steps:

- Look at how we can effectively leverage Reporting API to more accurately measure load-limited metrics.

- Work with browser vendors and others to potentially adjust CWV thresholds such as FID to be more meaningful

- Push for the prioritization of several open issues related to measuring resources w/in iframes

- More discussion needed around third-party script scheduling/reporting API

Video, minutes

- We presented the various scheduling problems and APIs our team is focused on

- scheduler.postTask: Queue tasks with browser scheduler, with ability to cancel and reprioritize groups of tasks (in OT through mid-January 2021).

- scheduler.currentTaskSignal: provide a way to provide the current task context (priority, etc.) (also in OT through mid-January 2021).

- Yield: Provide an ergonomic and efficient method of breaking up long tasks (revised, narrowly-scoped proposal in progress)

- 3P Script Scheduling: provide developers with some control over scheduling 3P execution on main thread (currently exploring data and API shapes).

- We discussed several other problems/APIs on our radar

- Priorities on other async work, task ordering guarantees, more context propagation, interaction of postTask and rendering, scheduling microtasks, after-task callbacks, etc.

- Some discussion on 3P scheduling and the idea of creating “scheduling domains” to isolate parts of the page

- AIs/Next steps

- Share more concrete thoughts about yield and 3P scheduling with group as proposals take shape

Video, minutes

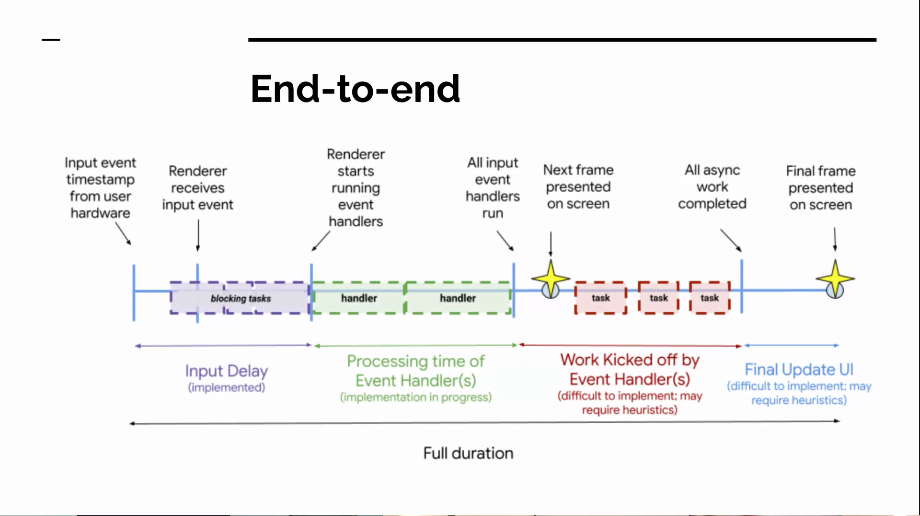

- We talked about the main problems with First Input Delay (FID):

- It does not measure end-to-end-latency

- It only considers the first user interaction.

- For the former problem, we need to consider asynchronous work, which is related to Patrick Hulce's talk on longtasks.

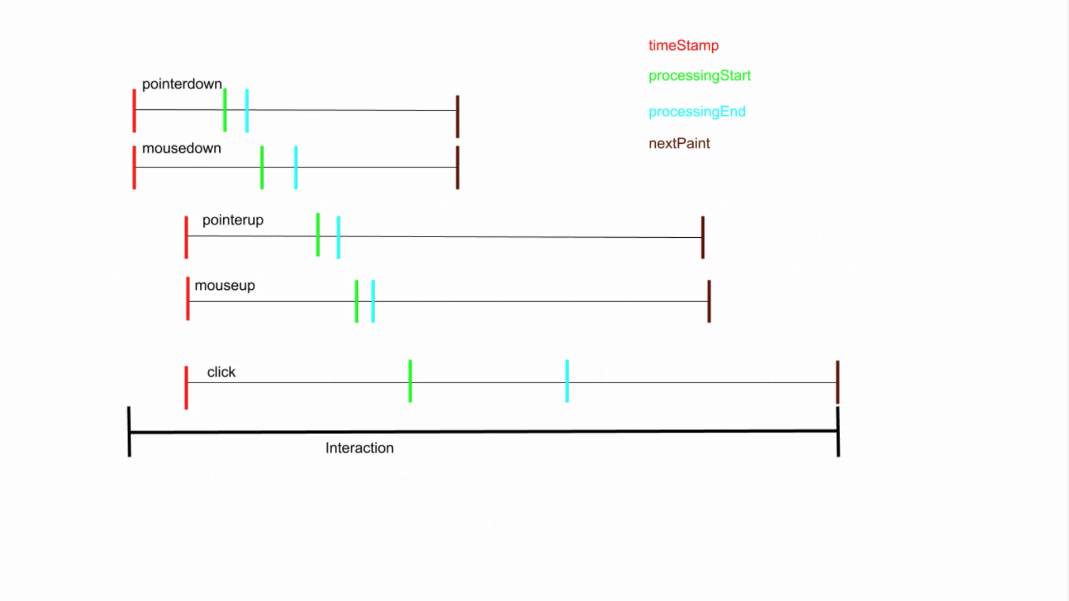

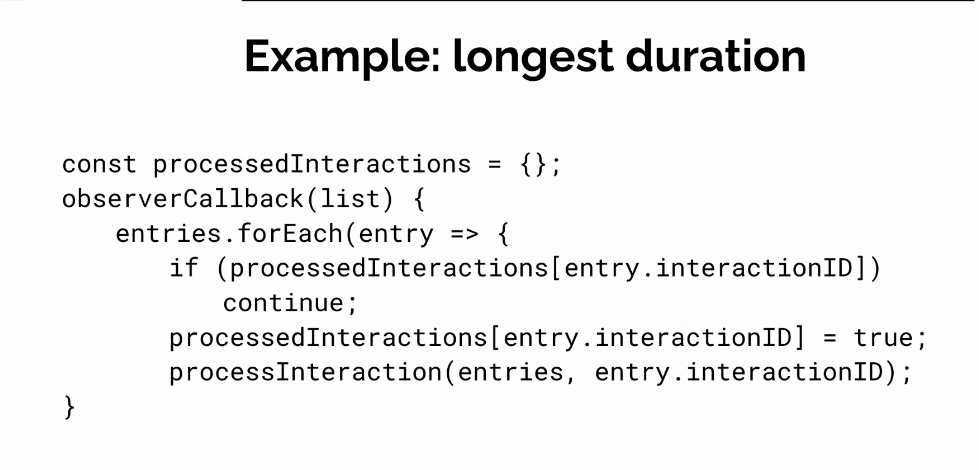

- For the second problem, we proposed exposing an interactionID which would enable knowing which events correspond to the same user interaction and would allow correctly aggregating the entries received to compute a per-page metric.

- We discussed the various open questions, such as how to handle pointerdowns (not all end up in clicks) and more continuous events like drags.

Video, minutes

- Discussed how current browser privacy models interact with prerendering, and what measures are needed to be consistent with anti-tracking measures.

- Discussed prerendering an uncredentialed version of the page and have developers “upgrade” it when the user navigates.

- Some attendees had questions on the necessity of an “upgrade path” compared to loading credentialed pages in their own partition.

- Some attendees expressed concerns about whether prerendering is likely to succeed given mixed results in previous attempts.

- We briefly discussed the implications of prerendering on performance measurement APIs and Core Web Vitals, saying that we likely want to report both the prerendered load and the user’s experience navigating to a prerendered page.

- One attendee expressed concern that prerendering may be vulnerable to timing attacks of some kind, which could be investigated later.

Video, minutes

- Discussed options for how page state should be reported in prerendering/portals modes. Should we bring back visibilityState == ‘prerender’?

- Related issues were brought up around tab/app switchers, where the page is visibilityState == ‘hidden’ but content is still shown. Related to #59

- Decided more investigation was needed, Domenic suggested enumerating all the use cases and states and trying to find some reasonable set of exposable states. David Bokan looking into that.

Video, minutes

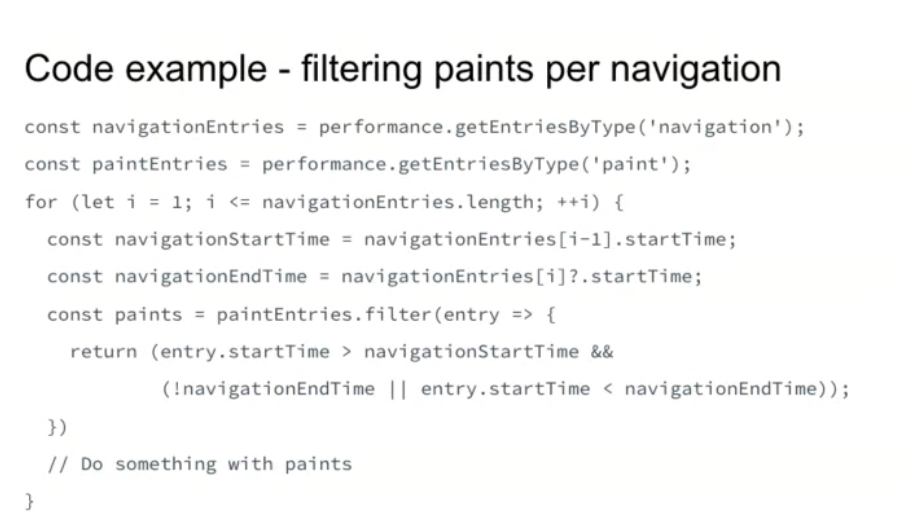

- In this session we review SPA navigation and problems they provide with RUM metrics gathering, as well as synthetic testing.

- Review, then discuss what it would mean to identify a soft navigation and how we would ideally change what we measure.

- Patterns typical with performance measurement when transitioning from MPA to SPA today, as well as issues with Attribution.

- Issues with blending performance metrics between initial Page Load and soft navigation.

- Is it important to measure “MPA vs SPA” or “first in session” vs not (“landing page vs not”)?

- Today, the “default” for MPA is a blended report across all nav types. The “default” for SPA is a segmented report with focus on landing pages (by the nature of perf reports).

- Effects of Caching (on both page load and soft nav)

- Request: support resetting all metrics when timeline is marked by developer.

- How to reset Paint timing? Suggestion: browsers should do the naive thing, and developers can use Element Timing if they need something smarter.

- Security concerns about measuring arbitrary paints.

- History API URL update is a single moment in time, but soft navigations are a span of time. Sometimes most work comes before the URL update, sometimes URL is the first thing, and often the URL is updated arbitrarily in the middle.

- Need more blog posts :)

- Create a public repo, with focus on listing out concrete use cases we aim to solve (with a focus on real existing apps and problems if possible).

- Aim to ask large web property owners to submit use cases.

Video, minutes

- We discussed the various options of exposing BFCache navigations, and the backwards compatibility implications of firing new NavigationTiming entries

- Decided that the option of firing a new typed NavigationTiming entry is the way to go here, as well as firing the relevant First* entries that go along with it.

Video, minutes

- Status update

- Shipping in Chrome 87

- Discussed interactions with Long Tasks API

- Decided to report isInputPending usage inside of long tasks as a boolean or "input starved" signal, rather than omit entirely

- Discussed potential interop with yielding APIs

- Deemed unnecessary to yield only to input

- Current behaviour with setTimeout works for most UAs (particularly those who dispatch events FIFO)

Video, minutes

- Discussed different use cases and tools for network information diagnostics.

- Proposal to extend network information API with custom threshold a custom logic handling network condition changes has been discussed.

- Proposal for a new API to ping local gateway has been presented – there was some pushback related to privacy and insufficient clarity and justification for the use case.

Video, minutes

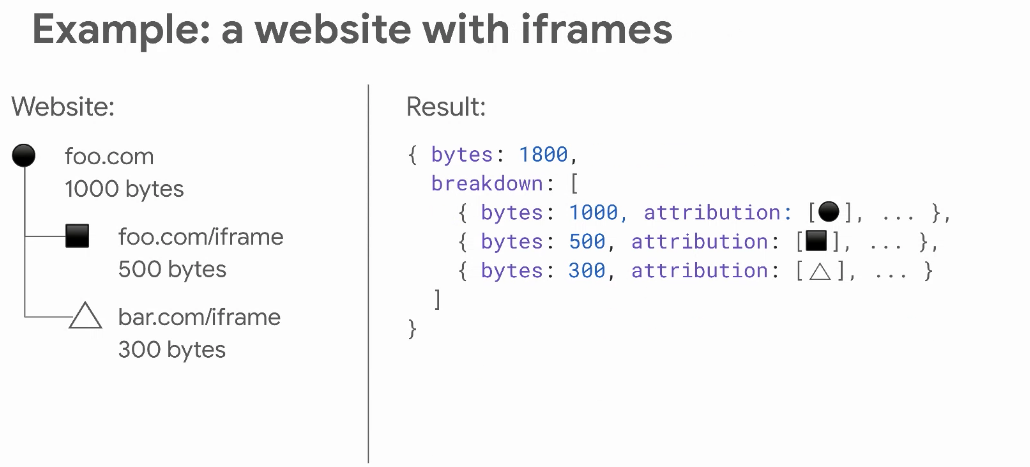

- Recent changes to the API were presented:

- The API is now gated behind self.crossOriginIsolated

- The API provides information to identify iframes in the result

- The format of the result is generalized to allow other memory types beside JS.

- We discussed whether "bytes" in the result mean physical bytes or virtual bytes.

- We discussed the scope of the API and whether it should report the whole browsing context group or not. Concerns were raised about reporting memory usage of cross-origin iframes and potentially exposing the underlying process model.

Video, minutes

- We discussed the charter draft and various decisions that we needed to make

- We decided not to try and publish “almost done” specs to REC before the rechartering, or at least not block on that

- There was general agreement on moving most specs to the CR-based living standard model

- For specs transitioning out of the Group, we decided to move them out of the deliverables.

Video, minutes

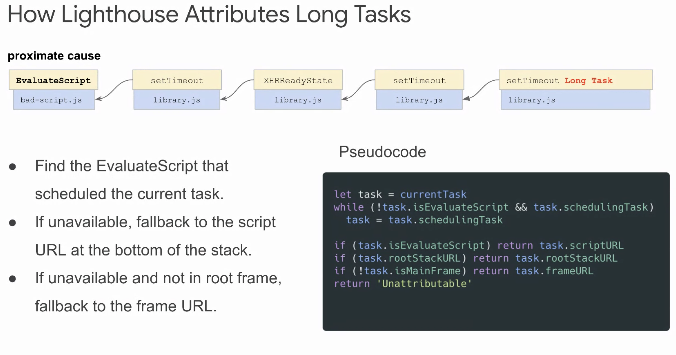

- We discussed the motivation for attribution and several previous attempts in lab tooling that did not work very well toward that goal.

- We outlined the approach that is currently working well in lab tooling and discussed its implementation cost which uses intertask initiator information.

- There was some interest in exploring this as an addition to the long tasks spec.

- There was some concern about the implementation cost that will require some further research.

- AI: Patrick Hulce to file an issue for further discussion.

Video, minutes

- Presented results from Chrome origin trial

- Positive developer sentiment, useful to discover pathologically bad cases

- Discussed activation mechanism

- AI: Add support for disabled-by-default features to Permission Policy

- Talked about potential candidates for renaming

- Popular candidates included JavaScript Sampling API, Performance Profiler

- AI: File GitHub issue, request feedback from TAG

Video, minutes

- We discussed the different categories of information Timing APIs expose and how we can reason about unifying the opt-ins for them.

- We concluded that while CORS does give you access to resource-level information (timing + size), it doesn’t currently provide origin-level or network-level information, so we shouldn’t extend its semantics to include those.

- We discussed whether CORP should enable exposure of resource size, which devolved into a discussion of the semantics of CORP, and whether it implies that a resource can be just embedded or embedded and read.

- AI: Yoav to sum up the discussion on an issue, so we can continue it there

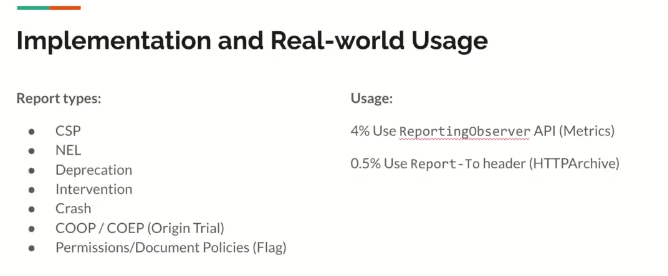

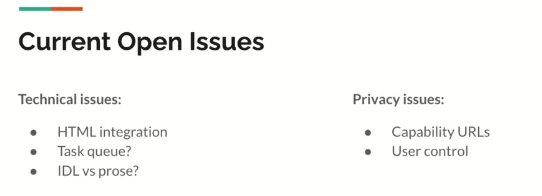

Video, minutes

- Changes made over the last year to the Reporting and Network Reporting specs were presented, along with an update on their implementation and usage within Chrome.

- We discussed the need for something like Origin Policy to enable out-of-band configuration of Network Reporting, though Origin Policy has some blockers that have prevented it shipping so far.

- We discussed whether there was interest from any non-Chromium implementers to ship Reporting

- We discussed how best to resolve the remaining privacy issues on the spec, including when it is appropriate to bring these issues to the Privacy IG / CGs

- We discussed whether anything other than spec-level guidance can be done about the problem of capability URLs appearing in cross-origin reports.

Video, minutes

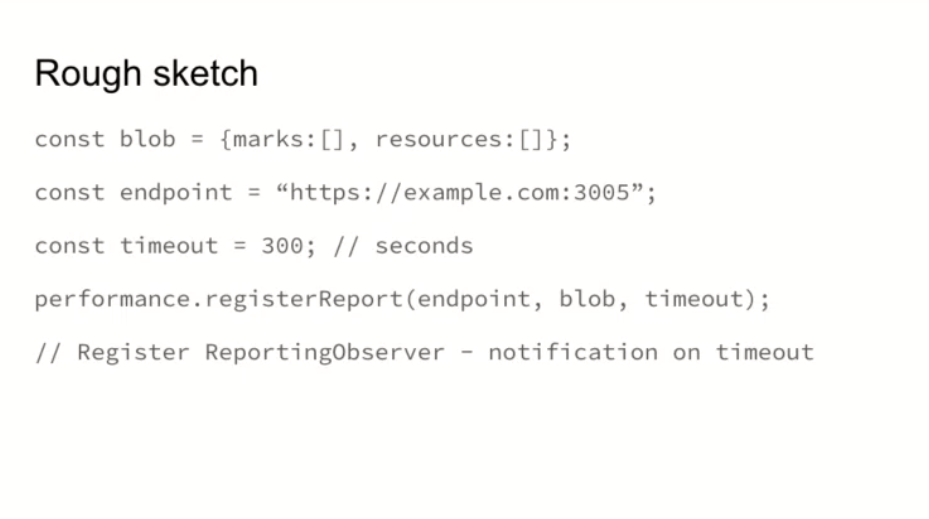

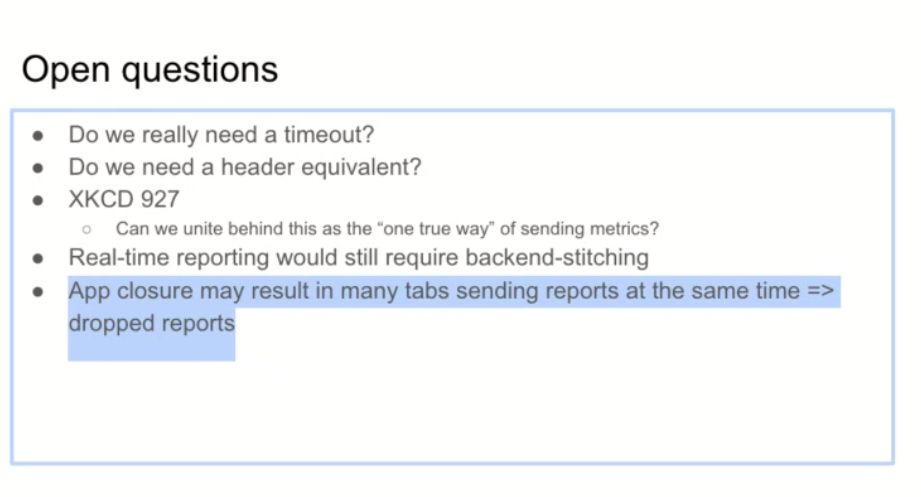

- We discussed a proposal for a reporting API, where developers construct the metrics they want reported in JS, but the browser ensures the reports are sent before the document is dismissed.

- that will increase report reliability and prevent developers from having to rely on dismissal events to manually send their beacons.

- We concluded that such a proposal would be useful to reduce the need for backend “session stitching”, even if this requirement will not go away entirely in cases where we want to report both real-time and continuous results.

- RUM providers expressed interest and said they’d migrate to such a solution, if available

Minutes

Monday Oct 19

- Yoav: Welcome!

- Nic: Welcome to TPAC!

- ... Mission is to think about, measure performance of web. User agent features and APIs

- ... Highlights: New co-chair, HR Time L2, PaintTiming WebKit implemented

- ... F2F SPA focused interim meeting was cancelled due to COVID

- ... Closed 86 issues in Github

- ... Rechartering discussion on Wednesday

- ... Call for Editors - also on Wednesday. Looking for new editors to take specs forward

- ... Lots of incubations we may want to adopt: 13!

- ... Usage is up (mostly)

- ... New member organizations and invited experts

- Yoav: W3C has a code of conduct (CEPC)

- ... Promote diversity and seek diverse perspectives

- ... Try to avoid speakers dominating time

- ... We don't operate a queue, prefer natural discussion

- ... We can use chat to indicate they want to speak

- ... CEPC has section on unacceptable behavior and safety vs. comfort, important to to read

- ... We are recording this event and will be publishing this later

- ... Agenda for today

- ... (round of introductions)

- Michal: Why discuss smoothness?

- ... Animation and Scrolling is big part of UX on the web

- ... Stuttering is very visible

- ... How do we measure smoothness?

- ... Often in terms of FPS, especially coming from gaming

- ... When it comes to the web, it has some flaws. A perfectly static website is perfectly smooth, doesn't generate any new frames

- ... With multi-threading, what does FPS even measure

- ... Why it matters: Missed opportunities to show expected animation updates, aka "dropped frames"

- ... Animations: Scroll, pinch/zoom, rAF loops, CSS, canvas/video/etc

- ... Animations are not: inserting new content, clicking button to produce UX response, form input appearances, background loading

- ... Dropped frames: Anytime an animation is expected to produce an update for vsync, yet the page does not do so

- ... Animations do not always expect to produce an update

- ... CSS animations can have idle periods defined

- ... Event handlers can delay animations based on scrolls in multiple ways (e.g. delays in handler or triggering updates afterwards)

- ... How do we report on missed animations?

- ... Option 1: Report all raw data points, and see which animations are able to complete for each frame ID

- ... Concerns: ergonomics, performance, privacy/security

- ... Option 2: Report per animation (summary) at the end

- ... Simplifies attribution

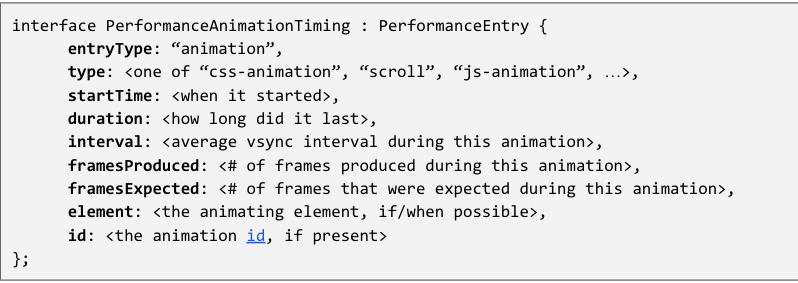

- ... Report #expected frames, #produced frame, duration

- ... Can calculate %smooth or FPS

- ... straw API:

- ... Option 3: Report per frame (on timeline), similar to FrameTiming API proposal

- ... Better matches to what user experiences

- ... Number of updates produced / expected

- ... Option 4: Single summary, "final smoothness"

- ... Or maybe at key moments such as visibility changes

- ... Mix and match the options

- ... Could offer page-level summary but also give details per animation or something

- ... Open questions:

- Ryosuke: What do you mean by expected frames in option 2?

- Michal: For a CSS animation, for a key frame that was expected to move from the previous frame, and if the browser cannot produce it in time

- ... for animations where there's no transform between keyframes, there's no expected new frame being produced

- Ryosuke: In some environments, they adjust frame rates based on what's animated.

- Michal: In Option 2, there is an interval (which is avg vsync interval), so if frame rate increased, the interval would change

- Ryosuke: Assuming you can get that information, I'm not sure we have that capability

- ... For JS based animations, it can be more complex because we're depending on script to draw or not. Not clear if we missed a frame. Expectation of a frame is complex to me.

- Tim: It's unclear in the case where vsync is not constant. In the case where it is constant, is it hard to know if there's no change?

- Ryosuke: Hard to define this. On a device that was 120hz, and an animation that started when the page was running at 30hz, and it needs to ramp up. Number of frames being produced is dependent on the animation being done.

- Michal: In this case there was an opportunity to present (vsync), but it could not be

- Sadrul: "The next rendering opportunity". Have to depend on the system to give the presentation frequency. If a device ramps up from 30 to 60 to 120fps, for each framerate, we know up to how many frames we can present.

- Ryosuke: On fast displays (120hz), the page may not be able to even animate that fast

- Tim: You can switch refresh-rate and not affect the UX

- ... Look at dropped frames per unit time, instead of dropped frames per expected frames

- Ryosuke: If everywhere else, pages are rendering at 120hz, and another page is only at 30hz, the user would notice the difference

- Michal: Does the refresh rate ramp up for developer-provided frames? CSS animations are defined semantically, but what about rAF loops where the developer code just tries to keep up.

- Ryosuke: The system is deciding how fast to present things. It sees how fast you're going and then tries to goes faster. I don't know the exact algorithm here.

- Tim: Seems like you have better experience with variable refresh rate cases, do you have alternate ideas on how to present UX in those cases

- Ryosuke: These are really hard cases

- ... In the case where refresh rate isn't changing at all, there's no problem

- ... I don't really have a great suggestion here

- Robert: If we know the system has the opportunity to ramp up, we know any other rate is dropped frames.

- Noam: Would it make sense for API to allow developers to specify what they're interested in for smoothness criteria. e.g. in some applications, 20FPS is OK, in some it's intolerable below 50-60FPS.

- Ryosuke: If we can say here's the theoretical max rate this device is capable of versus what it presents at

- Michal: The worst thing to happen is there was an opportunity to present, and the animation had a useful thing to give, but for whatever reason it wasn't updated

- ... There are other things to optimize for, like having the interval as low as possible. But you want to make sure you want to present when you have the opportunity to

- Patrick Meenan: Would be useful to look at the video game industry for guidance, e.g. report device supported and minimum framerate, percentiles

- Tim: That kind of research seems valuable to me. We could also take a look at the time it takes to present a frame. Downside of being less representative of user experience.

- Sadrul: How do other vendors measure smoothness right now

- Nic: We do capture very simple metrics: frame rate over time, but not very valuable, due to the issues presented here. Would love more useful UX metrics. Currently just measuring rAF.

- Benjamin: Is the intent to grow towards media capabilities WG and have this be applicable to video?

- Michal: Haven’t considered that

- Tim: A good job for the video use-case would require a lot of additional data, bitrate being streamed, etc. My guess is that it’d need first-class support

- Benjamin: So different APIs

- Tim: My intuition is that would be the way to go. This would handle video in a sane way, but won’t give a full picture of video smoothness

- Michal: Other thoughts?

- Nic: In many ways it’s nice to report on the “bad” things. Easier to present or track user pain. Even just counts of dropped frames is useful to track

- Carine: Video/media use cases are in-scope for other WGs. We can talk to them

- Michal: Next steps - expect more on this

- Ryosuke: Any repo where this is being discussed

- Michal: Coming soon

- Ryosuke: Let us know!

- Ciff: Working on webperf for ~18 years. Want to give some feedback and report what customers are seeing in the wild

- ... Core Web Vitals, ads measurement, Server Timing

- ... Seen a huge wave of interest as a result of Core Web Vitals in terms of people wanting to make their sites faster, from outside our echo chambers: SEO, marketing, CEOs, etc

- ... Problems: Browser support for the metrics. Want to see adoption beyond just Google (Facebook, Adobe analytics and other marketing tools)

- ... LCP RUM data: p50 1.2s, p75 2.2s, p95 5.2s

- ... LCP been easy to understand and people tend to be doing well here

- ... Chrome 86 fixed opacity issues, resulting in more accurate (higher) LCPs

- But getting support calls as a result, may result in erosion of confidence in the metric

- Nic: Chrome has a public change log, that we can point mPulse customers to better understand those changes. Helpful for browser vendors to share that.

- Cliff: Yeah, we recently started doing something similar

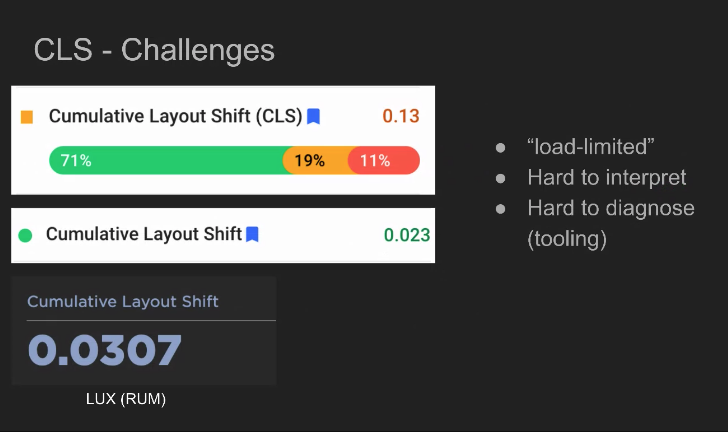

- … CLS - people are doing relatively well, but depends on industry

- … Took some time to get people’s heads around that. We got over that hump - lower score is good, but they run into challenges when comparing field and lab data

- ... Load-limited affects RUM tooling

- ... Hard to compare RUM to CrUX. Tooling doesn’t support what’s collected in CrUX

- Benjamin: So how are you explaining this?

- Cliff: CLS is a cumulative score, show when our beacon is fired (at “load” event), and see that other shifts happen after that

- … Don’t know if this is something where we can improve how we collect metrics, or if there’s a better way to normalize CrUX and RUM

- Michal: CLS is full page lifecycle feature, there are also problems related to other metrics (e.g. smoothness, responsiveness) where user action is not represented in the lab.

- Yoav: I think there are two problems here. For all User Interaction dependent metrics, lab and RUM will inherently differ. That's fine and we'll accept that.

- ... Many RUM providers today don't capture metrics for the full lifetime of the page (e.g. at onload or after). So we will have differences between CrUX and RUM vendors. We need to try to close that gap. I have one potential solution to that later days.

- Nicolás: Is the main complain lab settings vs rum data, or lab settings vs. CrUX

- Cliff: I think the later, it starts there. But then when looking at Lighthouse, you also see a difference.

- ... Challenge comes back to how tools are quick to measure this

- ... This is how analytics has always done this, there are some metrics we can capture later but we generally get everything at load-ish event

- ... Reporting API is potentially a way to close that gap

- Patrick: For a full page lifecycle session, a 0.1 CLS for a 12 hour gmail session vs. a 0.1 CLS for a load page are very different experiences

- Michal: Active accumulating is not always the best measure

- Cliff: We've had to improve our tooling, and normalize data between synthetic, CrUX, RUM

- Patrick: For Lighthouse specifically, we are working towards getting post-load data. Trying to work on naming the different CLS measurements here.

- Benjamin: the "I don't know how to fix this" is concerning

- Cliff: Agree that it’s a problem, and tools need to fix it. Maybe we can focus on the largest shifts first

- ... FID - most customers pass it fine. Seems like the bar is too low, 75th %ile is far below 100ms

- ... customer example: 19ms 75%ile FID, but “JS longest task” at 300ms, which is not great

- ... So not shining a big enough light

- ... LTs may be after load, wait for user interaction, so that may explain some discrepancies

- ... Want LT attribution, today it’s hard to find the source of the LT

- ... FID may need to be a higher bar

- NPM: Patrick will be discussing how to do LT attribution in Lighthouse and maybe we can use it here too

- Annie: Folks on the team also think that the bar may be low

- Pat: Maybe it’s just not a problem in 75% of cases?

- NPM: Problem may be elsewhere

- Cliff: Display ad performance - how can we measure 3P performance

- ... Discussion with a customer a few weeks ago - how haven’t we fixed it yet?

- ... Anything we could be doing about this today?

- ... Otherwise, visibility into resources in iframes would be huge

- Nic: As a RUM vendor, +1 to all that

- Scott: For Scheduling, we see a desire from folks to control what 3Ps are doing, deprioritizing them, etc. Great to collaborate on getting metrics on this

- Cliff: Server Timing, still under used by pages. Guessing that a lot of this is coming from Akamai. Fill that this is a missed opportunity

- ... Call to action - are we really doing everything we can with Server Timing.

- ... SpeedCurve doesn’t yet collect it

- ... WPT surfaces it, but can we do more?

- ... Would love to see other CDNs surface it

- Nic: RE CWV, have your customers reported good/bad things about the metrics, business metrics, etc?

- Cliff: Yeah, got a mix. Strong correlation with FID, of bounce with LCP, but less with CLS

- ... They're happy with CLS that they knew there was a problem, and now we can measure it

- ... Want to collect more data on long term conversion rates, but see strong correlations with user frustration

- Scott: Keeping high level, not diving into things as much

- ... Available APIs:

- scheduler.postTask - Prioritized task scheduling, enables coordination through priorities, controllable (cancel or change priority)

- Post a task and get a Promise that resolves when the task is done

- TaskController has a signal that enables aborting a full class of tasks or change their priorities

- Currently available in Origin Trial, and behind a flag since M82. React and AirBnB plan to start experimenting with it ASAP.\

- React replaced their user-land scheduler. AirBnB use it to break up long tasks

- Next steps: getting feedback from OT and see where we go

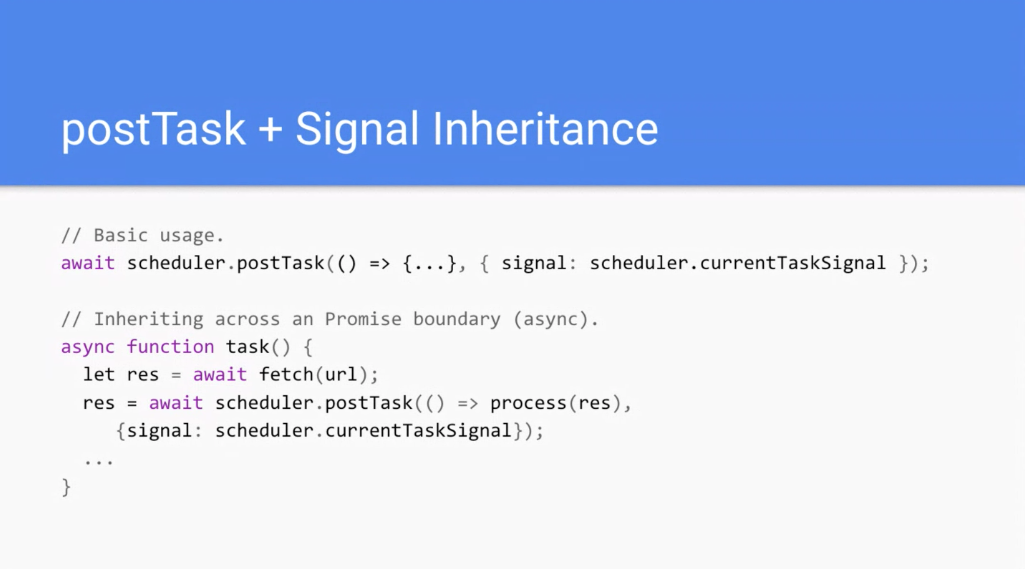

- ... Followup - signal inheritance

- Folks want to inherit the signal to create subtasks that listen to priority changes

- postTask + signal inheritance

- There’s some risk with propagation. Inheriting the context of some tasks, but that soon degrades to “everything is high priority”

- It’s in the OT, but unclear if this will be part of the shipped API

- Subrata: How is starvation handled in this case?

- Scott: Tradeoff between giving developers strict guarantees and giving the browser control. Starvation is isolated to postTask tasks.

- … First pass is strict priority order. Want to get feedback on that to see if it’s good enough, and have metrics to measure starvation. Keeping an eye out for it

- ... Other problems we’re exploring

- Yielding in Long Tasks

- Has come up in the context of isInputPending

- Currently folks schedule setTimeout/postMessage, but you may “miss your place in line” and it’s not great ergonomically

- Also want to reduce the penalty for yielding, enabling faster control

- Otherwise, folks want “Yield to rendering/input/network/etc”

- Initially proposed scheduler.yield(), returning a Promise which returns when the thing you’re yielding for is done

- Want to minimize scope, just for postTask, which may open up options

- E.g. integrating generator functions, so we could make postTasks understand generator, and turn functions there to yieldy functions

- Michal: Reminds me of the spawn pattern

- Scott: You could imagine this changed into “I want to yield to specific things”. It’s exciting for us how easy it is to insert yield points that would work with both async and sync code

- … Will be exploring that soon

- … postTask - app specific priorities

- A lot of partners that develop their own schedulers. Continuing to do that would require building their own queues on top of postTask

- One proposal is to add an option for a “rank” for the order of the task in the priority queue

- If you have multiple parties on the page that have different ranking systems, we’d want to be able to schedule both

- Yoav: It's unclear how you would reconcile multiple ranking systems from different parties? Do they all go into a single queue based on the same rank? Or normalize?

- Scott: If you provide a task signal, that indicates the priority can change. Not clear if that will work for developers. Need to understand what guarantees they want.

- ... From browser's perspective we want freedom to schedule as needed

- ... Could provide a larger domain, where everything in the domain is ranked

- ... With v1, what guarantees do we provide and will that hurt us down the line

- Yoav: If you don't provide a domain, you're just relying on the signal, or some other queues?

- Scott: A signal, or there might be a default domain, simple apps would just use that.

- ... 3P script scheduling:

- Give developers some control over when 3P scripts run on main thread

- Explore what is being done on main thread by 3P libraries

- Control might be deprioritizing, delaying, etc

- How do we prevent or mitigate a 3P task from using highest priority all of the time

- IFRAMEs might be a good place to annotate what is important

- ... Further out: postTask priorities on async things that developers don't have control over, I/O such as IndexDB or network resources

- Tried prioritizing all DB tasks, showed some improvements, but unclear if this impacts on all sites in general

- Allowing developers to specify priority might make sense

- ... Can postTask be extended to microtasks? Demand from frameworks

- ... After-task callbacks: being able to measure all microtasks to determine full duration

- ... Async task graph tracking: May spawn multiple async tasks, useful to know for bookkeeping, measurements, task harness

- ... postTask and rendering: how important is rendering compared to other work (tasks)? DOM R/W coordination

- Noam: Instead of just how many tasks, provide a time budget

- ... Would avoid response input delay

- ... Multiple very short tasks to avoid a LongTask can still starve rendering

- Scott: We've made some changes since then, are open to feedback and questions

- Cliff: On 3P prioritization stuff, is there a proposal ready?

- Scott: Not a formal proposal yet. We want to take a look at the space more wholistically first. I can update these slides with that proposal.

- Cliff: I think it would be valuable, though it could be hard for a developer to know what 3P domains are important

- Nicole: We realized we needed more data about what these 3P plugins are doing anyways. Took 10 and put them into a site to see what it's doing when.

- Yoav: Even things like URLs need some sort of opt-in from 3P frames to expose what they're loading. Similar to Nic's proposal for 3P frames to opt-in to parent frames

- ... Controlling 3P frames is easier than to get details about what they are

- Nicole: Surfacing what third parties are doing upward will just put application authors in just the same bad position they are now, managing behavior of something out of their control

- Yoav: Theoretically, unless RUM providers can make that surfacing actionable

- Nic: As a RUM provider, big request from our customers to get insights into what is causing the pain, and we could jump through those hoops

- Scott: Useful for then if there is some sort of control, we could make it actionable

- Nicole: Maybe we could reach out to security folks to see what is possible.

- Nicole: Maybe we should see if we can start a convo about security folks to see if it's possible

- Nicolás: Also a problem of when a 3P script injects into the main thread, might make it more incentivized. If 3P are punished for being in their own context, they will try to inject into the main context

- Yoav: We shouldn't make that only possible only in the context of the frames and not main frame, then 3P will shift their work to main frame

- Scott: We're focused on the main frame/thread as well that's competing with 1P code etc

- ... example GA is doing setTimeouts for all of its things and those happening at inopportune times

- Nicolás: Responsiveness: Current metric in CWV: FID

- ... Does not capture "end to end" latency of user interaction - when user clicked till the event starting to be processed

- ... Only measures the "first" - not great for SPAs

- ... Diagram shows different points in time for a user interaction

- ... After a user interaction, there is the first frame, the first star above

- ... Then there's other async work

- ... How do we measure the "Final Frame", aka the second star above?

- ... Determining the "end" for async work requires tracking some sort of causality, would need to be a heuristic. Related to LT attribution, which needs something similar

- ... Problem of aggregating, e.g. a single user interaction may correspond to a large sequence of events. E.g. tap => mouse up, mouse down, pointer up/down, and click

- Can result in over counting of taps

- … Want to enable developers to track per-event metrics, but also per user-interaction

- … Proposal - adding an interactionID to PerformanceEventTiming that would be the same for events triggered by a single user interaction

- Example

- From this, via a PerformanceObserver, you would group interactions by InteractionID and get the max of those handlers

- Alternatives: New Interactions API (would require yet another API)

- ... Polyfill on top of EventTiming: events with "close" timestamps would be in same interaction

- Noam: Very important space to solve the end-to-end measurements, as well as the interaction ID. Tried to tackle this with existing APIs.

- … used EventTiming API without addressing the multiple event issue, and then combined Element Timing to measure the added image’s paint timing.

- Nicolás: Wonder how different that is from the duration value that’s already available in EventTiming. That captures a timestamp to the next paint after the event.

- Noam: The problem is that it’s not guaranteed that the relevant paint would be in the next frame

- Yoav: In the scenario you're describing, with a specific element you want to measure, this makes sense. We'll talk about more various task tracking in context of LongTask attribution later. Can bring that back to coalescing of multiple events to a single user interaction.

- Noam: Important for us to address as well.

- Nic: For mPulse interaction tracking, tracking through FID. See a lot of value in tracking async tasks triggered by events.

- Cliff: Tracking FID as well. With attribution, this is very helpful. Started tracking other input things, like when user input happens, but not sure it’s super helpful, because applications vary on that front. Keen on getting more timing around event handling.

- Pat: Aggregation of duration and filtering by event id - when we stagger events, do we want to take the start of the first event and the end of the last one?

- Nicolás: Open to ideas about what we should be measuring. Feedback welcome on https://github.com/WICG/event-timing

- Pat: Start of pointerdown till end of click in the example seems to be what we’d want

- Nicolás: We care about the user’s interaction until the thing is completed.

- Pat: It’s possible with your proposal, just not a simple oneliner

- Nicolás: Currently only surface slow events

- Tim: You’d still have a problem you can't necessarily map from a pointerdown to a click event

- Do we want to count the duration the user help their finger to the screen? Maybe

- Nicolás: Yeah, unclear what we want to measure with long taps. With interactionID could let you know it was a long tap. Not sure if the event surfaces that.

- Noam: How would that work with a combination of events, e.g. pointerdown to a mouse-move, dragging

- Nicolás: That's a good question, the discrete events in EventTiming, but for a drag how would you classify the interaction

- Noam: Sometimes you care about the pointer up event, not the pointer down. But the API with the interaction ID enables you to do that

- Nicolás: Maybe until the finger is lifted, that's how long the interaction is tracked

Tuesday October 20

- Jeremy: Looking at bringing back pre-rendering

- ... Requires three parts: Trigger, Opt-in, Behavior Changes

- ... Behavior Changes: Limit nuisance behavior and limit cross-site tracking

- ... via uncredentialed pre-navigation fetch

- ... Sites cannot access their unpartitioned storage before navigation

- ... Opt-In: Site may need to "upgrade on navigation" if a user is already logged in after un-credentialed pre-navigation fetch

- ... Trigger: Referring page needs to indicate that pre-navigation fetching is safe from unwanted side-effects, resource is compatible, and user is likely to navigate. Link rel prerender is the previous iteration of this

- Domenic: this is all pretty early. Portals came up with the concepts, but then realized it’s more general

- Yoav: Something I find interesting about upgrade path is that it can encourage cacheable static upgrade HTML that can upgrade itself to a customized experience

- Domenic: most sites already have a logged out view, and they can add a bit of JS to modify that state

- Michal: What does the upgrade flow look like?

- Jeremy: exact shape of the API depends on how the API evolve and how storage access and other adjacent APIs evolve

- … Ultimately what authors want to do here is get notified when storage is available, and then load the data that’s dependent on personalized state.

- … If the entire application is personalized there’s little you can load ahead of time, but static sites may be different. Visiting e.g. Wikipedia, most of the content would be the same for all users, and only a small number of components would change

- Michal: thinking there would be some event that indicates that state change: prerender to render or storage availability, etc.

- Domenic: trying to figure this out. There are APIs for the different changes: permissions API that will tell you if you’d be auto-denied when prerendered, storage access in many browsers. Unclear if we should tell people to use these signals individually or bundle them. Early days still.

- Alex: If a site doesn’t declare “I can upgrade” and we fetched it, will we throw it out?

- Jeremy: If the user has local credentials, we cannot use the response

- Alex: and if the user doesn’t?

- Jeremy: Maybe. I don’t see why we couldn’t

- Domenic: we would still be unable to use the rendering, and it was rendered with shut down APIs

- Jeremy: yeah, but we could potentially use the response bodies

- Alex: If a site is static and credential-less, they’d still have to opt-in and their upgrade would do nothing

- Jeremy: yeah, as we can’t determine that from the client side

- Pat: You’re talking about reusing the renderer. The original prerender dies because of excessive resource usage. How are things different today?

- Kinuko: We still worry about resources, but we have throttling infrastructure that can enable us to prerender without consuming too many resources

- Jeremy: UAs that run on devices that have no resources for that, they can always do nothing with prerender hints. Hope that pages would identify that they are prerendered and use less resources while prerendered

- Pat: but that was the case before. The work on the site’s side is the same. Was there adoption last time

- Domenic: I think we cannot depend on sites. The shift in thinking came when we implemented BFCache. We also had background tabs. This is extending the same principle - keep things around that will improve UX in the future.

- Jeremy: We also put more effort on sites caring about performance - e.g. CoreWebVitals. But agree that we can’t rely on sites

- Pat: the performance impact when it works can be awesome. But worried that it may mostly work for lightweight sites and SPA/SSR would send down the credentialed experience and not want to do the credential transitions on the client side.

- Michal: implications towards performance measurement?

- Jeremy: started thinking about it, but no conclusions yet

- Domenic: pages still want to know when they prerender, but also need to know if that made the UX super fast. So we need 2 different metrics: the traditional load timings that may have happened in the background and what the user actually experiences, which could be 0 or close to 0. Still don’t know how it will be manifested

- Michal: Couldn’t agree more and will be talking about related issues.

- Yoav: One question to other browser vendors around non-credentialed prerender coupled with lack of access to localstorage. In the past we talked about privacy preserving prefetch and this is in a way an extension to that. Would that be something that is implementable?

- Alex: Implementable yes, but a little strange. Initial thought would be do a load with credentials and storage in its own partition.

- Domenic: Reason for no-storage is that you do need to eventually transition to first party partition. E.g. You'd need to migrate/merge two IndexDBs

- Alex: In our implementation, if you're on a.com and you prerender b.com, in b.com's partition, there would be no problem if it becomes the main frame's content

- Jeremy: If you load b.com in partition, it's not the partition that stores the user's current cookies and credentials, the user doesn't see themselves as logged in when they transition

- Alex: May be a difference between cookie partitioning in Webkit and Chromium. In WebKit I see no problem and no need for this transition

- Domenic: This is new technology. There’s no way today to take an iframe and make it the top-level page. Without a transition, there could be a situation where the user has 2 tabs to the site, but will only be logged in in one of them

- Alex: If you’re prerendering something, the current page won’t have access to its contents, right? There’s a difference in our thinking here.

- Domenic: wrote up our threat model

- Yoav: Maybe the difference comes from a model that works both from prerendering and portals

- Jeremy: I think this just exists with prerendering

- ... If you do fetch with credentials from a.com to b.com, it allows you to essentially do a subresource request with credentials

- Domenic: We're designing this to align more to Safari's model that Chromium's current model

- Yoav: We can discuss in upcoming WG call or offline on Github

- Ryosuke: Whenever you do a prerender you do a cross-origin request in a separate state each time, correct? Are there timing attacks?

- Domenic: There are timing attacks possible which is why we're trying to partition here. There are probably other attack here.

- Ryosuke: Something we can look into.

- Yoav: Can we talk about visibility aspects?

- David: Need some way to signal to a page it's been pre-rendered

- ... Is it hidden enough? Some content shouldn't load/run in prerender, e.g. ads, analytics

- ... Content could be visible, needs to be up to date, e.g. portal

- ... Open Questions

- ... Compatibility: Check for visibilityState=="prerender", some pages check for if-visible-else, which could break

- ... Will require opt-in so compat is nice-to-have but not critical

- ... What does document.hidden return

- ... Portals: Allow showing a preview of a prerendered page, differs from prerender as it might be visible to a user

- ... Pages could be put into portal. Can go from visible to in a portal

- Benjamin: Portal idea might get us out of the jam of some visibilityState and App Switcher

- Domenic: We have an explainer for Portals, but it’s being rebased on top of prerender explainers

- David: A portal kind of looks and feels like an IFRAME, where it starts out embedded on the page, but it's a link where you can see a preview of the navigation. Curious about app launcher discussions?

- Benjamin: We've had some discussions whether a page is visible or hidden if you're in App Switcher, that problem may have relevance to Portals

- Michal: Our discussion around App Switcher is if we're going to move off a two-state from visible/hidden, what else happens there

- Yoav: Content is "viewable" but it's not the top-level document. If we had a "preview" state that might help with both.

- David: In a portal you're not able to interact with content, more of a preview

- Michal: "Non-interactive" was the proposed state

- Benjamin: Having a new visibility state for Portal might be a smoother path forward

- NPM: Isn't visibilityState determined on the page level right now? Say if you have an iframe not in the viewport, it will still be considered visible.

- Domenic: People's intuition often think of portals as visible, but it's more aligned to a popup window that's overlaid on the page. Visibility state separate from the document.

- Yoav: Seems like we have multiple states that have overlap. For pre-render we could have hidden content, but we also need to know if it's prerendered to not do various things. It's both hidden and non-interactive, where one is a subset of the other. We need some way to mix-and-match those. Is the best path 3 or 4 states with overlap between them, or orthogonal signals we can mix together?

- Michal: That came to mind as well because so many sites check for "not visible" as a hint for hidden, etc. Maybe one way to get out of that bind is to think if visible/hidden is appropriate for all of those cases, and we have a separate visible type, such as for App Switcher

- Domenic: I could imagine a bunch of different boolean switches: app switcher, portal, background tab, etc. Could make a matrix of all of those and see if there's overlap and if we want a bunch of booleans or a big enum

- Jeremy: the other aspect RE the ideal design is how can we reasonably get from the current to that ideal design with relation to existing content

- David: The other interesting thing is what would you consider the visibility state for a portal on a page. It's drawing content and you want it to be ready. But if you have a portal in a BG tab you don't want it to do a lot of work. Don't want it tied to top-pages visibility, because it's a timing communication channel, if you switch tabs and both get the visibility state at the same time.

- Nicolás: can’t you do that with iframes?

- Domenic: IFRAMEs get their own storage partitions, while portals do not

- Nicolás: Makes it hard to reason about the visibility state of a portal

- Yoav: For a visible portal, you’d want it to animate, where for an invisible portal you wouldn’t want that.

- NPM: There's two general use cases, (1) is know whether you want to execute some amount of work and (2) tracking performance of paint metrics

- ... Want portal to count as having paint metrics when it's still a portal, but hard if we can't tell if it's backgrounded

- Domenic: Might be better to always think of it as backgrounded. It’s ok if your preview doesn’t have animations. Extra preview on top of prerendering, and a prerendered page is not visible

- Benjamin: Does the pre-rendered page never change?

- Jeremy: We don't envision taking a single screenshot, we allow paints to happen and frames to be generated. UA could do that at a lower rate if desired.

- Michal: but that conflicts with the goal of treating it as hidden, when some pages avoid e.g. background play

- Jeremy: you want to avoid BG play when users don’t see it. But you don’t want the page to no show up at all. I’d imagine most people not do delay attaching DOM events on page visibility.

- Domenic: and to be clear, you do have to opt in. So the site should see they are rendering reasonably when portaled.

- Yoav: One conflicting requirement in preview state is for tab switcher and app switcher case, we do want animations and video to continue to play.

- Benjamin: But we don’t know what the implications are on HTML

- Domenic: We've been thinking of prerendering things as browsing contexts, maybe app switchers are browsing contexts in some level. But largely for privacy reasons we don't want portals to know they're visible and doing animations and stuff.

- Yoav: Your concept of creating a matrix with all states sounds like the right next step.

- Michal: On the previous topic of prerender, I don't know if Benjamin had an opinion.

- Benjamin: Don't have anything to say, initial impression is better to start with something simple, the localStorage thing is complex

- Alex: I see the wisdom in not allowing localStorage and credentials, that could be problematic

- Benjamin: Do we know what types of sites got the most benefit from prerender?

- Kinuko: Good question, there were a few pages that had issues. I don't have data if there was a significant difference between sites.

- Yoav: There are also fundamental issues between credentialed content pre-rendering and non-credentialed prerendering. Credentialed prerendering would change site state (e.g. log off user), Non-credentialed problems would be with users missing their credentials because the site didn’t do the transition work

- ... Are other browsers doing any prerendering? URL-bar based one?

- Ryosuke: When the user is typing the URL, there's no tracking, so can prerender the site with credentials

- Domenic: What if a user types a user like example.com/logout and you prerender that with credentials

- Alex: Probably issues with edge cases like that

- Domenic: Some things like to shut down during prerendering like speech synthesis APIs. We want to produce an exhaustive list of APIs that we should shut down, which will be useful for browser-initiated prerendering as well. Not mandatory, but hopefully useful

- Ryosuke: if the user types the URL, there’s no need for non-credentialed prerendering

- Domenic: Two variants, same-origin and cross-origin, where cross-origin does all privacy protections, but annoyance prevention is relevant for both

- Kinuko: Visibility discussion could be useful to that case as well

- Ryosuke: Things like auto-play audio probably don't exist in UAs anymore, but there are also probably other edge cases for things to disable

- Jeremy: Things that are behind user-gestures are fine, but there are some APIs which aren’t protected by that.

- Michal: SPA dynamically rewrite the page content instead of navigating, commonly use frameworks, use hash fragments or history API to update URL

- … RUM metrics and tools typically target traditional loads, and synthetic tools are also not great

- … Either in the dark on these sites, or get the wrong picture

- … types of SPA navs: full page recreation, content swapping, component update, infinite scroll

- … Loading: dynamically load what you need, large common template, preload everything (common with scripts),

- … initial load: client side rendering vs. SSR + hydration

- … subsequent loads: interaction vs. automatic

- … frameworks have signals for route transition starts and visual updates

- Coverage is partial

- "Soft done" when all initial work has been started, but not yet done

- Async work may not be captured

- zone.js tried to link async work, but may not work with modern async JS

- … some RUM frameworks mark route starts, listen to network usage & DOM updates

- … We also have UserTiming. Conventions there could help

- … Automatic marking via heuristics?

- If we had conventions and marks, that could help when testing heuristics.

- ...Work on revamp of the history API - maybe we can provide an API to provide a well-lit path to give us something to hook onto when measuring

- … Measurement for SPA

- … Responsiveness - Event Timing can give us data on long event durations. Could provide onload?

- … We’d also want to track the next input, similar to FID

- … There’s also the question of attribution

- … next paint we get from event timing, but interested in FCP or LCP

- … gets problematic with partial updates: the first paint is always contentful, or maybe we only want to count paints for new content

- … visual stability - layout instability is fine, but CLS is cumulative, so ignores softnavs

- … One difference between page load and softnavs is that layout shifts that happen post load would get a signal of hadRecentInput, but new content that gets added as part of a softnav and then shifted is similar to a page load, even if it happened within the 500ms timeout after user input.

- … Transitions from MPA to SPA -

- SPA navs measure more soft navs, but less page loads

- Faster transitions, but slower page loads

- Transition causes many things to change at once, so hard to find causes

- Fewer page loads result in worse caching

- “More pageviews” - may be a result of transitions

- … Attribution - ideally, we could report on each route change and reset metrics

- … But you can’t necessarily report the same metrics

- … Should we blend the metrics with their page load equivalents?

- Similarity to BFCache and visibility changes

- Many fast post load navs don’t compensate for one terrible first load

- Bounce rates are impacted more by the initial page than by transitions

- Distribution may change

- Yoav: first page is important, but isn’t that the same for MPA?

- Cliff: yes! performance is always a distribution. SPA laid is not the same of full page load, but it’s important to segment. Question for Pat Meenan about SPA and synthetic, because when you script something in WPT, LCP is still being reported for SPA navigations. Was just on a call with a customer that is moving to a SPA, but interested when the LCP/product image paints after the softnav. So they rely on synthetic metrics.

- Pat: In WPT, LCP may trigger if the largest in the new loads is larger than the previous loads. Element Timing is the only real way to instrument today

- … render metrics would be relative to the state of the SPA

- Michal: LCP candidates don’t get reported after interaction, no?

- Npm: that would work if the routes are triggered by script, not input

- Pat: yeah, that’s how it works

- Cliff: For CLS, is this something that can be cleared?

- Michal: CLS is not reported, so libraries can calculate it the same way Chrome does. But there’s work underway to normalize it over time and across routes

- … If we start attributing to URL, we’d want to cut it per route

- Nic: that’s what we do with Boomerang

- Michal: Depends if you want to match CrUX or improve attribution

- … Back to comparing initial load for MPA vs. SPA, there’s definitely a difference between first load and reloads, but suspect that SPAs would have bigger differences. Cliff mentioned that they do separate that out and dig deeper. Do you find it important to separate the metrics or blend them.

- Cliff: transitioning to SPA replaces traditional page views, so need to measure holistically

- .. the answer is it depends, but having the ability to separate that matters

- Michal: biggest concern is that the entr point for a website is paying the cost upfront and all future routes are near instantaneous. Blending is weird here

- Noam R: you need to picture both. You want the first load for business impact and subsequent loads to better understand the performance of the full user flow.

- … interesting to look at loads in the same session in MPA vs. SPA

- … SPA would give you better results in those cases

- Yoav: For SPAs, having the site fetch all possible content on first page so other pages are faster is something you can also do with MPAs with prefetch/etc

- Cliff: thinking of cold vs. warm cache, we don’t really do much about that today. But the same is true for soft navigation, some of their resources may be cached. Important to distinguish page load types

- Michal: the best page load is no page load - so is that a perf regression of an improvement?

- Npm: We’ve always discussed SPAs, super clear that this is something that’s needed. Main question - will we require developer annotation, new history API, etc? Also, what metrics do we want to surface - FP, FCP, LCP, FID?

- Steven: working with SPAs for 5 years now, and need an API to say “we’re doing a softnav” to reset all the performance metrics. For navigation browser know things that they don’t know for SPAs. Would love all the metrics to track specific SPA pages that need improvement

- Benjamin: How do you signal?

- Steven: today we do that in our library to reset the library instrumentation, but not the browser metrics. We change the URL, but heuristics may get it wrong, because the timing can change. Would love an API.

- Michal: Event Timing reports on all long events, FID can be polyfilled, but for first inputs after a transition, you want it even if it’s fast, so it makes sense to reset in those cases and report the first event after softnavs.

- Npm: annotating the SPA navigation requires something different from Event Timing. API that changes the URL, e.g. discussions about a new Navigation api that can fix history API as well enable monitoring. Requires URL changes that are not suited for Event Timing

- Michal: issues around UX. Also, the history API is not great. Separate from that, if you want to support opening new tabs + client side routing requires a lot of work. Proposals to fix that. It wouldn’t measure on its own, we still have to answer all these questions.

- … hardest for me to think through paint - can we just do the same things we do for the initial paint? Or should we just care about the new content?

- Noam R: If we go with the application clearing the metrics, the application can use Element Timing for something finer. Cannot rely on the history API, but can allow the application to explicitly reset the metrics.

- Cliff: Couldn’t we tell during the navigation state, when we started the event and when the last layout shift happened and ignore it if the timestamp was before the start of the SPA navigation. Wouldn’t requiring clearing anything, you could still mark a “next contentful paint” or trigger a new LCP. Doesn’t seem that complex to do today.

- Michal: You’re right that you could just use the nav timestamps to slice a session. For paint it’s different. I like Noam’s suggestion, but now you’re measuring different things

- Noam R: we can call it “contentful paint”

- Npm: It’s the first contentful paint after the SPA navigation

- Yoav: even without changing the name we can easily distinguish between one and the other, based on timestamps. Open question with paint metrics is whether we can report them for arbitrary things, from a security perspective. Killing :visited would go a long way to make that reasonable, but not sure that covers everything

- Tim: same process cross-origin iframes can also leak things

- Npm: if you have a cross-origin image you can get information on based on their painting times

- Cliff: is the idea to do something so developers are doing as little work as possible and get that out of the box? Or do we want a common way for developers to annotate so that we can report it everywhere?

- Michal: One idea is to come up with a User Timing convention, get the frameworks to speak the same language and hook into that. Relies on developer hints, so we’d have to see

- …. The next step is for that to inform better heuristics

- … and finally with the navigation API, it could restrict abuse: no reason to use it too frequently - only be used in places where top-level navigations are being used today

- … So other than just identifying - what do we measure and what do we do with it

- … Good to hear you want both blended view and separate first load view

- Cliff: This has come up and we will hear more from it. Web Vitals is still new, but it will come up

- Nic: For our customers, they can segment hard navs from soft navs, obvious that for soft navs the metrics are not there, and we have to educate them. Would be great to have a consistent view and metrics

- Michal: one concrete takeaway - maybe have the default be blended for MPAs and segmented for SPA. Would that minimizes surprises?

- Cliff: Sounds like a good blog post

- Ryosuke: Would be useful to better understand use-cases here with concrete examples: React app, twitter, etc and study what they’re doing.

- Michal: I looked into that. Maybe we can have a session on a call to go over such an example.

- Ryosuke: Similarly, we can hear from e.g. SalesForce how they are measuring now. E.g. some apps change the URL after the navigation. There are multiple ways to write routers, etc. SPAs can also do partial navigations. Would be interesting to see ways that apps consider navigations. Not sure if there’s agreement there.

- Michal: Tried to show this. Also history updating is a single moment in time, but some apps load pages through async processes.

- Ryosuke: There’s also an in-app prefetch case, where they fetch content ahead of time. Specifically to SPAs we need to be able to measure the things that happened before the navigation.

- Michal: in the conversation on prerendering, Domenic mentioned that they want to measure both prerendering and the user experience. I haven’t previously thought about attributing the prerender work to the cost of the future route. Right now, the thing that’s doing the prerendering is the one to which the cost is attributed. If we’re using browser features for prerendering, that may make it easier to attribute the cost.

- Yoav: AI to kick-off repo to cover the problem first, collect use-cases, properties can contribute from their experience what they're trying to measure

- Noam (in chat): I would give +1 to Steven's idea of an API which allows the app to notify that a "soft navigation" occurred. Relying on URL/history change is probably not sufficient for some apps.

- Yoav: We want to align user-experience metrics including BFCache

- ... Fire new entries for BFCache rather than overwrite old entries/timestamps

- ... Single time origin for all navigations

- ... Option 1: Another NavigationTiming entry, nicely uses NT array, no new entry types

- ... Compat questions, does anyone look at non-[0]th entry? 0.125% of Alexa 100K touch a second entry, most loop over all entries. None relied on being exactly 1 item.

- ... Downsides: Have a NT entry with a lot of 0-value properties (related to load time of resource)

- ... [code example]

- ... Option 2: New type of PerformanceEntry, doesn't include all of the zero'd values but contains the startTime

- ... Option 3: New entry, also has pointers to pains/lcp/longtasks/etc. But at the time this entry is created, we don't have all these times necessarily

- ... Option 4: PerformanceObserverEntryList on the entries to get associated ones

- ... "It's weird"

- ... How does it fit in with other observers

- ... Favorite as Option #1: compatible, fits with current API shape

- ... If so, should we also use it for other navigation such as SPA navs

- Michal: In your testing, when did sites check the entire array

- Yoav: During page load process, I looked at the code samples but didn't debug through them

- ... Those sites may not even see those BFCache navigation because it happens after the point in time they're collecting those metrics

- Nic: Is there anything else to track here as part of the duration, I'm assuming there's non-zero work here? Is there anything we need to measure?

- Yoav: We'd want to kick off FCP, LCP, FID, etc as well in that scenario, filtered to the timestamp

- Benjamin: Firefox notes when BFCache is used to restore, but we don't note a duration.

- Yoav: Would it be reasonable to re-fire paint entries and FID in case of restore?

- Benjamin: Possible yes

- ... We're more interested in when cache is used

- Sean: One thing I noticed is that all proposed options look different in what they're reporting. What problems do we want to solve?

- Yoav: I don't think they're different in terms of what they're reporting, main thing is that a navigation happened, and at the point it happened. Secondarily, we want to re-fire entries and filter them based on the start time of that BFCache nav.

- Andrew: Everyone's favorite boolean

- ... Recap: Was called shouldYield, hasPendingUserInput, now isInputPending

- ... Chrome 87+ Origin trial in 2019

- ... Overview: Way to remain responsive for work that doesn't want to yield

- ... Permits yielding less frequently if unnecessary

- ... Why? Input only because most display blocking work is due to input

- ... It does block painting/network and that's OK, not a substitute for yielding

- ... FB measurements and partners show it can save time and throughput by yielding only to input, throughput up 25% from one partner

- ... Interaction with LongTask API: Ideas to adding annotations to LT entries

- ...

- Noam: Can't say a session is bad because it had a LT, it might've been bad when there's user input

- Tim: Tricky cases are when others are using isInputPending for yielding, but some may be using isInputPending for other cases or not yielding

- ... Let people register for a different LongTask entry for those with isInputPending calls

- Andrew: Introduces the idea of a "sanctioned" LongTask

- Patrick: Problem coming in with Lighthouse, deadline with rIC. They may be checking the deadline but doing it so frequently. Maybe they check the rate at which they check isInputPending.

- Andrew: Interesting, though you could be checking it as frequently as you want but if you may not ever do anything based on that signal

- NPM: Maybe in LongTasks, an array of timestamps for isInputPending calls

- ...

- Nic: It would be interesting to mash wasInputPending with isInputPending calls to see if they reacted to input

- Michal: I know there are some RUM tools using TBT, and ...

- Tim: When you have moderate data there are cases where LongTasks give you data that you might not see otherwise

- Michal: The fact that the page called isInputPending gives confidence perhaps

- NPM: If it was a 2 second LT and they called it at the beginning or ending, that's not a good UX

- Andrew: Comes back to wondering if any annotation is useful?

- ...

- Andrew: InputStarved signal, where isInputPending was checked but it was more than X milliseconds before they yielded

- ... Will take some of these suggestions to Github issue

- ... Yielding to input: If yielding, how? setTimeout()/postMessage(), versus scheduler.yield(), or something new?

- Scott: ... (may not cover this case exactly)

- Michal: If the scheduler.yield() proposal is extended to handle this, yield and return to me with high priority, does it just replace isInputPending() entirely?

- Scott: Maybe. There may be problems with throughput where you don't want to yield that frequently

- Tim: My guess is we don't want to specify user will handle all user input before handling all micro tasks. Giving browsers flexibility to whatever they choose to do feels preferable.

- Ryosuke: Webkit does not have any mechanism to prioritize input

- Andrew: Is input frame-aligned?

- Ryosuke: Input is FIFO

- Andrew: We may not need a yield-only-to-input function

- ... I think Tim's suggestion of expecting reasonable behavior and if not default to a timeout in the worst case or potential throttling

- Michal: Presumably isInputPending() signal may give you confidence to introduce a longtask where you would yield. But there could be other scripts that want to run. What about yielding to other script and making sure a bad actor can't take over the thread.

- Andrew: IIP is for script that already wants to do a longtask and has incentive for throughput wins, improve responsiveness and UX. Cooperative multi-tasking is out of scope for this...

- Scott: Our team has discussed some options here, ..., we could lie in isInputPending if you're blocking the thread for too long and there are other things. Not sure if this is something we want to spec.

- Michal: isOkToBlock(), for future cases where it's not strictly related to input. In this case it's nice to scope it directly to the problem.

- Yoav: Regarding incubation status question, we had discussed it a few calls ago. One previous call it was favorable to consider for adoption, but a more recent call went the other way. Didn't want to make Rechartering dependent on incubations.

Wednesday October 21

- Noam: Not a spec or proposal, understanding a need for browser capability

- ... Browser provides very limited network diagnostic information

- ... May want to optimize UX based on network conditions

- ... Key use case is local and last mile network conditions

- ... Web app is required to determine state of local connection, enables app to notify user of local connectivity issues

- ... App could suggest user move to a better reception area

- ... App could adapt (payload size, call frequency)

- ... Can collect this as RUM data

- ... Existing solutions: NEL (no local network info, no client-side info)

- ... Network Info API (too much or not enough sensitivity, not configurable)

- ... Custom solution (via XHR calls, no local conn info)

- ... Possible approaches. Approach 1: Network Info API. Extend current API to configure sensitivity thresholds (RTT to consider timeout, consecutive timeouts trigger notification)

- ... Limitation is in flexibility, since different apps may have different needs (e.g. games requiring lower latency)

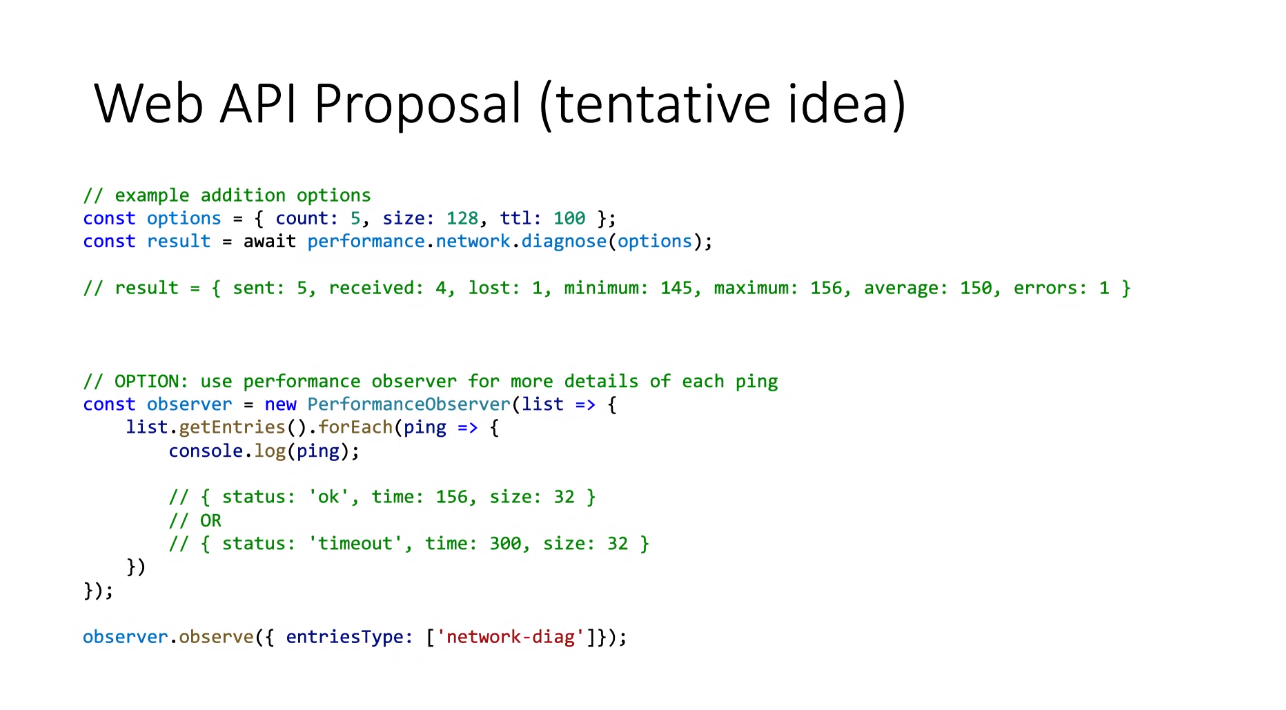

- ... Approach 2: ping API. Attempt to diagnose local last mile connection -- pings default gateway

- Yoav: For Network Info API, the idea of custom thresholds is interesting. Current API exposes to many bits of entropy and doesn't give enough info in other cases.

- ... Would love to have your active involvement on the repo -- current WICG only

- ... Repo was not adopted as part of Devices and Sensors group

- ... I think there's a path forward to modify the API to address concerns from other vendors

- ... Could allow people to gather a small number of bits, but the bits they care about

- ... For Ping API, knowledge of how far away I am from my router and providing that to random web pages seems risky

- ... Would like to split out use cases where there's a need for local network information from general how is the network behaving

- Noam: Let's cover privacy aspects in later slide

- ... [example]

- Yoav: I think the API shape will be overshadowed by privacy issues

- Noam: Privacy concerns. Should user receive prompt to acknowledge and accept diagnosis. Apps already diagnose using hacks via XHR, image load timing, etc.

- ... What is valid to report without violating privacy concerns

- Yoav: Main difference I see here is you're pinging a specific local IP address that is not exposed

- ... Today web content does not know what the local gateway is

- ... Adding an explicit way to ping the default gateway could be a concern

- Noam: Another issue is exposing larger fingerprinting surface

- ... UA should not report the default gateway IP address

- ... Obfuscate ping results to mitigate fingerprinting

- ... NetInfo API rounds to 25ms, could be more or less granular than that even

- Subrata: How is it going to help in a VPN-connected network?

- ... Second question is -- we already have a RTT, how does ping response time differ?

- Noam: Regarding VPN, that definitely changes routing table

- ... More than 1 gateway can exist (default gateway would go to VPN IP)

- ... Was going to consider diagnosing even more destinations, ping FQDN from site origin only

- ... TCP ping to detect network congestion -- harder to define and do securely

- ... Main concerns I'm hearing are around privacy, is that correct?

- Yoav: Concern around privacy with new information being exposed. Maybe that could be put behind prompt?

- ... I would like to understand the use-cases for this to be flushed out, versus NetInfo for local diagnostics

- Noam: Does anyone else here have a need for diagnostics of local network?

- Patrick: Are the diagnostics here the responsibility of the web app, or more browser responsibility? More consistent, but gives the same user feedback.

- Noam: If the app detect bad connectivity, it could adapt, change the payload or send data less frequently

- Yoav: But that could be covered by NetInfo API too, not necessarily local NetInfo

- ... One note from Alex Russel in chat is that an async API that provides a prompt could be a good idea if this moves forward

- ... Going back to NetInfo, wondering if other vendors have feedback on what a revamped API might be something they want to ship

- Benjamin: We're all on-hands for QUIC, so this would be on backburner

- Yoav: Would this be something you'd eventually be willing to expose

- Benjamin: Maybe, but let's cut speculation short, we can't commit to this

- Sudeep: I'm Co-Chair of Web and Networks Interest Group

- ... For NetInfo API, there are some proposals in 5G space with a lot of variations seen. Whether hints can be shared with apps, for doing buffering in advance, etc. Has dependencies on information from operator network, etc.

- ... Second comment is orthogonal, there is an idea in our interest group where it's the other way around, to extend developer tools to extend time-variant conditions. There could be a network trace format where it's taken from the real-world and test how a web app behaves in network conditions, so the developer can adapt to network conditions.

- Yoav: Network trace idea sounds interesting in context of synthetic testing, but possibly orthogonal to this. If you could share links that'd be great.

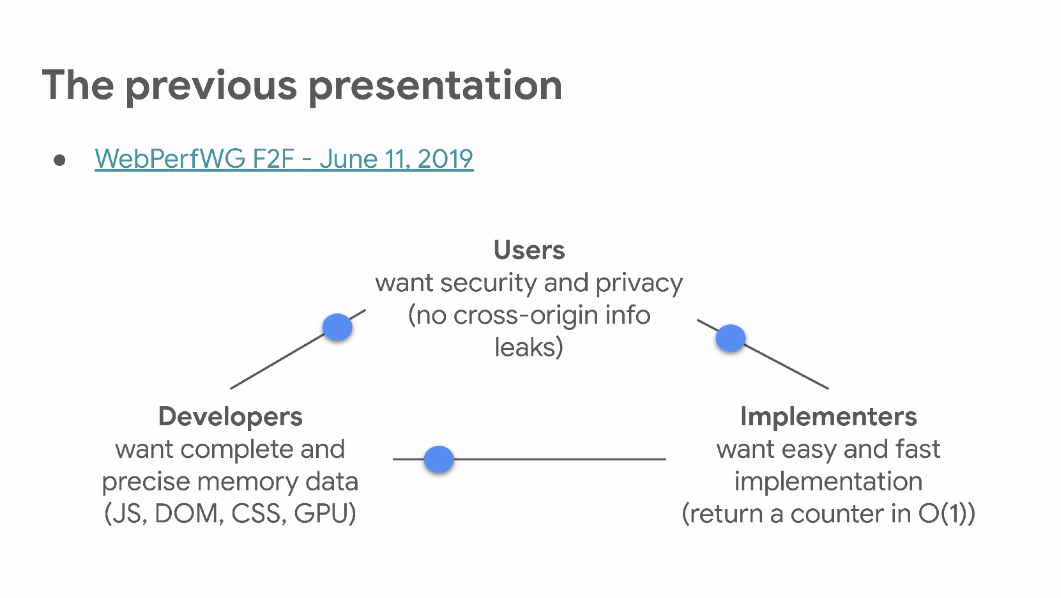

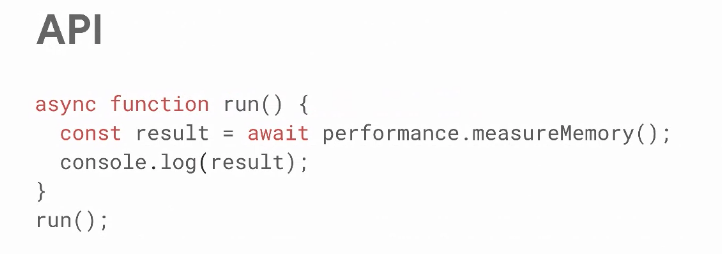

- Ulan: Why care about memory? Use more memory to improve performance

- ... Use more memory due to memory leaks (slow growth over time, slowdown due to paging, garbage collection)

- … Hard to detect in local testing, which run for short times or as a result of specific actions

- … Main use case - how to measure memory usage in production

- ... Useful for long running complex applications

- … Tradeoffs

- API shape

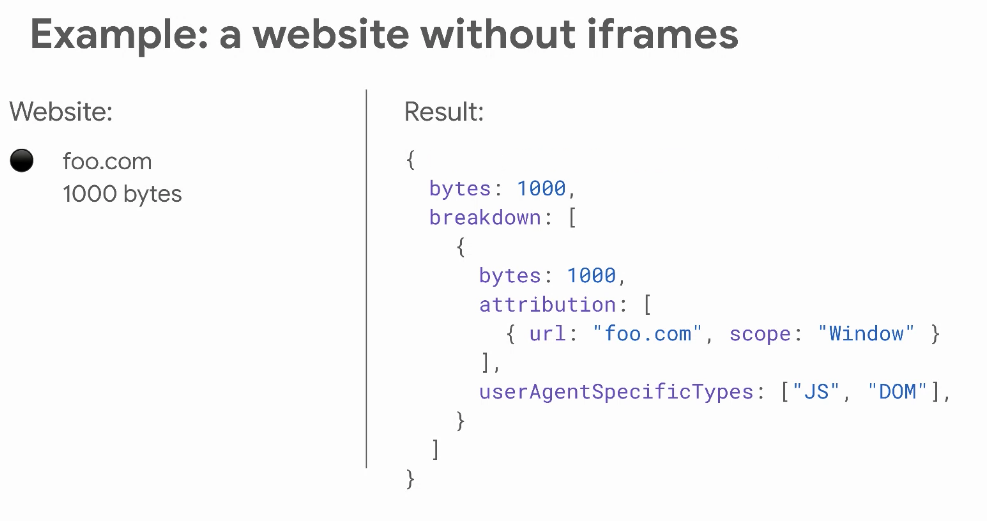

- … Comparison to the non-standard API: current API is well defined, where the previous API may have leaked heap sizes from unrelated pages, based on implementation

- … Current API enables to break down the usage by owner (e.g. iframes) and type

- … Better security through cross origin isolation

- ... An attacker can use the API to infer sizes of cross-origin resources, gate API behind self.crossOriginIsolated via COOP/COEP

- … Breakdown example