WebPerf WG @ TPAC 2021

Logistics

When

October 25-29 2020 - 8am-11am PST

Registering

- Register! https://www.w3.org/2021/10/TPAC/Overview.html

- Free for WG Members and Invited Experts

- If you’re not one and want to join, ping the chairs to discuss (Yoav Weiss, Nic Jansma)

Calling in

Attendees

- Yoav Weiss (Google)

- Nic Jansma (Akamai)

- Lucas Pardue (Cloudflare)

- Subrata Ashe (Salesforce)

- Nicolás Peña Moreno (Google)

- Michal Mocny (Google)

- Patrick Meenan (Google)

- Andrew Comminos (Facebook)

- Ilya Grigorik (Shopify)

- Boris Schapira (Contentsquare)

- Noam Helfman (Microsoft)

- Giacomo Zecchini

- Ian Clelland (Google)

- Addy Osmani (Google)

- Noam Rosenthal

- Steven Bougon (Salesforce)

- Wooseok Jeong(Facebook)

- Caio Gondim (The New York Times)

- Tim Dresser (Google)

- Scott Gifford (Amazon)

- Colin Bendell (Shopify)

- Sérgio Gomes (Automattic)

- Howard Edwards (Bocoup)

- Courtney Holland (Bocoup)

- Simon Pieters (Bocoup)

- Carine Bournez (W3C)

- Scott Haseley (Google)

- Paul Calvano (Akamai)

- Andy Davies (SpeedCurve)

- Katie Sylor-Miller (Etsy)

- Andrew Galloni (Cloudflare)

- Tim Kadlec (WebPageTest)

- Cliff Crocker (SpeedCurve)

- Annie Sullivan (Google)

- Alex Christensen (Apple)

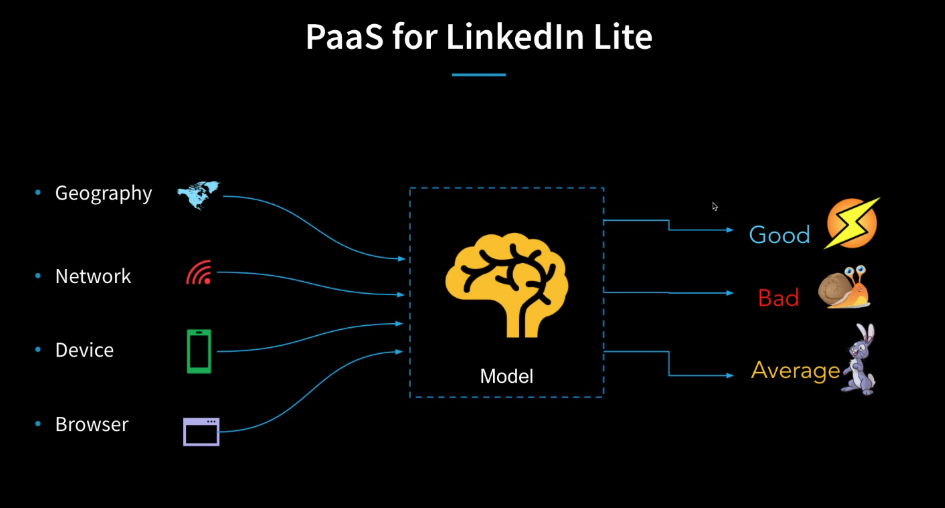

- Nitin Pasumarthy (LinkedIn)

- Prasanna Vijayanathan (Netflix)

- Thomas Steiner (Google)

- Sean Feng (Mozilla)

- Vaspol Ruamviboonsuk (Akamai)

- Benjamin De Kosnik (Mozilla)

- Leonardo Balter (Salesforce)

- Philip Walton (Google)

- Aram Zucker-Scharff (The Washington Post)

- Matthew Ziemer (Pinterest)

- Utkarsh Goel (Akamai)

Agenda

Times in PT

Monday - October 25

Timeslot (PST) | Subject | POC |

8:00-8:30 | Yoav, Nic | |

08:30-9:00 | Nic, Yoav | |

9:00-9:30 | Noam Rosenthal | |

9:30~9:45 | Break | |

9:45-10:30 | Noam Rosenthal | |

10:30-11:00 | Yoav |

Recordings: NavigationTiming and cross-origin redirects, Preload cache specification, Measuring preconnects

Tuesday - October 26

Timeslot | Subject | POC |

8:00-8:30 | Fergal | |

08:30-9:00 | pagevisibility and requestIdleCallback: move processing to HTML? | Noam Rosenthal |

9:00-9:30 | Nic Jansma | |

9:40~9:55 | Break | |

9:45-10:30 | Yoav | |

10:30-11:00 | Michal |

Recording: BFCache, RUM pain points, Measuring SPAs

Wednesday - October 27

Timeslot (PST) | Subject | POC |

8:00-8:30 | Nic Jansma | |

08:30-9:00 | Noam Helfman | |

9:00-9:30 | Addy Osmani Pat Meenan | |

9:30~9:45 | Break | |

9:45-10:30 | Andrew Comminos | |

10:30-11:00 | Xiaocheng Hu |

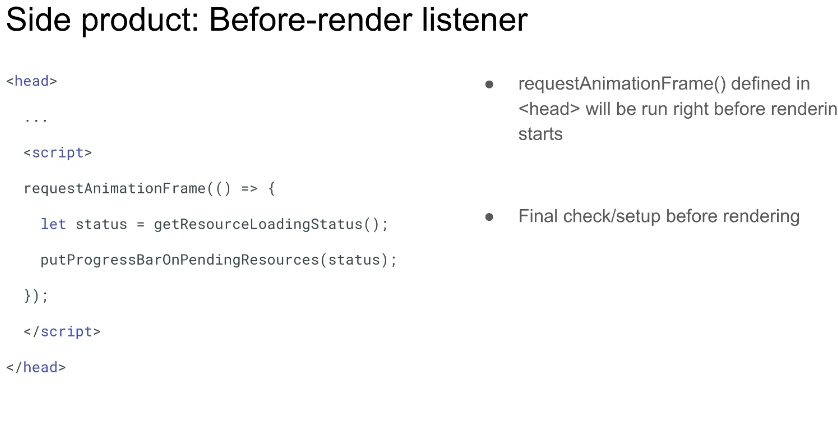

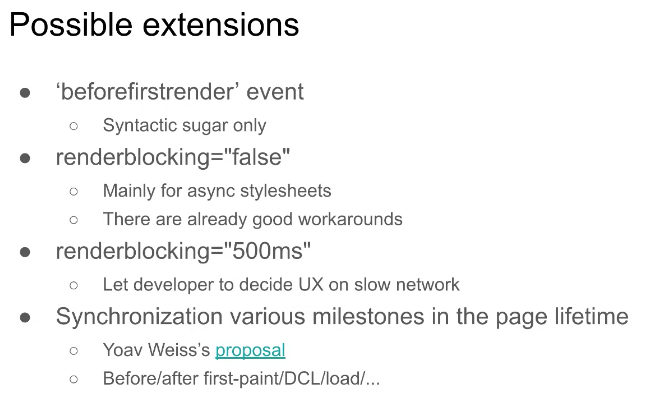

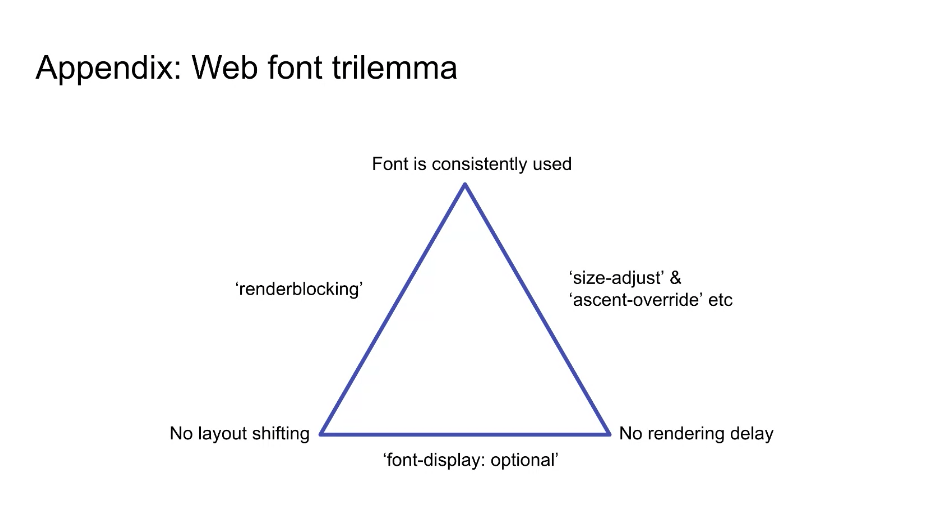

Recording: zstd in the browser, responsiveness in Excel, Optimizing 3P script loading, JS profiling improvements, renderblocking attribute

Thursday - October 28

Timeslot (PST) | Subject | POC |

8:00-8:30 | Yoav | |

08:30-9:00 | Yoav | |

9:00-9:30 | Thomas Steiner | |

9:30~9:45 | Pet camera break | |

9:45-10:30 | ||

10:30-11:00 | Yoav |

Recording: Content negotiation and Client Hints, User preferences media features, Personalizing Performance, LCP updates

Sessions

Intros, code of conduct, agenda review, meeting goals - Nic, Yoav

Minutes

- TPAC 2021!

- Yoav: Missing: Provide methods to observe and improve aspects of application performance of user agent features and APIs

- ... Notable highlights including tighter integration with Fetch and HTML

- ... Spec cleanup improve processing models and align better with Fetch/HTML

- ... A/B testing meeting last Feb which had a lot of insights from industry at large

- ... Helped bridge gaps between different parts of the industry. Hoping to see results at some point.

- ... Help from W3C folks to setup auto-publishing and auto-tidying. Helps smooth over day-to-day friction in publishing specs

- ... 168 closed issues on different Github repos

- ... Rechartered takes us until 2023

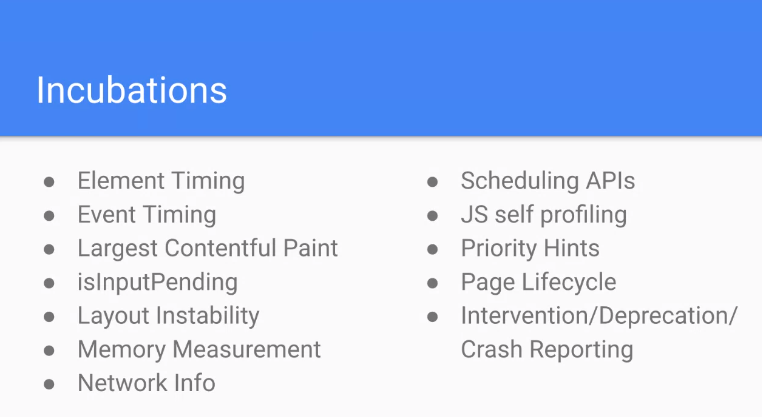

- ... Incubations

- ... Talk more about incubations tomorrow

- ... Seeing if we're interested in adopting them more officially

- ... All seeing an increase in adoption

- ... Working group having a direct impact on users

- ... New members!

- ... Housekeeping and Code of Conduct

- ... Recording presentations and publishing the videos

- ... Would appreciate secondary scribes

- ... Yoav and Nic will take turns with minuting

- ... Once in a while we lose a sentence or the speaker has moved on and we're behind in scribing

- ... Having someone to take on as secondary scribe is extremely helpful

- Quick overview of scribe requirements… capture sentiment and cover when Nic/Yoav are talking.

WG introspection - Nic

Summary

- Rotating meeting times can help folks in different TZs

- Discussion summaries and WG updates can keep the broader community informed. Also created Slack/Matrix channels for comms.

- There’s interest in expanding the discussion scope, assuming we can navigate IPR

Minutes

- Nic: Want to see where we are as a group.

- … Slides meant to facilitate discussion - feel free to bring up ideas

- … The WG has 94 participant across 20 organizations

- … Decent increase in participation

- Stats: 94 participants (signups), 20 organizations, 4 invited experts

- Increase in participation over time

- How can we improve?

- Diversity, Meeting Times, Newsletter, Social Media/Twitter engagement, Discussion Scope, …

- Diversity:

- Want to promote diversity of people, voices, genders, companies and locations

- Want to reduce barriers as much as possible

- TimeZones - have a US/EU focus, but times are more US friendly. A recurrent point of feedback

- Cost of participation - may be interesting to see if it stopped folks from participating.

- A lot of people may be volunteered or only partially supported by their employer. Maybe we can better highlight accomplishments to make that easier

- Technical history can be daunting

- Meeting times - currently 1pm EST 10am PST. 7pm for EU, middle of night for Asia

- One thought is to have an alternating cadence to make it easier for EU folks. E.g. maybe 2 hours earlier

- Doesn’t help with China and Japan

- Any thoughts?

- Cliff: Are meetings recorded?

- Nic: In the past we were able to record the full meetings. Currently we only record presentations with no discussions, due to W3C process changes. We have minutes.

- Dan: What are other teams doing? What day is the group meeting?

- Nic: Thursdays. Other groups have most of the participants be US based.

- Yoav: From what I've seen in other groups it's mostly US centric. Early US West

- NoamR: Rotating 2 hours earlier would be significantly better for IL TZ

- Nic: Any concerns from US folks?

- Nic: Should we do a poll?

- Note: Available at https://docs.google.com/forms/d/1jkZPL8BjsN112uJi75DO2n0RnaSCVc_5Uft66hxoGIE/edit

- Yoav: One option is rotating. Another option is if US West are open to 2 hrs earlier.

- Nic: That doesn’t solve Asia though.

- NoamR: If we have a particular participant from Asia, we can have a one-time meeting that is better for those timezones.

- Nic: Great idea. Will follow up with a poll

- .. Newsletter

- … meeting minutes take a while to digest. This suggestion is for a quarterly updates coming from the group. Could allow folks that can’t keep up with the day to day to get a glimpse of what the group is working on

- … Would require work, but maybe presenters can create blurbs of their presentations and discussion conclusions

- Michal: Like the idea of a newsletter but curious about the intended audience. Is it for ourselves or for an external audience. Are other WGs doing that?

- Nic: Not aware of other WGs doing that.

- Carine: We had WGs that had their own updates. Preferred way is to publish a blog post on the W3C blog

- Yoav: What I've done in the past for TPACs is have a 3-line summary for what was presented, discussion and conclusions. This was driven a lot by folks presenting. Makes sense to extend this to bi-weekly presentations. Once in a while wrap them up into a digestible form.

- ... Michal to answer your question I think it can be useful for ourselves, to see discussions without reading minutes

- ... But more for external audiences to see what we're discussing and see if they want to be part of that discussion

- Cliff: One thing that could be nice when trying to read the summary, is reading arguments before/against. Any time you're trying to read through things, it's useful to see arguments. Could be useful for a broader audience where english is not primary language or not as technical.

- Nic: Would be helpful to have the initiator/presenter to do that work that day, and the chairs can commit to organize that later on. (rather than the chair trying to summarize).

- … We could try it and see what it gives. For last year’s TPAC we had presenters summarizing. We can try it and see

- NoamH: Good idea. Personally had questions from my org where folks wanted highlights from the WG, and had to write them himself. Having this would be useful.

- Nic: Agree it’d be nice to have an easy place to point out things that can be later shared with internal orgs.

- … Followup: we’ll try to do that and see how it goes

- ... Social media!

- … Trying to use it again, mostly around notifications for meetings, minutes available, etc

- … Share updates with the community

- … Worthwhile to have a slack channel for the webperf WG on the webperformance slack?

- Anne: Matrix

- Yoav: Any appetite for a Matrix channel?

- NoamR: Matrix makes more sense

- Andy: Depends on who you want to reach

- Nic: we could have channels on both and see what happens. Let’s give that a try

- Yoav: both makes sense

- Yoav: We've been talking with a few folks to see how we can improve things and make it more interesting

- ... One point of feedback was that current discussions are highly focused on WG deliverables, mostly around webperf measurement

- ... Somehow we have less ways of discussing improving performance

- ... Less deliverables that touch on that

- ... I thought we could instead of limiting discussion scope to WG deliverables to somewhat expand it and discuss work that is impacting web performance, happening in WHATWG/WICG and elsewhere

- ... Reach out to other working groups

- ... Generally have a more working group opinion on things happening in webperf space instead of focusing just on deliverables

- ... WDYT about discussion scope expansion? Shouldn't impact charter or deliverables, just things we would be discussing in WG that we wouldn't discuss otherwise

- ... Benefits for us could make it more interesting. Benefits for broader platform is it could bring our expertise to other discussions where we're not necessarily seeing it right now, i.e. in HTML spec. Right now a missed opportunity.

- Dan: I think it's a good idea, the collected expertise of the members in this group would be a shame to waste. A lot happening in field right now going beyond scope of standards. Impacting how people use the platform. So I'm definitely in favor.

- Yoav: Maybe I'm wishful hearing, are you suggesting expanding the scope to userland issues (framework) or is that overreach?

- Dan: Framework issues can be a really touch subject and could be political

- ... Userland tendencies and approaches yes

- Carine: I'm wondering if we should open W3C CG to see if they want to pair on that sort of stuff. Going too far from charter we could have IPR problems. Others might think we're doing too much incubation and not other activities like testing and going to REC.

- ... Pair with another CG and see how it goes

- Yoav: Could be interesting in terms of other working groups like Immersive Web where they have a similar model. Do you know what they're doing there, and would it make sense to split discussions, once a month CG and once a month WG.

- Carine: Immersive Web was predating WG. They were in CG first and moved to WG.

- ... There are possibilities of doing more incubation and doing more outreach

- Michal: I thought you said it was already in the charter and we haven't done this enough, and that was the feedback rather than expanding.

- Yoav: Wasn't thinking of expanding the charter. Not part of deliverables.

- ... One specific example in group's expertise would've been useful, for lazyloading on HTML I think there were this working group should've provided more feedback on and haven't.

- ... That's work happening in HTML, so a WebPerf CG wouldn't have helped there

- ... What I'm thinking is essentially bring up subjects to this group where members of this group can discuss, and as a groups we can provide feedback on relevant issues to e.g. HTML or other specs

- ... And if that feedback is targeted at WICG I think that is fine, working group members are probably also WICG members.

- Anne: Could be sketchy from WHATWG side.

- Yoav: If someone provided feedback on WG call and they're not a WHATWG member, that could be problematic.

- Yoav: Would give this more thought. A CG won't help either, as the CG will have IPR commitment but won't be WHATWG members.

- Carine: Except that it's not limited to W3C membership

- Philippe: Let's say there's a HTML proposal that happens in WICG and gets a PR for WHATWG, how do you guys handle it?

- Anne: There is a risk there that's assessed on a case-by-case basis.

- Philippe: IPR on contributions but not on the whole. Better than nothing. There's a risk but it's somewhat limited

- Yoav: Let me think through the IPR risk here, a broader question is is there interest for these discussions to happen on the WG calls.

- Pat: I have a vested interest, but I don't know there are other WGs that in incubation phase would be brainstorming and working through issues. WHATWG works off PRs and issues.

- ... Not aware of another WebPerf group other than this one. It does help to have broad industry commitment and contribution early in brainstorming phase around gaps, working across browsers, privacy, developers, etc

- ... e.g. Preload

- Anne: More review on WHATWG side would be welcome. Good to have other people from different perspectives on these PRs.

- Yoav: Take an AI to figure out if this is a mess from IPR perspective, and think through ways we could bring in those issues from HTML/Fetch/etc for the group to discuss.

Navigation Timing and cross-origin redirects - Noam Rosenthal

Summary

- Navigation Start being the time origin exposes cross-origin information, about the length of the time before the user reached the document’s origin. This is a problem, however any change to this would incur a lot of implications on the baselines of tests and on the usefulness of RUM testing in certain situations.

- It is not clear how to proceed, Though some suggestion were brought up such as allowing same-site redirects to still be exposed in this way.

- The conversation was missing people from the security/privacy space to weigh in on the implication of keeping things as they are.

Minutes

- Noam: Starting with a controversial topic! Navigation Start Time

- ... Polarized discussion on the issue

- ... From HR time spec

- ... When you click a link or submit a form, not necessarily the same origin as where you're going

- ... Time Origin is our 0 / epoch for everything

- ... All of our PerformanceTimeline numbers are based on that, performance.now() uses that as its origin

- ... Point in time where user started navigation somewhere

- ... Takes us to a X-O issue with this

- ... Lets say a user navigates using address bar to site-a.com

- ... site-a.com sends 302 to site-b.com

- ... Exposed Cross-Origin time

- ... "Prehistory" before I know what happened. I know how long it took but not what happened at all.

- ... Not the only case

- ... site-a.com submits a form to site-a.com, on POST send back a new Location to site-b.com

- ... So Pre-History is exposed, maybe on site-b.com I can detect there was a POST

- ... Doesn't have to be a redirect, because navStart happens before a bunch of events, unload/pagehide/vischange, those are fired after navigation start

- ... So any time spent on some site that runs those events would be noticed by that Prehistoric time on site-b.com

- ... All of those are combined, so Prehistoric time is a cloud of different things

- ... unload/pagehide/vischange, submit, redirects, etc

- ... Things the destination can figure out

- ... For RUM there's also random things influencing the numbers

- ... Malicious effects on other site's loading times by sending users with long redirects

- ... Graph between potentially harmful vs. potentially useful:

- ... Conclusions

- ... Two main options

- ... Option 1 is to stay the same, too late to change, would affect epoch and have comparison challenges

- ... Option 2 is to move time origin to redirect start time of current origin. I just don't know if I was redirected here by something that took a long time.

- ... If this is something we want to change, how would we ship this?

- Yoav: RUM is Real-User Monitoring, apps and dashboards that reflect the user performance and would be affected by this change. If we changed timeOrigin in this particular version of this browser that shipped this change, all impacted metrics affected by X-O redirects or other events, would move down which seems like a perf improvement but would result in confusion

- Noam: One option that I forgot to put in slides is to change resolution to timeOrigin that's very coarse, but still helps provide the time to get here

- ... Could help fix some of the issues with session sharing, but not share sensitive information

- Anne: I think that exposing the time of navigation is also possibly a problem. Once we've implemented various anti-tracking policies, the timing allows these two origins to do some correlation

- Yoav: Agree this is a future problem, not a current problem. I think Jan pointed out current problems with what we are doing now, specifically referrer guessing. Because referrer A uses a slow redirector and referrer B uses a fast redirector, you could guess which one.

- ... From my perspective that leads me to believe that we should fix this, but interested in the "how can we ship this discussion"

- Anne: I think if we come up with a solution it better take that into account. If we're making a change, make it once.

- Sergio: Provide an example that it's not particularly useful. In production at Automatic, we host wordpress.com and people have subdomains of wordpress.com. We set up RUM with monitoring based on NavTiming. Looked for pages with slow DNS times. domainLookupEnd

- ... Saw a lot of those in a couple pages, this isn't happening in DNS, it's happening before that.

- ... Happening if you type if you picked a wrong subdomain, you go to wordpress.com/typo. We found this through our logs that we found multiple levels of redirects, and caused people to take a lot of time landing on the final page.

- ... We've been able to mitigate some of this by removing a few HTTP to HTTPS redirects

- ... Been able to mitigate a real perf problem for users from this API

- ... Not saying this problem doesn't have privacy issues or shouldn't be fixed

- ... We wouldn't never been able to think to add e.g. TAO headers to find this problem

- ... Maybe have a coarse number to define how much time was spent before navigation, or a "there were redirects"

- Noam: Could we assume some of the redirects were Same Site?

- Sergio: Possible, but in this particular instance it's subdomains that don't exist

- ... Could be from custom domains or auth flows or redirect flows for things like OAuth

- ... There's a category of performance issues that would become invisible unless you have the right headers set for every request

- Noam: For this particular problem you don't need every request to have the right headers, unless the earliest one has the right headers

- Sergio: That's correct, there are a few issues as the metric definitions would change based on whether the headers would be set or not

- ... I just wanted to bring up this example showing where this info was useful

- Andy: I get why we want to change it. Removing what that time does is it removes the reflection of User Experience. Losing long redirect times changes how e.g. the experience of LCP is reported

- Yoav: I think it's interesting to think of this as a two-part problem: How can we fix the current problem, how can we ship something that doesn't break dashboards. Part two is how we can re-expose that information in a safe way. Sounds like there's an appetite for part two.

- Andy: Value in knowing that data, that time

- Scott: One question about discussion is, are you just thinking about moving navigationStart or adjusting redirectStart/redirectEnd or unloadStart/unloadEnd. So right now navigationStart is the only way to gather information for X-O pages.

- Noam: redirectStart/End is under-specified, go to this problem by trying to specify it

- ... If we choose to live with current situation, which I don't think we can, we might as well expose it

- ... But first of all I want to start with epoch

- ... That information is the hardest to change

- Scott: What we use some of the data for in same-origin, redirects and unload times we've had real actionable data to drive those down

- ... Other is that domains for a large company is complicated, many TLDs

- ... Reason why solving this could lose data for optimizing user experience

- Yoav: You'd need something like First Party Sets or something

- Pat: A lot of what we've heard so far, but I'd be really concerned that the performance measurements wouldn't be usefully representative of the UX. As far as the loading from when they took an action until anything.

- ... Canonical problem case is an ad placed somewhere on 3P site or AdWords that has click-trackers, and marketing team adds trackers for the site, you lose ability to see the UX is bad

- ... I think we'd be blowing up the usability of the loading metrics

- Yoav: One thing we could do as a bandaid is we could block this information if Referrer policy says we should block it

- Katie: To add to data points where it's valuable, just last month we used abnormally long prefetch times to find traffic from a scraper/bot. We saw huge spikes in RUM from page load time. A lot of these data points have abnormally large before-Fetch. We used that point to track down other signals that it's a scraper/bot that we could block from our RUM collection.

- ... I'll also add that it's a little confusing sometimes that with this data, you see the problem from these redirects on the following page, not the source page. We saw problems from the checkout page on our home page. Changes happened on the checkout page, but metrics were not showing them in the checkout page, but on the next one.

- ... Not always intuitive to use, but there's value to knowing that and to uncover bugs and bad experiences

- ... Does measure something of value to users

- NPM: Sounds like there's a lot of people that think the data is valuable. Is this an urgent problem to fix?

- ... Doesn't seem like the solutions would work for the discussed use-cases

- Noam: I don't think this is urgent to fix, but I ran into it. By coarsening the numbers, the averages and big problem could be useful for people, but harder to track. Could be bandaid to problem, can't correlate click-time on navStart on millisecond to see it's the same user.

- Yoav: Agree it's not necessarily urgent, but there is at least one problem we know of. For sites that have Referrer policy, that could be used to infer which site linked to. Seems like a cross-origin leak that we should fix once, ideally.

- NPM: Can you explain the Referrer issue?

- Yoav: Your traffic is coming from two major sites. Both don't send Referrer. One has a fast redirector and the other has a slow redirector. You'd be able to tell which of the two sites sent traffic based on timing.

- ... Don't know how realistic that scenario is or urgency

- ... More future-facing scenario where we don't want Site-A communicating userID to Site-B. navigationStart and click time could help them communicate bits of information between two sites.

- ... Ideally we would want to minimize the amount of bandaids here and come up with a real solution

- Noam: I suggest we continue this on Github issue

- Yoav: What is the size of the impact on dashboards?

- Nic: baseline changes cause confusion. This is a thing that will happen incrementally, and would not be able to compare the metrics.

- … Will change all the metrics. Would definitely be a big concern

- … As a counter point, some customers excluded all the “pre-historic” data, because they don’t care about that. So some of them choose to start at fetchStart

- Cliff: +1. It would be extremely disruptive, so we have to be very careful rolling this out.

- Nic: Some corps may have millisecond goals to their metrics, and changing the baseline would require communication

- Cliff: Same challenge with synthetic. RUM can be trusted, so changes can be disruptive

- Dan: There were changes to CWVs over the past couple of months, so if communicated appropriately and correctly, it’s something that people can deal with.

- Noam R: The forum of this conversation that are into performance rather than privacy. We should talk about it with both teams in the room. People can bring valuable information about attack vectors from that works.

Preload - Noam Rosenthal

Summary

- Preload cache is not specified, leading to a lot of different behavior across browsers.

- It was pointed out that preload headers should be supported alongside link headers.

- The Pull request for this is underway.

Minutes

- Noam: Conversation about preload - issue #590

- … Came from an investigation of what preloads are and what they should be

- … Premise - fetch early something you’d use later

- … Avoiding a situation where things you know you need to load are delayed - a good problem to solve

- … But the premise doesn’t go into the details

- … 3 topics:

- … availability - once you loaded the resource, is it available forever, for any request, in workers?

- … caching - should preload interact with cache headers? What does it mean to expire? Can it expire before it loads?

- … error handling - preloaded the resource and it’s an error, should you try to reload before use? If it’s not a network error, but an image you can’t decode? 404?

- … Not theoretical issues, but implemented very differently between the different browsers. Resources errored in preload will be fetched again. Same for undecodable images. Preload with fetch is inconsistent.

- … Chrome optimizes for next request, Chromium will keep the preload for the next request, but not after that (beyond HTTP cache)

- … It’s not necessarily a huge issue for users as the worst case is a double request, but getting it to work across browsers is difficult. You can improve performance in one browser and hinder it in another.

- … Possible solutions, trying to have a simple definition:

- … naive early load - fetch the resource and count on the HTTP cache to keep the response.

- … Could result in double requests if the HTTP cache evicted

- Type specific resource cache - uses the `as` value and adds it to the list of loaded images. Problem here is that un-decoded images can double download.

- ... Also that type-specific cache would need to be specified

- … Strong Cache - similar to the Cache object of SW, and ignore caching headers. Equivalent to having the link header hold the response, and have future element reuse that response before calling the Fetch algorithm. Need to be careful with workers.

- Similar to having a cache object is a SW, we return the response regardless of if it’s an error, etc

- Yoav: One thing about Chromium implementation is the memory cache as a fallback for Preload cache. Some things are kept in the memory-cache that may not go to the HTTP cache. One of the benefits of that architecture in Webkit and Chromium, is that the HTTP cache has more overhead. Having a close-by cache is beneficial vs. loading things into HTTP cache and hoping for the best

- Noam: See memory cache similar to HTTP cache, it interacts with cache headers.

- Yoav: To some extent

- ... It's equivalent to Webkit Resource Cache

- ... Memory Cache is also not well defined, possibly another issues

- Dan: I don't know how important it is to discuss, but when would something NOT be in Preload Cache but would be in Memory Cache.

- Yoav: Chromium-specific, after first request is hit (preload with IMG tag using preload), resource is no longer in Preload cache but resource is maybe in Memory cache, assuming another element is holding it

- Noam: Preload is a "strong cache" for one hit

- Dan: Memory cache doesn't necessarily respect Cache headers?

- Yoav: Correct, it's a weak cache so if no element is holding that resource, it's gone from the cache. Respecting some cache headers but not all, e.g. no-store. Maybe some variant of Vary header. Won't necessarily respect Caching lifetimes. Considered since it's loaded in document once, it can be used in same document again even if it's expired

- Anne: What are the conceptual problems with trying to merge those caches, Memory and Preload Caches

- ... It would no longer be one-time-use, is that a problem?

- Yoav: How cache started in Chromium, Preload cache is version of Memory cache

- Pat: Semantics are different from just one-use, ignores no-cache etc. Preload allows you to re-use that one-time-only API, where Memory Cache wouldn't cache it at all.

- Anne: Primarily around Fetch and XHR calls?

- Pat: Script returning different content for a URL, e.g. ad

- Yoav: JSONP scenario

- Noam: Many of those scenarios I wouldn't expect them to use Preload

- Anne: Resource Cache is fairly limited, its scope is wider. e.g. a stylesheet could be cached in one document and re-used in the next document. Where Preload is scoped to a document?

- Pat: Yes

- Yoav: Because Memory Cache is a weak cache, it should evict resources

- Anne: If you keep current document open and navigates to a new tab, it could re-use

- Yoav: If same renderer

- ... With a new process you may have to go to the HTTP Cache

- Dan: Seems to me the bigger distinction is that Preload is not just caching, then it's also parsing or additional action for various types of resources, not just loading into memory as-is

- Anne: That might not be observable, up to implementations

- Dan: Optimization that you may or not notice

- Yoav: It is web-exposed, things not in Memory Cache don't have ResTiming entries

- ... If you go to the HTTP Cache you do trigger them

- Dan: Seems to be a significant implementation distinction in browsers between Memory Cache and Preload Cache.

- Noam: That problem is what can create several network requests in scenario of invalid image and things

- Dan: Exactly if you don't parse the image or script you don't know it's invalid

- Simon: Related topic, speculative HTML parsing. Is that considered in this realm of things.

- Yoav: Related, they're cache in Memory Cache. Slightly different rules between WebKit and Chromium

- ... Initially Preload Cache was just another case of speculative parsing we're using Memory Cache for, later changed in Chromium

- Simon: In Memory Cache for speculative parsing, there is no reference for the resource

- Yoav: No reference to the element

- Anne: Maybe there's ways where we can merge concepts but keep the same behavior? If something was entered into this cache due to Preload it has a one-time flag. Flag can be considered for different scenarios.

- Pat: One case is if you have link rel=preload, and you remove the link, does it stay in Memory Cache or does it get removed?

- Noam: Yes removed, possibly in HTTP Cache

- Anne: There with a one-time flag?

- Pat: But the link was what put it there with a one-time flag

- Anne: I think you can make an argument either way

- Noam: If we create a semantic where link tag is responsible for reference, you can remove link tag when you no longer want to use it

- ... Remove it when ready

- ... Definitely be better to specify that's what it does and have platform tests

- Pat: Removing the link tag as the forced condition for the one-time would be a problem for header-based preloads

- Noam: Is header Preload in use?

- Pat: Yes, mostly from HTTP/2 Push

- Simon: On topic of removing resource from Preload cache when you remove <link> element, I know of a pattern where you use rel=preload and you change the rel attribute to stylesheet

- ... Shouldn't prune resource immediately

- Anne: Do you consider resources not having RT entries a bug? Seems like a bug

- Yoav: If we added it, almost all resources would have two RT entries. Due to speculative preloads we don't want two entries at least. For preload as well, I wouldn't consider it a problem. If it was used there was no request going out from renderer

- Noam: Bug is that resource cache isn't specified

- Anne: You could end up with a document where it doesn't see any RT entries because of sibling documents. Agree with deduping for some, but maybe not entirely.

- Yoav: Have possibly at least one entry in a document

- Alex: I recently fixed some RT bugs in this area, one of the WPT tests would speculatively parse the HTML it receives and then speculatively fetch script, put in Memory Cache and look at RT data for that

- ... I used the RT of the real network fetch, but then if you fetch it again it says 0ms

- ... Because it did actually go to the network at some point. But seems like it's not well specified

- Yoav: Is that Preload or speculative preloading in general

- Alex: Speculative preloading based on HTML parsing

- Noam: Thanks for the one-time semantics explanations. I’ll try to specify something in that area that matches what browsers do

- … errors? We can specify that once the rest is specified

- … about HTTP errors, that’d have to be part of the spec

- … IMO, errors should be forwarded

- Anne: you might want to test this. We can render 404 images, if they were actual images. Not sure it’s cached

- … Otherwise, some browsers have XSS checks for some images. Further questions for CSS (e.g. if it doesn’t have the right mime type)

- Simon: For images, per spec it doesn’t put an image in the cache if it’s not a valid image. We’d only cache successful images. Maybe we can change that. Not sure browsers follow that.

- Noam: In preload they do, but maybe for 2 out of 3 browsers

- Anne: At least in Firefox preload hooks into existing caching infrastructure, which can explain some observations.

- Noam: We should either go resource specific, or go towards a generic cache. It can be resource specific, but we need to specify it.

- Anne: There are a couple of types where there’s a spec, but otherwise there isn’t

- Yoav: Memory Cache distinguishes destinations where HTTP Cache doesn't

- Pat: Those are spec'd in Sec-Fetch-Dest?

- Yoav: HTTP Cache doesn't necessarily Vary on those

- Pat: As part of spec process for Memory Cache we can

- Anne: Depends on where cache is placed, if you can get an entry out of it

- Yoav: A lot of checks that Memory Cache currently does is to avoid that scenario and we should maintain that property

- Dan: In context of errors, if it's a HTTP error then it's an error, but if the actual resource is an error, we kind of ignore that because not all browsers will parse all resources?

- Anne: What we're saying is it might be type specific

- Yoav: I think we can specify it can be type-specific but interoperable

- Dan: You would assume script would be pre-parsed across all browsers

- Yoav: Doesn't have to be preparsed, as long as it's eventually parsed

- Anne: Aim to specify the observable behavior is equivalent (network traffic, not perf)

- Simon: And RT API

- Noam: Same mixture of preloads and resources should end in same number of resource requests and RT entries

- ... Should still cache invalid script with an error

- ... With images I think it should be specified

- ... Even if an image got a 404, first request going through Preload should get that 404

- ... In general if we go with first request thing, if one-shot thing from Preload is available, we just get that response and clear it, everything else separate from this

- ... Fetching resource first checks Preload, separate from Resource Cache

- Noam: Please be involved in GH discussion

Measuring preconnects - Yoav Weiss

Summary

- I outlined the difficulties in knowing if preconnects were helpful

- Folks pointed out that since connections are potentially shared between browsing contexts, the information may not be easy/safe to expose

- Reporting on unused preloads seemed to have more support

Minutes

- Yoav: ResourceTiming gives us a connection time

- ... So we can know when a connection was re-used because fetchStart==connectStart==connectEnd

- ... Know whether resource was pre-connected

- .. Can't distinguish between implicit preconnects (knows things about user), explicitly preconnets (tags), or re-used persistent connections

- ... We don't know when they happen?

- ... We don't know if they were useful?

- ... Implicit preconnects

- ... Reused persistent connections

- ... Explicit preconnects is <link rel=preconnect>

- ... Opened issue many years ago about RT and preconnect

- ... Recently more relevant with Early Hints

- ... Early Hints allow server to send HTTP response headers via 103 Early Hints status before it gets the rest of the content / original hints

- ... Preload or Preconnect headers use-case

- ... For Preloads, we can estimate those were useful via RT API. But for Preconnects we don't know if the connection happened due to Early Hint and wasn't available before

- ... A few options for how we could distinguish

- ... When did preconnects happen? vs. the fact that it did happen

- ... Finding useless preconnects

- Pat: Unless you're assuming network partitioning on a per-doc basis, the connection pools don't belong to any document. Two tabs open, one could be creating connections for the other one. I don't know you want to leak that info unless it was a request on that document creating it

- Yoav: Should be partitioned for all top-level same-site

- Anne: Connection pools are partitioned, but you concern is valid

- ... If top level site A, and a embeds B, A shouldn't be able to detect if it's a reused connection

- ... At some point there will be leaks because there's not a key for the whole chain

- Yoav: Saying if A embeds B, B loads a resource from C, and A loads C it can see if there's an information leak

- Pat: Is it not enough if you're doing Early Hints trial from Preconnect, and you see the B fork of trial that it spends N% of time less in connections?

- Yoav: What I hear from field is that it's hard to tell those scenarios apart

- Pat: If it's not having an effect though?

- Yoav: If you have a very low data volume on some origins, hard to tell whether it theoretically worked or not

- Dan: Being reporting on unused Preloads would be much more important than unused Preconnects, consequences much more severe

- Anne: Assuming Preload is document scoped, you fire off a report

- Andy: When I've used Preconnect we just look at the effect on the 3P that we're connecting to (e.g. Cookie Consent banner). Measure something that matters.

- Dan: Way that you should look is the reverse, if you added Preconnect and it helped. Then you make changes in code and it no longer helps but nobody checks anymore. All those marketing pixels that live forever.

- Yoav: Going back to the useless Preconnect case, I take your point that you can't expose that cross-origin iframe, but that's not the only case for Preconnects. Any objections to preconnects the same origin triggered, or that didn't use? Hard, I have to think about it more.

BFCache - Fergal Daly

Summary

- Chrome, Firefox and Safari now support back/forward cache. Chrome is working on increasing its hit-rate. RUM vendors can help by exposing BFCache hit-rates in dashboards (some already are).

- We would also like to expose the reason for cache misses (e.g. holding a WebLock). Proposing an API for this and would like feedback (seems like exposing via PerformanceTimeline is favoured in this session). Already exposing reasons in DevTools.

- Unload is a big blocker on desktop for Chrome and Firefox (Safari ignores it) and problematic legacy API in general. We want people to use pagehide, visibilitychange or others like upcoming beacon-API, (sorry no link yet). Chrome is doing outreach and exposing in lighthouse.

- Feedback is that customers don't get "BFCache", maybe another name would help.

Minutes

- Fergal: Team effort - bfcache-dev@chromium.org

- … quick summary of BFCache.

- … Firefox and Safari have them for a while, Chrome recently added

- … Navigating away from a page discards the objects created. With BFCache the page is paused, and can be resumed without re-parsing

- … Makes history navigation instant (for the most part)

- … See 10-35% of history navigations served from the BFcache, aiming for 50% next year.

- … Have requests for RUM folks. Would be great those metrics included BFCache hit rates for the sites in question

- … Would be great if dashboards tell people what’s causing their sites to miss BFCache

- … and would be great to move off of unload events

- … Need more awareness - many developers are unaware of it

- … e.g. Wikipedia fixed it quickly once they were aware of them

- … If you’re brought back from BFCache, the event has a persisted bit that’s true

- … Can divide that by navigation entries with a “back_forward” type

- …< Insert code example here>

- … Lots of reasons why you’d be blocked from BFCache

- … Chrome initially blocked everything that could’ve been problematic and now working on unblocking those

- … Example - cache-control: no-store

- … If you hold a weblock it’s difficult to put you in BFCache, we’d have to let other folks take it and kick you out

- … Other features as well result in blocking

- … Pages can see that, handle it properly in pagehide, and be able to go into the BFCache

- … Explainer on an API that would give you the reasons for why you didn’t make it into the BFCache

- … https://github.com/rubberyuzu/bfcache-not-retored-reason/blob/main/NotRestoredReason.md

- … Give you frame URL, and reasons when possible (i.e. when not cross-origin)

- .. Would like feedback on the shape of the API

- … Could attach it to PageShow, NavigationTiming or Reporting API.

- … Would love feedback on the GH issue

- … Would like to enable sites to drive their cache hit rates

- … DevTools show such reasons, but that’s limited

- … We also want to ask people to stop using unload

- … Android ignores unload for BFCached pages

- … Safari is the same

- … Chrome desktop cannot do the same, but it is the largest BFCache blocker

- … You can change unload usage to pagehide usage typically. PageHide is more reliable

- … You can also tell apart PageHide that goes into BFCache

- … Sometimes VisibilityChange can be a good replacement.

- … If you have unload use-cases that are not replaceable, please reach out.

- … Orthogonal, but there’s a proposal for a beacon API that delivers information after the page is discarded regardless of reason

- … Almost every usage of unload can be replaced by PageHide, but it’s still not 100% reliable, and there’s no “last PageHide”, so hard to send a final beacon from the page.

- Nic: Thanks! Definitely something that we are planning to support in our dashboard. Glad you’re thinking about attribution when things don’t work out. Have to think through about the best delivery mechanism. Generally favor PerformanceTimeline.

- … BFCache itself is a mouthful and we’re not sure how to explain that. Maybe as a community we can figure out a better name? Common terminology?

- Olli: Blazingly-Fast cache, originally

- Dan: We already collect this info, exposed to us rather than to users, as they can’t disable it. Did the work of removing the unload event. Collect the data of when a BFCache occurs.

- … We don’t normally look at those numbers. The motivation to collect it was to get the best performance possible and avoid interference with CWVs.

- … We don’t actually get BFCache for Chrome, because we use WebWorkers for most of our sessions.

- Fergal: Working on dedicated workers support

- Yoav: Performance timeline may not be available at the time of the page unloading for RUM scripts?

- Fergal: Data should be available to a page that wasn't able to BFCache when the page comes back in at the pageshow event, so the RUM script should be there

- Cliff: As a RUM provider, we’re excited about exposing more navigation types as dimensions. Similarly excited about attribution

- … How we talk about BFCache is important, as we have more non-technical folks. So language is important. Worthy a discussion. +1 on PerformanceTimeline

- Nic: Suggestion to create an issue on naming suggestions. Nic to take AI

- Alex: We’ve been measuring and trying to increase BFCache use, so interested in this direction. Recently renamed it from PageCache to BFCache

- Olli: How to deal with cross-origin iframes? E.g. youtube widgets

- Fergal: When an iframe is blocked and its cross-origin is blocked. You don’t know what URL it was. But if you added it to your page, you should know what that widget is and where it came from.

- … Looking at logs we’re trying to find the worst offenders and get them to remove their unload handlers.

- Ian: Wanted to talk about the reporting API. I know Firefox and Safari made some work on that, and we could use the infrastructure to report BFCache related info.

- Fergal: If there are problems in the explainer with the ReportingAPI, let us know.

- Ian: Example is not bad, but would require the code to run in the page.

- Fergal: I’ll add a note about reporting to the explainer.

- Anne: Interested in Reporting API and Observer. We have a behind a flag implementation for both. Only integrated with CSP, need another feature to justify shipping it.

- … We’re still supportive of the API once there’s justification.

- Steven: Where in devtools can you see BFCache misses?

- Fergal: shipping in Chrome 95. Under “application”

PageVisibility and requestIdleCallback - Noam Rosenthal

Summary

- PageVisibility and requestIdleCallback are slowly folding their content into the HTML spec.

- It was agreed that PageVisibility can be folded entirely (which has since happened), and that requestIdleCallback would fold only the deadline algorithm for now.

- Regarding the future of requestIdleCallback, some request was made to expose some of the deadline information outside the context of the idle period deadline.

Minutes

- Noam: Discussion topic, not presentation

- … In my work I'm going through a lot of WebPerf specs, cleaning up old issues

- ... Cleaning up technical debt

- ... Common thread of what I'm doing is moving things into Fetch and HTML

- ... Last year moved from ResourceTiming and NavigationTiming into Fetch and HTML

- ... This year, same thing but also getting into smaller specs

- ... Recent PRs they're very close to removing the entire spec into HTML

- ... pageVisibility PR https://github.com/whatwg/html/pull/7238

- ... Defines event, states, hidden, etc in HTML

- ... Makes pageVisibility spec itself not have anything but prose (that could be transferred to HTML spec)

- ... Same for requestIdleCallback https://github.com/whatwg/html/pull/7166

- ... Attempts to define what "deadline" means, which is a bit hand-way today

- ... Defines an event loop terms, and testable terms

- ... A lot of the rIC spec is about that

- ... Issues in the rIC repo are about the deadline not being well defined

- ... Some of this takes the "hook" concepts in PageVis spec, which other things can call

- ... Why not just call them one-by-one and we can call them in order

- ... Making it more predictable and clear is a big Pro

- ... One con of putting things in HTML spec. I'm a member of W3C and WHATWG, not sure if everyone else is

- ... In general there are fewer reviewers available in HTML specs

- ... However it's a different process than working with W3C specs that may feel more experimental, the process can go faster for better or worse

- Yoav: From my perspective I think that it makes sense for these two specs to be better aligned with HTML. The PRs that I looked at make general sense and agreed that they don't leave a lot beyond setting up the context in those documents

- ... Those two specs define bits whose processing is outside of HTML

- ... Makes sense for those two specs to move

- ... Unless there's a specific reason why we shouldn't

- NPM: My only concern for Page Visibility is if we ever decided to expose additional information via the Observer that we have discussed in the past, we'd have to revive the spec we just killed.

- Yoav: Or another Visibility Observer we'd have to add that to the charter

- NPM: Regarding rIC I'm not super familiar with it, not sure if there are any intended additions to it

- Yoav: Spec editing situation there is complex, no longer involved

- Scott: We have plans to keep working ins scheduling space, but not necessarily rIC

- ... Taking over editor and ramping up on it

- ... I think it does make sense in HTML, given how integrated it is, no objections at this point

- ... If we did have to expand on it for any reason, we could do a PR to HTML, or try to monkey patch it through a different spec

- Noam: Wanted to suggest - some of these specs are tiny, it may be better to arrange specs per subject rather than function

- Scott: This is what we’re doing with the Scheduling APIs. One option would be to move that processing to the scheduling APIs spec. It’s similar event loop modifications, but maybe it should be in HTML

- Noam: Makes sense to have the event loop modifications be part of HTML and keep the web exposed bit in W3C

- … If anyone is interested in the deadline discussion, it felt that no one is extremely familiar with it.

- … A deadline is when you get an idle callback you get a number of seconds you have for “idleness”. May make sense to see if people are using it in a meaningful way.

- Olli: Have you compared how different browsers implement it?

- Noam: Added WPTs, and based on the definition, browsers handle it differently. Not super different.

- … Nice to work on it, as it required a lot of timer revamp in HTML, and now it’s better defined.

- Philip: I used that deadline in the past in code. At the time, it was only implemented in Chrome. I avoided running the cleanup code if the idle time was lower than 16. Not the most useful signal, but nice to know what idle period I was in.

- Noam: Might be more explicit to just expose that: is there a pending render opportunity

- … That’s how the deadline logic is implemented.

- Olli: Not quite true in Firefox. We can create new rendering opportunities as needed to improve performance

- NoamR: best guess

- NoamH: Using it as a heuristic to execute expensive/blocking tasks. A better approach for the render opportunity is the isRenderPending.

- … We do use the deadline and skip tasks when there’s no time

- Scott: isFramePending is useful outside of rIC, so it largely takes care of that use case. Deadline also takes into account pending timers.

- Noam: Those are the 3 things that can effect it: timers, rendering opportunity, 50ms timeout.

- … Can be useful generally

- Scott: People also ask for deadlines outside of rIC as a useful signal

- Alex: We have devices with variable framerates that can be greater than 60 FPS, so don’t want to encourage the web to be locked into 60 FPS.

- Noam: This is where deadline fails. It’s heuristics based on assumptions, rather than providing the signals directly. Libraries could then do that for people.

- Michal: Followup to Alex’s comment. What’s running on that frame? Hard to know ahead of time, but maybe you could use past info to predict the future.

- … Skipping a main frame update is not equally bad in all situations.

- Noam: I’ll open an issue to consider exposing direct signals instead of deadline heuristics.

- Dan: Just wanted to say that I’m hard-pressed to think of a really good use-case for rIC, and it’s starting to see it as an anti-pattern. So maybe it's not worth investing in it.

- Philip: To clarify, I was looking for a dedicated scheduling API, to fire low priority tasks. Could be a better API to better handle that use case.

- NoamH: In the same sense, having a signal of how much time you have remaining, enables you to split you tasks.

- Dan: If it’s a DOM related task it shouldn’t be in rIC

- NoamH: Render less important aspects of the UI (generate HTML using React). You could consider doing that in a worker, but there are tradeoffs.

- Dan: That’s the part we need to fix.

- Yoav: Moving things over may also depend on ability to discuss WHATWG things

- <TODO> conclusions

RUM pain points - Nic Jansma

Summary

- Discussed what "ideal" RUM API characteristics are, including being able to look-back into history and having enough attribution to be able to affect the metric

- RUM needs around more SPA support, better ResourceTiming visibility, observing cross-origin frames, and other resource characteristics

- Would like to see a more reliably mechanism for ensuring beacons are sent (beyond Beacon API), maybe through Reporting API

- Echos of support from other RUM vendors and companies using RUM for their own analytics

Minutes

- Nic: Wanted to talk about RUM pain points from my point of view, influenced by feedback from SpeedCurve and Pinterest

- … Ideal RUM APIs

- … Prefer APIs that can look back into history - performance timeline or perfObserver with a buffered flag.

- … even if your library wasn’t on when the event happened, you can collect it later

- … As a 3P provider, we sometimes load late, and such APIs are critical

- … Attribution is key

- … We’ve been getting better with that. In previous discussion with Fergal we discussed BFCache attribution, which is great

- … SPA support - designing APIs with SPA support in mind. Some of the current APIs don’t work great with SPA, e.g. LCP

- … Want to see RUM loading improve. We’re using a loading snippet that’s 2.5K

- … Put this junk in the pageload to avoid affecting onload performance.

- … Can run scripts at onload and have visual indicators

- … So jump through hoops to avoid it. Async and defer are blocking onload.

- … There is a possible solution in resource loading orchestration, where there are different hints to load scripts at different stages

- … When RUM measures something people ask “why” and “how to fix it”

- … LCP.element and CLS.sources are great examples for attribution out the gate

- … LongTasks are the flip side. They have no attribution detail which helps find the source.

- … In a modern website you have so many dependencies that current attribution doesn’t help

- … Pinterest wanted to do better root cause analysis, and knowing the trigger would really help

- … JS self profiling could help, but it’s a sampling API so it may not help in all cases.

- … Last year we had a great presentation from Patrick Hulce on that front.

- … SPAs - affect nearly every RUM metric

- … Many metrics are irrelevant

- … You have to think about how they affect an SPA

- … In mPulse we have an “are you an SPA” boolean that then measures different things

- … AppHistory can help, but for Soft navigation, we can hook into some events to know when it starts

- … But we don’t know when it ends

- … We listen to mutation observer, patch Fetch, and doing a lot of work that we’d love for the browser to help us do better.

- … For MPAs, the page is loaded once the onload fired. For SPAs onload doesn’t matter

- … We’re definitely talking more about SPAs, but we’re not yet there.

- … e.g. LCP needs a reset

- … on the other hand, CLS does work well with SPAs, because you have all the events to slices them as you want

- … So need to focus on new APIs supporting SPAs

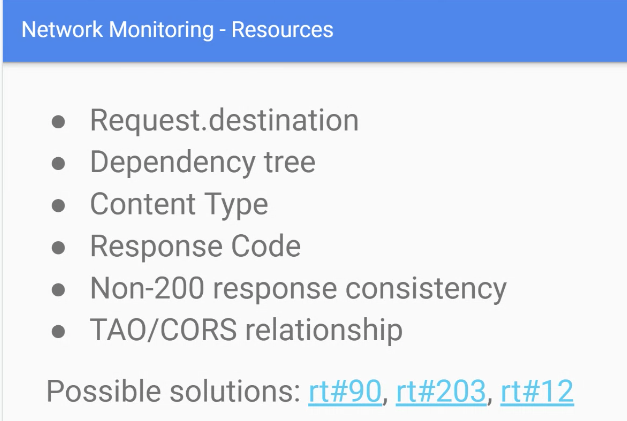

- … Network visibility - some proposals but no solutions

- … If you measure resources, all cross-origin iframe resources are not visible, which constitute 30% of hits and 50% of bytes.

- … So we show customers waterfalls, but they are incomplete

- … Particularly painful for ads

- … Also for CLS, visible in devtools but cross-iframe CLS is invisible to RUM

- … Proposal for a “bubbles” API to bubble up resource entries to the top-level document, and cross-origin iframes can opt-in to share that data.

- … Observing when network requests start

- … Enables SPA monitoring. Currently patch Fetch and XHR. Otherwise incomplete, and makes it hard to calculate network idleness.

- … Ongoing work on Fetch+SW integration - better cache hit rate reporting would help Pinterest

- … More information not exposed for resources›

- … No consistency on non-200 responses. Want more visibility into that

- … Worthwhile to continue past discussion on TAO and relationship with CORS

- … Last thing: reporting API

- … Browser extensions polluting data

- … Would love to tag on metadata

- … Today the only way is through the URL

- … would be useful to add session ID, session length during the lifetime of the page

- … May have privacy and security implications

- … Was a presentation to use reporting as a more reliable beaconing mechanism

- … mPulse sends beacons when the page loads, because it’s most reliable. Would like to send it later, to enable more data. There are some downsides to sending 2 beacons.

- … Some customers send it later, but there’s a reliability cost

- … Lost beacons means losing experiences

- … For some customers that’s a good tradeoff, but an API that would fix that tradeoff would be helpful.

- … Thanks for SpeedCurve and Pinterest for their ideas.

- Katie: Wanted to say +100000 to all of that. Owns and maintains internal RUM. Same issue. We do all kinds of wild stuff to work around that.

- … A couple of years ago we moved our JS build system, and when they tried to launch their perf monitoring went off the chart.

- … Grouping the perf monitoring bundle ith the JS, so performance changes changed when perf monitoring ran

- … Ended up inlining that code to avoid variation

- … We see this when running the monitoring code on the same thread, changes result in impact on the measurement.

- … Big problem overall: attribution, SPAs

- NoamH: Curious - are your customers interested in hang detection - when the page blocks.

- Nic: Yeah and the reporting API crash reporting is great. A freezing API would be a good fit as well.

- NoamH: We’re exploring similar ideas

- Olli: In slide 2 you mentioned looking back in history. Need to be careful. E.g. last year a social media site used a buffer size of 100000, which resulted in huge memory bloat.

- … Maybe the APIs should be designed to prevent such misuse

- Nic: ResourceTiming has explicit control over the buffer. PerfObserver doesn’t allow infinite buffer size

- … We have a doc that suggests recommended buffer size for implementations.

- … Definitely a tradeoff there.

- Dan: At Wix we also use inhouse perf monitoring. Definitely echo everything you said on attribution, despite controlled env. With regard to SPAs, what you do with CLS creates some discrepancy between how you measure CLS and how CrUX measures CLS. We decided to align with CrUX because that’s what users see.

- … What do you think about integration with privacy cookie banners, consent, etc?

- Nic: We indeed report a different metric from CrUX. Customers use both data points.

- … CLS sessions is a great way to think of this, and points out the worst layout experiences.

- … Otherwise, we have some customers that try to measure the effect of privacy banners, etc. Still at early stages.

- Dan: Wasn’t asking about the banner’s perf implications, but about the consent policy itself. Avoid reporting things, etc.

- Nic: Gave customers the choice and it depends on their policies.

- Cliff: On the LCP issue, is there an open issue on that?

- Nic: There’s an open issue to reset LCP.

Measuring SPAs - Yoav Weiss

Summary

- Outlined a path to reporting soft navigation in RUM APIs, including related paint timings.

- There was interest in that happening, but also in a “Paint Timing reset” API

- We should be careful not to take the first paint after a navigation (e.g. button color change, spinner) as *the* FCP.

Minutes

- Yoav: Soft navigations are hard

- ... aka same-document navigation

- ... with History API, user typically clicks on something, fetches content, history.pushState(), DOM modifications

- ... AppHistory API proposal, user-initiated link or "appHistory.navigte()" API and "navigate" event

- ... transitionWhile() with a Promise that fetches content and modifies DOM

- ... What's hard? There's no clear start point (History API model)

- ... Don't know when navigation has started, hard to link to user click event

- ... Hard to distinguish URL-changing interactions from soft navigations

- ... No correlation to paint events that happen afterwards to soft nav itself

- ... Paints may happen after nav but may not be related to nav work itself

- ... No clear starting point: App History came up with "user initiated navigation" (anchor or form)

- ... Interactions vs. navigation: detect <main> paints? % of screen painted?

- ... What happens when main element is on a small part of the screen? Mis-incentivising

- ... Maybe we can filter interactions based on past hard navigation

- ... Paint correlation - same as responsiveness

- ... Exact same problem we have for soft navs we have for responsiveness, we don't know next frame is related to that user action

- ... Potentially async work that can happen and need to be completed before related frame is presented on screen

- ... AppHistory - limit tracking to promise chains

- ... Any promise that dirties the DOM and is paint causing, we can track back to navigation

- ... Promise Hooks, V8 internal API, an option but has performance implications

- ... Look at tracking tasks from render side

- ... <demo!>

- ... On AppHistory demo page, you can see navigation and task that resulted in a layout operation

- ... Essentially I think that it's far from done and there's still a bunch of problems, e.g. prototype doesn't handle userland promises

- ... Path between navigation or click events and the DOM operations that result

- ... We may not have to rely on AppHistory, although alignment could be nice

- ... Responsiveness could use same mechanism

- ... Maybe this has effects on LongTask attribution

- ... Caveats: Cannot track all tasks, e.g. data queues

- ... If people are putting data into a queue and getting it later, we don't have a way to correlate and say these tasks are a continuation of one another

- ... I think from a navigation perspective this is fine

- ... We can essentially tell developers that if they want to be able to track soft navigation, this is not something they should be doing as part of their navigation flow.

- ... Might be easier to implement when task scheduling is centralized

- ... Not sure if this is cross-browser compatible, so feedback would be appreciated

- ... If we want to expose paint events as a result of this correlation tracking, there may be security restrictions on a number of paint events, to avoid developers gaming this somehow and get more paint events than they should to sniff out visited state on links

- ... It's possible the requirement for a user-initiated navigation would make it so this throttling is not needed, but I haven't passed this bys security folks

- ... So if we can detect soft navs, what can we do?

- ... perf-timeline#82 API shape for monitoring new navigation-like entries

- ... Point in time developer resolved the promise

- ... API shape for same document entries, as well as other navigation-like entries

- ... One result of past discussions is we need a Navigation ID to correlate other performance entries with that navigation (e.g. RT, UT) to be able to group them per-navigation

- ... Real, BFCache, Soft navs

- ... Paint-timing for same-document navigation, would enable Paint Timing correlation

- ... Navigation ID helps to correlate to relevant navigation

- ... May or may not depend on App History API

- Scott: This is cool, one of the things we're going to think about next year. Otherwise penalizes SPA-style nav even though it's better for users

- ... We have a lot of metrics around "largest" or "first"

- ... Wonder if shorter-term work is resetting timers or on navigation so we can re-use those same mechanisms

- ... Worry if handling a soft-nav diverges too much from initial nav, harder to explain to developers how to optimize this

- Yoav: Reason it's hard to reset and re-fire LCP and FP and other metrics, right now with History API we don't have a clear starting point. When do we restart them? If we fire them, what's their time origin? Makes it hard

- ... Security concerns around exposing paint times more often than once per navigation because it can be used as an attack vector for :visited link-based history interpolation

- ... Tie that to user-initiated navigation and not be completely JavaScript controlled

- Scott G: One other big blind spot for SPA nav, RT don't show up until they're complete, because we don't know the beginning.

- ... User-space solutions may help

- Yoav: Aligns with what Nic was asking for with more network awareness in APIs

- ... I'm skeptical with userland implementation, unless it's app-specific if you instrument it fully with ElementTiming and such

- ... Harder for RUM providers to generically pick up from any app

- ... Require a browser implementation, hopeful that timelines won't be too long

- ... Typing as fast as I can

- Steven: Clearly a need for a concept of a reset, each MPA navigation you get LCP, FID, etc. In SPA you can't get them

- ... I decide now it's called interaction/navigation/transaction, reset

- ... In our use-case, we have a big SPA. We have concept of our own navigation, when we decide click of user was a navigation, we reset all of our measurements. We don't just want to measure soft navigation, but also interactions. Tab within subtab.

- ... Because we're measuring at navigation level, we'd miss measurement of sub-tabs, but sometimes those are very important. We've moved to the aspect of "transaction". Ideally we want to measure every click. You can almost forget concept of navigation, and you can measure every interaction

- ... To me I would not be opposed to every click or tab we'd reset measurements, and we the developer would send it back for reporting

- Yoav: What would you measure after that reset?

- Steven: CLS, FID, LCP, usage of JS thread, how long did it take when user clicked

- ... Sometimes we get stuck at the navigation concept, and we may another measurement down on the page somewhere

- ... A reset mechanism we could call on every click would be more useful for app developers

- Yoav: I would argue you can already do all those things, i.e. FID based on event timing. CLS based on layout shifts.

- ... For LCP I'm not sure what it's meaning would be if we don't have the concept of tying those paints to the click

- ... Responsiveness API would solve your problem for interaction-related things

- ... Hoping to solve for navigation with similar mechanism

- ... LCP I don't know if it makes sense to have user-based mechanism

- ... AI to post issue to minutes and we can discuss

- Nic: Awesome we can ask for something in one presentation and have a proposal in the next. Would help with SPA monitoring and LT monitoring. Would be powerful

- Pat: In SPA, for LCP, hard to know if DOM is changing

- ... For Chrome, trying to avoid gaming of metrics and making them consistent across all sites

- ... Don't want it to impact search

- ... Back to ResourceTiming events, something we do in synthetic a lot, looking at when the page finished based on network activity. We see when requests are started, completed, etc.

- ... We can wait for 2 seconds of idle after things finish

- ... Would help in the generic case but also the app-specific case

- ... e.g. we have specific widgets are kicking off activity and want to know when that's done

- ... I don't know if the network timeline currently as it's modeled would support something, but if we had incremental updates as request is issued, cors-allowed, complete would help that case

- ... Paint linking back to user input is absolutely critical thing to pull out and tease out separately

- ... Other things that are LEGO building blocks to measure SPAs well generically and specific cases

- Dan: I would also like to add that I would love to have an SPA compatible LCP event, moreso FCP. For example suppose what triggered it is a button that clicked, so the button repaint could be FCP. Think carefully when measuring the "first" paint. LCP may not want to track before some network response finishes. Not exactly clear. Reason FCP is so important is I've seen a lot of SPAs where there's no visual indication that something has been clicked for a very long time, where MPA the browser itself gives indication.

- Yoav: We can take the last correlated paint as the first paint. App History API has a definitive end when the developer resolves the Promise, vs. History API where it’s not known when it finishes

- Michal: Clarifying question for Scott - do you think we should start experimenting with resetting timers in parallel to trying to automatically detect soft navigations?

- Scott G: Both in parallel is what I was thinking about, the problem with automatically measuring is that it may take a long time for all browsers to implement. We need something to fill the gap while a proper solution is baked in and becomes a standard for everyone.

Animation smoothness - Michal Mocny

Summary

- Follow-up from a recent presentation on Animation Smoothness.

- Discussed evaluation criteria for animation frame visual completeness vs. animation smoothness, the states a single animation frame can have, and what that means for the observer.

- Discussion involved making sure the metric is accessible to less-technical folks, emphasized the importance of attribution, as well as a request to get the expected device refresh rate.

Minutes

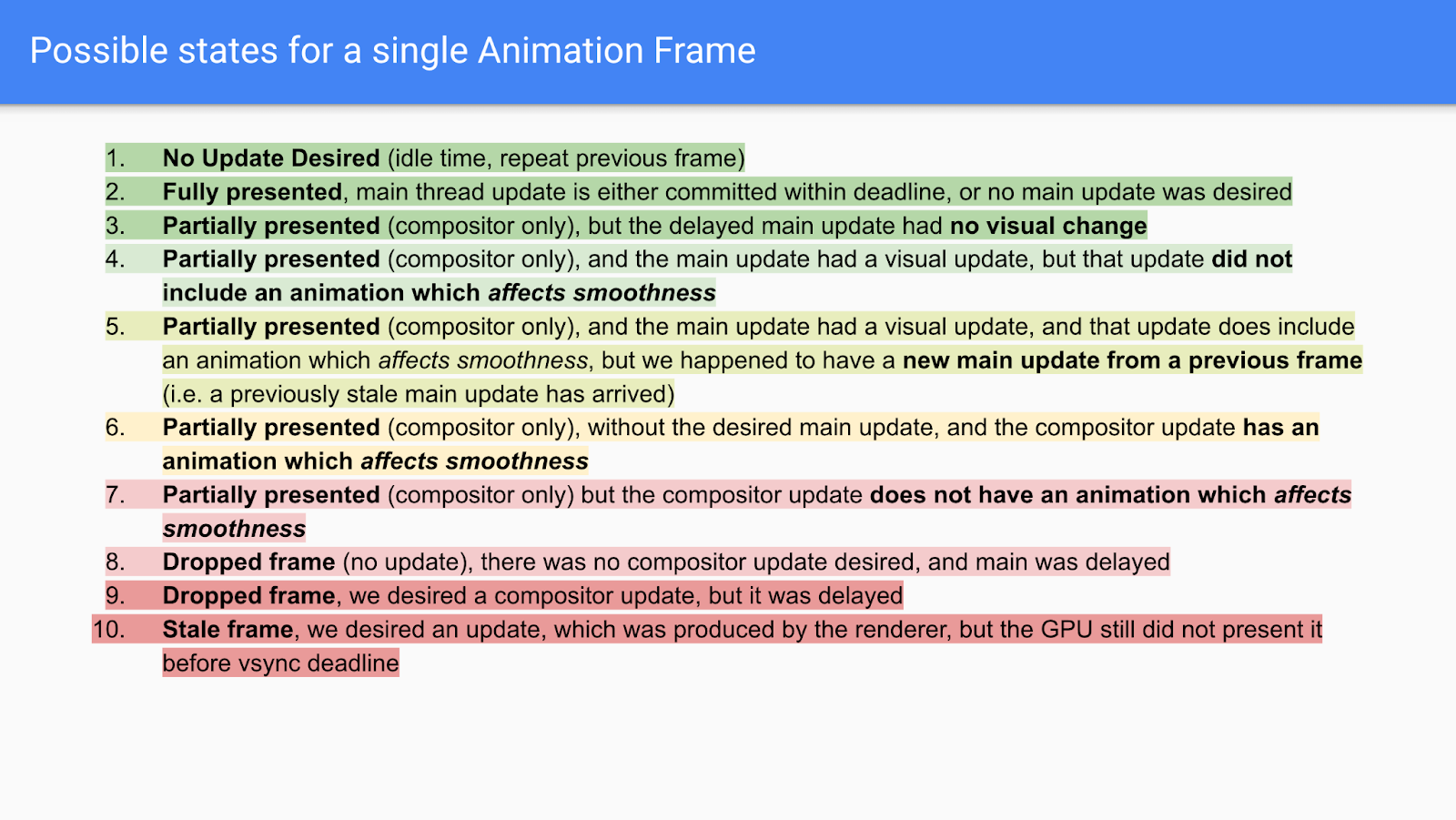

- Michal: We talked about this last year and I've recently presented, so I'm not going to go over the full thing, but links to previous dive

- ... Rather get feed back from folks

- ... Today: Frame Completeness vs. Animation Smoothness

- ... Possible states for a single Animation Frame

- ... What is Animation Smoothness?

- ... Goals i to deliver complete animation frames in a timely manner

- ... 2nd goal is to identify frame updates when they matter, i.e. no jank

- … Frame completeness vs animation smoothness

- … a single animation frame is not necessarily presented or dropped

- … there may not be a rendering update

- You can have a partially presented frame

- … Even if you produce an update you can miss content (checkerboarding)

- … quality vs. quantity (e.g. low bitrate smooth video)

- … Difference between some updates and animation updates

- … So, we have to start by detecting active animation

- … try to direct web developers towards composited animations

- … So for a web metric, we need a way to classify if a given frame factors into smoothness

- .. Main thread affecting smoothness

- <slide>

- Compositor

- <slide>

- … So we don’t know immediately if an update impacts smoothness. But we have to wait for the LT to complete before we know if all its frames were delayed or not.

- So tried to create a simplified diagram

- Conceptually a frame is not a boolean, but a fractional, probability value

- NoamH: I really like the breakdown. Very technical discussion that requires browser architecture understanding. How do I communicate this up to less technical folks? Can we have a definition that doesn’t rely on internals?

- Michal: Great question. Wanted to show y’all the details and then we can discuss simplifications. Made some API proposals in the past.

- .. Our job to put this all together. For each animation frame, we can label for completeness and smoothness

- .. Or we can combine them to a score

- … Important to think about average throughput

- … But sometimes also important to minimize max latency

- … Want developers to focus on the situation and adapt to it.

- NoamH: It feels to me that, given different frame rates on different devices, it’s important to have a framerate that matches the device.

- .. Maybe we can have an API that enables us to define what’s the level of smoothness that we desire?

- Michal: In some earlier version, average throughput was saying “you’re penalized on every missed opportunity”. Didn’t handle the case where higher frame rates mean you have more opportunities to miss.

- … But if look at frame to frame latency, lower framerates give you more time to get it right.

- … Users with higher framerate requirements can have different bars

- NoamH: Is there an API to expose the current refresh rate?

- … The desired refresh rate may depend on app type: e.g. Game vs. business

- Michal: Attribution? Lab tooling give you animations by type

- … common issues:

- ..animation on the main thread + long tasks

- .. Or compositor animations that overwhelm the GPU

- … So could be completely unrelated to JS

- … So even knowing these things can be useful

- …. So maybe we can expose such top causes

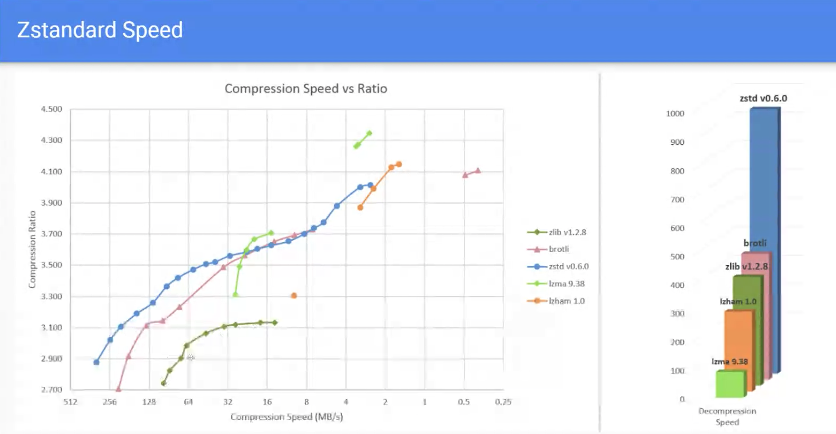

ZStandard in the Browser - Nic Jansma

Summary

- Zstandard has interesting characteristics of being relatively high compression and relatively low CPU cost

- Having the browser support it as an Accept Encoding could provide a benefit for CDNs and Origins that want to compress their dynamic content with higher compression than gzip but less CPU cost than Brotli. If supported by JavaScript Compression API, uses include uploads, beacons, profiling data.

- Interest from other members. Interesting idea to expand Beacon API to request it compresses uploads first. Concerns from browser vendors around binary size, needing to update Compression API to let you specify level.

Minutes

- Nic: zstd is a recent compression algorithm, pushed by the Facebook team

- … want to go over use-cases and background, and talk about maybe adding support for the web, in the browser

- … I’m not an expert on zstd, just a user.

- … May be interesting in the contexts of compression streams, on top of content-encoding

- … published by FB, adopted across the web in tooling

- … Not much web server support for it, there’s maybe an nginx module

- … Not necessarily supported by CDNs, but in many custom client-server set ups

- … At Akamai have been using it for at-rest storage

- … It’s faster than gzip with smaller files

- … experimenting with it internally, and think that the browser use-case can be a good fit

- … zstd published benchmarks

- Zlib is limited in compression ratios, and loses speed fast

- Comparing speeds, zlib is slower per compression ratio compared to brotli and zstd

- In comparable speeds, zstd is better than brotli

- If we’re comparing compression ratios, it’s also faster than brotli

- Diff between zstd and brotli (on the lower compression levels)

- Decompression speeds are not worse than brotli and zlib

- Precompressing static content gives better compression than gzip, but it’s similar to brotli, and is very CPU intensive

- Where zstd would shine is with dynamic content: HTML pages, API call JSON, etc