WebPerf WG @ TPAC 2022

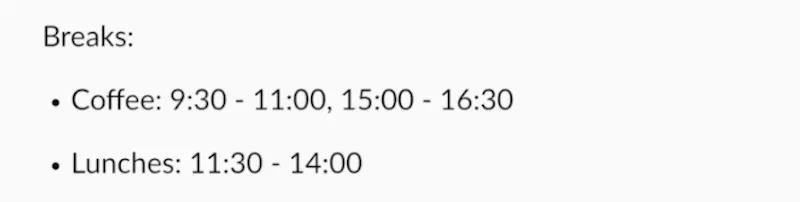

Logistics

Where

Monday: North Tower - 3rd floor - Junior A

Tuesday: South Tower - 4th floor - Granville

Thursday: Residential/Concourse Tower - 3rd floor - Finback

Friday: North Tower - 4th floor - Port McNeill

When

September 12,13,15,16 2022 - 9am-12pm PST

Registering

- Register! https://www.w3.org/register/tpac2022

- Register by Aug 05 for the Early Bird pricing

- Health rules: Masks on, daily COVID tests

- All WG Members and Invited Experts can participate

- If you’re not one and want to join, ping the chairs to discuss (Yoav Weiss, Nic Jansma)

Calling in

Attendees

- Yoav Weiss (Google) - in person

- Nic Jansma (Akamai) - in person

- Barry Pollard (Google) - in person

- Patrick Meenan (Google) - Remote

- Jeremy Roman (Google) - in person

- Sean Feng (Mozilla) - in person

- Simon Pieters (Bocoup) - remote

- Michal Mocny (Google) - in person

- Amiya Gupta (Microsoft) - remote

- Rachel Wilkins (Bridge and Switch) - remote

- Ian Clelland (Google) - in person

- Andrew Comminos (Meta) - in person

- Scott Haseley (Google) - remote

- Kouhei Ueno (Google, Tokyo) - in person - near the door

- Paul Calvano (Etsy) - remote

- Nolan Lawson (Salesforce) - in person

- Noam Helfman (Microsoft) - remote

- Dominic Cooney (Meta) - in person

- Andy Luhrs (Microsoft) - remote

- Benjamin De Kosnik (Mozilla) - remote

- Steven Bougon (Salesforce) - remote

- Theresa O’Connor (Apple, TAG) - in person, just for the PING joint session

- Charlie Harrison (Google) - in person

- Jeff Jaffe (W3C) - part-time in person

- Aram Zucker-Scharff (The Washington Post) - in person, early departure

- Alex Christensen (Apple) - in person

- Andy Davies, SpeedCurve - remote

- ... please add your name, company (if any) and if you’re planning to attend remotely or in person!

Agenda

Times in PT

Monday - September 12

Timeslot (PST) | Subject | POC |

9:00-9:30 | Intros, CEPC, agenda review, meeting goals, introspection | Yoav, Nic |

9:30-10:00 | Noam R (Yoav) | |

10:00-10:30 | Domenic | |

10:30~10:45 | Break | |

10:45-11:00 | Amiya G | |

11:00-11:30 | Michal | |

11:30-12:00 | Barry | |

12:00-13:00 | Lunch | |

13:00-17:00 | Webperf Debugging Workshop (in-person) | |

17:00-17:30 | Mark Nottingham | |

17:30-18:00 | Fergal |

Recordings:

Tuesday - September 13

Timeslot | Subject | POC |

9:00-9:30 | Noam R (Ian) | |

9:30-10:30 | APA joint meeting: Accessibility and the Reporting API | |

10:30~10:45 | Break | |

10:45-11:30 | Alex C | |

11:30-12:00 | Yoav |

Recording:

Thursday - September 15

Timeslot (PST) | Subject | POC |

9:00-9:45 | Responsiveness @ Excel | Noam H |

10:00-11:00 | PING joint meeting:

| Yoav |

11:00-11:15 | Break | |

11:15-12:00 | Colin |

Recording:

Friday - September 16

Timeslot (PST) | Subject | POC |

9:00-9:30 | Nic | |

9:30-10:00 | Yoav | |

10:00-10:30 | Amiya | |

10:30~10:45 | Break | |

10:45-11:30 | Performance beyond the browser | Lucas |

11:30-12:00 | Yoav |

Recording:

Sessions

Intros

Summary:

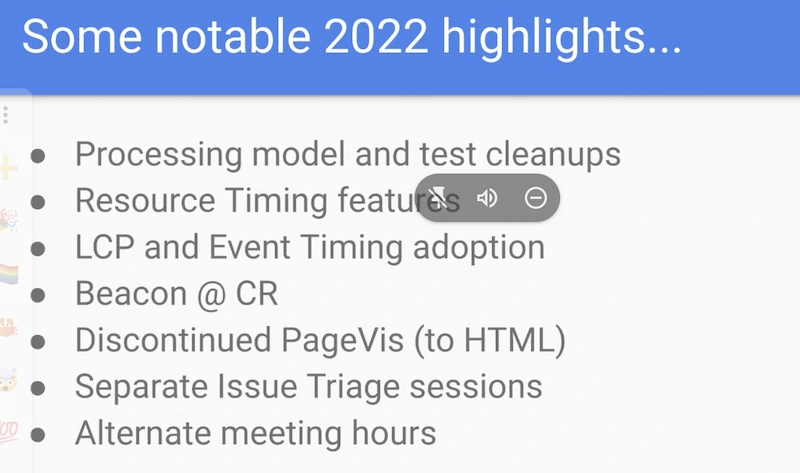

- Review of 2022 highlights, including processing model changes and features added to ResourceTiming, adopting LCP and EventTiming, and moving some specs to HTML

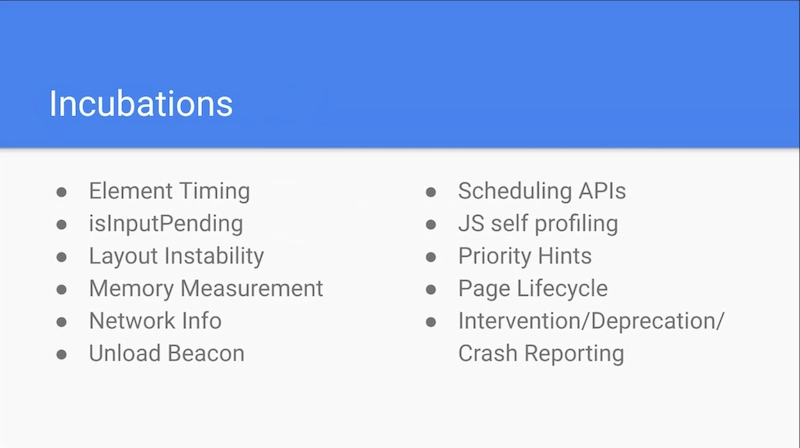

- The Working Group continues to discuss WICG incubations, and some of these have been adopted in the past (future session discusses rechartering and these incubations more)

- Review of Code of Conduct, Health rules and Agenda for the week

Prefetch processing model

Summary:

- Proposal - `<link rel prefetch>` would be only for same-origin document prefetches, and same-partition subresources. We’d remove the “5 minute rule” and apply regular caching rules. (other than ignoring `Vary: Accept` at consumption time.

- An “idle priority” preload can replace some of the current uses for same-document prefetch (even if preload is mandatory, and prefetch is not)

- Dropping the “5 minute rule” would reduce some current usefulness for same-origin navigation prefetches. We should look into the impact, and if we go ahead with it, tell developers about it (and have them e.g. use stale-while-revalidate)

No-Vary Query

Summary:

- General positive reception.

- Points raised during discussion:

- Be sure this gets surfaced to HTTP WG to maximize the likelihood this gets used throughout the stack, not just in browsers.

- Some worry about people losing their ability to cache-bust if they accidentally use No-Vary-Query: *. A number of possible mitigations were mentioned.

- Unclear how `No-Vary-Query: order` treats differing values, e.g. ?a=1&a=2 vs. ?a=2&a=1. The explainer needs to be clear and maybe add different variants.

Element Timing and Shadow DOM

Summary:

- Element Timing API is an important step forward for pages to observe rendering performance

- Unfortunately Element Timing does not support Shadow DOM; pages using Web Components cannot use this great API

- Largest Contentful Paint does include Shadow DOM elements in its calculations, which is seemingly inconsistent behavior; we should work towards ensuring Element Timing can support Shadow DOM

- Discussion:

- LCP spec relies on Element Timing, so has a similar issue (even if Chromium implementation diverges)

- We should fix this and there are multiple options to do so

- Continue discussion in a followup call

Exposing more paint timings

Summary:

- Multiple specs refer to “paint timings”: Paint Timing, Element Timing, LCP, Event Timing… summarize the different ways there timings are currently specced and how they differ

- Showcase an example, using FCP and Image decode (sync, async, decode()) how there are interop issues across all browser implementations.

- Propose that there is no single best time point that works for all use cases, instead, we should expose multiple time points with unique names (as we already do with loadTime + renderTime, and as we already plan to expand with firstAnimatedFrameTime etc)

- Discussion

- WebKit implementation is being modified and observed differences may change

- Firefox aren’t interested in exposing “commit to GPU” timestamps, due to privacy concerns

Super early hints

Summary:

- Proposal to allow early connection hints, perhaps through SVCB DNS header, to allow frequently used domains (e.g. asset domains, or image CDNs) that are used on most pages, to be initiated at as soon as connection is opened, rather than wait for first response to HTML response (either through 103 Early Hint preconnects, or within HTML response).

- Proposal was positively received (Cloudinary expressed interest).

- Next steps: discuss with SVCB spec owners if that is a good place for this. Email thread started.

CDNs and resource timing info

Summary:

- Proposal to add cache and proxy information to resource timing, to allow better measurements of CDN (and similar) performance (compared to synthetic measures used today). E.g., cache hit latency, miss latency, hit ratio. Next step: develop a proto-spec.

Unload beacon

Summary:

- Proposal to provide a web API that reliably sends queued requests on page discard.

- Clarifying API behaviors: a single PendingBeacon object represents a request, backgroundTimeout vs timeout.

- Discussions around API’s capabilities: e.g., can it be abused, how long should a beacon stay alive, etc

Resource timing and iframes

Summary:

- Presentation on the cross-origin information leaks made possible because iframe resources are included in resource timing.

- Proposals to remove them or reduce the information obtainable through that API.

- Discussion brought strong preference towards “option 3”

- “Do something different between same-origin/TAO frames (measure first navigation and report as completely as it can) and cross-origin/no-TAO just gets start and time of the load event”

- There’s some hesitance RE it throwing the complexity on API consumers. Need to better understand that cost

- Aggregated reporting may save us in the future

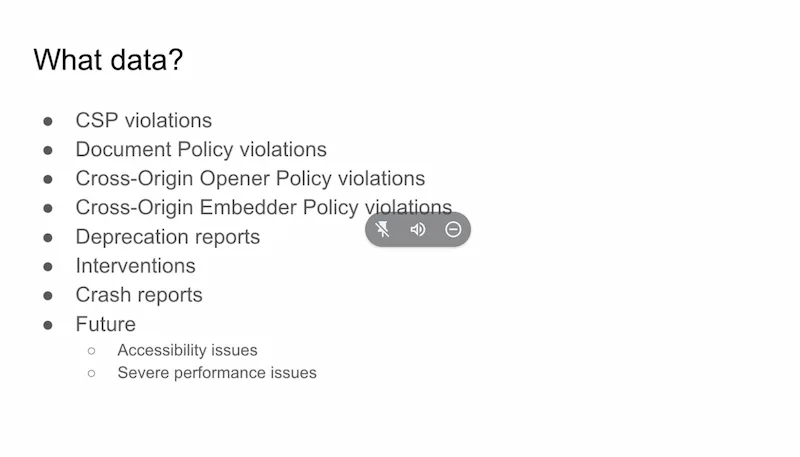

APA joint meeting - A11y and the Reporting API

Summary:

- The APA would want Reporting API to have reports on automatically detected a11y issues, user reported a11y issues, slow loading sites

- Such reporting would need to take user privacy into account - there’s tension between user’s need for accessible/adapted experience and not revealing themselves as AT users

- There’s potential interest in satisfaction survey reporting

Server Timing and fingerprinting risk

Summary:

- Server Timing enables a passive cross-origin resource to communicate information to the Window, that can then be read by other cross-origin scripts run in the top-level document’s context.

- Apple wants the UA to be able to not expose the info across sites (TLD+1), and potentially across origins.

- Same question for transferSize, encodedBodySize, etc

- Agreement from RUM collectors that same-site Server-Timing and sizes is better than none.

Aggregated reporting

Summary:

- Proposal - we could safely expose some cross-origin data if we only do that in aggregate, by relying on Aggregated Reporting.

- Need to be careful not to expose business data of embedded pages - that’s different from current threat models, where we protect user data

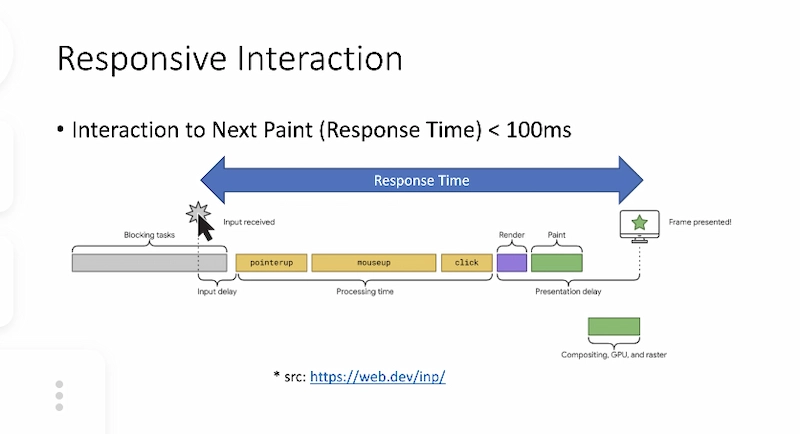

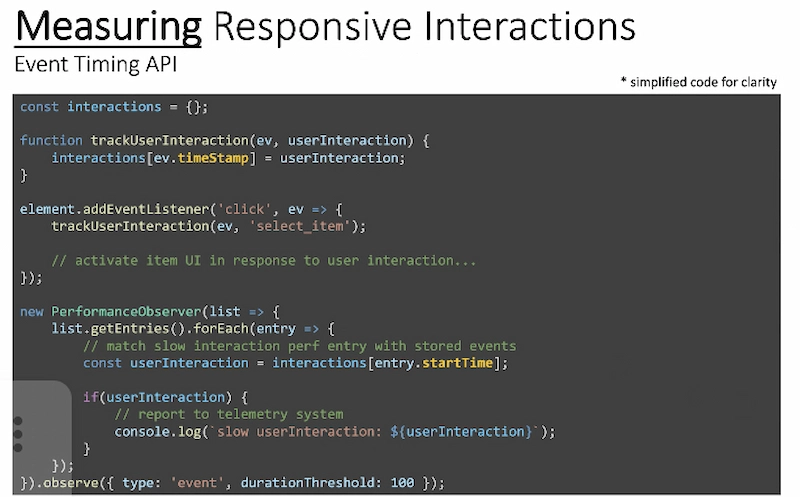

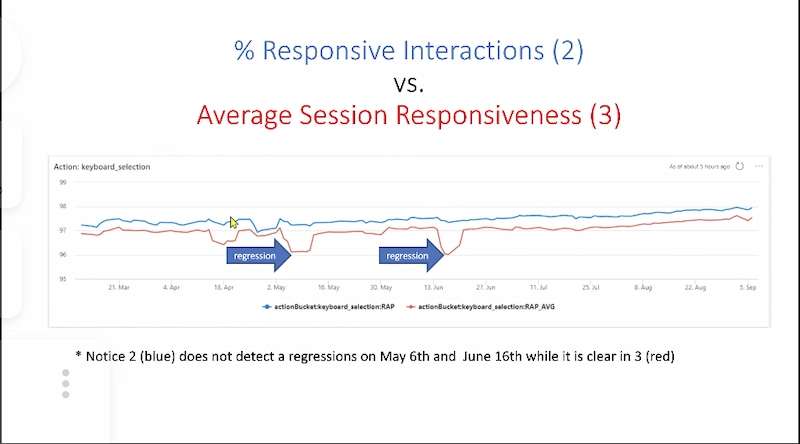

Responsiveness at Excel

Summary:

- This talk goes over the journey Microsoft Excel Online went when trying to find an effective user facing responsiveness metric.

- We explain how we measure responsiveness on the client using Event Timing API and then the different ways we explored to utilize the measurement to define a new responsiveness metric which is effective in detecting regression and has high reliability.

- We show how using the Average Session Responsiveness metric turned out to be a very effective path and propose an hypothesis why that is.

Joint meeting with PING

Summary:

- Discussion on the principles around opt-outs for ancillary data, vs. ancillary transport mechanisms.

- Some agreements, e.g. turn off reporting when JS is disabled

- Some disagreements, e.g. whether we should provide an opt-out for the transport mechanisms, that’s equivalent to a “do not monitor me” bit

- We’ll continue the conversation

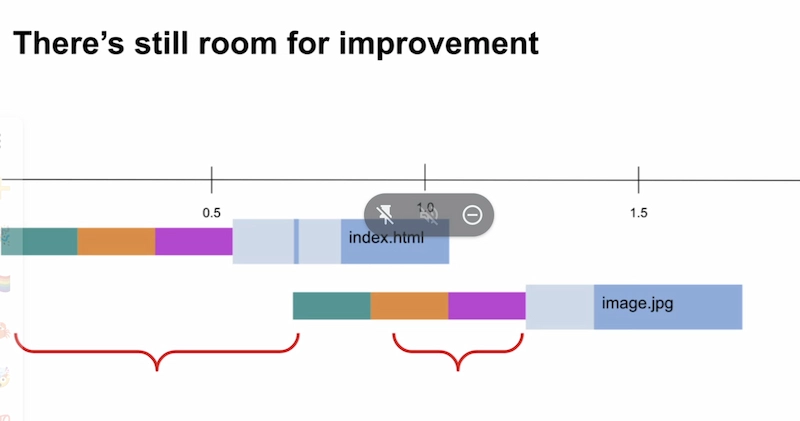

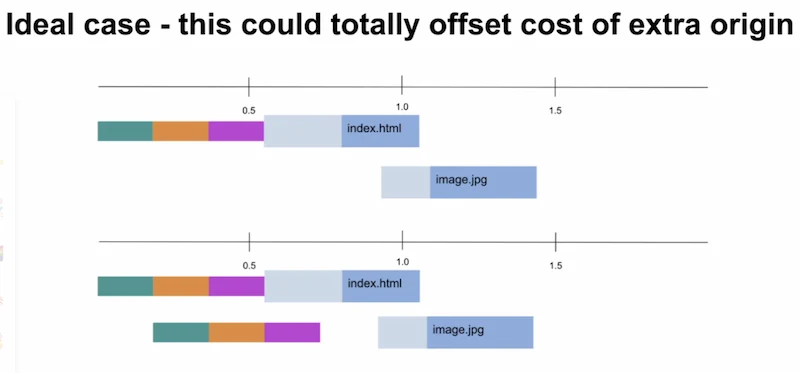

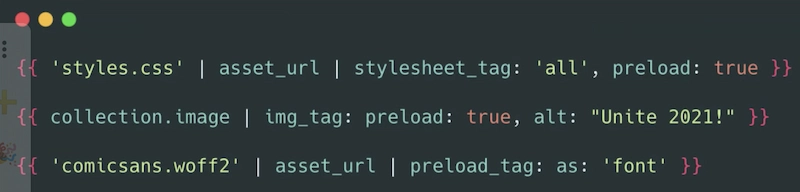

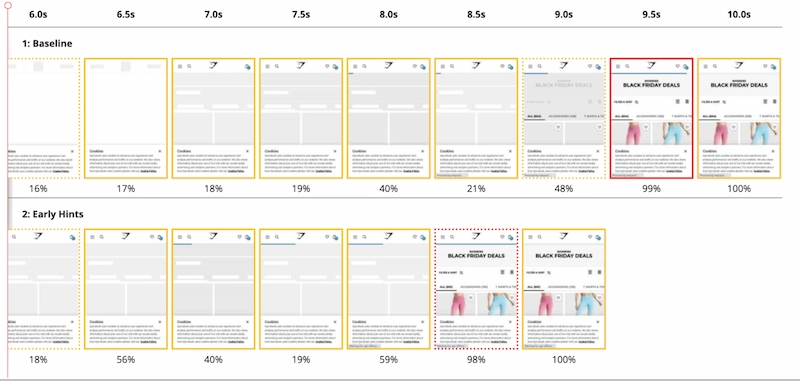

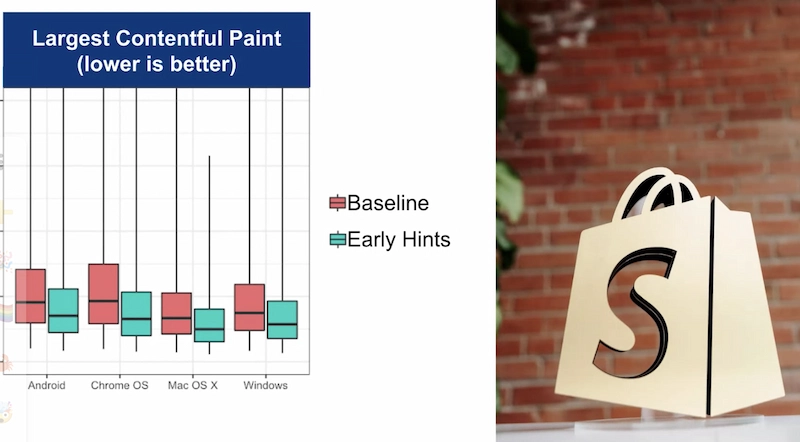

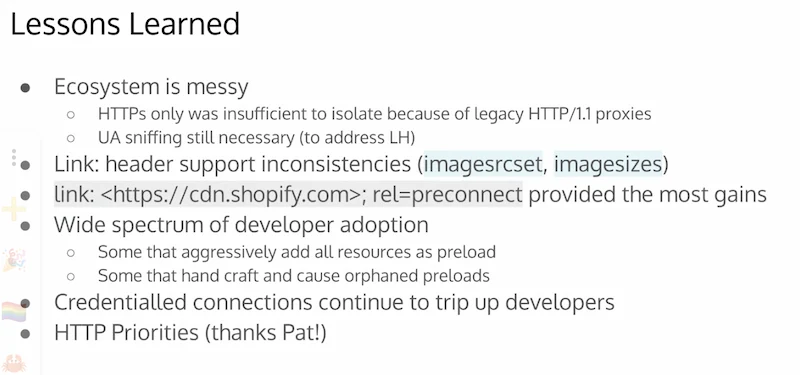

Early Hints - lessons learned

Summary:

- Mileage varies wildly - early Hints & Resource Hints still require context and developer intention to get it right.

- Most of the gains Shopify saw were from pre-connect and unblocking the tcp+tls bottleneck

- There are a number of outstanding issues to be resolved: reporting the initiator, responseStart definition, and sub-resource early hints

Rechartering

Summary:

- Need to re-charter for 2023 (2021 charter expires in February 2023)

- A few specs have been moving out of WebPerfWG and into HTML, this is good

- We've recently brought in EventTiming and LCP

- Discussed barriers to Layout Instability

- Considering bring in parts of ElementTiming into LCP and parts into PaintTiming

- No other incubations being adopted

Sustainability and WebPerf

Summary:

- There’s a bunch of overlap between web performance and sustainability

- We don’t currently expose information on server-side energy consumption, but maybe we should

- Discussion on how that information can be exposed, what more can we do

- Might be worthwhile to add to the charter

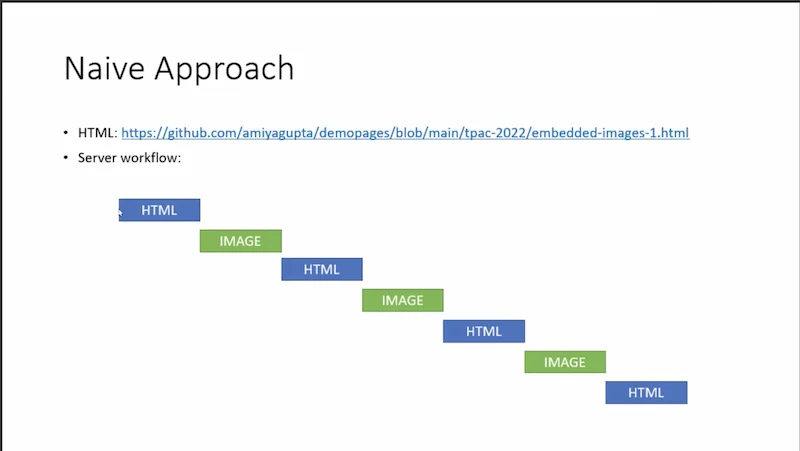

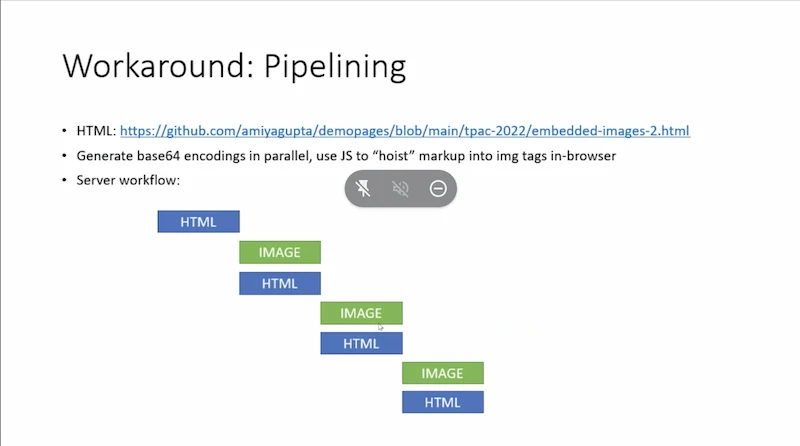

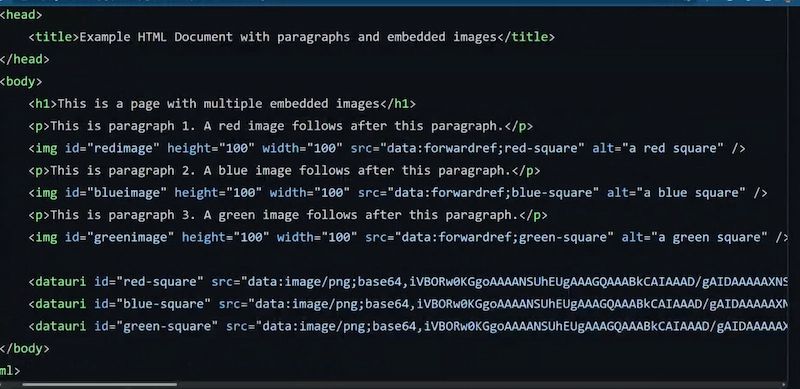

Forward referenced data URIs

Summary:

- For a certain class of web pages that render dynamic and highly variable content (e.g. search engine result pages), embedding images using Data URIs is the most efficient way to deliver those images over the network because the benefits of browser caching are minimal

- Data URI payloads need to be declared where they are used

- Dynamically generating base64 encodings for these images on-the-fly is expensive and causes a server-side bottleneck if done serially. Instead a common approach is to append these to the end of HTML sections and hoist them into the appropriate img tags using JS. This JS should also be inline since it needs to run quickly.

- What if there was a way to provide a forward pointer to a Data URI payload that appears later in the page? That would enable generating them in a non-blocking fashion on the server and eliminate the need for inline JS to implement this. Also it would cut down on redundant Data URI payloads such as 1x1 placeholders which currently need to be declared repetitively.

- SVG does potentially support this already so a solution may be to wrap the embedded images inside an SVG

Performance Beyond the Browser

Summary:

- Web Performance WG work might be applicable to a slightly more broad set of environments than just the browser such as edge compute (think node.js, deno, Cloudflare workers etc).

- Spec tweaks, possibly motivated by new requirements or other constraints, might help adopt existing designs to the environs where they find perf measurement valuable.

- The WinterCG, is helping codify the considerations in W3C, so we cross pollinated in the session.

- The session was closed with brief discussion on a variety of analysis concerns sitting just outside the envelope of browser perf monitoring - hars, netlogs, NEL.

Task Attribution

Summary:

- TaskAttribution has a bunch of potential use cases - SPA heuristics, LT attribution, privacy protections

- For privacy protections, we’d need to think defensively, and protect against code polluters

- Discussion around traces and if the WG should also cover cross-implementation lab tooling related efforts

Minutes

Monday 9/12

Intros, CEPC, agenda review, meeting goals, introspection - Yoav, Nic

Summary:

- Review of 2022 highlights, including processing model changes and features added to ResourceTiming, adopting LCP and EventTiming, and moving some specs to HTML

- The Working Group continues to discuss WICG incubations, and some of these have been adopted in the past (future session discusses rechartering and these incubations more)

- Review of Code of Conduct, Health rules and Agenda for the week

Minutes:

- Nic says hi

- Mission Statement: “Provide methods to observe and improve aspects of application performance of agent features…”

- Highlights from 2022

- 249 Closed Issues, yay!

- Rechartering talk on Friday

- Incubations:

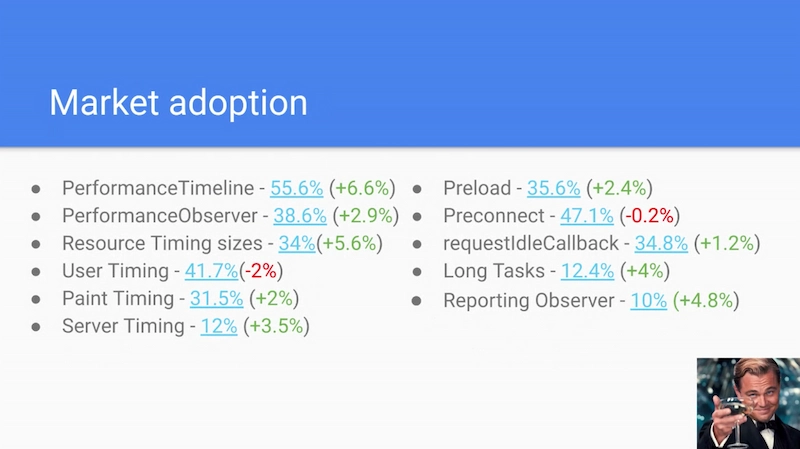

- Market Adoption!

- <Insert Humour here>

- Quick TPAC Schedule review

- 2 additional slots in the late afternoon for APAC folks

- CEPC overview

- Presentations will be recorded; please do not interrupt to prompt for questions until the presentation is complete so that we can record. (same as WG meetings)

- Health rules

Prefetch processing model - Noam/Yoav

Minutes:

- <Congratulations Noam & family!>

- Yoav: Trying to "unbreak" the model

- ... What is prefetch used for?

- ... Used to prefetch HTML for docs the user may soon navigate to

- ... At the same time, for low-priority resources that are likely to be used on the current page but don't want them to get in the way (CSS that is relevant for lower parts of the page)

- ... There are a lot of differences in how vendors implement

- ... and when trying to test the current behavior

- Chromium - Prefetch works well for same-site navigation as well as subresources

- ... For cross-site documents there is an undocumented codepath (hack) where as="document" puts the resource in the resource's partition rather than the site that's initiating it

- ... One way to solve cross-origin navigation

- ... Resource survives in cache for 5 minutes without the need to re-validate unless the caching headers are restrictive/no-store

- … special headers for signed exchange support

- Gecko - special prioritization for prefetches after load event

- ... No specific cache semantics

- ... X-Moz Prefetch header

- ... (Chrome has a Purpose header)

- WebKit - it's behind a flag (off-by-default)

- ... Simple low-priority fetch

- Issues: 5-minute rule is getting in way of caching semantics and can cause correctness problem

- ... Major differences between same-site and cross-site behaviors

- ... Different accept headers can break caching / Vary: Accept

- Noam has a spec PR html PR#8111

- ... No special timed (i.e. ~5min) cache semantics

- ... No as=document

- ... Send “Sec-Purpose: Prefetch”

- ... Ignore “Vary: Accept” when consuming prefetch

- <Discussion!>

- Anne (Apple): Wondering about Sec-Fetch-* headers, are they included in Prefetch?

- Yoav: They would have to be, we need to figure out what the value would be

- Domenic: The value would follow normal rules, would we need to vary Sec-Fetch-Dest

- Anne: Some implementations that might go to the HTTP cache

- Yoav: You'd need the HTTP cache to address that

- ... Some HTTP caches are shared system wide

- Domenic: To clarify Noam's motivation is that there's a lot of content in the wild that doesn't have `as` attributes.

- Yoav: We’d need to define `as` attribute since it's not really defined today

- ... And have everyone adapt their prefetches

- Domenic: Destination is just prefetch right now

- Yoav: yeah but it's not the consumers destination

- Dan: Noticed there's no explicit reference in presentation to data-saver mode

- ... As far as I know prefetch does nothing in data-saver, we should be explicit about that in the spec

- Yoav: There was some spec language that prefetches are optional resource

- Dan: Seems to me we need to be explicit about data saver

- Domenic: Data-saver is not a spec concept, but a browser feature

- Anne: Should say UAs are allowed to do this if UA has preferences

- Yoav: Yes instead of specifying a specific mode that doesn't translate across browsers

- Dan: Mentioned use of prefetch for downloading low-priority content with assets on the same page

- ... These days we also have the option of preloading with low priority

- ... Any benefit for prefetch in those cases?

- Yoav: Also use-case of same-site subresources for the next page

- ... Load CSS or JavaScript for a likely next page the user will go to

- ... If you were to preload that, there are advantages if you have special cache semantics

- … but some of the use-cases could be addressed with low-priority preload

- Barry: Preload is mandatory

- Dan: Next page assets, prefetch is appropriate and preload is not

- ... Low-priority resources w/in same page, and that's what I'm asking about

- ... Is there any opinion on our part when talking about low-pri resources for the same page

- ... Qwik framework uses prefetch for this

- ... Should they be using low-priority preload instead?

- Patrick: Low-pri preload is generally a lot higher priority than prefetch (in Chrome)

- ... So need to define another idle level of priority

- ... Firefox loads 1-at-a-time after onload

- Dan: You're saying there's low and even lower

- Patrick: CSS in Chrome's scheme, with no priority it's the highest resource, but even low-pri CSS it's still a high-priority in Chrome, it's not idle priority

- Yoav: If we settle on needing to define 'as' attribute for all resources, maybe it's easier to add this extra priority, it's an idle resource

- Patrick: Biggest difference around optional, instead of a must

- Dan: In some engines, JavaScript gets parsed

- ... Based on Qwik’s usage pattern they should use preload instead of prefetch

- Michal: They switched to prefetch because parsing was the problem

- Barry: Also compat issue where everyone else could see it as a high-priority preload

- Dan: One more point, no special timing in part of the proposal, what does that mean in the context of cache revalidation

- Yoav: One thing would've been a "single use", cache can use it once

- Domenic: Proposal is it populates the HTTP cache and that's it

- Yoav: If you're prefetching no-cacheable resources this would do nothing

- ... Compat analysis Noam did, this isn't a thing that happens

- ... Avoid special cache handling semantics

- ... Tell developers to only prefetch cacheable things

- Dan: If there is a need for re-validation, we lose most of the benefit of prefetch…

- Yoav: Is there a reason why the content can't be cacheable?

- Dan: A lot of websites who currently have revalidation requirements for the HTML

- ... Not necessarily thinking about short-duration caching

- ... They basically just require revalidation, and when it's required, prefetching will have effectively no value

- Yoav: If HTML is non-cacheable or requires revalidation, speculation rules?

- Domenic: We don't have anything until Noam's proposal that is just populating the http cache

- Anne: Would this only work for same-origin things, or same HTTP partition?

- Domenic: It would just go in your current partition

- ... It could be useful if you embed a cross-site image on your page

- Yoav: But not for cross-site documents

- Anne: link rel=prefetch would no longer be useful for SERP results

- Jeremy: If you want to do a cross-origin fetch there's a lot of complexity

- Dan: If I understand correctly, if I want to avoid revalidation be explicit about it and set cache headers to avoid revalidation

- Yoav: Proposal, I'm not sure I fully agree with that conclusion

- ... You make a good point this is a significant downside for same-site document prefetches

- ... If we are keeping special semantics for speculation rules we can also keep them for prefetch

- Domenic: There's memory caches and HTTP cache, if you want memory cache use preload

- Jeremy: Situation most commonly seen on HTML resources, not subresources

- ... It's more likely the author will be able to update to change the Caching headers

- Dan: I really wish more browsers would implement stale-while-revalidate

- ... Any thoughts about bulk prefetches for resources for a page

- Yoav: No-state prefetch kind of thing?

- Jeremy: We have it implemented in Chrome, have done some experimentation

- ... Need to be fairly confident the navigation will occur because it takes so much more bandwidth

- ... Prerender is the other side of that, not clear if the intermediate point is valuable vs. just prefetching the resource

- Yoav: Next steps is discussion on the issue

- https://github.com/whatwg/html/pull/8111

- https://github.com/whatwg/html/issues/5229

No-Vary-Query - Domenic

Minutes:

- Domenic: Will scroll through the explainer:

- https://github.com/WICG/nav-speculation/blob/no-vary-query/no-vary-query.md

- … Probably will be renamed to No-Vary-Search

- … about making this faster due to cache misses due to query params (?foo=bar)

- … originally for prerender/prefetch, but may be widely application

- … HTTP cache, SW, shared worker, …caches

- Want more cache hits, fewer misses, may be nice!

- … You can use the header to:

- Ignore the order of the query params (wow)

- Ignore specific query params

- Please ignore everything, except specific parameters

- Should be clear why this is useful, but here are some use cases

- … Inconsistent referrer… maybe append random things to URL, swap order, etc

- … Customizing Server behavior… e.g. modify priority or load balancing or debugging customizations– but don’t affect the response. This metadata is not really part of the resource. Would be better to store this in headers, but you cannot always do that (and some web platform features don't allow it, e.g. iframes)

- … There are also queries that are used for client side processing… State tracking etc, Analytics data, login processes. In theory they could use fragments, but sites use queries e.g. because they’re used to them, or because they sometimes want to pass the same info to the server.

- … With preloading, prefetching and prerendering, sites may be interested in having that data client side. So, prerendering changes the calculus here

- … Specific to prerendering - the URL may contain data not yet determined during fetch

- … imagine an article with hero image and headline, and you want to store state of which was clicked (via=headline)

- … you want to prerender once, for either case, but you DO want to send the query params after activations

- something like “No-Vary-Query: via”

- Detailed Design

- ... Structured header

- … HTTP Caches, request vs response

- …preloading caches, today keyed by request URL

- …ServiceWorkert cache api, already has handling for Vary header

- …Other URL-keyed caches (list of available images, module maps, shared workers, like rel=preload)

- Not quite sure if we want to change the behavior of all of these

- … Not going to change the response

- …when you request a resource with a new URL with new query params that are ignored, the response will store the URL you requested. Not going to be modified

- …it's more complicated for prerendering… the current process is to activate with the initial URL, then on activation you have to history.repalceState with the new URL

- …Interaction with storage partitioning… doesn’t affect

- ... Redirects are complicated - the request URL vs. response URL, what if some redirects have the header and some don't (redirects can come from cache)

- ... Worked out some examples, user might see something they didn't quite expect

- ... If there is a redirect you want to put it on the original request URL, since that's what these are key'd on

- Anne: What if a redirect goes cross-origin, it'd be the final URL you'd use

- Domenic: SW cache, prerender cache, keyed on the request URL

- Anne: Aren't they keyed on the final URL?

- Domenic: I think they're keyed on the initial one

- Anne: Might be abused?

- Yoav: Keep for discussion at the end

- … More complex No-Vary rules

- … We could expand this in the future for regexes, case insensitivity

- … Option for the future: similar “No-Vary-Path”

- …Security perspective seems fine, and could be very useful for client-side applications

- …ServiceWorkers had to limit the flexibility for replacing paths, some sec issues there

- … And parsing paths is quite a bit harder and cannot re-use the same header rules as for No-Vary-Search

- …Referrer Hint

- …Optional: can we provide a <meta> version

- … could be difficult, but would help developers

- … <grumbling, please no>

- … security and privacy is probably fine :P

- … Origin is the boundary. Modifying query params is widely possible and done already, (history.replaceState etc)

- … For privacy, it sounds similar to link tracking at face value but it is very different. It's basically the opposite: treat two requests as the same, vs treat a single resource as different

- Anne: Did you discuss syntax

- Domenic: It's a structured header, use URL Search Params

- Dominic Cooney: Curious thought how this would be implemented.

- …How would it work if a bunch of requests are made which claim to not vary on search, but then the last response claims that it DOES vary, what happens?

- Jeremy: Create a canonical cache key? Is it different from Vary?

- ... Can't comment on any particular HTTP cache

- Domenic: This is at the explainer stage and not yet implementation stage, feedback from implementers so far suggests this seems doable

- Jeremy: Some CDNs offer something like this

- Domenic: Would be cool if CDNs used this instead of a custom thing

- Edward: Work on content delivery at CloudFlare. CF documentation does not give an indication on how they treat query parameter ordering. Query-string sort. Although in some cases it requires the request to cooperate with that. Query parameter ordering does matter in our particular case.

- Barry: If order doesn't matter, and you've got a parameter repeated, then which is it?

- Domenic: We've been thinking mostly on the key level, so I don't have a clear answer right away. Does order mean key order or key+value order, sort keys and values

- Barry: Related to Vary header which is not a structured header, could people use the quote and get it wrong since they're interlinked and have a different syntax

- Domenic: Good point, something to be sure about when implementing diagnostics

- Alex: If I have something in my cache which is a=b and I make a request for a=c, what would the response URL be?

- Domenic: The one that was requested currently

- Alex: Concerned about regular expressions

- Anne: Can we use URLPattern-safe subsets?

- Michal: The request URL would be replaced, so the order in Barry's question shouldn't matter, because it would be interpreted by the thing that interprets the response. If you don't ignore it, it doesn't affect i

- Yoav: When you have a request going out, you need to match it

- Domenic: If you say No-Vary-Query: X, then it doesn't matter that X is

- Jeremy: If you said No-Vary-Query: X, then it doesn't matter at all, but if you say No-Vary-Order: order, then it does

- Anne: You would ignore both right?

- Domenic: In this case you're not ignoring any parameters, just the order

- Barry: If i said country=CA and country=UA

- Jeremy: Conceptually it's an ordered multimap, some servers will do same things or different things depending on the order

- ... 95% of servers don't have repeated query parameters, so most use-cases won't matter

- Domenic: Maybe there should be a special case for multiple values, or I do care

- Dan: If you wanted to ignore a parameter called "order" would you include it in quotes?

- Domenic: Yes

- Kouhei: We should support a PHP get query param that uses the same key multiple times

- ... Common way to pass parameters [stackoverflow]

- Yoav: Requires web-platform processing model as part of fetch

- .. Assuming we'd want this outside the browser to various caches and CDNs etc

- Domenic: First focus is preloading caches but we think it'd be useful generally

- Do we coordinate with the HTTP specs? Or do this work in the WG first

- ... at least affects the caching spec

- Domenic: How would they feel about a webperf spec patching RFC?

- Anne: Is mnot around?

- Yoav: Would be valuable to get feedback from that community, at the same time I don't think this should block the web processing model. We can define the header.

- Domenic: We already modify the HTTP semantics a lot in Fetch

- Yoav: But not for other caches

- Anne: To reach maximum potential we want it not just in browsers but all intermediaries

- Yoav: Good to get feedback

- Lucas: +1 to what Yoav is saying, we don't need to define every header in HTTP land

- ... But the interplay here with server-side and CDN it needs wider review

- ... RFC linked isn't even the most current one right now

- ... Even if you want to do your own thing, having awareness of what else is happening is good

- Ian: None of the semantics of the query string are in HTTP? Is it all just convention?

- ... Lots of query parameters in the wild that might not be compliant

- ... For fragments?

- Domenic: We see some cases where servers process it. They would need to move ot client-side processing if they use this header

- Jeremy: In practice a lot the UTM headers are processed by client-side SDKs, a server could process but they don't

- Alex: I realize the point is to provide this type of thing, they use a random query parameter, and they can't get their content to go to the server.

- Jeremy: They could always use "except='my-cache-busting-paramter'"

- Alex: If you have the content already on a bunch of people's devices, how do you bust it?

- Anne: Should we do a "*" in the first version?

- Barry: With a warning?

- Yoav: Or a no-cache query parameter in the spec, a special that is always in the "except" list?

- Kouhei: On reload?

- Yoav: Cache-Control: no-cache on the request?

- Dominic C: Lot of history with AppCache, worth studying

- Tom: JavaScript that does replace-state, are you considering a client hint?

- Domenic: JavaScript works except in prerendering case, on prerendering change event we do replacestate, saying everything is changing

- Tom: Do you still have to send down the JavaScript that does the replacestate

- Domenic: No

- Tom: Do you know on the server if this has any impact?

- Tom: On the server you need to know if you're doing this or not, how do you know?

- Yoav: If this isn't supported you wouldn't do preloading at all

- Domenic: I would want to make sure to work through this example

Element Timing and Shadow DOM - Amiya

Minutes:

- Amiya: WebPerf eng at Microsoft, first TPAC

- … Revisit an old issue and share observations

- … Before ElementTiming, webdevs had no direct way to see when elements are painted

- … As a workaround, there were approximation

- … Observe an image used the RT API or a load handler, but those are not accurate

- … For a text element, might use an inline script tag. Have an inline script with a perf marker

- … requestAnimationFrame and other variants were also used to tell the browser to record a marker at the next frame

- … This is not totally accurate and potentially over report the number

- … Including the script tag may change the rendering behavior of the browser and introduce new paints

- … Element Timing fixed all this and got a way to get that info

- … Built a couple of pages to demo this:

- Shadow DOM: https://amiyagupta.github.io/demopages/tpac-2022/shadow.html

- No Shadow DOM: https://amiyagupta.github.io/demopages/tpac-2022/noshadow.html

- Source code: https://github.com/amiyagupta/demopages/tree/main/tpac-2022

- … Same page also implemented with Shadow DOM where the image is implemented as a shadow dom element

- … Element timing attribute on the outer div and inside the shadow element

- … In the regular page, all the ET entries are fired

- … With the shadow dom page, no element timing is fired for the image

- … You can’t observe external or internal elements to the shadow DOM

- … Wanted to bring the discussion up again, and move this forward

- … The behavior is by design because shadow DOM is isolated, but we can have elements that opt-in to being observed. Elements should be able to opt in

- … Implications of this: shadow DOM can’t use element timing

- … For msn.com: moved from React to we components, and lost element timing data (and are using hacks instead)

- … LCP still captures the shadow DOM element, but Element Timing doesn’t.

- … Seems like a mismatch between those two approaches, where the declarative approach doesn’t capture the full picture

- Dan: Never tested it, but LCP when recorded by CrUX looks at iframes, but not in the webperf API

- … with shadow DOM, what’s the element reported by LCP?

- Amiya: good question, we could try it and see

- Dan: The discrepancy with LCP seems weird.

- … Other point - Can you implement a performance observer within the context of the custom element?

- Amiya: Haven’t tried it, not sure that works

- Dan: Consider web components to be iframes light, so..

- Yoav: From my perspective, web components are not a security boundary, they're a way for developers to isolate code to make authoring easier, not to hide information from the page.

- ... You cannot run JavaScript in a different "world" in the component, still have the same window/PerformanceTimeline etc.

- ... No current way to get that info from inside that shadow DOM, at the same time I'm not sure what was the original reasoning to segregate to not report Element Timing to shadow DOM

- ... At very least the opt-in option seems reasonable

- ... If you're importing a component from an unknown author, you should still be able to opt-in

- Nolan: I haven't heard relevant issues, but two precedents. One is declarative shadow DOMs, includeShadowRoots=true, it will just pierce and serialize those recursively.

- ... The other is cross-root delegation spec, can't access ARIA attributes across boundary

- ... Either the onus is on the web component author to export, or on PerformanceObserver owner to say they want to pierce everything

- Yoav: Worthwhile to look at original reasoning

- Shuo: LCP and ElementTIming difference, in current spec LCP shouldn't include things in Shadow DOM.

- ... LCP depends on ElementTiming

- Yoav: At the same time I think we want LCP to include those

- Shuo: Not sure?

- Michal: If the whole page is a giant component in Shadow DOM and that's the only visible portion

- Dominic C: I worked on Chrome when we worked on Shadow Elements etc.

- ... Things should be consistent

- ... General philosophy is it's an encapsulation mechanism

- ... From that POV there's two good choices, outer element can have ElementTiming, constituent timings are opaque to the page. That's the low-fi version.

- ... High-fi version is the element can control interior and exterior timings

- ... Very sympathetic to this issue

- Yoav: To understand, in case there's no opt-in, we're just reporting the timing of the custom element, regardless of what's inside it

- ... If the element author defines a more granular mapping, we can define more granular things

- ... Best path forward is to schedule this for a longer session on one of the upcoming calls and make sure relevant people will be on that call

Exposing more paint time points - Michal

Minutes:

- Michal: Talking about PaintTiming and potentially exposing more

- ... Several specs that expose some concepts of paint timing

- … Several reasonable interpretations

- … Multiple time points are interesting and important to developers

- … No specific proposal, wants to drive towards clarity and consistency

- … Paint timing marks the point in time the browser renders a given document

- … LCP builds on that and element timing, exposes load time and a render time

- … Element Timing, same as LCP

- … Event Timing, start time of the event and the duration, which are effectively the render time

- … interop issues aside - how do you do paint timing and image decode

- Create an image and the decode async, sync or just wait on the image to load, decode and attach it at that point

- Async - image loaded, attached to the DOM and not wait for the image decode

- So user would see the render before the image is decoded

- Decoupled from when the image become available

- … The common case - decode sync

- Load the image, wait for rendering to happen, which will kick off a decode

- …In all browsers decode is async, we don’t block on decoding

- …But there’s no rendering until that decode is complete

- …Two different rendering tasks that will happen when an image loads - two rendering opportunities

- …Might depend on conditions on the page which one is used

- …That means that the timing that’s marked for images that are sync decode is the time they are attached to the DOM

- …There are differences between browsers and it’s complicated

- …If you use film strips, you see the differences

- …Differences in Firefox are pretty small, side effect of default async decode. So the FCP is for non-image elements of the page. Safari is default sync decode.

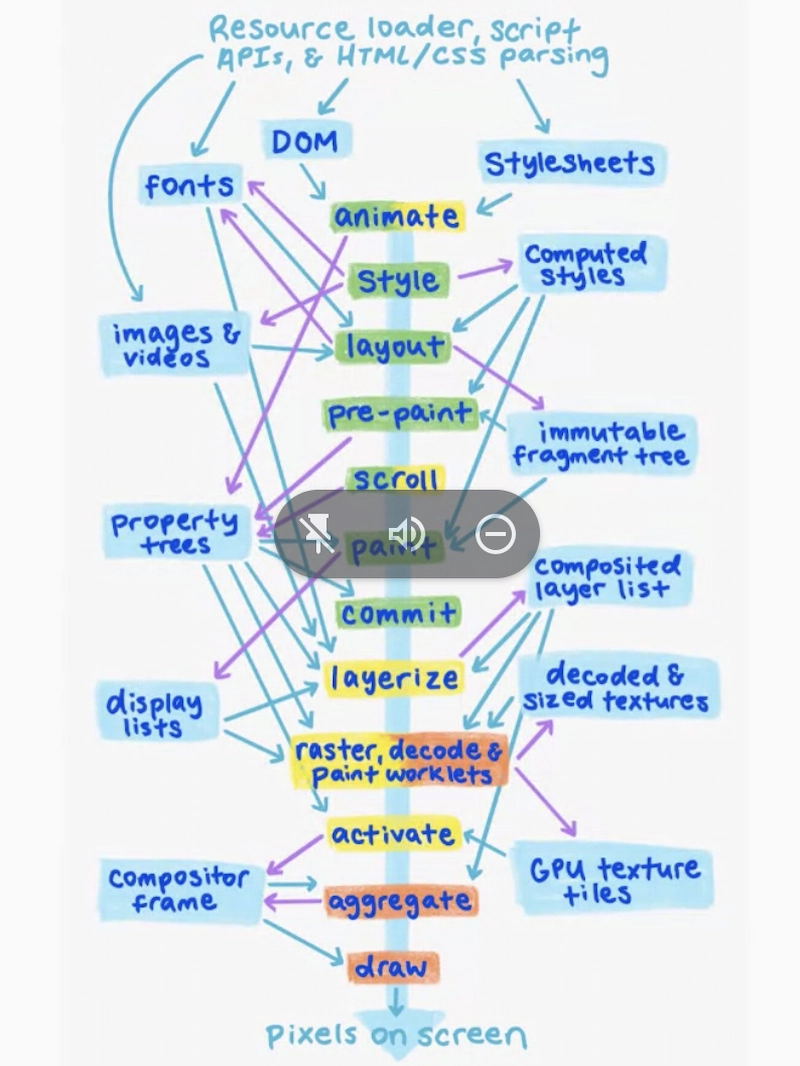

- …Why is this difficult? Linear process on how you do rendering

- … step 16 in “update the rendering” is pretty unspecified

- … In reality, this is Chromium’s rendering process

- … Firefox is similar

- … Summary from ChrisH on interpreting the rendering steps and how they are assumed to be serial but many happen in parallel. That’s possible because it’s not really observable, but the performance timeline violates that.

- … Discussion on whether this can be resolved differently. Original paint timing exposed something similar to Chromium, but changed due to spec issues

- … Focusing on Event Timing - The goal is to be under 100ms of interaction. Small differences in what’s measured make a big impact

- …Many things developers can do to shoot themselves in the foot

- …Even when you start a rendering task in the browser, it takes time before rendering actually happens: input delay, rendering work

- … Lots of things that can prevent a rendering opportunity from happening

- …So when we tell developers “render took a long time”, that’s a black box

- …Other issues with multiple timepoints: progressive images, FCP>LCP with non-TAO, render time decouples from load time (e.g. through JS)

- …Optimize LCP guide - element render delay shouldn’t take a long time, but it tends to be larger than expected

- …Summary

- Alex: From my perspective you're correct, complicated is a good way to describe it

- ... It's undergoing major changes right now in WebKit, things you observe may change

- Michal: I know you don't get accurate representation time for the system, but you at least know when that frame was submitted. Not well spec'd.may be hard to spec parallelism.

- Shou: For us we could know the time committing to the GPU, but we don’t want to expose that time due to privacy concerns

- Michal: For event timing, we're rounding off timings to 8ms. My understanding was that the amount of rendering work that happens vs. saying it took X frames to generate a result

- … Maybe we can solve the use case without exposing accurate timing

- Barry: Specifically for the FCP > LCP problem, should we fall back to FCP?

- Michal: That’s what we were suggesting. Chromium doesn’t expose the beginning of the rendering task. In this case, the earlier timestamp is more valuable

- Yoav: Are privacy concerns around visited links or other things?

- Shou: Building the complexity of the website, you log into a page reveals whether you were logged in or not. Paint timing can differ whether logged in or not.

- Yoav: You don't get paint timings outside of the context in which you see this information anyway, right?

Super Early Hints - Barry

Minutes:

- Barry: Proposal for even earlier hints for connections

- Many use cases for cross-origin requests - image CDNs, etc

- Extra origins have a performance cost - require another connection

- Gap between when the image is discovered and when it downloads

- Preconnect can minimize that gap. 103 response can help even further

- But it’s still never as fast as using the same origin as the HTML

- Using less origins is a best practice

- Everything on the same domain may not be practical

- Proxy requests through a domain - has security consideration, and feels icky. There’s also the backend connection cost

- Is there another way?

- Linking the resource request to the connection with 103, but it’s more of a connection-level setting

- For most cases, these secondary domains are shared across most pages on the origin

- Can we treat this as a connection level request?

- Gaps

- Until the first load, we’re not doing much else

- Front loading that work to that first gap would be better

- Could we tell with DNS, TLS or HTTP settings that this secondary connection is needed?

- Question re performance of the different options, but also has deployability differences

- Recently standarding HTTPS/SV/CB which may be best amongst all three

- Some concerns: footgun for performance reasons (over connecting), security/privacy, is this DNS’ role

- 2 questions for y’all:

- Is this worth pursuing?

- Preference RE the layer?

- Dominic: For web.dev that sounds great, but for a broader domains they could vary greatly

- Pat: Shopify that used Early Hints always have a static domain. Also Amazon. So this would be useful

- … If this was a DNS record, maybe this is a web-only addition

- … Strong preference to DNS because it’s easier to add

- Alex: Is there limit to how much data DNS can send

- Pat: Need to be careful of DNS for reflection attacks and DDoS

- Barry: Should be easy for people to do, but shouldn’t change every time you change your page

- Alex: If you have 300 domains, would DNS be upset about that

- Barry: Browsers can only use the first 1-2 values to prevent that

- Yoav: And what Alex is saying is that DNS could choke on that many values

- Barry: Only for preconnects, not preloads

- Lucas: Thought about it a bit, but we should be talking about new records. HTTPS specialization records allow that and we should look into it, and concerns should feed into considerations for those RFCs

- … We have a record for this, let’s use it

- Eric: Super interested in this, and the problem is well known. Can unlock some things worth pursuing. Aware of couple instances of similar things: Critical-CH

- Yoav: Accept_CH frames

- Barry: DNS may be easier to deploy than HTTP or TLS settings

- Nic: Could see CDNs handling these things for their customers

- Barry: Cloudflare offers 103s

- Nic: One challenge at the DNS layer, you don’t know the URL. So different parts of the domain may have different needs

- Yoav: URL knowledge (or lack of) are not DNS specific

- Ian: Do you expect it’d cascade?

- Barry: Yeah. Google Fonts as an example (CSS domain and font domain)

- Michal: Curious if browsers do any of this speculatively?

- Pat: Chrome has a network predictor, but it takes a while to learn, and that’s on a per-user, per-device basis

- … hit levels are only good for sites you visit a lot

- … would learn only for very frequent domains

- Barry: so smaller websites don’t benefit

- Amiya: Similar to what Pat said, IE used to do that. At least do a DNS lookup.

- Ian: Most people don’t run their own DNS servers, and the registrar does that for them. Some people run their own servers but not their DNS

- Pat: we could technically do both

- Barry: best to speak to HTTP/SV folks to see what their point of view

- Yoav: Might be worthwhile to query developers

- Barry: CDNs can provide a one click stop

Webperf Debugging Workshop (in-person)

CDN Resource-Timing info - Mark

Minutes:

- Proto-explainer

- Talking about a proposal - for a long time we didn’t have good ways to measure performance, and ResourceTiming came along and RUM gave visibility

- In the world of CDNs we’re stuck measuring perf using the older techniques - ping resources from data centers around the world

- What if we could collect better data about how CDNs are performing, so that users can make better decisions

- CDNs make lots of claims about their performance, or 3Ps do that, but it’s very synthetic

- In the HTTPWG we defined 2 new HTTP headers:

- Cache-Status - hit, miss, freshness lifetime

- Proxy-Status - this is why this proxy generated an error, etc

- Both those specs are based on structured headers, maps easy into JS/JSON

- The proposal is to expose the contents of those headers as JS as part of ResourceTiming (considering TAO, etc)

- Enables using hit/miss as dimensions

- Gives you a much better idea about how your CDN is performing (or your reverse proxy)

- I think folks are interested - question of getting browsers interested in implementing

- Because it’s structured headers, may not be too hard to implement

- Nic: Love this idea. Would be beneficial for people that want to capture the info

- .. With server timing you could choose to communicate the same info

- … Some CDNs are using the ServerTiming header to do that

- … Seems like Cache status gives more structure

- … Any benefits beyond the structure over server timing?

- Mark: Not just structure but also semantics. Enable to compare apples to apples when comparing CDNs, especially for multi CDN

- … Also pretty common hierarchical caching. As a customer, I want to understand where my hits are coming from. Edge caches or central ones

- … Server timing was about the origin server, where this is more cache specific

- Nic: Cache-Status gives you which cache it is

- Amiya: Can a CDN game its metrics? It’s self-reporting

- .. If used for comparative analysis, may incentivize inaccurate reporting

- Mark: The kind of response on hit or miss, no good answers.

- … reporting high latency hits as a miss would still reduce their hit rate

- … timing is still collected on the browser, so they could lie, but the benefit is not high

- Amiya: What about suppressing the headers for slow responses? Could be ways to misuse it

- Mark: Yeah, but if this ecosystem evolves, there can be demand for these headers from customers

- Michal: There’s a precedent of user timing having arbitrary json payloads. Here we would have RT exposing arbitrary JSON payloads. Are there other things we’d want to expose from headers?

- Mark: The Cache-Status seems super important. The Proxy-Status just seems easy if we;re doing the first. It’s more about error handling

- … For other headers, good question.

- Yoav: Same concerns as ServerTiming https://github.com/w3c/server-timing/issues/89

- .. Also, CDNs would not want to get caught lying

- Mark: Limiting this to just same origin, it would still be useful

- Paul: Would also be interesting to add to that is a CDN identifier

- … For multi-CDN customers looking at their RUM data, they can split other than using Server Timing

- … Currently using a Forward header to communicate the info to the client

- Mark: the specs have an identifier for each ‘hop’; it’s just a string, so the assumption is that CDNs will identify themselves there, along with the relevant part of their infrastructure. E.g., “cloudflare-edge-MEL’.

- … Lots of weird CDN architectures, so can’t make assumptions about them

- Nic: With Cache-Status, it doesn’t really emit timing information. Is that intentional?

- Mark: I assumed we used ResourceTiming to measure client visible timings. We could extend it

- Nic: Our RUM customers today can expose origin time vs. edge time to give them insights on that breakdown

- Mark: Would hope we’d get good input from CDNs so that customers could make meaningful comparisons between them.

- Yoav: Next steps?

- Mark: develop the explainer and write a proto spec that people can comment on.

- Yoav: implementation interest?

- Mark: Intend to get CDNs to apply pressure on browsers of the same companies

- Michal: To what extent are CDNs replaceable and equivalent? There are some CDNs that are coupled to a certain tech stack.

- … Could you assess them without issues with correlation?

- Mark: Depends on what CDN you're talking about. I’m talking about classic CDNs - Akamai, Fastly, Cloudflare. You’re talking about platform CDNs. Can be complex to tease out their performance story

- … Edge compute - lots of different solutions there, which this won’t address

- … but we need common metrics to make good comparisons

- … For edge compute we’re not there yet

Unload beacon - Fergal + Ming

Minutes:

- Ming: Page Unload Beacon API

- ... Focused on current API and some pending issues and discussions

- ... Motivation: Provides a more reliable way to beacon data, sends data to a server without expecting a response

- ... Analytics data, requirement to send data at the "end" of a user session, but not a good time in page's lifecycle to make a call

- ... Want to expand sendBeacon API

- ... PendingBeacon would represent a piece of data that would be sent later to a target URL

- ... Sent on page discard, plus a customizable timeout

- ... Support both GET and POST methods

- ... Ideally, we would like browser to support crash recovery from disk, but some privacy concerns

- ... Basic shape: Construct a PendingGetBeacon or PendingPostBeacon, will queue and the browser will later send out when page is being discarded

- ... Has properties like url, method and pending state

- ... Check property before updating PendingBeacon

- ... Mutable properties such as backgroundTimeout and timeout

- ... API is simple, can also send the beacon out now, or deactivate

- ... For Get you can update its URL, for Post you can update its data

- ... Constructor takes an argument to specify backgroundTimeout or timeout

- ... backgroundTimeout will send the beacon approx that time after being hidden

- ... timeout is like a setTimeout() to send the beacon, but is more reliable

- ... Updating the property will reset that timer

- ... Concrete example:

- Thomas: Why would you pick a backgroundTimeout number

- Ming: How long do you want to wait for beacon to be sent

- ... If tab is minimized it would be in a hidden state

- ... But maybe page will come back after hidden, and you don't want the beacon to be sent

- ... If page goes back to visible, it will be stopped

- Fergal: Tradeoff between wanting to minimize beacons sent and wanting to get as much information as much as possible

- Jeremy: Why is setURL only available on GetBeacon?

- Fergal: It would be immutable for a POST beacon and mutable for a GET beacon

- ... In terms of type safety, you can only attempt to set the URL on a GET beacon

- Jeremy: But why can you not change on the POST beacon but can on GET beacon?

- Ming: Open issue to discuss

- Jeremy: Seems like an artificial constraint

- Alex: Confused by API shape, it seems like if you make two new PendingGetBeacons

- Ming: They are different objects, with same target URL, it will make the request twice

- Alex: You can have a whole bunch of PendingGetBeacons to different URLs

- Ming: Or even to the same URL

- Alex: And if you create one and it never gets referenced again, and it gets GCd, it will still send

- Ming: Yes, it shouldn't get GC'd until it gets sent off

- Domenic C: Given you need to support cancelation anyway, if network activity is happening in a different process, couldn't it be racey

- ... Why do you need to be able to modify it, why can't you just cancel and create a new one

- Fergal: Renderer is full control unless renderer dies, so multi-process concerns are not there

- ... There's a thread, the ergonomics get really tricky when you do that

- ... Example is you have a beacon and accumulate data, and eventually send it, you want to know if the last one was sent or not. Can't tell if it's async until after the fact.

- Domenic C: Understood

- Ming: It's better to make constructor support actual post-data etc

- ... But some cases you want to construct beacon and update its data later

- Domenic C: No code sample for PendingPostBeacon, in this example for PendingGetBeacon, there are other event loops in between right?

- Ming: Yes

- Sean: Existing issue for current Beacon API, the tab could be killed at any time and the unload event fires

- ... It would still persist with this API right?

- ... Otherwise you can rely on page hidden / visibility change?

- ... Can current Beacon API support the same with pagevis event

- Ming: Can achieve the same with page vis changes, but No good way to support if the page was discarded

- Fergal: Plus the ability to send a beacon with backgroundTimeout

- Sean: Developers would typically want to send the beacon right away

- Ming: In Chrome’s case, the renderer can crash, but you want to still send out a beacon

- Olli: Wouldn’t developers abuse this API in the background tabs? Browsers throttle background, so developers could create thousands of new beacons and send them?

- Fergal: To what end? You could do the same in the foreground

- Olli: To overcome the limits

- Michal: You’re saying you wouldn’t be scheduled, so then you can’t update the beacon

- Olli: You’d need to update the beacon ahead of time

- Jeremy: UAs can apply reasonable resource limits here to avoid gigabytes of updates

- Fergal: If you have the event loop running, you can do this with fetch. Otherwise, there isn’t a point

- Olli: In order to update the pending state, you need to run the event loop

- Fergal: A DoS attack here, but no new information

- Jeremy: Don’t want the background tabs to ping the server, but we can apply reasonable limits

- Barry: As a RUM provider, I can see the use case

- Jeremy: There are reasons for us to want to limit that - avoid users pinging the server constantly

- Dominic: Any reason the beacon isn’t integrated as a Fetch option?

- Ming: only allow you to attach the data but not to configure the request itself

- Sean: If I set the timeout to 1 minute and I close the tab, would the beacon be sent?

- Ming: When the tab is close, the beacon is sent immediately

- … The renderer gets discarded so the browser would send it

- Alex: What if you close the browser?

- Ming: Not sure. Initially wanted to support browser crash recovery from disk. But due to privacy concerns around network change, we may not do that.

- … If the beacon is still queued when the user reconnects to a different network, that’s not what the user expects.

- … Can leak navigation history. So don’t want to implement crash recovery at the moment

- Fergal: Maybe we can keep the beacons but only send them when you go back to the site that registered them.

- Ming: Beacon may be old

- Fergal: reduces utility, but may be worth doing

- Michal: Would beacons built ahead of time be an actual performance win?

- Fergal: That’s the original motivation for this. Key feature to allow people to remove unload handlers

- … About to start an origin trial for this, if people want to use it

- Nic, Thomas: interested!

- Michal: Is it also more ergonomic to use?

- Nic: The API fits well with what we’re doing today. Interested in getting better reliability. Won’t stream more data.

- Barry: Reliability + atm RUM providers don’t measure full-lifecycle metrics (CLS)

- Thomas: concerned about log loss. In native we can log everything and this gets us a little bit closer. Batching and crashes are still missing.

- Barry: How often do browser crashes happen?

- Thomas: hard to say

- Jeremy: <missed question>

- Thomas: Android would preserve the logs offline. Can do that with session storage, but they get old.

- Ian: Makes sense to hold on to them until the user goes back to the page.

- Jeremy: <missed comment>

- Thomas: no one wants their logs dropped

- Nic: For RUM we may not want data that’s too old

- Barry: For crash reports, you want to store them

- Jeremy: You need to make sure you respect Clear-Site-Data, etc

- Thomas: In any case, we regard events based on the timestamp in which they were logged, not when they were received on the server side

- Fergal: Limit of 48 hours

- Ming: Still pending discussion

- Fergal: Maybe it should be implementation defined

- Alex: Not thrilled about persisting this to disc at all. Like the idea of sending a beacon decoupled from the same time when the next page is loaded, to avoid congestion.

- … But would also want an aggressive cap on the amount of time we’re willing to wait. E.g. 1 minute

- Ming: There’s a discussion about how long we should wait.

- Alex: If the user closes the browser or the browser crashes, the data is lost. Too bad

- Fergal: We don’t want to give more capability than developers have. Keeping the data in local storage is possible, but this seems like a better API for everyone

- Barry: arguing about 0.0000% case

- Michal: If you navigate away and unload, we’d eagerly send. If the tab is discarded, we’d send it within a minute. So I guess there’s no contention

- Alex: What's the timeout?

- Barry: It’s for a backgrounded page. It’s still there.

- Fergal: yeah, the timeout is for the page when it’s not discarded.

- Alex: In that case, I’d want to send it 10 seconds later.

- Ming: Open an issue in the repo

- Jeremy: If you read the timeout property, do you get the time that’s left?

- Fergal: Currently you’d get the last value you set.

- Ian: timeout = timeout is that a reset? Maybe it’s a write only.

- Michal: Somebody said they used Fetch to send the beacon eagerly. Why would beacon be lossy? Is there something about Fetch that’s keeping the page alive?

- Ming: You’re describing a different issue. Probably a bug.

Tuesday, 9/13

Resource Timing and iframes - Noam/Ian

Minutes:

- Ian: Iframes break ResourceTiming, and they don't play well together

- ... Current way this works is you can use RT inside a document, and you can look into an IFRAME you embed

- ... The IFRAME itself is going to see a NavTiming entry expressing details of its own navigation

- ... The containing document will get a RT entry that contains info about the doc that gets loaded

- ... IFRAMEs can sen TAO to optionally expose additional information

- ... Get a RT entry for the first navigation following through any redirects

- ... Any time you change the src attribute from containing document you should get a RT entry

- ... Gets added at the end of the BODY stream

- ... Unlike the load event

- ... But navigation of IFRAMEs have issues

- ... Can be canceled before it's reported -- client-side redirects, user navigates away, scripts

- ... Multiple navigations can happen from the same

- ... Session restore can cause issues

- ... Metrics might be misleading

- ... Cross-origin user behavior is exposed, e.g. navigate away

- ... Would TAO be good enough to opt-in for that?

- ... What should we do?

- ... Option 1 - Break up, don't put IFRAMEs in RT

- ... Could post-message to embedder, regular resources stay in RT

- ... Option 2 - Expose only the start/end time in an entry

- ... Safe, consistent between origins

- ... Lose some information, does not fit with what RT already does

- ... Option 3 - Do something different between same-origin/TAO frames (measure first navigation and report as completely as it can) and cross-origin/no-TAO just gets start and time of the load event

- ... Any other options?

- – Q & A –

- Yoav: Question we should be asking, is what does the current data we're exposing give us in case of use-cases

- ... Without any opt-ins what does it give?

- Nic: In the same origin case today, we can crawl all the same origin pages and get their resource timings – gather all of the timings for any subframe's resources, helps when treating frames like an image, helps understand the “page weight”, and of course just understand iframe timings

- … For the cross origin case, it can give us a hint that there was more content loaded that you don’t know about

- … When visualizing things, you’d see the existence of these frames even if not the data

- … Can deduce some things based on times

- … 30+% of resource urls and over 50% of bytes (5 years ago) are hidden

- … Having the entry be there in an incomplete state, it’s a helpful thing for reconstructing the waterfall later

- … Our RUM customers would be confused if their cross-origin iframes disappeared

- … Option 3 sounds ideal, as we’d still get the data

- … This is just a partial picture, but just the knowledge of the iframe’s existence is helpful

- Ian: For same origin frames, you could just look at their navigation timing info?

- Nic: exactly

- Ian: cross origin frames, you don’t get their transfer size

- Nic: get it if they have TAO

- Ian: If all you get is navigation timing, you’d still be able to do same origin

- Nic: Yeah, but not for cross-origin ones

- Ian: A separate proposal to have cross-origin frames to share that

- Nic: They’d have to opt in, where here we get that info without an opt in

- Michal: Can we get navigation timing propagation? Does navigation ID open up the option to expose navigation timing?

- Ian: You’re not gonna see this from the top-level frame

- … The frame has its last navigation where the embedder should only see the first one

- Yoav: Theoretically if we were to redesign from scratch… resource timing would just be for resources, and navigation timing we would have for frame timing..

- … The processing model is very different

- … But it's unclear if the churn that this would entail at this point is worth the churn

- ... but this is very valuable information which we don't want to lose

- … Maybe there is another Option 4:

- … I have ideas around Aggregated Reporting. Unclear still if that will work, but perhaps it can help

- … so far we have been concerned about the data sizes, but maybe we can look at timestamps of when things happened (this origin loaded this frame?)

- … Hard to bring that information back to construct a whole waterfall?

- … Won't necessarily solve all the use cases we have right now

- … Option 3 feels kind of awkwards… seems like too much special casing

- … But PoC deprioritizes implementer/spec complexity

- Nic: … Option 3 sounds like it requires a lot of special handling, is that for implementers?

- Ian: …No, for the developer/consumer, you will have to know that you have to handle differently depending on the resource type, because the timings measured will differ.

- Barry: For cross-origin images, you get the get the last byte, and not any of the other timings

- … Should be more consistent with that

- Ian: Cross-origin images and scripts, you can get information about their sizing from time, but you don’t get information about what the user did

- Pat: The razor is “can you get the same data from load events”. The Frame Timing proposal fits that model, which would give you the same information as attaching an onload/onerror event to the frame.

- … That’s how we got to where we are with cross-origin images and scripts

- Ian: You certainly get more than that right now

- … Option 3 is roughly equivalent to that

- Jeremy: How do you deal with redirects?

- Ian: TAO is sticky through the chain. Any non-TAO redirect fails the resource TAO

- Jeremy: checks Timing Allow fail is checked

- Yoav: We should really understand how this is used

- … Option 1 removes a TON of complexity

- … Option 2 involves a lot of code. At least in Chromium

- … Option 3 just fiddles some things around, easy for implementers, but the cost to developers may be significant

- Andy Davies: (in chat) Going for the option that leaves API consumers to handle edge cases doesn't make sense to me. Need to understand API consumer cost

- Nic: I’d have to think about it, but you can still get some of the same information about SO frames by crawling in and getting the data from frame perf timeline. Cannot do that for cross-origin frames, but we cannot do that today anyway

- Yoav: There is a separate proposal, for frames to expose up perf timings

- … Is it possible that we lean on that? Without frame bubbling up you just get Option 3, but it incentivized opt in to “frame bubbles” (cross frame performance timeline)

- Ian: Okay, interesting

- Colin: Is it only iframes that have this?

- … Do <video>, HLS, <model> tags have similar issues?

- … This are sort of like iframes embedded on the page

- Yoav: These are not iframes, these are resources

- … It's different because of cross-document navigation and being unable to expose

- Sean: What about <embed>?

- Yoav: right, and <object>. These are “types of iframes”

- … It would make sense for them to also fall into this model… but probably people don't care about these as much, since they are far less popular and far more legacy usage only. It would be good to handle these if it's possible.

- Alex: I think I like Option 3 of the three.

- Ian: <Re-iterates Option 3>

- Yoav: Let's start with Option 3 in the short term to eliminate any issues today, and then we have some time to evaluate other alternatives that reduce complexity.

APA joint meeting: Accessibility and the Reporting API

Minutes:

- Janina: Thank you for meeting with us. Could learn from you about ways to improve accessibility by gathering data on it.

- … Would be great to learn about load times - some pages are slow to load on bad networks

- … Not acceptable. Just an example that came up

- … Want to understand the reporting api better

- … As a leading question: can we make fast loading be a normative requirement?

- Intros: Janina Sajka, Matthew Atkinson, Scott Hollier, Lionel Wolberger

- Lionel: APA is reviewing specs towards the needs of accessibility. In 2018 we said “no need to review”, but then realized it can be useful

- … Use case for reporting TLS cert failures

- … Janina pointed out load times because that’s a recent concern. A failure of accessibility on the page is something that the user agent can be aware of

- … UA assembles resources and then fails due to TLS cert failure

- … UA builds an accessibility tree and the tree building can fail. Could be very interesting to report back on

- …another case - User access a form themselves, but some forms were inaccessible

- … a blind individual is trying to access the form and fails. Can they report that the form has failed them?

- … motor impaired person will use their keys to navigate, but would be interesting to enable them to report them

- … A web page is comprised of many resources - what if the report could go to the provider of the service (e.g. chat service)

- Matthew: automated things that can be detected are one thing. (30-40%)

- … Human side of things. Have a product to enable a11y reporting through the page. Interesting to have it standardized

- … Wanted to understand the performance envelope

- Yoav: What I'm hearing is there are 3 different cases

- ... One is browser automatically detects accessibility issues and reports them to an endpoint that registers as interested in that info

- ... Second is user-generated reports, give the user a way to say this is bad

- ... Third is around loading performance, since it's a multi-dimensional aspect, there are many different APIs this working group is working on that report on dimensions of that problem

- ... Are you asking to make use of those APIs or do you want the UA to have a conclusion on what is too slow and report those conclusions?

- Janina: Would like to have a conversation around what is too slow, even with US infrastructure bill that doesn't cover the planet and making sure people w/ lesser resources can participate better

- ... Knowing when load starts and when it becomes interactive is important

- ... Could lead to algorithms that load less eye candy

- David: Big implications on the cognitive disability community, when I get my news on the phone, but whenever you load a lot of articles that have content, it doesn't load continuously all at once.

- ... Ads shift screen up/down whatever, we lose our place and trains of thought

- ... Creates cognitive fatigue and losing train of thought

- ... If it's loaded in one continuous it would be OK

- Yoav: That aligns perfectly with what this group does in its day to day

- ... Google has this Core Web Vitals program that defines thresholds for what is good/needs improvement/poor

- ... And we could have a normative version of that that is reporting in cases if one of those dimensions fails

- ... Loading performance, shifts, responsiveness

- ... On this front, there's perfect alignment for what you're asking for and what we're doing her

- Ian: I'm editor of Reporting spec

- ... First off, I'm excited about possibility of Accessibility standard that could leverage this

- ... Reporting is a mechanism other specs could use

- ... Doesn't define any reports, but here's a standard way of reporting

- ... Could be any other server that you setup to collect them

- ... Other specs are responsible for what goes into a report

- ... What the endpoint does is up to the site developer

- ... e.g. Sets up headers that ask for "CSP" reports, and please send to this reporting server. Or crash reports

- ... They could say "please set up Accessibility reports" and send to this server

- ... Sub-documents can set up their own configurations, e.g. chat server asks for reports to go to a separate place

- ... With certain triggers, if the tree is malformed (or things we can detect happen), please generate a report and send it to whatever is configured

- Eric: Could we take existing automated tooling checks and it could hook into existing tech and report through API?

- Ian: If you have a document policy, you could use it to apply restrictions around accessibility requirements and report any violations through the reporting API

- Yoav: Document policy is a different spec that allows site to say specific policies on the document being loaded

- Matthew: To add context, there's different ways you might get these automated accessibility tests

- ... One is by using Axe built into Chromium

- ... Or another browser extension, which do accessibility testing

- ... Third is a service that crawls and does tests. Headless browser.

- ... Key thing is the browser itself at the moment doesn't do anything with that information

- ... Not just at document load time, it's whenever, as DOM changes so does accessibility tree

- ... Depends on when I push the button in the browser to run the tests

- ... We'd need a way to package it and send it to particular endpoints

- ... Addition to browser extensions/APIs and that's setting aside the format of the report

- ... Not just at document load time for the accessibility stuff

- Ian: With Reporting API being part of the browser, anytime something happens you want to report on, we buffer them and send out as a batch

- Janina: If a subcomponent encounters issues it could report to another endpoint

- Ian: If the subcomponent opts into it

- Yoav: Iframes can set up their own Reporting API header

- Ian: Yes

- Michal: Was there a spec for user reporting accessibility concerns?

- Ian: Would that be a browser UI that reports having accessibility issues?

- Janina: We are encouraging a browser standard mechanism

- ... That goes to whoever owns the domain

- Yoav: We need to be careful that users don't create cross-origin leaks for themselves and report their own state

- ... In general from implementation, the accessibility tree is not always built, it's only built for users that need it?

- Lionel: Not quite correct, it's always built, depends on whether it's consumed

- Chris: In Chromium, the accessibility tree isn't created until the APIs are used by the browser

- ... Browser has a mode, as accessibility code tends to be very expensive

- ... Difficult to expose this reporting information outside the context that it's already being produced

- ... That's in Chromium, I believe the same in other UAs

- Alex: I think that's the same in Webkit

- Lionel: Chromium knows if the Accessibility API has been called

- ... Does it share that information, if it's logged into the profile

- Chris: It does not

- Lionel: Does the browser have knowledge of whether you're using the accessibility tree?

- Chris: It does know when you're using accessibility tech, as APIs from screen reader, it's calling an API

- ... Tree is not really something operating system APIs know about

- ... Tree is an implementation detail of the browser

- Scott: Browser detects if you have high-contrast mode in OS

- Matthew: Reasonable if APIs are being called, not controversial, but we are concerned as a community about users inadvertently telling others they're using accessibility tech

- ... Many different reasons why someone may be calling these APIs, testing it, might be using assistive tech

- ... Somewhere between 1/4 to 1/3 of users can see the screen, but they use it to understand the text

- ... Generally we don't want to share private information and it's probably not accurate

- Chris: It is really important, there's no way for a website developer to directly detect it, by design

- ... So Reporting API needs to take that into account, especially if user reports it

- Janina: Tomorrow we have a meeting with verifiable credentials

- ... So you'd only have to share this data once

- ... We want to simplify so you don't have to set each time with every device

- ... We think there's an opportunity

- Noam H: I was wondering, and I think I got the answer, whether it's interesting for us to measure time the accessibility tree is available, like we measure if FCP