WebPerf WG @ TPAC 2023

Logistics

Where

Meliã Sevilla Hotel, Seville, Spain

When

Registering

- Register by Aug 31 for the Standard Rate (increases on Sep 1 to late/on-site rate)

- Health rules: Masks on, daily COVID tests

- All WG Members and Invited Experts can participate

- If you’re not one and want to join, ping the chairs to discuss (Yoav Weiss, Nic Jansma)

Calling in

We’ll be using the W3C zoom this year. (For the WHATWG session, we’ll be using this one)

Attendees

- Yoav Weiss (Google) - in person

- Nic Jansma (Akamai) - in person

- Michal Mocny (Google) - in person

- Sia Karamalegos (Shopify) - maybe remote

- Benjamin De Kosnik (Mozilla) - in person

- Sean Feng (Mozilla) - in person

- Barry Pollard (Google) - in person

- Andy Luhrs (Microsoft) - remote

- Jason Williams (Bloomberg) - in person

- Jeremy Roman (Google) - in person

- Patrick Meenan (Google) - remote

- Neil Craig (BBC) - will attend remotely as much as possible

- Tsuyoshi Horo (Google Chrome) - in person

- Khushal Sagar (Google) - in person

- Lucas Pardue (Cloudflare) - remote (best effort)

- Shunya Shishido (Google) - in person

- Andrew Comminos (Meta) - in person

- Lorenzo Tilve (Igalia) - in person

- Antonio Gomes (Igalia) - in person

- Andy Davies (SpeedCurve) - remote (best effort)

- Alon Kochba (Wix) - remote (best effort)

- Alex Christensen (Apple) - in person

- Ryosuke Niwa (Apple) - in person

- Kevin Babbitt (Microsoft) - remote

- Nidhi Jaju (Google) - in person

- Domenic Denicola (Google Chrome) - in person

- Leon Brocard (Fastly) - remote

- Mu-An Chiou (Ministry of Digital Affairs, Taiwan) - in person

- Tim Vereecke (Akamai) - remote (best effort)

- Noam Helfman (Microsoft) - remote (best effort)

- Mark Nottingham (Cloudflare) - in person

- Benjamin Feigel (Navy Federal) - remote

- Carine Bournez (W3C) - in person

- Ming-Ying Chung (Google) - remote

- Fergal Daly (Google) - remote

- Nathan Schloss (Meta) - in person

- Sam Weiss (Meta) - in person

- Brain Strauch (Meta) - in person

- Andrew Cominos (Meta) - in person

- Anne Van Kesteren - in person

- Aoyuan Zuo (Google) - remote

- Erik Anderson (Microsoft Edge) - in person

- ... please add your name, company (if any) and if you’re planning to attend remotely or in person!

Agenda

Times in CEST

Monday - September 11 (11:30-13:00, 14:30-16:30)

Timeslot (CEST) | Subject | POC |

11:30 | Nic, Yoav | |

12:00 | Domenic, Jeremy | |

12:30 | Fergal, Ming-Ying | |

13:00-14:30 | Lunch Break | |

14:30 | Tsuyoshi, Patrick | |

15:00 | Michal | |

15:30 | ||

16:00 |

Recordings:

Tuesday - September 12 (14:30-18:30)

Timeslot | Subject | POC |

14:30 | Yash | |

15:00 | Kenneth | |

15:30-16:00 | Break | |

16:00 | Long Animation Frames | Noam |

16:30 | ||

17:00 | Yoav, Nic | |

17:30 | Scott | |

18:00 | Crash Reporting | Andy |

Recording:

Thursday - September 14 (14:30-16:30)

Timeslot (CEST) | Subject | POC |

14:30 | Ian | |

15:00 | ||

15:30 | Michal | |

16:00 | Yoav |

Recording:

Friday - September 15 (11:30-13:00, 14:30-16:30)

Timeslot (CEST) | Subject | POC |

11:30 | Nidhi/Adam | |

12:00 | Ian | |

12:30 | Benjamin | |

13:00-14:30 | ||

14:30 | Joint meeting with WHATWG - Giralda III (-2) | |

15:00 | ||

15:30 | ||

16:00 |

Recording:

Sessions

Day 1

Intros, CEPC, agenda review, meeting goals, introspection

- Nic: Mission to provide methods to observe and improve aspects of application performance of user agent features and APIs

- … Highlights - moved preload to the HTML spec

- … new triage process =>progress on open issues

- … Rechartering is in progress. Extension ongoing

- … Proposed charter draft

- … Notable changes: EventTiming, spec unification, hoping to revitalize the primer

- … Doc around perf APIs security and privacy

- … Discussion session tomorrow at 5pm

- … New incubations

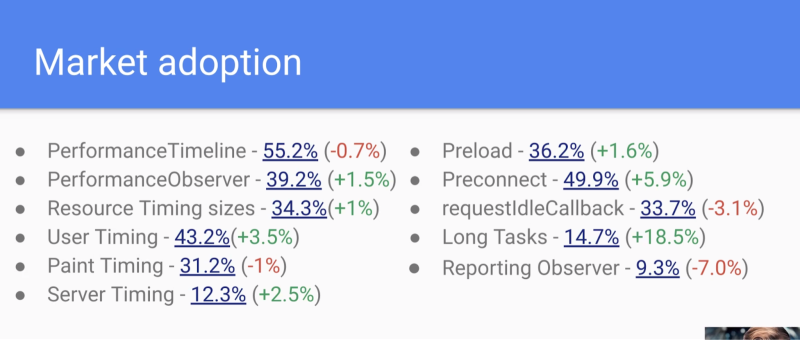

- Market adoption slide

- … Interesting changes year over year, e.g. why is long tasks up 18% and Reporting down 7%?

- … <round of intros>

- … Agenda for the week at https://bit.ly/webperf-tpac23

- … Housekeeping

- … Health rules overview: masks, tests, and stay isolated if you’re symptomatic

Speculative Loading (prefetch & prerender)

- Jeremy: How to make navigations go faster

- ... Page Load Time matters to users, LCP one key way we measure how long a page takes before presented to user

- ... Benefits beyond that

- ... Can accelerate by optimizing images, lazy load, hints, preconnect, etc

- ... Sometimes getting a headstart on top of that is nice

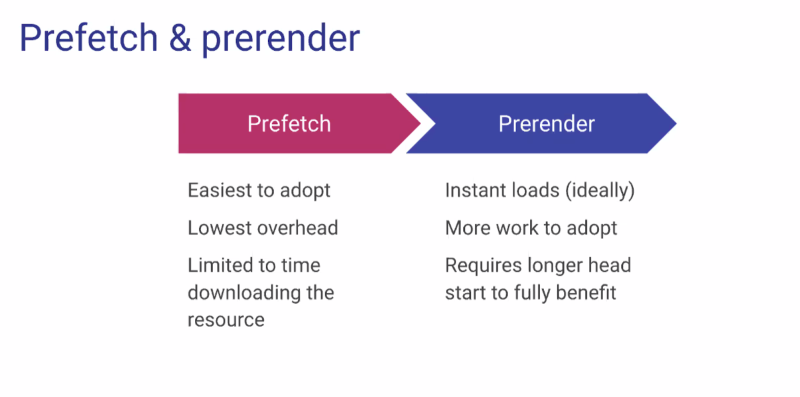

- ... Prefetch and prerender gives more options

- ... Allow APIs, developer tools, libraries to assist with this

- ... Because prefetch is simpler, we can do it cross-site and preserve privacy

- ... Prerendering can only work on same-site for now (more concerns on arbitrary scripts)

- ... UI speculation, URL bar might trigger it

- ... Speculation rules with APIs available

- ... For prerendering, some changes site may need to make changes to allow for (e.g. no auto play sound)

- ... Browsers can get value for users from these features

- ... We need to handle page-to-page navigations

- ... Speculation declarative way to tell browsers of a strategy to use

- Domenic: Working on these technologies for a few years now

- ... This year we've switched our focus to figure out how ecosystem can adopt better

- ... Dev-tools updates

- ... API gaps our partners have found

- ... Working on expanding URL bar prefetching and prerendering beyond simple ones

- ... Working on No-Vary-Search feature

- ... Plus document rules, delivering through headers, and improved document heuristics

- ... How much does this actually help?

- ... Deployed this on web.dev, developer relations site for Chromium team

- ... Relatively simple static site

- ... Significant gains for same-site navs

- ... 200ms for prefetch, 700ms for prerender

- ... Using document rules for Origin Trial

- ... This data is not necessarily apples-to-apples

- ... Mobile shifted significantly compared to desktop

- ... Based on when Prerendered succeeded vs. when it didn't trigger

- ... Exciting result to see change in distribution

- ... Working with NitroPack

- ... They insert a specific URL for prerender, and see 168ms improvement on LCP for prefetch

- ... Almost 2 seconds of LCP win vs. not doing any loading at all

- ... They have a lot of data, shared with us

- ... Benefits of these technologies for other perf metrics vs. LCP (CLS and input delay and whether page responds on tap)

- ... Happy to report we have approval to share agg numbers for Chrome itself

- ... 602ms improvement when Prerender triggered

- ... Improved the global LCP average by 9ms

- ... Not a lot of lead time from SERP results, but still enough to have savings above

- ... Counterfactual analysis data

- ... Speculation rules are having an impact across the web

- ... Based on counterfactual data with small holdback groups

- ... This might be a reflection of which sites are giving prerender a try - typically sites that are faster to begin with

- ... More percentiles of data in speaker notes

- ... Higher precision is less waste

- ... Recall is how often you speculatively loaded the things needed

- ... Lead Time is how quickly you speculatively load things

- ... Lots of people are experimenting with hover predictors right now

- ... We think we can do better, it's not a zero-sum triangle

- ... Maybe ML models that can optimize these axis

- ... If your platform has access to analytics data, you can use this to boost some angles

- ... When experimenting, might be surprising that Prerender might cause LCP go to "zero", but there's possibly not enough lead time to render whole page in background

- Jeremy: Clearing hurdles to adoption

- ... Complications

- ... Query parameters that don't affect semantic meaning

- ... e.g. for analytics, don't affect query processing, or ordering of parameters

- ... We'd still like to be able to use a Prefetch for same semantic result

- ... Using the response header No-Vary-Search can help define parameters or ordering to ignore

- ... Related to that, when a Prefetch goes out and before it's complete, we want to be able to use that lead time we have

- ... If the URL that's being Prefetched and the URL the user is going to, what if the response has a No-Vary-Search HTTP response header, there's a timing issue

- ... Speculation rules can have a expects_no_vary_search

- ... Tells UA there's a No-Vary-Search policy that should be waited for

- ... Subtle but doesn't have a lot of extra complexity

- ... Can use Prefetched pages in more cases

- ... One that's a little bit complicated is User State

- ... Ideally state should be discarded on some changes, e.g. when user logs in or out

- ... Some ideas which could be e.g. HTTP Variants proposal or something similar, denotes where the document will vary on a specific Cookie

- ... Just varying on cookie will probably over-match, as sites have many cookies

- In some cases the Rules would be specified by a third-party or another service provider, there could be a HTTP response header that provides the content

- … Been experimenting with a response header that would enable prefetching without modifying the markup to ease deployment.

- ...

- Other things authors need

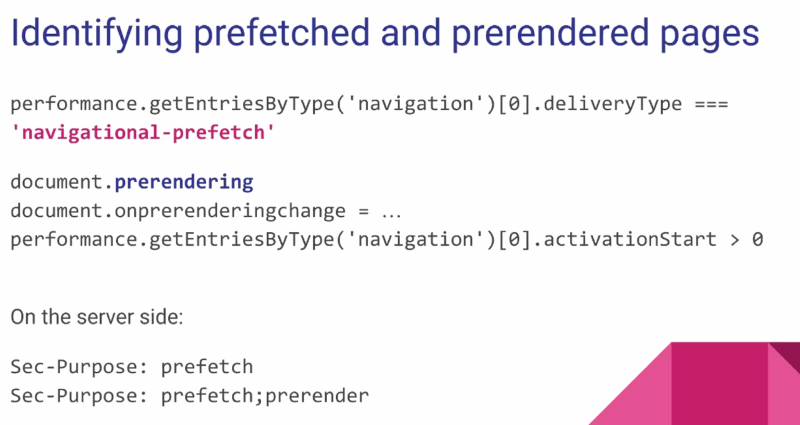

- ... Can check if document was delivered via navigational-prefetch type, or document.prerendering in JavaScript

- ... Or Sec-Purpose header on server side

- ... Allows analytics to understand what's happening and slice data'

- ... Dev Tools updates to understand why something in the background isn't working

- ... Developer Tools can show what UA is doing

- Domenic: To wrap things up, some call to actions

- ... Looking for other browsers to implement

- ... Web Developers and platforms should add speculation rules for page. Can start small, mark everything as safe to prefetch

- ... Use analytics data

- ... RUM and analytics update for prerendering measuring

- ... One of the hardest thing we've heard in adoption is they're not sure if all third parties are updated

- ... Not everyone is there, the more we get there the better

- Questions:

- Neil: I'm wondering if you've thought to allow users to opt-out, users for whom bandwidth is expensive. e.g. Save-Data

- Domenic: Several opt-outs in place. There's a setting in chrome://settings, we respect OS and battery-saver mode

- Khushal:

- ?

- Speculation rules for logged in users?

- Pre-render anti-pattern example: limit to 3 articles per month, and pre-render “eats” that budget.

- Keith: impact on server-side load?

- Jeremy: should set proper caching policies, etc

- … you can set the speculation rules eagerness level to only trigger prerenders or prefetches when the user is very likely to actually need them

- Domenic: people can vary priority based on sec-purpose headers

- Jeremy: or decline them on the server side when under heavy load

- … any error status code would cause the user to drop it

- Keith: What’s `sec-`?

- Jeremy: means the request can’t be set by fetch() or SW

- Domenic: in practice, it means post 2020 header

- Michal: From a UX perspective, only the activation time matters, but developers want to know about their lead times. If the user spent more time prerendering but that wasn’t felt, does it matter?

- Domenic: are you using analytics to measure user-facing performance or your implementation. CWV are defined in terms of activation start. But the raw data enables you to measure the things that happened before activation

- Michal: That’s a theoretical problem? Should we just measure from activation? If your aggregate data improves, but becomes more extreme in the outliers and that goes unobserved.

- Jeremy: your data could go bimodal, but I don’t see this a problem

- Barry: You should also be able to split your data by prerendered, bfcache, etc buckets

- Domenic: approach we’ve seen, and saw sites that split their traffic to prerendered/non buckets

- Jeremy: (uncaptured)

- Barry: compared second page navigation, to prerendered but most navigation were still landing ones

- Jeremy: and some sites can have more internal navigation than others

- Nic: As a RUM provider as we’re splitting on prerendering, do we want to measure from the user’s perspective or the backend perspective. Trying to figure out how to communicate “cheap for the user, but expensive to the backend”. Slightly more complex

- Barry: Was already a thing before this, with e.g. preconnect

- Shuo: Does chrome start a new process and start parsing?

- Domenic: promising but hard to implement. Need to wait for security headers to be back before starting the process.

- Khushal: Trickiest API that pages use in the background? E.g. location?

- Jeremy: Most of these APIs are async, so we delay them until activation. Async means the site doesn’t have to change

- Domenic: Some sites have issues with waiting for too long. Cross-site prerendering means hanging on localStorage which is sync, so that didn’t yet happen

- Tom: Have you come across sites where most of the site is Prerenderable but there are just a few small script tags that aren't compatible?

- Domenic: common for pretenders. Some folks do that, and others consider it a lot of work. Thought of adding attributes to scripts to help with that

- Dominic: Frozen async things get flushed to script after activation?

- Domenic: First the prerender changed event fires and then all the frozen things fire in order

- Michal: You showed one partner with interactivity data improving. How long that loading period is for certain sites? After activation start for things to settle?

- Domenic: hasn’t been a huge case because the lead time is limited. For sites that prerender at the very top, we’ve seen larger numbers than we’d like (400-500ms)

- … part of that is that we’re not doing layout and paint in Chrome. Some may be due to scripts that were deferred to activation, but we haven't heard specific complaints about that yet. So overall, I can't say conclusively.

FetchLater

- Fergal: Lots of work by Ming-Ying and Noam R. Pretty close to starting off a second OT

- … Don’t want people using fetches in the unload handler, which is also unreliable, especially on mobile

- … Typical use case is analytics data - want to send the last version of your cumulative data. Want to send it even if the page crashes

- … API shape, quite different from the last time we presented. Reused as much of the fetch() API as possible

- … Returns an object that tells you if the request was actually sent, in case you want to update the data

- … Cancellation happens using an AbortController

- … Can also pass a background timeout, indicating a preference to the browser, but there’s no particular guarantee on the times

- … 'Before' scenario doesn't always work, e.g. pagehide isn't reliable in all scenarios

- ... If you really want your data to get there, your last chance to have a good chance is visibilitychange[hidden], but maybe that's too early. The user could come back in seconds.

- ... With this API, you can just say fetchLater()

- ... Data will definitely be sent, as long as the browser process isn't killed

- ... With timeouts, you can control when it's sent

- ... Another example for using a backgroundTimeout

- ... With BFcache, you could look at persisted state and send a beacon

- ... Especially on mobile when you get a visChange event and page goes hidden, JavaScript can keep running. But e.g. browser may stop running timers.

- ... Hopefully that will still fire if page gets BFCached

- ... Another example for sending after page was backgrounded for 1 minute

- ... The "before" example here doesn't work, this is a new feature that works with fetchLater()

- ... You can also cancel a pending fetch, via abort()

- ... Then start a new fetch with updated data

- ... You need watch against races, keep the result and be able to call .abort()

- ... Implementation could have a maximum background timer

- ... Per document, there are 64 KB of deferred fetch content waiting to go out

- ... If a new request is made, that pushes over limit, it's rejected with an exception

- ... Cannot use streams for this

- … If you’ve gone into the background, network requests sent later can reveal information about your browsing that may have happened on a different network. We want to avoid that, unless the site already has permission to do that with BackgroundSync or Service Workers.

- … So if background sync is on, we can defer requests. Otherwise, we send the request as soon as the page is backgrounded

- … When the page becomes hidden, JS continues to work and timers continue to fire and then they stop, and there’s no event that signals that they stop

- … So it’s impossible to guarantee sending that data to the page.

- … Didn’t test Safari for the same behavior, but Firefox and Chrome do

- … So even though the background timeout gives us this power, that doesn’t help for frozen, backgrounded pages

- … The code to handle pagehide is quite complex. It’d be better to have a timeout baked into the API

- Anne: Confused with timeout stuff at the end

- ... I thought the idea was fetchLater() call would be queued to be sent later, and the timeout would be happening there

- ... How does JavaScript matter?

- Yoav: Discussions around having two separate timeouts, one for bfcached pages that can no longer run script, and another for backgrounded pages that can run script for some time

- ... Second one was removed because those pages can manage their own timeouts, and send fetch whenever

- Fergal: I would say we took the old API and we aggressively removed anything you could do something in a similar way, e.g. "send after N seconds"

- ... but there are situations where JS is not working but we’re not really backgrounded. And there’s no event that triggers that

- Anne: document that’s hidden but not BFcache? For such documents browser throttle timers quite aggressively, so that makes sense

- Fergal: Not related to backgroundTimeout, that's only used when BFcache

- Yoav: Background word being used ambiguous, bfcache vs. hidden

- Fergal: "Frozen" and BFCache

- Yoav: Page Lifecycle specs and info on WICG. Defines “frozen” events.

- Michal: Code to do this is complex

- ... Can you remind me the use case for foreground timers for fetchLater

- Fergal: They want to deliver first data within e.g. "n minutes"

- Michal: Wouldn't be correct to create fetchLater immediately then set a timer later

- Fergal: Simplest and reliable would be get rid of backgroundTimer and swap to just a "timeout" parameter

- Anne: Some "timer" might make sense, but "timeout" might be ambiguous for future use for Fetch

- Domenic: It's in second parameter

- Fergal: Pair with activated thing

- Nic: Note about the limits for 64KB to be queued. sendBeacon has a similar limit

- … with fetchLater people will be using this queueing up more payloads to be queued later, where

- Yoav: limit per origin

- Andrew: curious about streaming constraints. Compression streams would be exciting. Have you considered allowlisting specific stream implementations

- Fergal: I don’t recall

- Domenic: can imagine a

- Ryosuke: can you clarify “background timeout capped by implementation”?

- Fergal: Chrome has a maximum of 10 minutes. There was no good way to decide what’s a good limit.

- Anne: Need to think about this. If the user changed networks we may not send it anymore.

- Fergal: depends on the background sync

- Anne: Per origin limit makes me think we will have a partitioning problem. Does it take the top level site into account. Needs more clarification

- Ming-Ying: it’s per document per origin

- Anne: Is there a global cap?

- Fergal: we haven’t discussed that.

Compression Dictionaries

- Tsuyoshi: Working on Compression Dictionary Transport team in Chrome

- ... Uses previous responses as compression dictionaries for future requests

- ... Compression agnostic, new schemes could be adopted in future

- ... Example of using a previous JavaScript library as a dictionary for future version

- ... Another example is using dictionary for HTML pages, used in future requests

- ... Here are results of lab experiments

- ... New HTTP response header

- ... Announces dictionary for future requests

- ... match is most important parameter. ONly for same-origin requests

- ... Optional ttl to designate how long dictionary can be used, independent of cache lifetime

- ... Chrome uses "expires" currently

- ... hashes is list of algorithms supported

- ...

- ... Client advertises dictionary in future requests

- ... New Accept-Encoding of br-d and zstd-d. Can be extended in future

- ... If server has matching dictionary and Content-Encoding it can serve using dictionary-compressed

- ... Adds headers to designate the response is encoded and using those dictionaries

- ... Also has a new <link rel=dictionary>, as well as a Link header

- ... Example HTTP interaction above

- ... Dictionary above can be used for any future navigation to the origin

- ... Browser sends Sec-Available-Dictionary header, and server responds with smaller version based on that dictionary

- ... Another example with external dictionary

- ... Browser downloads dictionary after first request, server sends Use-As-Dictionary

- ... Browser later navigates to another URL and notes Sec-Available-Dictionary

- ... Client decides whether to use dictionary, manages its own privacy risk

- ... Dictionaries are assumed to be private information

- ... Origin Trial are becoming available in Chrome

- Patrick: Standards-wise, most of the protocol-level is in IETF

- ... Browser-specific parts (link rel=dictionary and CORS protections) are going through W3C process into HTML spec

- ... Active areas of discussion, e.g. on match= string in dictionary response.

- ... Some desire to re-use URL patterns stuff in Service Workers

- ... HTTP WG prefers to not have complex processing, but most evaluation would be in client side

- ... Requires stable filename patterns for things you want dictionaries for

- ... e.g. for JS resources, you'd need a stable app.js path. Using a hash of contents won't allow you to use dictionary as currently defined

- ... Use-As-Dictionary discussion, keyed by hash of contents which you have to process the whole response for the hash. Maybe some helpers where advisory hash included in the response as well that intermediaries or client could use in some scenarios (e.g. to know if it's already in cache)

- ... There are size constraints on various parts of it, 100MB per dictionary item limit in Chrome

- ... Brotli CLI won't process anything over 50MB (no Zstd limit)

- ... Depending on Chrome version there may be limits that we're exploring

- ... Overall dictionary cache size limit as well, partitioned like cache

- Questions?

- Carine: In the IETF is the HTTP WG the one you're working with?

- Patrick: Yes

- Carine: Is the HTTP WG working on the Content-Encoding and headers?

- Mark: Yes

- Ryosuke: Who computes the cache?

- Patrick: Client-side computes the hash, and makes it available as part of the next request. Server-side could pre-calculate and use hashes to know how to process.

- ... At static build-time, you'd calculate hash of previous version, compress the current version using the previous version as a dictionary and store the compressed version with the hash of the previous version in the file name (i.e. main.js.br-d.<hash>). At serving time, check to see if a version with the requested dictionary hash is available and fall back to the full file if not.

- Ryosuke: browser computes hash based on content?

- Patrick: Yes, the browser computes this when the response is received and stores it along with the hash. Even if we add a hash on the response as a helper to reduce the need to calculate the hash multiple times, when the client stores it, it still needs to calculate and verify the hash matches the contents.

- Anne: Is it just a hash of the contents, or does it consider other parts of the response?

- Patrick: Just the contents. Just to make sure that the dictionary being used is really the same, so the decompressed contents is correct. It's not a cache identifier or anything else, just for dictionary integrity.

- Ryosuke: If the hash doesn't match, what happens?

- Patrick: The client sends a request to the origin with an available dictionary and hash; if it doesn't have a resource delta-compressed with such a dictionary, a response will be sent without dictionary compression.

- … For a lot of cases, there will be multiple dictionaries in flight, with clients having different dictionaries. It can ignore dictionaries it doesn't recognize for the requested resource.

- … You don't want it to happen too much, so we have a separate TTL on the dictionary. You don't want lots of stale dictionaries filling up intermediate caches.

- Anne: Dictionaries are supported for any subresource?

- Patrick: Any HTTP response – text, binary, etc.

- Anne: Including navigation?

- Patrick: Yes. You probably won't use a navigation response as a dictionary, but any HTTP response can be used as a dictionary.

- Anne: How does that reconcile with the CORS requirement?

- Yoav: It's CORS-readable, so same-origin or CORS-enabled.

- Patrick: It's both. Has to be same-origin as the content it's encoding, and cors-readable within the document it is fetched from.

- … A script can be used as a dictionary for future requests for the same logical resource, later on when the app has been updated.

- … There are "passive" dictionaries, used to update to a newer version, and "active" dictionaries built specifically for to be used for certain kinds of HTML or other resource, but not used other than as a dictionary.

- … The first navigation obviously cannot be dictionary-compressed, because there's nothing in the partition that has been seen before.

- Anne: How do you deal with redirects? If you have a cross-origin redirect to a same-origin resource, is that tainted?

- Tsuyoshi: can't use it if it ever redirects cross-origin

- Anne: Okay, so there's also tainting. But then how does it work for navigation? May follow up.

- Nic: A dictionary used for HTML might be different than for JS, etc. Is this just based on URL matching, or can you vary based on fetch dest?

- Patrick: Just based on URL right now. Fetch destination could potentially be added.

- Nic: Particularly, HTML pages usually don't have a common path prefix.

- Patrick: On path matching, most specific path is what's picked, so if you have "higher" and "deeper" paths, the deeper path will match.

- … You can have different HTML dictionaries for different path patterns, but right now everything is path-based.

- Barry: If you have example.com/* and example.com/*.js, will it use the former for navigation?

- Patrick: Yes, and for everything that doesn't match *.js.

- Ryosuke: We take the most specific match?

- Patrick: "Most specific" is currently "having the longest match string". If you have multiple matches, it's whichever has the longest string in the match definition.

- Ian: Why not just support multiple matches in the request?

- Patrick: Complicates the Vary caching; it's better for caches if we have just a single variant on the request. Especially intermediary caches.

- Further feedback to https://github.com/WICG/compression-dictionary-transport

Hands-on Workshop on measuring Event Timing, LoAF, and INP

- (not being recorded or scribed)

- https://github.com/mmocny/tpac_2023_workshop_responsiveness

Day 2

Initiator attribute

- Yash: Proposed addition to the ResourceTiming API

- ... Unique identifier that can be associated with each resource fetch and RT entry

- ... Discussion on Issue #263, continue talk there

- ... Helps with identification of parent resources, to build dependency trees

- ... Can use this to provide insight into loading scenarios

- ... E.g. 3P scripts that are loading a lot of resources or weren't intended

- ... Security products could benefit from backtracking

- ... Points to identifier of parent resource

- ... Whether this should be a Fetch ID, or would it make more sense to have a Resource ID

- ... We concluded it would better to use a Resource ID

- ... String concatenation of URL and StartTime attributes

- ... Readonly initiator attribute type

- ... Collecting ResourceIDs and logging the initiator

- ... Other cases where we collect this ID

- ... Resources fetched by link, img, iframe, or inline scripts

- ... In Chromium, use the parser preloading to know which resources were fetched by the document

- ... For CSS case where stylesheets are @import'ing, we can point back to the stylesheet as the initiator

- ... Some things are a little more complex

- ... The ancestor or direct parent script can be marked as the initiator

- ... For an on-stack script case, where a carousel relies on a library to fetch resources, to speed up resource loading, we may need to optimize the carousel and the library code it depends on

- ... Cases for on-stack async scripts

- ...

- ... Some questions:

- ... How should we present this complexity?

- ... Is a single initiator sufficient?

- ... Option to add a list of scripts that block execution

- ... Loading dependency and execution dependency tree

- ... Followups in https://github.com/w3c/resource-timing/issues/380

- Discussion

- Barry: What happens with a SCRIPT inserts a IMG into the DOM, what will the initiator?

- Yoav: Insertion of IMG tag is done by the SCRIPT, correct?

- Barry: Yes

- Yash: If it's an inline script that's fetching the resources and adding to the DOM tree, the initiator would be the document itself

- ... If its' an external script, it would be pointing to the 3P script

- Barry: It's a HTML modification that's causing the IMG to be fetched

- Ian: My understanding is at the point we're inserting the DOM entry, we know the script is running

- ... As long as it's not more complex where the script inserts another script that creates the DOM entry

- Barry: If you can't make this reasonably reliable in most cases, then it's not as useful

- Yaov: In this case as long as we're building a dependency tree, we'd attribute it to script 2, where script 2 was attributed to script 1

- Michal: async scripts on stack that block resources, I'm looking at the timestamps, is the last timestamp from ResourceTiming not when the response ended?

- ... It blocks execution start of the script, but not the responseEnd?

- ... Example given where there were 3 scripts, where the 3rd is blocked from executing since they were executed in order

- ... But the resource fetch wouldn't get blocked

- ... And ResourceTiming doesn't depend on the execution of that resource

- ... 3 scripts, they would all be parallelized and completed, there's no notion of ResourceTiming that the 3rd script blocked the 2nd script

- Yoav: The fetching of multiple scripts in succession, the fetching wont' be delayed by scripted in the middle, but if script 3 is fetching yet another resources, and script 2 is delaying that resource

- Michal: It's blocking resource discovery

- Yoav: There's some dependency chain there

- ... Maybe initiator isn't the right semantics for that, but maybe there's another signal for deferred scripts?

- Michal: Should RT have one more metric, executionStart or action

- ... But initiator would be delaying that

- ... Let's say there's a chain of 3 resources

- Resource 1 “blocks” resource 2 from execution (because it was “defer” and was ordered first, so executes first… but only if both are loaded together before DCL… it’s complicated!)

- Resource 2 is initiator of Resource 3

- Resource 3 now officially “depends” on Resource 2… but it also is indirectly ends up “blocked” by Resource 1.

- General observation: resource timing starts with resource discovery, but resource discovery is based on execution of work. Resource timing can report initiators but Resource timing does not report on work execution, just fetch.

- Noam: Use-case is to provide a network waterfall?

- Yoav: The use case is to provide a RUM-available dependency chain

- ... If we want to focus on a single resource and what's delaying that, going up the dependency chain should give us that answer

- Nic: prefer to have more details than less. Single initiator is useful. Other bits of information would help as well

- … What would wrappers do?

- … Would wrappers get blamed for everything?

- … It may help to have more than one initiator to avoid blaming 3Ps.

- Barry: comes back to Michal’s point that RT is about resource loading not execution

- Michal: It’s also about discovery. Resource 1 blocks 2, which initiated resource 3

- Nic: Could get out of hand

- Noam: Promise.all

Compute Pressure

- Ken: initiated by Google. System pressure - cpu, heat, etc

- … video chat systems put pressure on the computers during COVID

- … So for systems under pressure could adjust the number of frames or resolution to make things better

- … Introduced a lot of challenges. CPU pressure is global state that could be shared across origins and apps

- … Do we need permission prompts?

- … 4 pressure states: nominal, fair, serious, critical

- … developed with collaboration with Zoom and other API clients

- … Added support for workers and iframes

- (awesome demo)

- .. Global states can result in privacy issues

- .. Sites can use global pressure by changing workloads, enables them to broadcast data

- .. The attack requires a very quiet system, but is possible

- … Two mitigations in the spec: breaking the calibration and trying to identify that folks abuse the system using abnormal compute pressure

- … In OT, active users, lots of interest

- … Know that some people would really like low level access for that info

- Oli: How do pressure states match with the hardware? Android CPU vs. intel CPU

- Ken: Trying to align on CPU specifics was complex and utilization was undefined.

- … Wanted to define high level state that developers can reason about, but allow UAs the liberty to define

- … Wanted to do this in a way where hardware can run at e.g. 80% cpu utilization and not report critical (TODO)

- Oli: There’s interop questions if different systems report different values

- Ken: Values should align with user experience, not strict cpu conditions

- Nic: Would the levels be defined by the UA? OS+UA?

- Ken: A mixture. A lot would require some hardware collaboration. Maybe we’d use machine learning to detect the levels. It doesn’t matter much that it’s the same across systems. No real interop concern.

- Khushal: Can this be used as a hint from the user to lower system utilization? TODO

- Ken: Systems already do this somewhat, like start throttling if stressed for too long, and the Intel Thread Director on Windows can move processes around etc. But apps might function quite differently and want to be in control.

- … Streaming video game wants to get most out of the system ,but without killing the machine

- … another example is adding more people to a zoom call

- Noam: possible use case - adaptive initial response to user interaction based on pressure.

- Andy:

- Ken: Thought about attributing some blame to current origin to notify the site that it’s causing load

- Khushal: Any thoughts on serially dispatching this notification. For example, give it to a background tab/iframe first. Allows the more important content to get more CPU utilization.

- Ken: If you’re backgrounded you don’t get that info. It’s available to visible iframes

- … A few exceptions, especially in the video case. Sharing my screen means that zoom is in the background.

- … Same with picture in picture

- Khushal: Maybe dispatch it to the iframe first?

- Ken: both main frame and iframe would be able to get it. But you have to set the permission policy on the iframe

- Noam: expand on the comment I wrote. A case we see at Excel is that some interaction would provide a response in 200ms. In a small percentage of cases we can’t do that, and we’d like to provide some feedback to the user.

- … Would that work or would the state fluctuate too frequently.

- Ken: There are ways to set the frequency, but would be great to file an issue with that use case

- Nic: Looking at the explainer, it seemed geared towards real time applications. How can this be used for analytics? Can we split data based on the compute pressure?

- Ken: Haven’t thought a lot about that. Let’s collaborate!

- Nic: Not an explicit non-goal, though

- … I’ll open an issue

- Oli: Introducing a fingerprinting vector

- Ken: Some fingerprint-ability, but fairly limited. Limited number of states

- … could correlate the same user on the same system in some circumstances

- … Very hard to do, and we have mitigations in place

- Shou: Even if the spec limits the number of calls to 1 per second, still seems like a new fingerprinting factor

- Ken: TODO

- Alex: these words are more fun than low medium and high

- … Thanks for the research on side channel attack

- Ian: Spec says that algorithms are not deterministic?

- Ken: Spec is missing the limits, still ongoing work

- Ian: Analysis that has X% randomness resulting in lack of calibration

- Ken: The system may take some time to cool down and change states.

- … Could manipulate what it means if you treat the change as a range. Can move things on that range, to break the ability to calibrate

- … /*goes over “supporting algorithms” in the spec*/

- … mitigation proved to be quite effective

- … Plan to add default values in the spec

- Ian: interested in information theory perspective

- Ken: On really fast computers, it is impossible to find workloads. Attack varies based on machine

Long Animation Frames

- Noam: "Lo-Af"

- "No-am"

- ... Recap: We want a web that's more responsive

- ... Avoid jank and wait and other bad words we can find for these events

- ... Measure sluggishness

- ... INP we can measure regressions in responsiveness, worst interaction

- ... Regression metric, what caused INP?

- ... How does it become actionable?

- ... Look from our accumulated hours of tracing, what causes sluggishness

- ... 3 main causes

- ... 1. Direct / slow event processing

- ... Click event, took long to paint, INP

- ... 2. Main thread congestion -- things on the main thread, maybe not related

- ... 3. Other, things happening on the system, compositor, high compute pressure, input handling was slow

- ... We're talking about the second one here, in a way that's actionable

- ... Up until now we've had Long Tasks as a way to do this for a while

- ... 3 issues we've found issues with LT in the field

- ... First, "tasks" are an artificial term. In the browser, in the spec, but term can be used for multipel things. Not directly connected with UX.

- ... Task length is an implementation detail that's not necessarily useful

- ... Second is a missing feature, no attribution beyond what window

- ... Long Animation Frames, Long Tasks version 2

- ... LoAF is the time between idle and paint

- ... Start with idle, do some work, maybe it needs a render? if 50ms or more have passed after doing work, then its' a LoAF

- ... What's in a LoAF? Provides time spent rendering, blocking input feedback and layout thrashing

- ... Need to wait for rendering to know if style and layout were the last ones

- ... We also know which scripts ran during animation frame

- ... Script entry points

- ... Not the whole script profiled, the top of the stack

- ... For each script er can expose:

- ... What's been done so far:

- ... Most of the implementation, currently on Origin Trial

- ... Several active participants providing feedback

- ... RUM Vision is providing this in their dashboards

- ... Found script cause of bad execution

- ... "Game changer" easier to pinpoint issues

- ... Big productivity suite using this in the field to find scrolling jank

- ... Third case-study: a 3P ad provider, doesn't want to be the cause of INP issues

- ... Doing field regression testing

- ... Giving themes something actionable will help them take responsibility for this

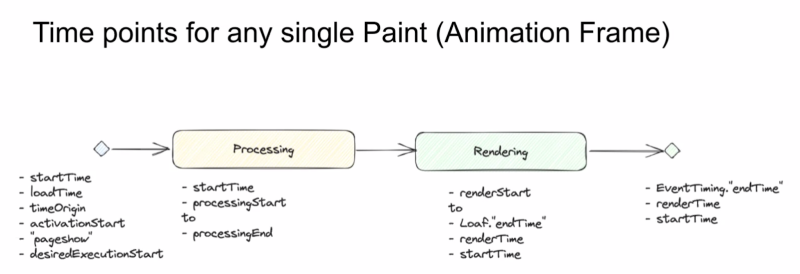

- ... Relationship with EventTiming and INP

- ... Trying to match INP and LoAF directly is a red-herring

- ... Doesn't always give results you expect

- ... Doesn't tell the whole story, but might incidentally tell you

- ... We measure sluggishness with EventTiming and INP

- ... We measure main-thread congestion with LoAF

- .... Requires analysis in the middle, could mean different things for different sites

- ... Analogy is ElementTiming and LCP, and ResourceTiming

- ... Work together, but it's intentional they're not merged into one

- ... Gotchas

- ... Can't 100% cover all scripts, but sometimes may be misleading

- ... Education challenges in teaching people that there's not a red-line between LoAF and blaming script. Gives hints and data, and requires analysis in the middle

- ... For example in RUM Vision slide, they had Sentry.io which wraps all JS calls. Doesn't mean Sentry is to blame for performance

- ... Also reasons like cross-origin frames (running on same process), or system congestion, extensions

- ... You may have LoAF but don't know why

- ... Missing things

- ... Clearer connection with EventTiming

- ... Want better support in Dev Tools

- ... Fix script entry attribution to be less partial

- ... Next steps is to finish OT, fix those missing bits

- ... Our focus on interop and spec'ing to fullest, will be after we learn a little bit more

- ... Responsiveness and congestion are related but separate

- Yoav: Mentioned this as Long Tasks v2. We're going to talk about rechartering soon. Should we rename Long Tasks something more generic that will cover both APIs?

- Noam: We can call it Congestion or Main Thread XYZ.

- ... I see the Timeline as one big thing that has multiple views into it, LongTasks with several entry types is fine

- Sean: Are we trying to deprecate Long Tasks?

- Noam: I don't know. It's possible, a future question

- ... Main step in the way is for Lighthouse / TBT support. People are using LT to measure that

- ... Once we use LoAF to measure that, we can consider

- Oli: How does this work in background tabs?

- Noam: LoAF is only for visible frames

- Oli: For the algorithm, this stuff is implementation-dependent, might lead to different results

- Noam: How this works is implementation dependent, yes. In Chrome for example, rendering throttled to 100ms, if no input event, we do every 100ms

- Oli: And Firefox doesn't at all

- Noam: The effect is the same though. You had an idle moment until paint.

- ... Blocking duration is the time which a possible UI event would have been blocked

- ... Different between browsers

- ... We use these metrics for improving ourselves

- Noam H: Use-cases, we are evaluating this API, but don't align exactly to the intent

- ... One is to identify bottlenecks during page load time

- ... Analyze events happening during page, to see if bottlenecks were JS or compile or style related

- ... So far I can't tell if it's useful, need to analyze the data

- ... Related to script compilation and evaluation, sometimes it's inline evaluation, and this only collects the root evaluation

- ... Second use-case is identifying smoothness and scrolling of viewport, identifying bottlenecks there. Seems promising to that scenario.

- Noam: To the second question, compilation should catch inline scripts.

- Noam H: Not inline scripts, when compiles happen, the first pass of the root items in the JavaScript then it may do JIT compilation

- Yoav: Pre-parse vs. compile vs. JIT

- ... Pre-parse phase, then parse the script, then interpret, then JIT hot paths. But not defined in any way.

- Noam H: Initial parse

- Yoav: Up until interpreter started running

- Noam: responseEnd until first time performance.now()

- ... Broad strokes, kind of intentionally

- ... We wanted to find the right balance of low overhead

- ... Enough information to be useful

- ... The needle can move to either side as we're researching and talking to others in this room

- Nic: Excited to hear about 3P scripts excited about this to improve. Did they try to experiment with LTs?

- NoamR: LTs don’t have scripts. We started this trying to add scripts to LTs and finding issues, then spinning that off

- Yehonathan: what about attribution of LoAF to an element or a layer? What if I’m animating something too heavy for the machine

- Noam: Showing style and layout only. No current plans. Not sure how to do that in a way that’d be reliable

- Andy: Would script attribution be useful for worker thread scenarios?

- Noam: Not exactly. JS profiling may be more suitable for workers than this. You don’t have lots a lot of types of tasks that happen in the worker. Attribution is typically to the worker

- Andy: Minification - sourcemapping and obfuscation?

- Noam: That’s for the analytics tools or (in the future) on the devtools side. Don’t even send column/line positions to reduce overhead for this field metric

- Oli: How high is the overhead with this? Thinking about speedometer 3. Have you measured in such benchmarks?

- Noam: Ran this with all the benchmarks. Had to revert some bits and pieces

- Oli: taking timestamps is slow on Linux

- Noam: can and will benchmark more

- Michal: Event Timing has to measure things anyway.

- Oli: this is overhead per event listener, not per event dispatch

- Noam: We can find mitigations. Can be a lossy hint to the developer. If it’s not 100% accurate that’s fine, as long as devs have info about where to start looking

- Nic: great data for traces and understanding. You mentioned TBT.

- … Do you see a similar aggregation metric related to LoAF?

- Noam: Hoping to port TBT to use this, as it does it more justice.

- … careful with too many aggregate metrics

- Nic: For RUM providers, aggregates help to monitor changes in them and alert developers on regressions

- Noam: Main change would be when stopping to measure TBT for invisible frames

- Michal: Worth discussing effective blocking time

- Noam: Not at the moment

- … With the OT we added to it, anything that can be measured to see what people use, and learn from it what needs to be shipped

- Nic: how long would the OT run?

- Noam: Been out for 2 month and would be active until we fill we learned enough

- Ian: Chrome 115-120, may be extended

- Michal: the “needs render” in the cycle, from Chromium’s impl, we differentiate the first invalidation from other invalidations. Multiple layers to be figured out. Can other implementations eagerly decide when a rendering stage is needed?

- Oli: Roughly what firefox does. Can eagerly paint as a result of input

- Emilio: what does it convey? Something may have changed? Something definitely changed?

- … e.g. an intersection observer churns a rendering loop. Does it count?

- Noam: yes

- Emilio: why? Is it well defined?

- Noam: You can define it in the HTML spec.

- Michal: The reason you measure a single task being long is that it’s blocking everything else in the queue. If you requested rendering, future work is being delayed by your task

- Emilio: is there a pending “update the rendering” step?

- Noam: yes

- Michal: Maybe it’s feedback about the naming

- Noam: Wanted to call it “frames” but didn’t want to confuse it with iframe. “Long rendering cycle”

- Michal: this is all related to “effective blocking time”.

- Olli: rendering is prioritized, but we force paint after interaction

- … high priority task is we think we have something to render

- Michal: Many reasons why you may cluster work and then render, and that’s browser specific. “Effective blocking time” would give you end to end duration

- … but the EBT would give you the longest block of work + rendering task

- Olli: There might not be “idle” but just not work

- Noam: After you've painted you’re “idle” even if it’s for 0ms. But you could call it something else

- Ryosuke: replace LT or complementary?

- Noam: We don’t know yet. You could say it’s an extension

- … we need to learn more about this space in order to know the answer

- Barry: Nothing in LT that this doesn’t answer?

- Michal: Could have multiple LTs in a single LoAF. May not matter in practice

- Noam: Could have a LoAF entry that has a few tasks in it

- Barry: Any reason not to use LoAF?

- Noam: If this succeeds this should deprecate LongTasks

- Ryosuke: LongTasks would take us years to implement, while this is implementable

- Noam: Working on this together would be better for the web than waiting to implement LongTasks

- Olli: This measures quite well what happens in the speedometer3 runner. It measures from a raf call till the TODO

- Andy: Is there a case where there’s an LT that doesn’t involve rendering so no LoAF fire?

- Noam: Only case is when the page is not visible for part of the time

- … If there’s no rendering, it still counts as a LoAF

- … This is main thread congestion even if it didn’t paint. Can say it’s a noop, but it’s an invisible animation frame

- Ryosuke: There are some computation that doesn’t result in a DOM change, where there’s no animation frame at all

- Noam: We have those as well

- Ryosuke: keeping track of that is less important, as users may not notice it

- Noam: Wanted to measure all that stuff. You could see clearly in the entry that this did not render.

- … Goes back to “this is a congestion metric”, and there was congestion

- … Maybe you did work for a second and it didn’t matter, but it might in other scenarios

- … so important to expose speculatively for other cases

- Ryosuke: So LoAF would report?

- Noam: Report and tell you it didn’t render

- … So 2 types of LoAF and we report both of them

- … maybe needs bikeshedding

- Michal: Could you imagine a model where you always schedule rendering work and bail if there’s nothing to be done.

- … rAF based reporting, if there’s no actual rendering

- Ryosuke: Can’t understand that in WebKit as we don’t know how tasks are run

- … So it would be impossible to implement right now the “no rendering update” case

- … rendering update is well defined and we know if it got delayed

- … but we have no knowledge if something took more than 50ms

- … We know when the rendering update was scheduled

Incubation adoption & Charter

- Yoav: Let's talk about pending incubations

- ... Stuff in WICG and some intention to move this to our WG

- ... Not about incubations inside existing WG efforts

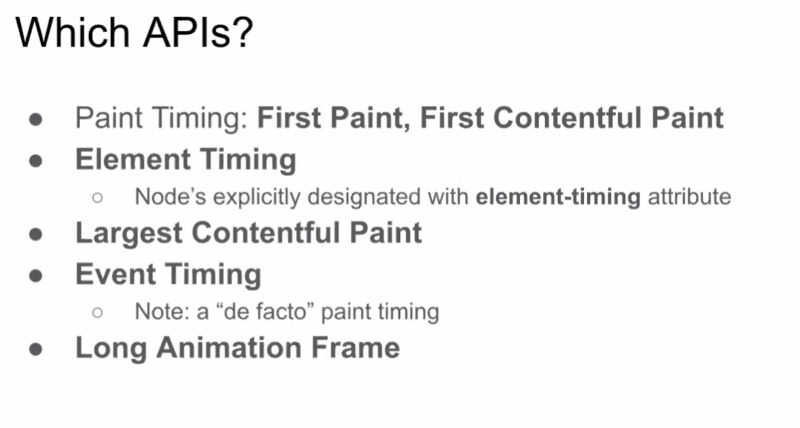

- ... ElementTiming

- ... Main reason to get into group is it's heavily relied on by LCP (which is in the group)

- ... Spec in WG that's reliant on spec in incubation

- ... Would be great to resolve that

- ... There could be value in uniting some of the existing specs into larger ones

- ... Particularly, move processing model of both ElementTiming and LCP into PaintTiming

- ... For example, have their single definition of Contentful. A single spec will help clarify this.

- ... My question to the group is can we ElementTiming is adopted by WG, then move all into a single spec?

- ... If not, there could be other creative solutions but let's see if we need to discuss those

- ... Obviously this meeting doesn't replace a Call for Consensus, but I'm trying to get a feel from the room for support or objections on this front

- Alex: For moving the spec, adopting ElementTiming?

- Yoav: Yes

- Ryosuke: What's the implementation status?

- Sean: We're implementing LCP, but not ElementTiming

- ... (just the ElementTiming algorithm)

- Michal: Half of ElementTiming is an extension to PiantTiming, and the other half is TODO

- Yoav: If there is no consensus in adopting ElementTiming, one thing we could do is move processing model off ElementTIming, and have ElementTiming spec just be IDL + a couple of hooks.

- ... We could do that, I guess

- Sean: Could be weird, FCP doesn't use that processing model.

- ... Currently PaintTiming has FP and FCP

- Yoav: First, we need to look into aligning on a single Contentful definition

- ... LCP Contentful and FCP Contentful

- Michal: Contentful could be shared for the most part, but the idea of first paint is of contentful content

- ... Whereas elementtiming and LCP represents the final paint of contentful content

- ... Different demarcations of paint and time

- ... Should be shared

- ... PaintTiming has many facets which should also be defined

- Yoav: Should be united in a single document, instead of 3

- Ian: Foundational Paint document, perhaps ElementTiming is off of that, LCP is also off of it

- Yoav: Is ElementTiming not on roadmap?

- Sean: We're not against it

- ... Do we need implementations?

- Yoav: No, we need WG members to be favorable of adoption is the criteria

- ... To move spec further down the line, CR or PR?

- Carine: Requirement for CR

- Yoav: Maybe we want to keep ElementTiming apart as long there's no other implementations, in order to not block PaintTiming from CR

- ... But that doesn't block WG adoption

- ... If you're generally favorable

- Sean: I'm more favorable having a main PaintTiming document, and keeping separate docs

- Yoav: Bringing all processing model into PaintTiming, but keeping separate documents? That would still be an improvement

- Sean: Yes

- Carine: PaintTiming is just WD

- Yoav: From my perspective, moving processing model doesn't require an adoption decision. But we could separately discuss exposing the API part as an adoption decision?

- ... Should I send CfC?

- Patrick: Does adoption mean pushing ElementTiming forward as a spec, or we want to take responsibility and taking what's in it and making sure they're all connected

- Yoav: Fixing stuff up is something we already need to do, WG is committed and adopted LCP. Therefore we need to fix up the underlying dependency issues

- Patrick: Aren't we responsible for impact on ElementTiming as a result

- Yoav: A situation we want to avoid is a situation where we effectively have all the things in PaintTiming are implemented, but ElementTiming is blocking rest of the spec making progress in W3C process

- Patrick: If ET doesn't become a standalone spec, and we take the relevant parts into the other specs, are we still responsible for ET or do we leave it as a straight incubator

- Yoav: Incubation that relies on processing models that live in WG specs

- Ian: Take off W3C hat and put on WICG hats and make work on it

- Yoav: Should I send a CfA or now?

- ... I think it's worthwhile to have API in separate spec anyway

- Alex: No multiple implementations of ET

- Sean: LCP is almost implemented

- Carine: If we fix issues in PaintTiming, what would be schedule roughly

- Sean: "Soon"

- ... We are going to have LCP, so we'll support algorithm of ElementTiming and LCP part

- ... So moving those parts around would be fine for Firefox

- ... If we just incorporate algorithm into PaintTiming doc

- Yoav: From my perspective it doesn't require a CfC

- Carine: And it's just a WD doesn't take anything

- Alex: I think having ET and a shared infrastructure separated would be good

- Carine: Should we send a CfC for adoption of ElementTiming

- Yoav: That would be a new document, yes

- Ryosuke: No consensus that we want this in the working group

- Michal: Unambiguous consensus that if ElementTiming were split in two that would be good. I heard no concerns about ElementTiming, a second implementation coming.

- Sean: No objection for a new document for algorithm of ElementTiming

- Yoav: Option (a) we adopt ElementTiming, we throw everything ET+LCP in PaintTiming. One Spec covering all 3.

- ... Option (2) we do all that, but we keep the hooks in ET separate to not stall larger document

- ... Option (3) we keep the same thing, but have the thing wrapper and keep ET wrapper in WICG

- ... I prefer Option 2 with thin wrapper in WG, unless there are objections we can go with Option 3

- Ryosuke: We'd have a significant problem implementing ElementTiming

- ... Algorithm needed by LCP and ET should be moved into WG

- ... Question is whether ET API should be included in charter of this WG

- Sean: I'd prefer we put ET algorithm into PT

- Yoav: In case we don't need consensus

- Ian: We can revisit after the algorithm is moved

- Sean: Sure.

- Yoav: Concerns around implementation's complexity

- ... Discussion earlier this year, and to try to share ideas for how this could be implemented elsewhere

- ... Right now this is Chromium implemented only

- Sean: Firefox has not implemented

- Yoav: What is missing?

- ... In the past the Mozilla folks were supportive of the concept, but had performance concerns

- ... I think some of those performance concerns were addressed

- ... What could be next for adoption?

- Sean: I'll ask folks to review difficulties, I'm not sure if they have new thoughts for this

- Yoav: If there's anything else to help push this work further

- ... Other incubations

- ... I think Memory Measurement had some desire from Mozilla in the past

- ... What can we do to push further?

- ... Incubations planned to go directly to WHATWG

- ... Talked about Scheduling APIs last year, one big spec

- ... Consensus it should be implemented directly into HTML

- ... Do we tie requestIdleCallback to the other Scheduling bundle? We'd need to add that to the charter

- ... Thoughts in that direction?

- Alex: Putting into the same spec as those other scheduling things?

- Yoav: Probably into HTML

- ... fetchLater() is going into Fetch

- Noam: But only Later

- Yoav: Page Lifecycle is related to things WG worked on in the past light Page Visibility, not sure about current state nor cross-browser implementations

- Olli: Implemented only in Chromium? Which didn't have BFCache?

- Yoav: Yes and yes

- ... It may need some revisiting

- ... I can try with my Chrome team hat on, push to figure out the situation on the Chrome side, if you can file issues

- ... This is parts of the snippets that Fergal used the other day for fetchLater()

- ... If this isn't cross-browser implemented, that won't be a viable solution

- ... Good to push on that

- Ryosuke: Which memory API were you talking about

- Yoav: Measure User Agent Specific Memory

- Michal:

- https://github.com/WICG/performance-measure-memory (MDN)

- Yoav: performance.measureUserAgentSpecificMemory() limited to COI

- Michal: Question about adopting this to the group

- ... I heard others may not want it to be part of the web platform

- ... Is lab tooling within WG in general

- ... Concept of a layout shift is worth being in purview of WG

- Yoav: Algorithm without Web API available?

- Michal: Yes, are we interested in discussing the challenge of layout shifts in this group

- Alex: Is there a group for web tools?

- Yoav: Yes, this would be shared concept between the two groups

- Michal: Should we have this even if there are concerns in shipping it to the field.

- Ryosuke: We're not interested in implementing this thing

- ... We wouldn't want this included in deliverables in WG, we'd be on hook to implement

- ... Want to minimize scope as much as possible

- Nic: Including a doc in the WG doesn't require a commitment to implement right?

- Yoav: Correct but it require IP commitments

- Carine: TODO

- Yoav: I've heard no appetite to adopt the concept, we'll follow up

Scheduling APIs

- Scott: Update on scheduling APIs. presented in the past, but wanted to give a high level update

- … breaking up work into smaller pieces can help with responsiveness

- … these strategies have proved very effective inside of Chrome as well

- … Shorter tasks can help responsiveness, but how should they be scheduled?

- … notion of priority gives some control over that

- … used to schedule prioritized tasks

- … modern API that returns a Promise, takes an abort signal

- … shipped this in Chrome 94. Usage is trending up as part of the focus on responsiveness

- … can be polyfilled

- …

- … Geared at improving responsiveness. Yielding to the event loop gives us a rendering opportunity

- … Enables a single large task, that yields to enable other tasks to run

- … Talked about this a few years back and keep hearing about this from developers

- … Concern that yielding can result in long time before they get rescheduled

- … Problem presented in the chart above

- … setTimeout can get in the way of continuations

- … with yielding and continuations, we’d prioritize continuations, so that once the render runs, we’d continue the task we were working on before

- … API shape needs to work well with async tasks

- … takes the same options as postTask so you can define fine-grained control over priorities

- … Yield also supports inheritance - continuations get the same priority as the task that triggered them

- … Example - task controller with a priority. Function loops over items and yields after each item.

- … calling abort would result in the Promise rejecting

- … Saw latency improvements in Facebook experiments compared to the regular React scheduler

- … Other ideas - you want to yield but not block rendering

- … semantics to wait after rendering happened and then resume

- … replaces double-raf hacks and doesn’t force rendering

- … Another use case - want to yield but wait for some timeout

- … requested by developers to essentially have a promise based setTimeout

- … Difference here is that it doesn’t need to be prioritized as the other APIs, but you could specify a priority if needed

- … other use case - Extending the priorities system to other APIs

- … thinking of an API that might make sense

- Olli: Priorities aren't specified anywhere, so we'll need to work on that. Don't match Firefox.

- Scott: Yes we have an open Issue there as well. I started a patch, but got distracted by other stuff.

- Noam H: Can you explain more between yield() and render()

- Scott: Yield doesn't wait for a render to happen. No guarantee. For example, you've updated the DOM and rendering hasn't happened, you call yield(), Chrome can just resolve the promise in the next task. Doesn't wait around.

- ... with render() if you make a DOM update, you're guaranteed the next time the promise is resolved, those updates would've been painted

Crash Reporting

- Andy: This may want to get pushed off to Charter Discussion

- ... Reliability isn't owned anywhere, in any WG

- ... Came up in crash-reporting repo should this be in scope for WebPerf

- ... Is that a Charter thing?

- ... What's the line between performance and reliability?

- ... Tied tightly, a Hang is a Long Task in many cases

- ... Memory crash can be poor memory usage, etc

- ... An error or reliability issue can happen due to performance issues

- ... Came up with reports from hangs causing crashes

- Yoav: Thank you

- ... I think this is in scope for our Charter if we're looking at mission and

- Yoav:

- Earlier we discussed reporting api, etc, which are in WICG

- All of these are in scope but may not have quite enough interest to adopt

- I suspect, any new reporting features, while they may be in scope, may find themselves in a similar position (not enough support for adoption)

- Andy:

- Makes sense.

- Yoav:

- Let’s look at the Charter proposal

- Missing/Scope

- Nic:

- We do not currently discuss reliability

- Alex:

- What did we refer to earlier?

- Crash Reporting, Deprecation Reporting (?), Intervention Reporting

- Who manages that? Us.

- Yoav:

- Web App Sec group also use that and rely on us, as does NEL and other things?

- What is the status of Crash Reporting

- Still in WICG

- When was it last published as a draft? …

- Yoav:

- What Andy is proposing is similar but different, and Andy is looking for a home and asking if Web Perf WG is the place.

- Andy:

- The current charter and many specs “imply” a concept of reliability but not explicitly. Maybe some of the lack of interest is due to lack of explicit mention of it.

- Yoacv:

- Perhaps add a PR to the Charter to see

- Nic:

- (Discuss draft Charter and how to comment/PR)

- (Going through Github issues, tags)

- Carine:

- This is going to be spent out (to AC) soon–

- I would rather ask to file and issue than to make a PR at this point.

- Andy:

- I can do that.

- <Reviewing Draft Charter>

- Yoav:

- Beyond that, there is a Web Perf Primer that may be better to move elsewhere. Will discuss with MDN

- There is also Security and Privacy doc that needs work. Could be spun out… TAG is working on a privacy principles doc… I tried to push some of that there, or have our own document about “deployability principles”. This would be used to help balance tradeoffs vs various principled documents.

- First step would probably be to update this document

- Those are the main things being updated in the current charter.

- Carine:

- Current charter says that we adopting “way of doing things”. One of them is to keep things in draft and not CG… I anticipate that this will get pushback. Not sure what the implications will be on the WG

- Yoav:

- Can you expand on the objections?

- Carine:

- Not really an objection..

- 1. Some things are sup[rised that we dont expect to make Any Recommendations…

- 2. Normally we get out of CR when we have multiple implementations. If we never leave CR, it makes things tricky.

- …Changes to specs may mean returning to CR… so maybe only snapshots, or change

- <Apologies imperfect notes>

- …This may be pushing the boundaries of the process

- We may need to discuss specifics of the CR process, 2x implementation requirements, etc. We will get feedback back from AC review.

- Carine suggests that we go into the AC review with suggestions / proposed solutions.

- Yoav:

- …<POH?> mentioned this in passing but I had not quite expected…

- Nic:

- Do you recommend wording changes to the Charter right now?

- Carine:

- I think I would, I can make some changes to wording as recommended.

- Yoav:

- I would prefer to better understand the “threat model” here first 😛

- Carine:

- The biggest threat is the holes in the process… no other group declared in the charter their intentions the same way we did. (Also pointing out other ways to break the process)

- Yoav:

- There are several ways to this: CR and versioning… We tried that system and it didn’t work well for us. We are in the process of moving away from that and we tried to be upfront about this.

- I need to better understand the process and implications and think through this.

- Carine:

- There is a new process for updating REC without changing version numbers… but somewhat it is also a hole in the process, since there is no check on the new CR for implementation status. The group is trusted with review…

- Yoav:

- …we decided to choose this route because the other was not quite defined, didn’t seem to work… maybe we take another look.

- <Some suggestions on living standards approaches>

- Carine:

- The decisions to make now is:

- Leave charter as-is and hope, or

- Change before the AC review…

- Yoav:

- I prefer to think about this

- Carine:

- I support that :P

- …

Day 3

NEL discussion

- Ian: Survey of open issues in NEL and what's going on

- ... A couple of immediate issues that some may want to discuss

- ... Network Error Logging (NEL) helps site owners understand why users can't reach their site

- ... Reports on network error conditions, i.e. DNS, connectivity, application errors

- ... Set a header on your site, later visits will use header to send error reports

- ... Different than other APIs, as we're reporting on cases where user isn't successfully on the page

- ... Since last year we've partitioned the policy cache, subdomain reports are downgraded to DNS

- … expire policies

- ... 27 open issues that fall into multiple categories

- … tasks, new information types, filtering and privacy issues

- … 5-6 outstanding issues on the privacy front

- ... Signed Exchanges (#99) would be sent through a different path than going through the destination report server, needing different signing requirements.

- ... And signed exchange may have its own Reporting API headers

- ... Request to include Subresource Integrity errors (#155), but NEL may not be the place to do it

- ... Extended DNS errors (#129) there could be additional DNS information, but questions on potential privacy violations - revealing the identity of the DNS resolver to the site

- ... Certificate fingerprints (#127) in cases there are TLS failures

- ... Two issues about minimizing report volume

- ... Filtering reports by path (#124) so you can get volume. #133 is similar but by error type (DNS vs HTTP etc)

- ... We discussed these in April and we'd like to move forward on it, but just need to do the work

- ... Last section is Privacy Issues

- ... First one (#105) there's certain information that's not relevant for the request depending on the type of error it is

- ... We have a potential issue with Cookies (#111, #112)

- ... And two outstanding issues that came up earlier this year by some researchers (#150 and #151)

- ... For Clearing Report Body fields (#105)

- ... For DNS error example (above), we're including things like URL, path and query

- ... Some of these aren't relevant since you haven't even made the request yet

- ... Remove HTTP-level fields

- ... Similarly for connectivity, TCP aborted

- ... The path, query, method, etc haven't been requested yet

- ... For cases where error collector is different origin, may want to clear path/query

- ... Suspect we don't want to do this as the path itself is quite relevant, and site owner has said they'd trust this third party with their error logs

- ... Two issues with Cookies

- ... Then SameSite cookies can be sent cross-origin to a collector

- ... HttpOnly cookies (not readable by site) would be readable by being included in the reports

- ... Should we be taking it on ourselves to modify the header for those reports

- ... Maybe we should be removing these things?

- ... Or if we're not showing the exact stream in the request, is it still useful?

- ... For issue #150

- ... Upshot seems to be two things. One is that NEL reports on requests other than the top-level document

- ... We don't do NEL on things that happen in the background

- ... Regardless of origin, if you're requesting things from CDN and we can't get to them, we should report on those

- ... Second is that usage/presence of DNS-based firewalls could be exposed

- ... I think this is a fundamental to what NEL does

- ... Last one we have is allow limiting information exposed in NEL by URL

- ... When setting up NEL policy, how much information do you want to report?

- ... Maybe you want to exclude certain information depending on where that report is going

- Questions

- Martin: Mentioned the block list example (DNS or any based)

- ... Network fetches are going to fail depending on the blocklist of different scenarios

- ... We have to assume at some level these blocklists are being applied by user-choice

- ... Net effect w/out NEL is that sites know a resource isn't loaded but don't know why

- ... That why is important to keep secret, because it could be a non-retaliation issue

- ... Resources can be blocked for privacy reasons

- ... Users could be retaliated against for them using privacy blocking lists

- ... I'd be uncomfortable providing this information tied directly back to the user

- ... OK with this in aggregate

- Ian: Not include anything blocked on the client's machine (e.g. extensions)

- ... When the UA does everything it would normally do to get a resource, how do we distinguish blocklists from a misconfigured DNS server

- Martin: It reveals more granular information than what was being reported before

- ... e.g. Users using pi-hole

- ... Creates a discriminator for people using those tools

- Scott: How would you know what would cause DNS to fail

- Yoav: Not missing a piece of information, no technical way to detect blocked from server failure

- Scott: Browser extension, pi-hole, ISP filtering, Google DNS blocks, I've not seen a way where we could determine that

- Martin: Concern is with existence of granular information, not the signal

- ... May be a case where DNS does provide information to browser through extended DNS error codes

- ... But most DNS blocking configs would return NXDOMAIN and can't distinguish from others

- Yoav: In that case, any guess of this was blocked by DNS or accidentally failed to resolve via website would be speculative. Website wouldn't know which is which.

- Michal: About retaliation, NEL doesn't issue report in contents of the request, some network errors happen, but they don't' immediately get log from NEL, report is sent out of band "later" (i.e. a couple of minutes)

- ... Not observable

- Ian: Correct

- Michal: Could they cross-reference somehow?

- ... That this particular user had these failures before

- Yoav: You would have to be extremely vindictive to retaliate

- Martin: Comes down to the privacy threat model, should we be giving this information to sites anyway?

- ... Delay gives breathing room

- ... Whereas if you're wanting to provide disconnect between report and action that failed, then a couple minutes of delays won't be adequate

- Ian: Delay is not for any privacy reasons, just the mechanism

- ... Other thing that might be relevant here, 48h timeout on policies

- ... Timewindow you're limited to if DNS blackhole goes up, after 48h we won't send any error reports

- Martin: Questions is should any of this information be available to websites

- Neil: I don't see a way to distinguish where blocking happens

- ... Why is it a problem if web server operator knows there's content filtering in place

- ... Wouldn't know what to do with that

- Lucas: From my perspective, it's all good. If you're a website operator, and some content doesn't load because of a DNS error, maybe the person who loaded that thing just forgot to renew that domain or something. If things start breaking in subtle ways, NEL really helps identify there's an issue.

- ... Whether there's explicit blocking or another subtle issue

- ... As a website operator, I'm seeing all these ad domains are being blocked. I could switch to another ad operator.

- Scott: Only if those own those 3p domains?

- Ian: Those 3p domains won't get reports, unless they registered for NEL for them-sleves

- Barry: If I load jQuery from cdn.jquery.com

- Scott: You wouldn't get reports unless you owned cdn.jquery.com

- Barry: I wouldn't know my own site was broken?

- Ian: Correct