Participants

- Amiya Gupta, Andy Davies, Cliff Crocker, Hugh Crail, Jason Williams, Michal Mocny, Noam Helfman, Yoav Weiss, Annie Sullivan, Carine, Tariq Rafique, Alan Mooiman, Sean Feng, Avi Wolicki, JeB Barabanov, Barry Pollard, Benjamin De Kosnik, Giacomo Zecchini

Admin

- Next Meeting: January 18, 2024 (early slot)

Minutes

Element Timing for containers - Jason/Andy

- Andy: SpeedCurve is a performance monitoring vendor

- … Jason works for Bloomberg, using a custom version of Chromium in their terminal

- … We have some of the same needs for ElementTiming

- … ElementTiming allows us to measure when an element is rendered to the page

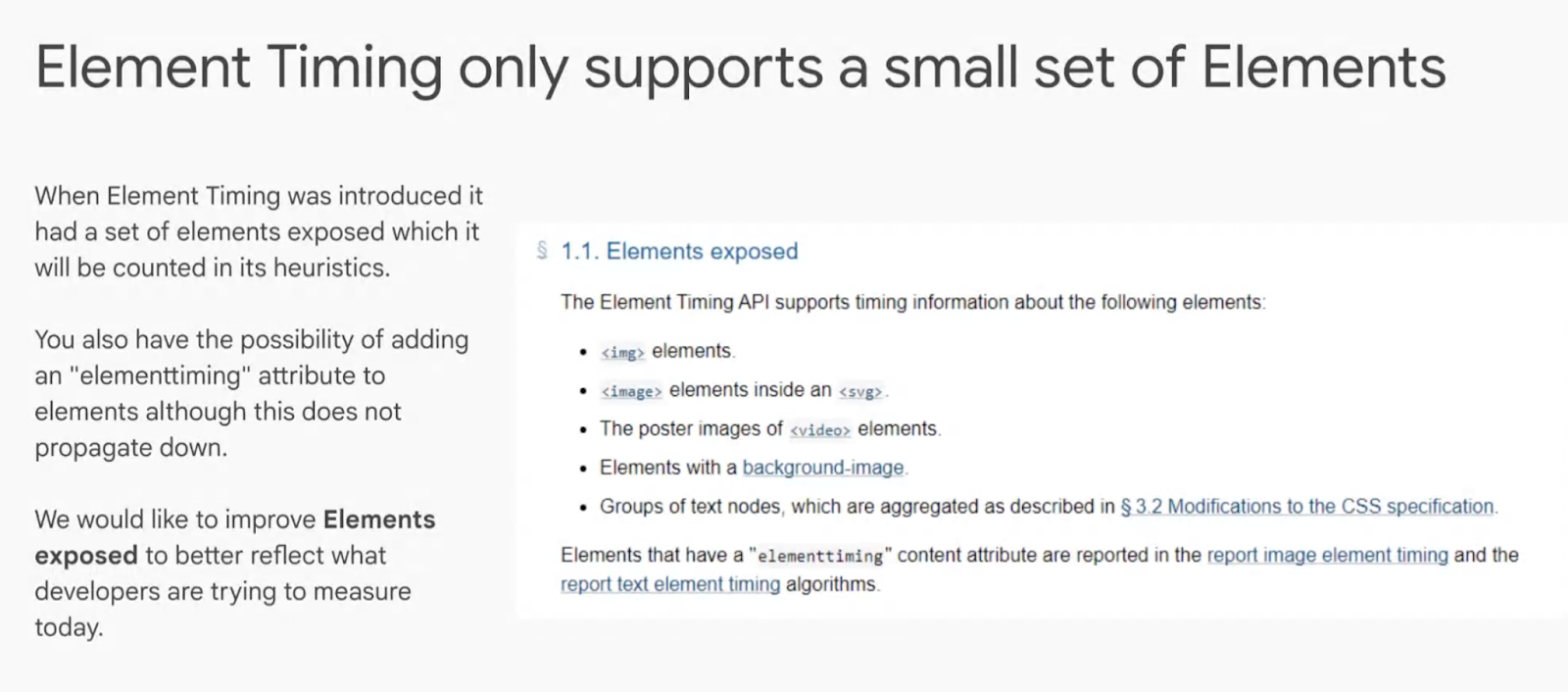

- … One limitation is that it’s only applicable to a small number of element types (images and text)

- … We love that customers can measure what’s important to them

- … But it doesn’t fit the way we build the web today

- … Pages are made of many different components, which are collections of HTML elements

- … No way of timing these as a whole

- … Collections of HTML elements wrapped in a DIV or another element, sometimes as WebComponents

- … Microsoft had challenges with measuring ShadowDOM

- … I’m more interested in measuring at the atomic component level

- … We can measure individual elements of that component

- … e.g. in a product card, we can measure when the image or the text elements rendered

- … But when can users actually use it, e.g. the button that lets them buy the product?

- … Not all of the content may come from the origin; some may come from a third-party origin

- … How do we measure it as a whole and which bits can we measure?

- … Being able to measure when an SVG, MathML, or table element renders would help

- Jason: Software engineer at Bloomberg

- … We use component libraries in the Terminal

- … We embed Chromium

- … Users go from one function to another; a function is a bit like a page and has its own set of metrics

- … In the image here, we’re looking at tabular data, the main piece of content

- … It’s not a table but DIVs in a grid formation

- … When the entire function has loaded, it shows market data; a text node shows the name of the function you’re in

- … But what our users expect to see when they load this is the table content

- … Our developers would love to be able to measure this

- … When should a component be considered rendered?

- … We don’t always construct a view at once; sometimes we stream data in, building up the DOM

- … e.g. for the previous view, we’d start with an empty table and stream data in

- … We do care about the initial paint

- … The cells you saw repaint, because market data is streaming in all the time

- … Some challenges: what if a component is showing an empty data cell? Do we wait for that cell to be populated before we mark the component as fully rendered?

- … Maybe we keep emitting timing events for a component until we know it (or the whole app) is rendered

- … We marked the container with the elementtiming attribute and want to know when it’s painted

- … DIVs load quickly

- … Now we’ve loaded the whole thing and it’s painted

- … But we’re also streaming data in

- … Maybe new data is added as a new row

- … Could be like LCP where entries stop coming through when there’s a user interaction

- … Some of the challenges we face when talking about solving this problem
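A minimal TypeScript sketch of the LCP-like rule Jason mentions, assuming the paints of a container’s descendants have already been collected (for example from ElementTiming entries): the container’s time is its latest paint before the first user interaction. The names `PaintRecord` and `containerRenderTime` are hypothetical, not part of any spec.

```typescript
// Hypothetical record of one descendant paint inside a marked container.
interface PaintRecord {
  renderTime: number; // ms since time origin, like PerformanceElementTiming.renderTime
}

// Returns the container's "rendered" time: the latest descendant paint that
// happened before the first user interaction, loosely mirroring how LCP stops
// reporting new candidates once the user interacts. Undefined if nothing painted.
function containerRenderTime(
  paints: readonly PaintRecord[],
  firstInteractionTime: number = Infinity,
): number | undefined {
  let latest: number | undefined;
  for (const p of paints) {
    // Streamed updates that paint at or after the first interaction are ignored.
    if (p.renderTime >= firstInteractionTime) continue;
    if (latest === undefined || p.renderTime > latest) latest = p.renderTime;
  }
  return latest;
}
```

With paints at 120, 340, and 910 ms and an interaction at 500 ms this yields 340; with no interaction it yields 910, which shows how streamed rows keep pushing the container time later until something cuts it off.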

- Andy: Same challenges - when is the component considered rendered?

- … Render initial data, then data comes in afterwards. We’re interested in the final state.

- … Dynamic data streaming could be treated separately

- … Separately, pages and apps are built on components

- … So we want to measure the experience people are really having on sites and apps

- … Unanswered questions

- … What about the elementtiming attribute on the BODY of a complex DOM?

- … Animations?

- … Should elementtiming be considered for LCP?

- … When should we decide a container is painted?

- JeB: Interesting problem for us at Wix

- … Any Wix website consists of multiple apps and components

- … If we’re talking about LCP, we’re not interested in the particular HTML element that is the LCP element, but in which component or application had the highest LCP

- … So what we do currently is take the attribution data for the LCP element and cross-reference it with additional metadata we have on the page

- … That leads us to the component / widget / application

- … This would give us a native way of doing this

- … A very relevant use case for us

- Noam: I can relate to this idea

- … It’s a real problem we’re dealing with in Office Online when using ElementTiming

- … Two things I think are important

- … One is the container, we have a lot of complex elements with multiple DOM nodes inside them

- … We’d like to mark the container element

- … It would simplify our logic. What we currently do is inject a new element with ElementTiming into the complex container, and treat it as the indicator of when the DOM was rendered or presented

- … We correlate that with the interaction that caused the element to be shown

- … e.g. a list of complex menu items or something like that

- … What do we do with animations? We have components that fade in

- … What we’ve noticed is that ElementTiming reports the time of the first frame of the fade-in

- … (CSS opacity)

- … So it’s not reporting the time we’re interested in; in some cases the element is still transparent

- … We’d like to know when it’s fully opaque

- Michal: One of my questions was about streaming of DOM, building up the table dynamically.

- … In-order streaming of server-rendered HTML

- … Browser knows the element isn’t complete yet, waiting for data

- … Another type of streaming is JS on the page, which happens at arbitrary times. We don’t know when it will start or end

- … ElementTiming you have to apply it once, early, eagerly, and it’ll fire once for that node

- … It already doesn’t do well with dynamic content and changes

- … Once the table cell node has painted, if you dynamically update it in place, it doesn’t work

- … I was eager to hear what had been prototyped

- … and whether it works for dynamic injection of new nodes

- Jason: There’s the server sending content down vs. JS APIs populating it at arbitrary points

- … We operate more in the latter mode

- … JS APIs build out the DOM

- … For ElementTiming, that’s OK: if it paints once and doesn’t emit new entries for us, we are happy with that

- … My example was more if we add a new set of rows

- … We have a prototype where we have a table/grid, and we listened for each cell being painted

- … If a cell updated with data later on, that’s fine, it’s just market data being updated

- … But what if we care about new DOM elements coming into that component?

- Michal: Where it’ll get tricky, is if we’re trying to apply ElementTiming to the container

- … The container doesn’t know when new elements will arrive, so it can’t know when it’s done

- … One proposal was to fire new ElementTiming events for updates, which is not how it’s done today

- … Today the PerformanceTimeline delays firing an entry until it has gathered all of the data

- … It would be interesting to fire entries, then re-fire them with more up-to-date information

- … If we have a container with a set of its children, we report once painted, and not again for an update

- … vs. perpetually reporting a stream of events

- Yoav: One thing I wanted to suggest: right now, with ElementTiming there’s no real difference between applying it in JS vs. HTML

- … For HTML, if you add the attribute in markup, the browser knows all of the element’s children and can keep that accounting

- … For dynamically applied ElementTiming, the browser could theoretically record the element’s children at the time the attribute was added, and report the element once all of those children have painted

- … That could be a model; not sure how tricky it would be to use
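One way to prototype Yoav’s model in userland, as a sketch: snapshot the children present when the container is marked, and report exactly once, when every one of them has painted. The class name is hypothetical, and string IDs stand in for DOM nodes; in a page the snapshot might be taken from the container’s descendants at injection time.

```typescript
// Hypothetical tracker: the set of children is frozen when the container is
// marked; paints of children added afterwards are ignored.
class ContainerSnapshotTracker {
  private readonly pending: Set<string>;
  private latest = 0; // latest renderTime seen among snapshotted children
  private done = false;

  constructor(childIdsAtMarkTime: Iterable<string>) {
    this.pending = new Set(childIdsAtMarkTime);
  }

  // Records a child paint. Returns the container time only on the call that
  // completes the snapshot (reported once, like ElementTiming); otherwise undefined.
  onChildPainted(childId: string, renderTime: number): number | undefined {
    if (this.done || !this.pending.delete(childId)) return undefined;
    this.latest = Math.max(this.latest, renderTime);
    if (this.pending.size > 0) return undefined;
    this.done = true;
    return this.latest;
  }
}
```

An empty snapshot never reports, and rows streamed in after marking are ignored by design, which is exactly the trade-off raised for dynamically built tables.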

- Michal: Could be polyfilled and prototyped with ElementTiming?

- … One tricky thing: if you add ElementTiming to a node that’s already been painted, it won’t fire

- … So you always have to add it at the point of injection

- … Another example where this change in approach could help: even today, one element can have multiple timings for LCP, e.g. a low-resolution placeholder, the first paint of the element, the first animated image frame, and the eventual fully loaded paint

- … Right now we buffer and wait until we have all of those timings

- … Could imagine a stream of timings for one element, with different qualifiers and time values

- Noam: One challenge with animation is that iterating animations may never end

- … Some sort of timeout is needed

- … When to stop tracking?

- … For example, we have certain types of animations: an opacity change (fade effect), and also a slide-in into view

- … Both cases we know the end state of the animation

- … We’re thinking about tracking the animation progress and reporting timing around its end
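A sketch of the timeout idea, assuming the end time of each animation on the element is already known (in a browser this might be derived from the Web Animations API, e.g. `Element.getAnimations()`, but that wiring is not shown): the "ready" time is the end of the last animation, capped at first paint plus a timeout so that never-ending animations still produce a value. `settledTime` and its parameters are hypothetical.

```typescript
// Hypothetical helper, not part of any spec: estimate when an animated element
// (e.g. fading in via CSS opacity) reaches its end state.
// All times are in ms on the same clock as PerformanceElementTiming.renderTime.
function settledTime(
  firstPaint: number,
  animationEndTimes: readonly number[], // use Infinity for animations that never end
  timeoutMs: number,
): number {
  const cap = firstPaint + timeoutMs; // stop tracking after the timeout
  let end = firstPaint; // with no animations, the first paint is the end state
  for (const t of animationEndTimes) end = Math.max(end, t);
  return Math.min(end, cap);
}
```

A 300 ms fade starting at a 100 ms first paint settles at 400; an infinite animation falls back to the cap (1100 with a 1000 ms timeout).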

- Yoav: Animation case is orthogonal to container case

- … Maybe you just want more timestamps because you’re not happy with the first one

- Noam: The first one isn’t what the user expects (fade-in)

- Yoav: Similar to progressive image discussion, where some people care about the first bits, some last, some “good enough” bits

- Noam: We’re similar to what Jason explained, we care about when user thinks the UI is ready

- Yoav: Is it consistently the last one? Or is it sometimes 60%?

- Noam: It’s about perceived readiness; maybe it doesn’t have event handlers yet

- Nic: And that could differ for different people

- Noam: Containers would simplify the developer’s job; no need for dummy-element hacks

- Michal: One of my primary questions was: to what extent is this an ergonomic improvement to ElementTiming, which people are otherwise happy with?

- … Andy mentioned LCP is nice but it’s always a heuristic and we sometimes want more control

- … Maybe this improvement is just a library?

- … But to what extent do we want to trickle these up into the LCP heuristic? E.g. the table example

- … If LCP were just better and solved the use cases directly, would we still lean into explicit marking with ElementTiming because that control is fundamentally important?

- … Coupled or decoupled?

- JeB: Two tracks

- … First is what’s important for the user using the website

- … User-oriented LCP

- … Maybe deciding that all tables should be considered containers, and somehow reflecting that in LCP, could be enough

- … Other track is what’s important for developers

- … Understanding what are the slowest parts of their application?

- … From ergonomic perspective for developers, this is as important as LCP for users

- Jason: Sympathize with the question of where do you draw the line?

- … Your slide had things like SVG and other composite elements that LCP could consider without question

- … A table is semantically that too

- … I think developers will still need to signal various components via some sort of attribute

- … Take a look at the table I showed in the slides

- … I don’t see marking that table as a component as sufficient for developers

- … For our users, they would want to see data is there to say it’s ready

- … Answering both your points, I think it’s both

- … Being able to mark components, this is a benefit

- … We can mark things that we think the user will find important, that LCP is not tracking

- Andy: Consent dialogues and other things that popup

- … Knowing when that interruption is being placed in front of the user

- … Something like a product card could probably fold into LCP

- … But things like consent dialogues are a bit harder

- Michal: In terms of dynamically updating a component and representing the update

- … I’m reminded of soft navigations

- … Say you have a page where only a few elements are updated, and you’re using ElementTiming, is it sufficient to report timings of the newest components?

- … I wonder if we could extrapolate lessons learned from page level slicing

- Noam: If the page has multiple components that load at different phases, e.g. during navigation

- Michal: If I click a button and one cell gets updated, vs. I click a link and one element of the page changes. Relatively similar. How would you represent Element Timing of the component vs. the whole page? The analogy is close enough.

- Noam: We do it in similar ways to what I explained earlier.

- … We have some sort of interaction, “soft navigation”, load content that corresponds to that button click

- … Before we load it, we inject an ElementTiming element with a unique ID corresponding to that interaction

- … Use it for our reporting

- Michal: Are you thinking in terms of largest content, or do you ignore things that are already there?

- Noam: During navigation we have gradual updates, parts on top, bottom, sides. Load at different times. Measure each, take the MAX() to consider when everything is loaded.
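Noam’s approach of tagging markers per interaction and taking the MAX() could look roughly like this sketch. The `"<interactionId>:<region>"` identifier convention is hypothetical; `identifier`, `renderTime`, and `loadTime` mirror fields of `PerformanceElementTiming`. Falling back to `loadTime` covers entries whose `renderTime` is 0, for example cross-origin images served without `Timing-Allow-Origin`.

```typescript
// Structural subset of PerformanceElementTiming used here, so the logic
// can run without a DOM.
interface ElementTimingLike {
  identifier: string; // value of the elementtiming attribute
  renderTime: number;
  loadTime: number;
}

// Groups marker entries by interaction ID and returns, per interaction, the
// latest paint across its regions (when "everything" has loaded).
function readyTimePerInteraction(entries: readonly ElementTimingLike[]): Map<string, number> {
  const ready = new Map<string, number>();
  for (const e of entries) {
    const sep = e.identifier.indexOf(":");
    if (sep < 0) continue; // not one of our interaction markers
    const interactionId = e.identifier.slice(0, sep);
    const t = e.renderTime || e.loadTime; // renderTime may be 0; fall back to loadTime
    ready.set(interactionId, Math.max(ready.get(interactionId) ?? 0, t));
  }
  return ready;
}
```

In a page, the entries would come from a `PerformanceObserver` observing the `"element"` entry type; only the aggregation step is shown here.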

- Yoav: Part of your question earlier was whether this is a convenience compared to spraying element timing on all of the things and figuring out containers afterwards

- … vs. will it enable measuring of full components that are not currently measurable

- … I think some of Andy’s examples point out such elements

- … For SVG and MATH elements it’s clear where they start and end

- … Noam, is there something you weren’t able to measure with injection methods?

- Noam: Struggling with animation still

- … Optimistic we’ll find a way

- … If we find a way, it simplifies ergonomics of using it

- … Trying to think if there are scenarios we can’t solve

- Yoav: I imagine it would increase efficiency

- Noam: We are actually thinking about hacking ElementTiming to work around limitations of Event Timing: using ElementTiming to detect the next INP

- … Using it in a similar way to INP, in the sense that from the event handler we inject an element with ElementTiming, the browser is forced to render it, and it gives us the timing of that render

- … Hack to bypass cases where we can’t use EventTiming API for that purpose

- … Even if we can do that, we still have concerns that frequently injecting DOM elements is not the most efficient way

- … e.g. hovering over a list of items

- Michal: You’re not just describing an interaction that inherently leads to some DOM modification, you’re measuring interactions via adding DOM

- Noam: Correct, e.g. modifying a canvas element, but we have no easy way to measure when it was painted without this technique

- JeB: To add to what Noam said: it’ll be an improvement in terms of ergonomics. But if we’re talking about performance, we’re in an ambiguous situation where, to measure INP, we might cause high INP because we add stuff to the DOM for the purpose of measurement

- … e.g. when we need to cross-reference an element with metadata in the page, just for the sake of being able to identify the element

- … So a native solution would improve performance as well

- Noam: In terms of performance overhead, we’re not sure how bad it is

- … Trying to evaluate it by injecting two elements, then comparing with one element and seeing the difference

- … To see DOM overhead from that

- Yoav: DOM dirtying could have a fixed cost you’re not measuring in that way

- Michal: let’s decouple those conversations

- … using element timing to measure event timing should be separate

- … Animation could be a separate discussion to improve element timing today

- … Then for containers, it’s very interesting. It’s interesting that it could be polyfilled but may have performance implications

- … Maybe we can share a prototype, evaluate performance, etc

- … Keen to see how many of these cases should just apply to LCP

- … If a table’s really dense and small and in-viewport you could heuristically guess it’s a “component”, vs. a more sparse table where each component is separate

- Jason: Agree that we can split those discussions. We need to have a conversation around the hacks we’ve done

- … Sounds like JeB and Noam have workarounds as well

- … Maybe we need to get back to seeing how these things run and what’s the opportunity.

- Michal: JeB, for your "DOM walk" to get the nearest component, how does that compare to the Element.closest() API? You mentioned that running that code can be slow for large DOMs, but there’s an API that can maybe help you there?

- … Maybe you can mark containers and for each timing get it reported to the closest ancestor?
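A sketch of Michal’s suggestion: attribute each ElementTiming entry to its closest marked ancestor and keep first and last paint per container. The resolver is passed in so the aggregation can be tested without a DOM; in a page it could be `(el) => el.closest("[data-component]")?.getAttribute("data-component") ?? null`, where the `data-component` attribute is a hypothetical marker. Descendants still need their own `elementtiming` attribute to produce entries at all, which is the ergonomics gap discussed above.

```typescript
// Structural subset of PerformanceElementTiming; E is the element type
// (Element in a browser, anything in tests).
interface TimedEntry<E> {
  element: E | null; // can be null, e.g. once the node is no longer connected
  renderTime: number;
  loadTime: number;
}

interface ContainerTiming {
  firstPaint: number;
  lastPaint: number;
  count: number; // number of entries attributed to this container
}

// Groups entries by the container key returned by `containerOf`
// (hypothetically backed by Element.closest()), skipping unattributed entries.
function aggregateByContainer<E>(
  entries: readonly TimedEntry<E>[],
  containerOf: (el: E) => string | null,
): Map<string, ContainerTiming> {
  const out = new Map<string, ContainerTiming>();
  for (const e of entries) {
    if (e.element === null) continue;
    const key = containerOf(e.element);
    if (key === null) continue;
    const t = e.renderTime || e.loadTime; // renderTime may be 0 for some images
    const prev = out.get(key);
    if (prev === undefined) {
      out.set(key, { firstPaint: t, lastPaint: t, count: 1 });
    } else {
      prev.firstPaint = Math.min(prev.firstPaint, t);
      prev.lastPaint = Math.max(prev.lastPaint, t);
      prev.count += 1;
    }
  }
  return out;
}
```

Doing this in userland means one ancestor lookup per entry, which is the kind of overhead the group suggested measuring before settling on a native API.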

- Noam: From an ergonomics perspective, I’d love an API that takes a DOM selector and gives me the timing for that element once the selector resolves.

- … Would work for containers as well as async interactions

- … Today we need to do a bunch of work to mark the right element

- … Would be great to have an API that does that natively.

- … Could be polyfilled, which is what we’re doing

- Jason: If this was done in userspace, how destructive would that be for INP? Interested in that

- Yoav: I’ve heard there’s a need here; maybe that need is ergonomics? The container need can be met in userland, but that will have a cost, and so will doing it inside the browser. It’d be interesting to prototype a userland solution and a browser solution and see which one wins. Measure userland overhead before thinking about API shape

- … Just in terms of trying to evaluate the benefit

- … From my perspective, having 4 organizations here with the same need points to needing to push something along in this area

- Jason: What would be the next steps?

- Yoav: From the WG perspective, some form of experimentation with a userland prototype, a browser prototype, or both?

- … At least on the container front.

- … Animations is one thing

- … INP hacks is another

- … On container front, playing around with prototypes and finding places where polyfill fails us or proving the performance difference can drive the conversation forward

- … Once we have more clarity on the need, then we have a question of who’s going to push this forward

- Nic: Is there an open GitHub issue?

- Andy: I’ll open one
