Participants

- Yoav Weiss, Nic Jansma, Barry Pollard, Michal Mocny, Mike Henniger, Mike Jackson, Noam Helfman, Pat Meenan, Sean Feng, Timo Tijhof

Admin

- TPAC

- Registration

- Hotel

- Survey

- Working Group is meeting Mon, Tues, Thurs

- Next call - July 10th, 6am PT, 9am ET, 10pm JST (Service Workers special)

- Calendar invite incoming

Minutes

Deployability Principles - Yoav

- Yoav: Wanted to talk about data exposure and its relationship to privacy and security

- ... The TAG has a Privacy Principles document that we've been working with them on

- ... It includes a section on ancillary uses that we've worked with them on shaping

- ... Defines all kinds of uses of data and different types of data being used in ancillary ways

- ... At the same time, it does not include trade-offs; it only looks at things through a privacy lens

- ... (this is by design)

- ... I thought it would be good to talk about the different trade-offs, and make sure we take all points of view into account

- ... Being able to deploy applications at scale needs to be considered when making decisions

- ... We want to get a holistic view of all of the aspects

- ... Opened an issue on the TAG's Design Principles document, as the Privacy Principles document wasn't the right venue

- ... The issue was closed; from the TAG's perspective, the ancillary uses section was "good enough"

- ... I don't think that's the case. I think there are different reasons to capture ancillary data that aren't covered

- ... There are advantages and disadvantages to having APIs that capture ancillary data when it's available elsewhere

- ... Privacy Principles doc says there's no consensus on it

- ... Would love to come to a principled stance there

- ... I think we're learning a lot about mitigation methods on both the privacy and security sides of things

- ... A confidence signal for NavTiming was presented a few weeks back

- ... There are various privacy-preserving ways we can expose this signal, usable in aggregate

- ... There's a lot we can document on that front, outline different mitigations

- ... Help inform future generations of features

- ... Want to have a principled stance for all of the trade-offs at play

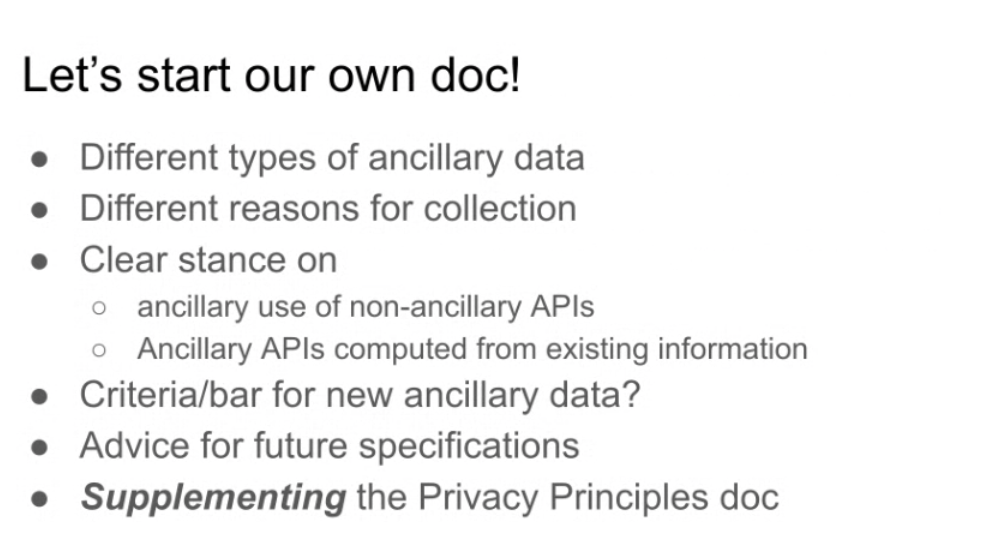

- ... Would like to start our own document

- ... Maybe discuss what the bar is for exposing new ancillary data

- ... PP doc only talks about data minimization

- ... But there are some cases where new data can be exposed if the use case is a very strong one

- ... Advice for future specs: a paved path they can follow

- ... Supplement the PP document with all of these things

- ... Not trying to replace or override it, just adding things that aren't discussed there and that I think should be documented

- ... The path I see is to put together a task force of interested folks, iterate on a document, and put content in it

- ... Get it approved by the working group

- ... Bring it to the AC as an official principles document

- ... That's it, more or less

- ... Open question if we need to add this to the charter

- ... Privacy + Security document started by Ilya many years ago

- ... Can we revamp it for this purpose?

- Sean: What I don't understand is why it can't be added to the PP document?

- Yoav: The folks that are editing that document don't want it there (it's out of scope)

- Sean: It feels weird that there's a PP document and we're supplementing it with our own document

- Yoav: In some ways it would've been good to have it in a single document, but it's good for us to focus on the trade-offs for performance, monitoring, deployability, and accessibility (from their perspective)

- ... I think there's [TODO]

- Sean: In the future, there could be the Privacy Principles document, with a WebPerf document that adds to it

- Yoav: The way I see it being used: if we want to add a new API tomorrow that exposes data in ways that go against the PP doc, but in a way we believe is crucial for the web to be viable

- ... No specific example in mind, but imagine a case

- ... It would be good to have a principled stance outlined, so we can make the right call on whether we should or shouldn't expose it

- ... The PP doc only talks about the minimal data possible, without expanding on what that means in practice

- Sean: I need to read through PP a bit

- ... If we make a task force, I'd like to be involved

- ... I want concrete cases where supplements are needed

- Yoav: Maybe the conclusion from the exploration would be that we don't need it

- ... Maybe we don't necessarily want to add that to the charter yet, exploration phase first

- ... Open to reaching that conclusion

- Nic: I would be supportive if the group forms a task force

- ... What other official documents are there? PP

- Yoav: Design Principles

- Nic: So we'd propose to be the official "third" document?

- Yoav: Yes. Beyond that, an accessibility document could be useful

- Ian: I like this idea. Given the nature of the work this group does, we should be the people advocating for monitoring and deployability

- ... I think this is going to be an important document

- ... I would love to see needs of Reporting APIs as a part of this

- ... Is there more to a document like this than privacy, e.g. what makes an API monitorable, or what makes deployability simpler?

- Yoav: Worthwhile to think through what other areas we can cover

- Ian: Regarding Sean's question of why not just put it in PP, I think there's more to say beyond what contrasts with it

- ... I can volunteer for the task force

- Timo: I would be interested in joining the task force as well

- ... What I'm interested in (I need to read the TAG's PP) is whether we could also augment the PP doc itself

- ... Are there signals we've seen already?

- Yoav: My past attempts at doing that were deemed out of scope

- ... It is possible that one of the outcomes is that we need to amend PP, and we can argue for that

- ... Try to move sections from this document directly into PP if that makes sense

- ... Not sure it's a process issue so much as that my conversations were scoped down: they're only looking at things at the privacy level

- Timo: Are we looking to describe lower-level things, or making exemptions?

- Yoav: I think having contradictory principles is part of life

- ... I'm not sure all the principles in the PP doc align with each other

- ... I could see us going into more detail

- ... On various uses of data and examples

- ... If I'm just making a principle up, "the web should be deployable at scale" may or may not contradict the data minimization principles in the PP doc

- ... We'll have to talk through examples to see how we manage that trade-off

- ... Want it to be good for users, the web at large, businesses at scale, etc.

- Michal: I volunteer for the task force

- ... I'm reading through the PP doc; it's very well written and I do like it. There are some points I would contend with. They do carve out that there is no consensus on ancillary data uses.

- ... But they conclude that, without a consensus, we should lean in one direction

- ... Data minimization applies to "personal data", which is also defined there; I think there's room for interpretation

- ... I think it's pretty obvious that this group will fall into one of the two directions already carved out in the ancillary uses section

- Yoav: That's why I think we need this document: beyond the sentence saying "we think that", there's more to expand on and more weight to put behind the argument

- Ian: We're in general agreement with Privacy Principles

- ... We don't have a stance contradictory to it, nor do we want to supersede

- ... Our principles may come into conflict and we need to take them together and articulate them

- ... None of this is contradictory with the document in whole

- Yoav: It's all about trade-offs

- ... Regarding charter questions, we can wait until we know the shape of the document more precisely

- Nic: Next steps, smaller group that meets and brings status back to larger WebPerfWG?

- Yoav: Yes, maybe some in-person hacking at TPAC, bring it back to WG regularly

Compression Dictionaries Updates - Pat

- Pat: Compression Dictionaries is in WG last call; it's on its way to RFC

- ... One discussion point

- ... The draft (what's going to RFC and what ships) defines two content-encodings: one for Brotli (up to 50MB) and one for Zstd (up to 128MB)

- ... Spec allows for future content-encodings that can be dictionary-negotiated

- ... What we need to decide is what ships with the browsers

- ... For HTTP generally, no desire to limit it

- ... The concern is that if we ship both with no guidance or preference, sites are likely to deploy a mix of Br and Zstd, and new clients will need to implement both content-encodings. They can't ship just Br or just Zstd

- ... On the flip side, if Chrome ships only Br and Safari ships only Zstd, for example, sites will need to support both Content-Encodings for full support

- ... Is there value in shipping only one Content-Encoding?

- ... As things stand today, with Br and Zstd, Br(11) compresses about 10% better than Zstd

- ... If you happen to be in the 50MB to 128MB window, you may want to use Zstd

- ... There are reasons you may want to pick one or the other; if not, you may just flip a coin or have no preference

- ... What I've heard more recently, when Chrome shipped Zstd: on the server side, Br (levels 1-11) and Zstd (levels 1-22) use roughly the same CPU if you aim for the same target size

- ... There may be latency reasons for streaming content

- ... It may also come down to how each integrates with the server, e.g. one handles memory better

- Yoav: Sounds like there are current benefits to having both. If you can afford the CPU and you're doing diffs out-of-band, Br gives better compression. If you have very large files, then Zstd is the way to go.

- ... From my perspective the cost here is on the implementors that will have to implement both encodings

- ... Chrome and Firefox already support Zstd (not sure about Safari)

- Pat: If both of those support both, and Safari picks just one, then origins could get full coverage by deploying only that one

- Yoav: Unclear to me what the cost delta here is for implementors. Naively it doesn't seem huge.

- ... Seems beneficial to developers and end-users

- ... I would say supporting both makes sense

- ... Similar to how we have Content-Encoding itself, and we keep adding more. For 20 years it was gzip; now we have more

- Pat: And we have Br and Zstd in regular Content-Encodings

- ... Right now Br has had a natural monopoly just because Zstd was only added recently

- Barry: For browsers that don't support Zstd, you'd be tying adoption of one feature to another

- ... Second point: would limiting support to Brotli make it harder for us to support future encodings?

- Pat: Dictionary negotiation supports arbitrary Content-Encodings

- ... I think someone may build something that compresses WASM or AI models better than Zstd or Br.

- ... What we spec'd supports Br delta up to 50MB and Zstd up to 128MB, but nothing stops a new revision of that. Something could support e.g. 2GB using a new Content-Encoding

- ... I do expect there'll be a lot more in the future

- ... For this initial launch window, do we want to nudge in a specific direction or let the web figure out what's best?

- ... I'd prefer to ship both and see what works

- Barry: Limiting it now feels not-future-proof

- Noam: I have experience with Brotli. Do you have any experiments using Zstd with Compression Dictionaries in current trials?

- Pat: Yes, one I know of

- ... If we had to pick, we'd pick Br, as it compresses better in extreme cases and it's used in most of the trials

- ... Meta prefers Zstd in their production infrastructure (and they built it)

- Noam: I'm not against allowing everything, unless it limits completion of it by browser vendors

- Pat: I'm not aware of anyone else working on it. Mozilla and WebKit support this, but Chrome is the only one with active development and deployment plans

- ... We expect to see it launch shortly after RFC

- ... Working through ResourceTiming issues, to make sure reporting of everything is accurate

- Noam: In a theoretical future case, we may have 10+ delta compression efforts

- ... Does the user have a preference, or is it up to the responder/CDN to provide the right delta?

- Pat: Depends on whether it's done dynamically or ahead of time

- ... As part of the build, you'll decide what encodings to use and build those

- ... And server would respond based on what the client advertises as supported

- Noam: In the current definition, the client accepts the first response

- Pat: The client accepts the dictionary, then advertises all the content encodings it supports

- ... e.g. br-d and zst-d

- ... The server sees what the client advertises and what's available, and decides what it will serve (or neither)

- ... Client doesn't pick first, it says everything it supports

- Yoav: For use-case-specific encodings (e.g. WASM or ML models), it may be a choice between one of the generic mechanisms and the specific ones, but not also "gzip" and "deflate" dictionaries

- Pat: Servers decide to build whatever is best for them

- ... Now if we have clients each supporting only N of the encodings, all different, then servers need to support the union of all of those encodings

- Noam: A server could support both, depending on the content type (e.g. ML models vs. JavaScript resources)

- Pat: Even today you may decide to use Zstd for WASM over 50MB and Br for everything under 50MB

- Barry: We should remember, if we launch this, to put the advice in MDN with some guidance

- Pat: We can document the size limits. I hesitate to document compression differences as part of the spec, as they may just be a function of the compressor code and not the algorithms themselves

- ... We should have some best practices

- ... Sounds like there's general consensus that we don't limit it

- Noam: I think it would be a concern if we did limit it

- ... We personally haven't tried using Zstd yet but we plan on doing it

- ... If it was limited, it would limit our ability to experiment, we'd prefer both
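A minimal Python sketch of the server-side choice Pat described: the client advertises every dictionary encoding it supports, and the server picks from the encodings it has built for the resource, or serves neither. Assumptions: the tokens `br-d`/`zst-d` are the names used in this discussion (the final RFC may register different tokens); the size ceilings are the figures quoted above, applied to resource size as in Pat's WASM example rather than taken from normative spec text; the preference order and the function names `parse_accept_encoding` and `choose_encoding` are hypothetical. Checking which dictionary the client actually holds is left out.

```python
from __future__ import annotations

MB = 1024 * 1024

# Encoding tokens as named in the discussion; the registered tokens may differ.
# Size ceilings are the figures quoted in the meeting, applied to the resource
# size as in the WASM example (an assumption, not normative spec text).
SIZE_LIMITS = {"br-d": 50 * MB, "zst-d": 128 * MB}

# Hypothetical server preference: Brotli first (it compressed ~10% better in the
# numbers mentioned), then Zstd for resources too large for the Brotli limit.
SERVER_PREFERENCE = ("br-d", "zst-d")


def parse_accept_encoding(header: str) -> set[str]:
    """Return the content-codings a client advertises, skipping any with q=0."""
    codings = set()
    for part in header.split(","):
        token, *params = [piece.strip() for piece in part.split(";")]
        if not token:
            continue
        q = 1.0
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0  # treat a malformed weight as "not acceptable"
        if q > 0:
            codings.add(token.lower())
    return codings


def choose_encoding(accept_encoding: str, prebuilt: set[str], resource_bytes: int) -> str | None:
    """Pick a dictionary encoding that the client advertises, the server has
    built for this resource, and whose size ceiling fits.

    Returns None when nothing matches, meaning "serve without a delta".
    """
    offered = parse_accept_encoding(accept_encoding)
    for coding in SERVER_PREFERENCE:
        if (
            coding in offered
            and coding in prebuilt
            and resource_bytes <= SIZE_LIMITS[coding]
        ):
            return coding
    return None


if __name__ == "__main__":
    both = {"br-d", "zst-d"}
    print(choose_encoding("gzip, br, br-d, zst-d", both, 2 * MB))   # br-d
    print(choose_encoding("gzip, br, br-d, zst-d", both, 80 * MB))  # zst-d
    print(choose_encoding("gzip, br, br-d", {"zst-d"}, 2 * MB))     # None
```

Because the server only intersects what it has built with what the client advertises, a client advertising both encodings lets each origin build whichever suits its content, while a client advertising only one means origins must build that one to reach it.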