Participants

- Yoav Weiss, Nic Jansma, Dan Shappir, Jacob Groß, Keita Suzuki, Noam Rosenthal, Rick Klein, Shunya Shishido, Sia Karamalegos, Yoshisato Yanagisawa, Carine Bournez, Dave Hunt, Benjamin De Kosnik, Mike Henniger, Rafael Lebre, Noam Helfman

Admin

- Please add your name if you're attending

- Please add any proposed topics if you'd like to present or discuss things

- Next meeting: July 18, 2024 at 8am PST / 11am EST / ??

Minutes

ServiceWorker Timing

- Noam: We had a meeting with a similar agenda a few years ago

- ... It was a bit more hypothetical

- ... We discussed what a ResourceTiming (RT) entry represents: a response or a request?

- ... Decided RT entry represents a "fetch"

- ... In spec terms, when you have a ServiceWorker (SW), it can fall back, and the client just fetches normally from the network

- ... In every other case, we have at least two fetches or responses. To be more specific: you get a fetch event, you handle it, you fetch something else, and you pass that response on as the event's response

- ... Classic workflow in a SW

- ... In this case there are two fetches: the internal fetch and the external fetch

- ... In spec land, a new response is created for the client, and the body stream is teed to both the SW and the client

- ... The response is copied, and the body is teed

- ... The interesting bit is the connection info

- ... This includes things like DNS lookup start and nextHopProtocol

- ... This was not in the spec originally

- ... As it stands, an RT entry represents a fetch, and ConnectionInfo is attached to a particular fetch

- ... ConnectionInfo inside a SW is not forwarded to the client

- ... Partly because it would yield negative values in some cases

- ... If you handle a fetch event by fetching internally, the values would be positive, but what you're really forwarding is the response, which could have been in the cache originally

- ... You could have cached the response during SW initialization and only forwarded it in the fetch event

- ... What we do today is forward everything and clamp everything. This is not per spec; it's just Chromium

- ... We coincidentally pass on nextHopProtocol because of the clamp

- ... We also pass it on for regular HTTP cache responses, even though the cache itself has no hop, simply because we pass on connection info
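The rebasing and clamping described above can be sketched as follows. This is a minimal illustration, not Chromium's code: it assumes SW-side timestamps are relative to the SW's `performance.timeOrigin`, rebases them onto the client's time origin, and then clamps them to the client entry's `startTime` (the clamp target Noam mentions later in these notes). `ConnectionTiming`, `rebase`, and `forwardConnectionTiming` are hypothetical names.

```typescript
// Hypothetical subset of PerformanceResourceTiming connection fields,
// each a DOMHighResTimeStamp relative to some time origin (ms).
interface ConnectionTiming {
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
}

// Rebase a timestamp from the SW's time origin onto the client's.
// Both origins are absolute times in ms (like performance.timeOrigin).
function rebase(t: number, swTimeOrigin: number, clientTimeOrigin: number): number {
  return swTimeOrigin + t - clientTimeOrigin;
}

// Illustrative only: rebase each SW-side value, then clamp it so it never
// precedes the client entry's startTime. Without the clamp, a response that
// was fetched (or cached) before the client asked for it would produce
// values earlier than startTime, i.e. "negative" relative to the client fetch.
function forwardConnectionTiming(
  sw: ConnectionTiming,
  swTimeOrigin: number,
  clientTimeOrigin: number,
  clientStartTime: number,
): ConnectionTiming {
  const fix = (t: number): number =>
    Math.max(clientStartTime, rebase(t, swTimeOrigin, clientTimeOrigin));
  return {
    domainLookupStart: fix(sw.domainLookupStart),
    domainLookupEnd: fix(sw.domainLookupEnd),
    connectStart: fix(sw.connectStart),
    connectEnd: fix(sw.connectEnd),
  };
}
```

With a SW time origin of 1000, a client time origin of 1500, and a client `startTime` of 20, a SW-side `connectStart` of 100 rebases to -400 and is clamped to 20, while a `connectEnd` of 600 rebases to 100 and survives. Anything that happened before the client's request collapses onto `startTime`, which is why forwarded values can look valid without being meaningful.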

- ... There was a proposal on the agenda to expose the RT entry of the internal fetch as an attribute on the external fetch

- Yoav: Right now there's divergence between spec and Chromium implementation?

- Noam: Yes

- Yoav: How backward-compatible would it be to match the spec, or some other behavior we'd like?

- ... What do we think would break if we changed nextHopProtocol (NHP) and connection times?

- Noam: It's kind of broken anyway because it clamps

- ... It would break for people who rely on this information and know that their SW does a fetch inside its fetch event handler

- ... They'd need an attribute that surfaces the internal fetch

- Yoav: And they'd need to change the way they collect data

- Noam: What they have now would be polyfillable

- Yoav: They'd need to deploy the polyfill

- Noam: We don't have the original entry today

- ... And it doesn't work correctly for original responses

- Yoshisato: You want to add a field or something to expose internal fetch?

- ... It won't be set if it's coming from cache?

- Noam: It would separate the fetch from the cache from the fetch from SW.

- ... Both would be available to you

- Yoshisato: How do you handle a fetch from a cache vs. a new response?

- Noam: For the SW, the RT information has to be cached together with the response. Then, when the response is copied from the SW, that info is passed along, and its times would have to be updated to the client's time origin.

- Yoav: There's also the possibility of the SW making up responses, in which case there will be no linked RT entry

- Noam: No Connection Info

- Yoav: Do the RT entries that a SW currently exposes match the internal fetch?

- Noam: Absolutely. If you call performance.getEntries() in SW code, that just works

- ... But it's a bit clunky to connect that with the resource from the outside. You need to postMessage() it, or add it as a header or as Server-Timing, which is a bit awkward.

- Yoav: So we're not breaking those in any way, just linking to them from the main window?

- Noam: Correct
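The postMessage() workaround can be sketched on the client side as a join by URL. This is a minimal sketch under several assumptions: the SW collects its entries (for example with `performance.getEntriesByType("resource")`) and posts them together with its `performance.timeOrigin`; the message shape `SwTimingMessage` and the function `matchSwEntries` are hypothetical; and when several SW entries share a URL, the one whose absolute start time is closest to the client entry's wins. URL-plus-time matching is only a heuristic, which is part of why a direct link from the client entry would be more reliable.

```typescript
// Minimal subset of a resource timing entry used for matching.
interface EntryLike {
  name: string;            // the resource URL
  startTime: number;       // ms, relative to the sender's time origin
  nextHopProtocol: string;
}

// Hypothetical message the SW posts to the page: its own entries plus its
// performance.timeOrigin, so the page can place them on an absolute clock.
interface SwTimingMessage {
  timeOrigin: number;
  entries: EntryLike[];
}

// For each client entry, pick the SW entry with the same URL whose absolute
// start time is closest to the client entry's absolute start time.
// Entries with no same-URL SW entry map to null.
function matchSwEntries(
  clientEntries: EntryLike[],
  clientTimeOrigin: number,
  msg: SwTimingMessage,
): Map<EntryLike, EntryLike | null> {
  const result = new Map<EntryLike, EntryLike | null>();
  for (const c of clientEntries) {
    const clientAbs = clientTimeOrigin + c.startTime;
    let best: EntryLike | null = null;
    let bestDelta = Infinity;
    for (const s of msg.entries) {
      if (s.name !== c.name) continue;
      const delta = Math.abs(msg.timeOrigin + s.startTime - clientAbs);
      if (delta < bestDelta) {
        best = s;
        bestDelta = delta;
      }
    }
    result.set(c, best);
  }
  return result;
}
```

The example URLs in any test data are placeholders; a real deployment would also have to cope with the SW having been restarted (a new time origin) between the fetch and the message.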

- Noam H(elfman): Is the plan to now have more entries, or just standard information on a single entry?

- Noam: Probably, later, the entry would have an ".originalEntry" inside it

- Noam H: A pointer to the fetch from the worker? Would you consider adding another entry?

- Noam: The entry already exists in SW, just doesn't exist in the client's timeline

- ... The link is the useful part here

- ... We could add a soft-link into timeline and give an ID

- Noam H: So the link would only be added to fetch requests that have their own fetch?

- ... If it reads direct from cache, it'd be empty?

- ... If there's a lingering fetch that's not completed while it's starting?

- Noam: You only get RT entries once the fetch is done

- Noam H: If original connection did not complete, would it still exist? Not sure if a common scenario

- Noam: Not sure how it would work, as you're teeing the response

- Noam H: E.g., a chunked-encoding response

- Noam: When body is done reading from server, then both SW and response stream will be closed at the same time

- ... If you're taking response and constructing a new response then it's not the original response any more

- Noam H: Those using .respondWith()

- Nic: A clarification around cache responses. Let’s say the SW fetched a resource and serves it a day later. Would the original entry be for the original request from the day before?

- Noam: Good question. I think the use case is not that, but a SW that was awake while the client was requesting. Both ways are valid, but I'm not a fan of putting monotonic times on cached things

- Nic: Very negative times

- Noam: It could get nonsensical. It makes more sense if these timings came only from the memory cache

- Noam H: So only if the connected response is not from the cache? Do we care about the connection that put the thing in the cache?

- Noam: Not taking it from the cache seems like a good first iteration, i.e. if it’s attached only to the response object and not to a cache object

- Nic: Timing of things in the disk cache is less relevant

- Noam: Makes sense

- Nic: You mentioned NHP issues. Would that be a separate thing to fix, i.e. emptying that value out in case of a disk cache read?

- Noam: Same for connection info

- Nic: So I was imagining that the original entry would be null. In addition, would all the relevant timestamps be nulled?

- Noam: Right now they are clamped to the startTime

- Nic: We have an expectation that even for zero-duration phases the timestamp is there. But NHP could and should be empty

- Nic: So the original entry would only be available in the case of a non-disk-cache, non-constructed entry?

- Noam: Yeah. Something that was HTTP fetched.
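The rules converged on above can be summarized as a small decision function. This is a sketch of the discussion, not a spec: `ResponseProvenance`, `forwardedFields`, and `exposeOriginalEntry` are hypothetical names, and, per the discussion, the client entry's timestamps are never nulled (they stay present and clamped), so they are not handled here.

```typescript
// Hypothetical: where the response handed to the client came from.
type ResponseProvenance =
  | "network"      // HTTP-fetched by the SW while serving this request
  | "disk-cache"   // read from a persistent cache
  | "constructed"; // built by the SW itself, e.g. new Response(...)

interface ForwardedFields {
  exposeOriginalEntry: boolean; // would a link to the SW-side entry exist?
  nextHopProtocol: string;      // NHP to report on the client entry
}

function forwardedFields(
  provenance: ResponseProvenance,
  swNextHopProtocol: string,
): ForwardedFields {
  if (provenance === "network") {
    // Only a real HTTP fetch has a meaningful original entry and hop.
    return { exposeOriginalEntry: true, nextHopProtocol: swNextHopProtocol };
  }
  // Disk-cache reads and constructed responses: no original entry, empty NHP.
  return { exposeOriginalEntry: false, nextHopProtocol: "" };
}
```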

- Nic: From a RUM perspective we have different teams that look at the data - both for user experience and operational reasons.

- ... Getting those times would help the operational people get a better sense of e.g. DNS timing

- Noam: Are you reporting that from inside the SW?

- Nic: It’s hard, so we’ve only done it with one customer. It’s easier not to ask folks to modify their SW code

- Yoav: What we mostly discussed here was RT issue 363, but we also want to discuss how static routes affect RT data (issue 389)

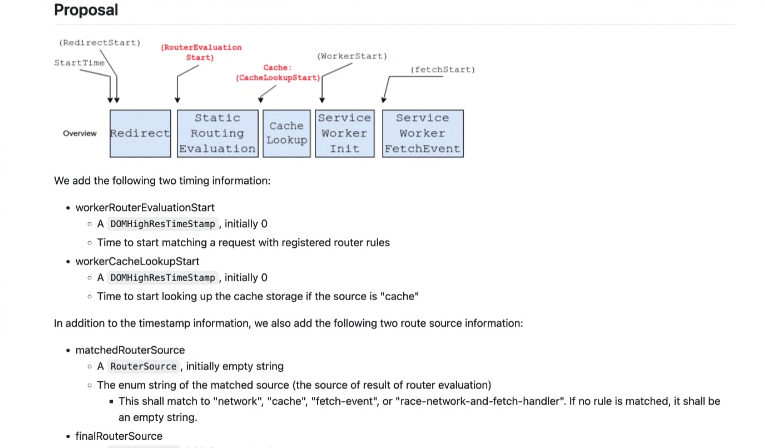

- Yoshisato: Our proposal is the ServiceWorker Static Routing API, which offloads some of the routing to the browser process

- ... Otherwise, we need a sandboxed process for the SW

- ... People want to minimize sandboxed processes, which is the motivation for the Static Routing API

- ... We want to make sure it's beneficial to users and improves performance

- ... We propose adding timing information from the Static Routing API to ResourceTiming

- ... The proposal is still open to change

- ... With the Static Routing API, people can register routes from the SW that offload the execution to the browser process

- ... Each route has a condition and a source

- ... The condition has a pattern; if it matches, the request goes to the source

- ... Four sources available: network, cache, fetch-event, race-network-and-fetch-handler
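A toy model of the condition/source matching makes the evaluation-cost discussion that follows easier to reason about. This is a sketch under stated assumptions, not the browser's implementation: a pathname prefix stands in for the real URL-pattern conditions, rules are assumed to be checked in registration order with the first match winning, and `Rule`, `evaluateRoutes`, and `rulesChecked` are hypothetical names. (In Chromium, routes are registered from the SW's install event via `event.addRoutes()`; this sketch does not model that API.)

```typescript
// The four sources listed above.
type RouterSource =
  | "network"
  | "cache"
  | "fetch-event"
  | "race-network-and-fetch-handler";

// Toy rule: a pathname prefix stands in for the real condition.
interface Rule {
  pathPrefix: string;
  source: RouterSource;
}

interface RouteResult {
  source: RouterSource | null; // null: no rule matched
  rulesChecked: number;        // proxy for evaluation cost
}

// Check rules in order; the first matching rule decides the source.
function evaluateRoutes(rules: Rule[], url: string): RouteResult {
  const path = new URL(url).pathname;
  let rulesChecked = 0;
  for (const rule of rules) {
    rulesChecked++;
    if (path.startsWith(rule.pathPrefix)) {
      return { source: rule.source, rulesChecked };
    }
  }
  return { source: null, rulesChecked };
}
```

In this toy model a request that matches nothing still pays for checking every rule, so with thousands of rules the cost for unmatched URLs grows with the rule count; that is the kind of regression exposing evaluation time is meant to reveal.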

- ... We'd like to propose timing info for each case

- Yoav: If I'm thinking about this from Noam's lens of looking at each RT entry as representing a fetch

- ... With Service Worker Static Routing (SWSR), evaluation belongs to the main window fetch, and we could have one, two, or three fetches for each resource

- ... (Never mind, still just two)

- ... SWSR evaluation belongs to the main window

- ... So this is all extra information on the main window RT entry

- Yoshisato: For the network rule, people want to know how much time SWSR evaluation took

- ... They could have thousands of rules, causing a performance regression

- ... They want to know how long the evaluation itself takes

- ... For the race rule, if the SW wins, network information is also surfaced

- ... For the cache rule, if the cache exists, SWSR evaluation time should be measured

- Noam: Cache lookup isn't specific to SWSR, though?

- Yoshisato: A cache lookup doesn't need to run the fetch handler

- Noam: Is it different from the Cache API inside the SW?

- Shunya: It basically uses the Cache API, but accessed directly from the browser process rather than through regular API usage in the SW fetch handler

- Noam: From a client perspective it's the same thing, maybe cacheLookupStart is valuable regardless of static routing

- Yoav: Is this similar to HTTP cache lookups?

- Yoshisato: This is the cache specified in the SW spec

- Yoav: So just the SW cache lookup?

- Yoshisato: It can be used outside of SWs, but here the SW is using it

- Yoav: The Cache API. Could it be useful for regular fetches?

- Noam: It can't, because in a SW you can call Caches.xyz yourself; this is specific to static routing

- Yoav: You could add UserTiming or something

- ... This cache lookup is only relevant for the "cache" rule?

- Yoshisato: Yes

- ... Only visible in cache rule

- Yoav: We talked last time about router evaluation: we want to expose it to folks in order to highlight when too many routes slow things down

- ... They want to know how long it takes, in order to optimize

- ... Is there something similar for cache lookup, e.g. if they have too many things in the cache? I would assume lookups are fast regardless

- Shunya: We received some feedback from partners, they see in some cases cache lookup is slower than regular network requests (maybe on low-end devices)

- ... Their feedback is they're skeptical about using cache lookup

- Yoav: Basically it's not that developer can speed up cache lookups, but they could avoid cache rule entirely if their end-users have too many low-end devices with slow caches

- Shunya: One aspect

- ... We thought it's good anyway to expose them and make how resources are fetched measurable

- Yoav: There are some trade-offs

- Shunya: We believe cache lookup is faster on many networks

- Yoshisato: Low-end devices may not be faster in cache

- Noam: Useful to know it went to cache lookup regardless of how long it takes

- ... It's also useful to expose the rule itself

- Nic: From a RUM perspective that would help us know what the rules were

- Shunya: A question about route evaluation time. We got feedback from partners about the cost of static routing itself. However, so far we see that in most cases it’s lower than 1ms, so mostly negligible

- Yoshisato: But it happens for every resource fetch, so even 1ms can add significant latency

- Yoav: Good point, as developers may have a hard time putting a price tag on 0.8ms, but if you multiply it by all blocking resources, that could add up to 100ms

- ... Maybe it makes sense to somehow aggregate it

- ... Also, some requests happen after the page is usable
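Yoav's arithmetic, together with the caveat about requests made after the page is usable, can be sketched as an aggregation over render-blocking resources. This is a sketch under assumptions: `renderBlockingStatus` is an existing Chromium ResourceTiming field, but `routerEvaluationDuration` is a hypothetical precomputed per-entry value standing in for whatever router-evaluation timing the proposal ends up exposing. With 125 blocking resources at 0.8 ms each, the total is 0.8 ms × 125 = 100 ms.

```typescript
interface RoutedEntry {
  name: string;
  renderBlockingStatus: "blocking" | "non-blocking";
  routerEvaluationDuration: number; // hypothetical, in ms
}

// Sum router evaluation cost, by default only over render-blocking
// resources, since requests made after the page is usable matter less
// for user-visible metrics.
function totalRouterCost(entries: RoutedEntry[], blockingOnly = true): number {
  return entries
    .filter((e) => !blockingOnly || e.renderBlockingStatus === "blocking")
    .reduce((sum, e) => sum + e.routerEvaluationDuration, 0);
}
```

Restricting the sum to blocking resources is one possible aggregation; summing up to milestones such as FCP or LCP, as suggested below, would be another.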

- Noam: I would suggest, since it's not clear whether this is a perf bottleneck in practice, that it be recorded in CrUX before it becomes a web platform thing

- ... Then if we see later it's something people want to measure, then we can add it

- ... That way we can see if it has value first

- Yoav: Even before CrUX, I would assume that with internally gathered metrics you could play around with aggregating the cost up to multiple points in time and see which make sense.

- ... Correlate with user-visible metrics

- Shunya: Maybe before exposing this field, we can gather some metrics on total evaluations

- ... Such as DCL, FCP, LCP, etc

- Noam: Aggregate the outliers: if some websites have long evaluation times, determine why

- ... We want to avoid metrics that are only actionable to the browser itself

- Yoav: Route evaluations seem more actionable to developers than cache lookups (other than devs avoiding the "cache" rule altogether)

- ... The thing that scares me is if it exposes new, fingerprintable information

- Noam: Not different from performance.now() or timing in the SW

- Yoav: You can already do that in a SW

- ... That makes sense

- ... Maybe it's something you can gather metrics on internally, and then we can reason more about how actionable it is

- ... Maybe we only report the eval time in aggregate, or if cache lookups exceed a certain threshold?

- ... Does that make sense?

- Yoshisato: Yes

- Noam: One thing that is very valuable regardless of the timing, is knowing that the fetch went through SWSR, useful from the get-go.

- Yoav: Yes

- Noam: Even for debugging purposes

- Nic: And we could know that from matchedRouterSource: if it's filled in, SWSR is enabled

- Yoshisato: On naming, should we have "worker" in each one?

- ... Or drop it, to make the field names shorter?

- Nic: Would make sense to prefix with “worker”. I don’t mind longer names

- Yoshisato: For finalRouterSource, should we include the attribute if it didn't happen?

- Noam: For RT it's always a "full object" but the attribute could be empty, 0, clamped, etc

- Shunya: We should expose these fields all of the time

- Noam: If you look at RT IDL, it doesn't have "optional" for this reason. Design choice.

- ... RUM providers can decide to include/clamp if they want
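Since RT attributes are always present (possibly empty), a RUM script could bucket entries by matched router source to see whether SWSR handled a request at all. A minimal sketch, assuming a string field named `matchedRouterSource` as discussed here (the final name, with or without a "worker" prefix, was still open) that is empty when no static route applied; `countByMatchedSource` is a hypothetical helper.

```typescript
interface RouterTaggedEntry {
  name: string;
  matchedRouterSource: string; // hypothetical; "" when no static route applied
}

// Count entries per matched router source; "" is reported as "(none)".
function countByMatchedSource(entries: RouterTaggedEntry[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const e of entries) {
    const key = e.matchedRouterSource === "" ? "(none)" : e.matchedRouterSource;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}
```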

- Keita: Just want to reiterate: when we specify the cache rule and we have a hit, we probably won't have fetchStart and the other start timings. Only responseStart onward.

- Noam: There's always a fetchStart for a fetch

- Yoav: Fetch would be internal, and the response would be from the cache

- Noam: You wouldn't have requestStart, etc

- Yoav: It maps to fetchStart if missing

- Noam: Everything gets clamped

- ... There's an RT attribute for whether it comes from the cache: deliveryType

- Yoav: For 304s etc, we have deliveryType

- Noam: deliveryType should say it's from SW cache

- Yoav: We shipped it for prefetches and preloads

- Noam: Can also work for fetches that go to SW and do lookup

- ... New type that says SW cache

- ... Right now "cache" means HTTP cache
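The motivation for a SW-cache `deliveryType` value can be illustrated with phase durations. This sketch assumes the clamping described above, where missing timestamps equal `fetchStart`: a SW cache hit and a network fetch over an already-open connection then both show zero DNS and connect time, and `deliveryType` (which, per the discussion, uses "cache" only for the HTTP cache) does not separate them. `TimingLike`, `Phases`, and `phaseDurations` are hypothetical names; the field names mirror `PerformanceResourceTiming`.

```typescript
// Subset of PerformanceResourceTiming fields (ms, relative to time origin).
// Phases that did not happen are assumed to collapse to fetchStart.
interface TimingLike {
  fetchStart: number;
  domainLookupStart: number;
  domainLookupEnd: number;
  connectStart: number;
  connectEnd: number;
  requestStart: number;
  responseStart: number;
  responseEnd: number;
  deliveryType: string;
}

interface Phases {
  dns: number;
  connect: number;
  waiting: number;  // requestStart -> responseStart
  download: number; // responseStart -> responseEnd
  fromHttpCache: boolean;
}

function phaseDurations(t: TimingLike): Phases {
  return {
    dns: t.domainLookupEnd - t.domainLookupStart,
    connect: t.connectEnd - t.connectStart,
    waiting: t.responseStart - t.requestStart,
    download: t.responseEnd - t.responseStart,
    fromHttpCache: t.deliveryType === "cache",
  };
}
```

Only the size of `waiting` hints at the difference between the two cases, and that is a guess rather than a signal; an explicit deliveryType value for the SW cache would remove the ambiguity.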