WebPerf WG @ TPAC 2025

bit.ly/webperf-tpac25

Logistics

TPAC 2025 Home Page

Where

Kobe International Conference Center - Kobe, Japan

When

November 10-14, 2025

Registering

- Register by 23 Aug 2025 for the Early Bird Rate

- Register by 20 Oct 2025 to avoid late rate

- All WG Members and Invited Experts can participate

- If you’re not one and want to join, ping the chairs to discuss (Yoav Weiss, Nic Jansma)

Calling in

Join the Zoom meeting through:

https://w3c.zoom.us/j/8801790059?pwd=aPFvyrwhnW4TqfCIROznMNMoVob3Xr.1

Or join from your phone using one of the local phone numbers at:

https://w3c.zoom.us/u/kb8tBvhWMN

- Meeting ID: 8801790059

- Passcode: 2025

Masking Policy

We will not require masks to be worn in the WebPerf WG meeting room.

Attendees

- Yoav Weiss (Shopify) - in person

- Nic Jansma (Akamai) - in person

- Barry Pollard (Google) - in person

- Dave Hunt (Mozilla) - in person

- Bas Schouten (Mozilla) - in person

- Nazım Can Altınova (Mozilla) - remote

- Joone Hur (Microsoft) - in person

- Guohui Deng (Microsoft) - in person

- Robert Liu (Google) - in person

- Noam Rosenthal (Google) - in person (select sessions probably)

- Nikos Papaspyrou (Google) - remote

- Luis Flores (Microsoft) - remote

- Andy Luhrs (Microsoft) - remote

- Fabio Rocha (Microsoft) - in person

- Patrick Meenan (Google) - remote

- Hiroki Nakagawa (Google) - in person

- Fergal Daly (Google) - in person

- Lingqi Chi (Google) - in person

- Jase Williams (Bloomberg) - remote

- Michal Mocny (Google) - in person

- Kouhei Ueno (Google) - in person

- Keita Suzuki (Google) - in person

- Hiroshige Hayashizaki (Google) - in person

- Mike Jackson (Microsoft) - in person

- Eric Kinnear (Apple) - in person

- Carine Bournez (W3C) - in person

- Justin Ridgewell (Google) - in person

- Adam Rice (Google) - in person

- Benoit Girard (Meta) - in person

- Nidhi Jaju (Google) - in person

- Nicola Tommasi (Google) - in person

- Euclid Ye (Huawei) - in person

- Rakina Amni (Google) - in person

- Alex Christensen (Alex) - in person

- Sam Weiss (Meta) - in person

- Takashi Nakayama (Google) - in person

- Minoru Chikamune (Google) - in person

- Ben Kelly (Meta) - in person

- Takashi Toyoshima (Google) - in person

- Samuel Maddock (Salesforce) - in person

- Javier Garcia Visiedo (Google) - in person

- Eriko Kurimoto (Google) - in person

- Shunya Shishido (Google) - in person

- Anna Sato (Google) - In person

- Ming-Ying Chung (Google) - in person

- Shunya Shishido (Google) - in person

- Anna Sato (Google) - In person

- Jan Jaeschke (Mozilla)

- José Dapena Paz (Igalia) - remotely, some sessions

- Mingyu Lei (Google) - in person

- Shubham Gupta(Huawei) - in person

- Amiya Gupta (Microsoft) - remote

- Gabriel Brito (Microsoft) - remote

- Tsuyoshi Horo (Google) - in person

- Kazuhiro Kureishi (Cisco) - In person

- Randell Jesup (Mozilla) - remote

- Tim Vereecke (Akamai) - remote

- Ari Chivukula (Google Chrome) - in person

- Kota Yatagai (Keio University) - in person

Agenda

Meeting room request from W3C: https://github.com/w3c/tpac2025-meetings/issues/61

Lightning Topics List

Have something to discuss but it didn't make it into the official agenda? Want to have a low-overhead (no slides?) discussion? Some ideas:

- Lightning talks

- Breakout topics

- Q&A sessions

- Questions for the group

- Short follow-ups from previous sessions

Add your ideas here. Note, you can request discussion topics without presenting them:

- Please suggest!

- Web Font metrics - Yoav (0.5h)

- Unused preloads/prefetches - Yoav (0.5h)

- Party town and workerDOM discussion?? (0.5h)

- Memory-induced reload - Yoav (0.5h)

- CSS performance reporting - Yoav

Day by Day Agenda

Monday - November 10 (9:00 - 16:45 JST)

Recordings: Day 1

Location: 505

Tuesday - November 11 (9:45 - 18:00 JST)

Recordings: Day 2

Location: 505

Thursday - November 13 (9:00 - 12:30 JST)

Recordings: Day 3

Location: 505

Meeting Minutes

Day 1

Intros, WG feedback

Recording

- Nic: mission - measure things and make them faster!

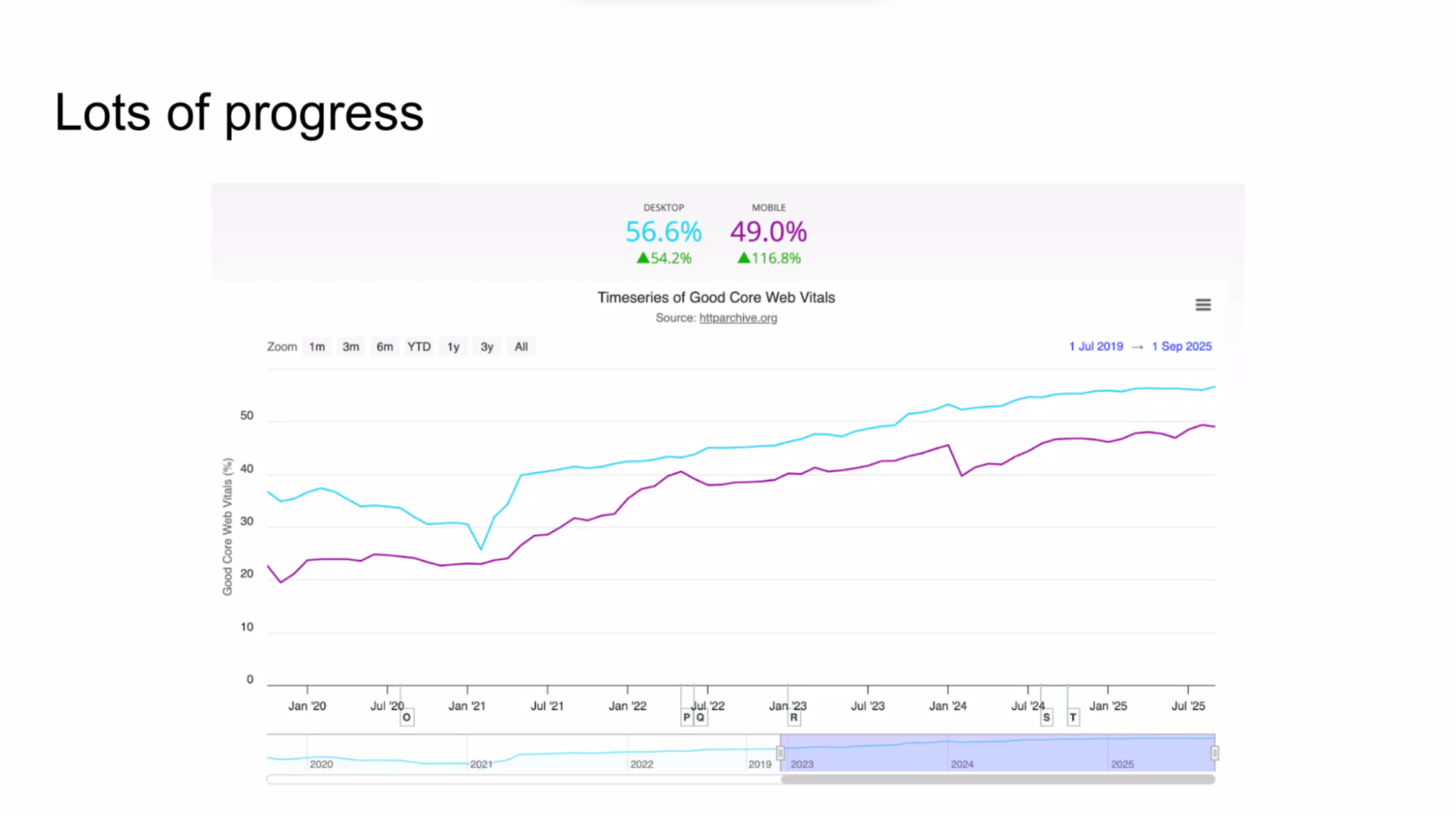

- … progress - Excitement around Interop and CWVs, LoAF adoption, NEL and reporting are a separate workstream, speculation rules

- … 80 closed issues

- … Charter coming up for renewal. We’ll ask an extension and discuss rechatering on Thursday

- … RUMCG gained steam. Summary at 4pm

- … Incubations - need to review the list for the rechartering discussion. Not in the charter but interesting

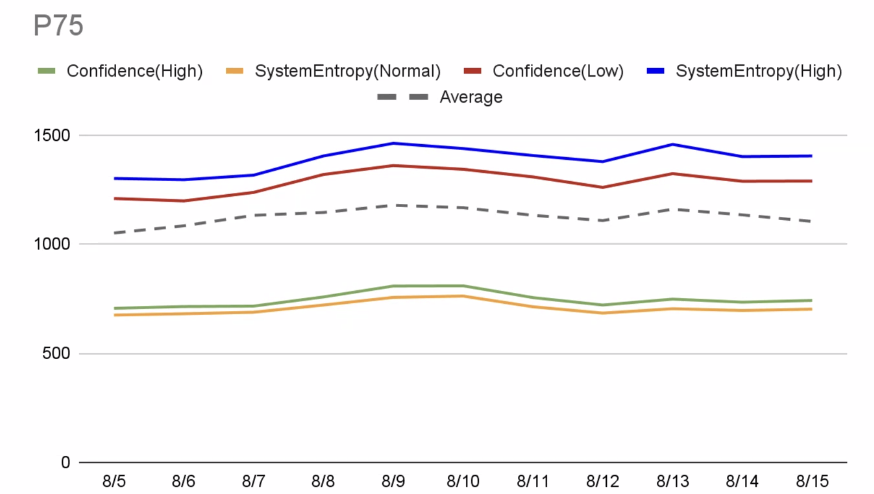

- … Market adoption - up and to the right!

- … Looking at chair feedback survey: variety of experience in WG participation, people catch up on artifacts after the meeting, feedback on meeting times (keeping the current cadence)

- … recording (people like the meeting recordings)

- … requests to update the agenda and post it on slack

- … people like the meeting minutes posting

- … Keep recording the presentation and try to send agenda invites to slack

- … Please reach out with any feedback

- … There’s a request for a “getting started” guide. We should do a better job. The chairs take that as an action item

- … Full agenda for the next 3 days

- … Code of conduct

- … Record presentations and save discussions for after the recording

Recording

Agenda

AI Summary:

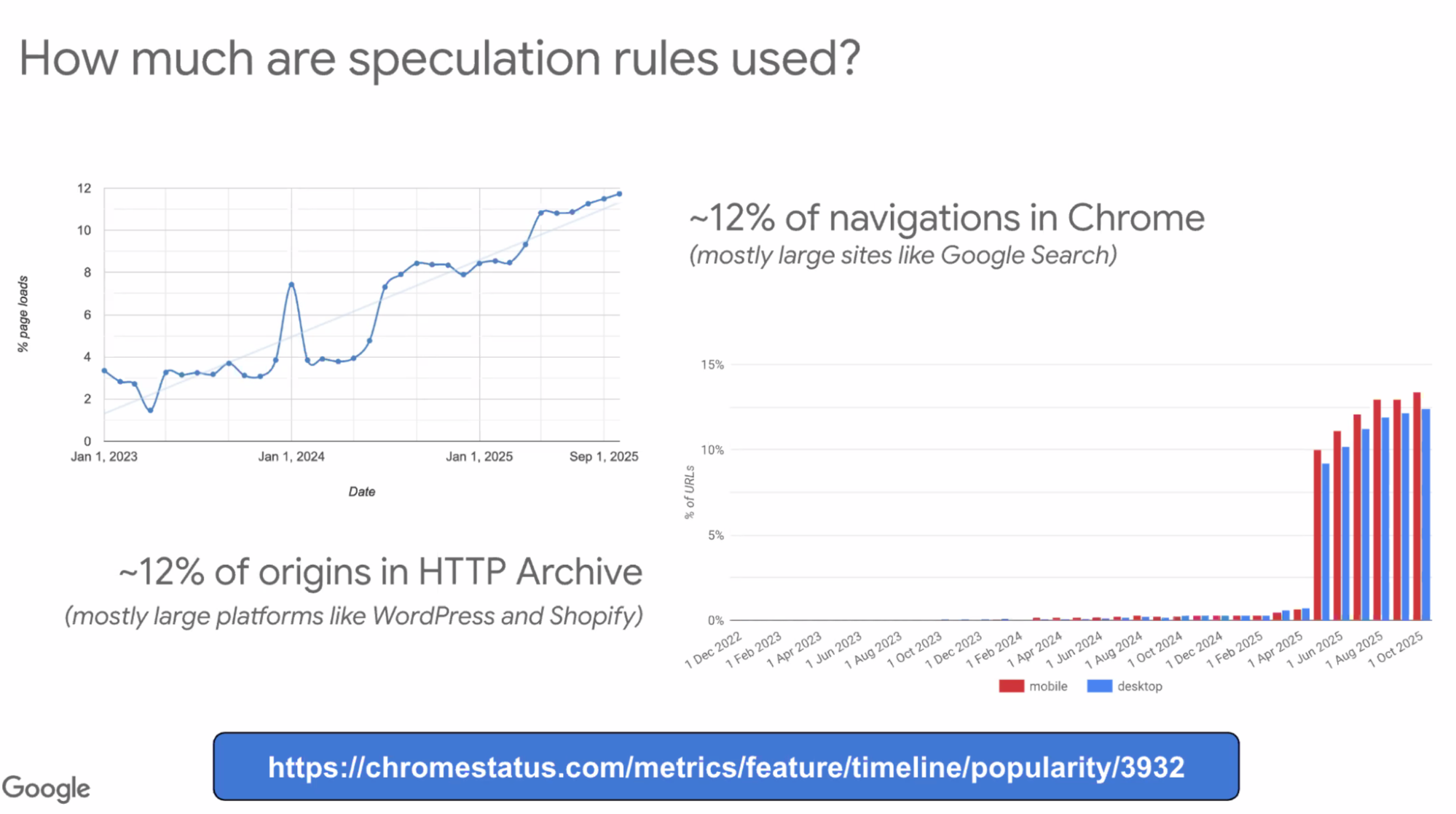

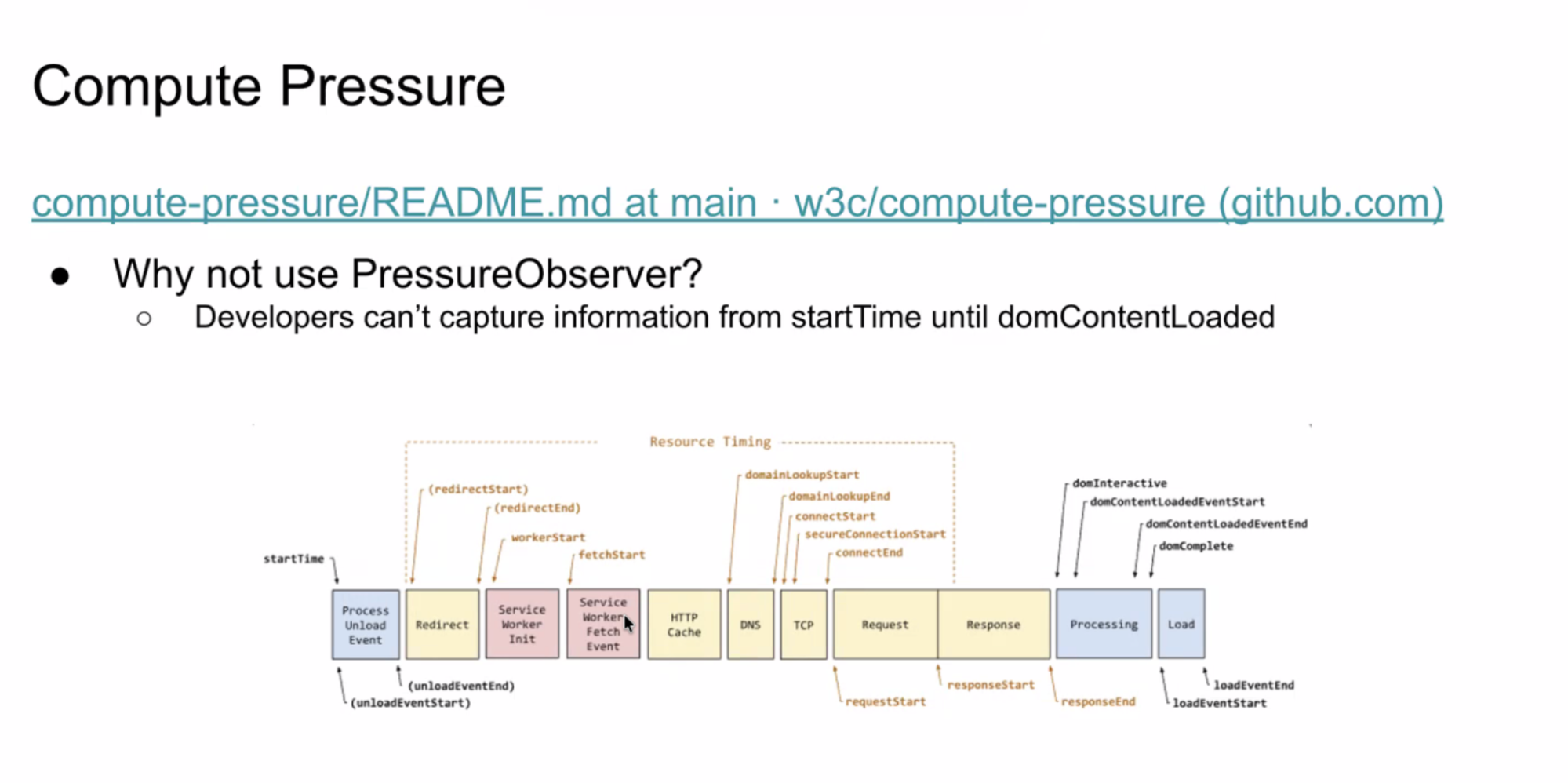

- Chrome’s speculation rules have expanded beyond the initial document “where” rules to include URL list rules, header-based rules, tags for analytics/experiments, No-Vary-Search awareness, and `target_hint`, with strong adoption (≈12% of navigations, major platforms like WordPress/Shopify) and measurable latency wins, especially on mobile.

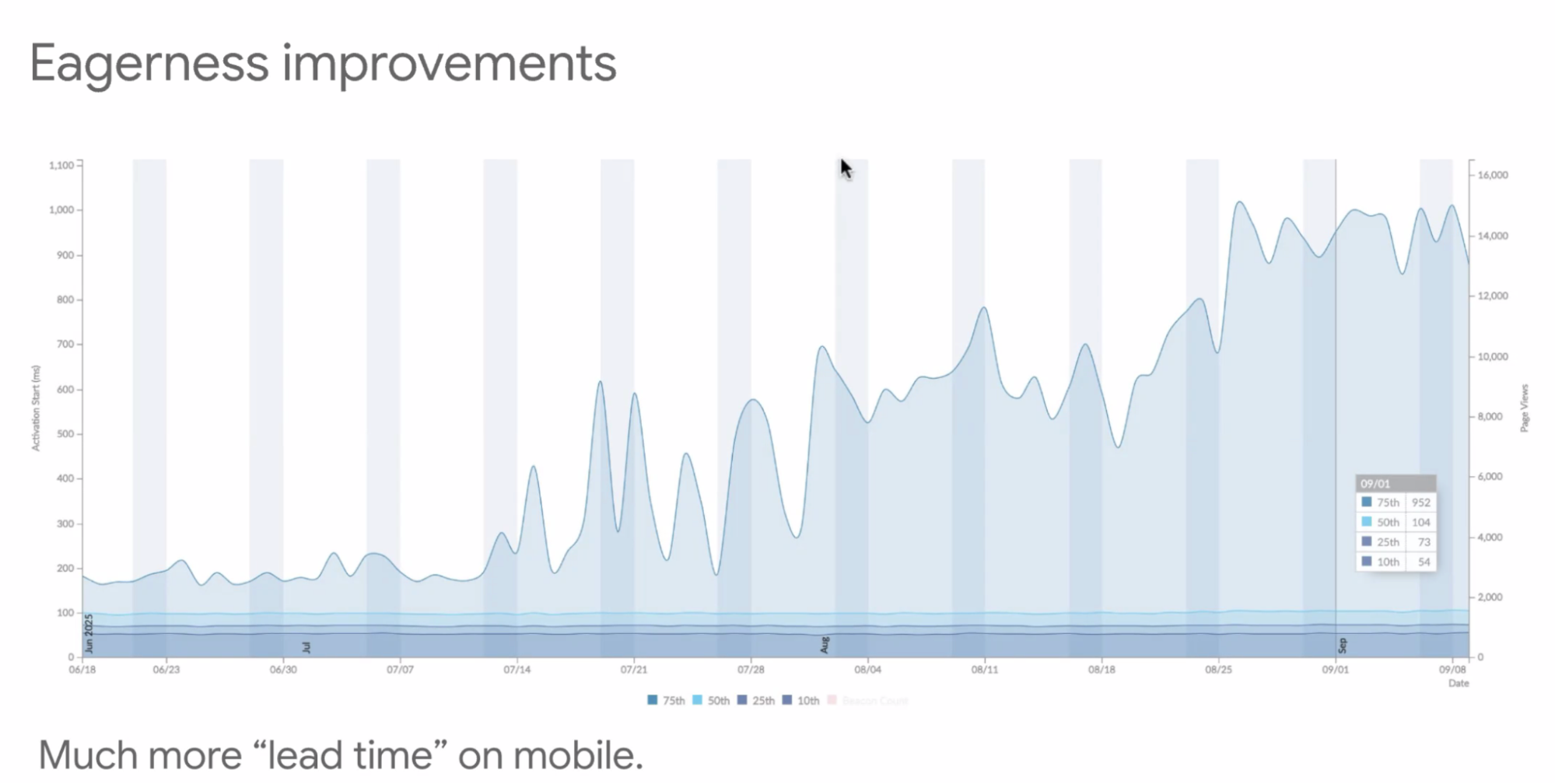

- Eagerness levels have been refined: desktop uses hover/mousedown; mobile now uses viewport-based heuristics (links entering view) to avoid over-speculation while still gaining significant prefetch/prerender lead time.

- Chrome has added protections and limits (e.g., constraints around cross-origin iframes, HTTP cache reuse even when speculation is unused), and is rolling out features like same-site cross-origin prerender under flags;

- A new `prerender_until_script` mode is being explored as a middle ground (no script execution until activation) to ease deployment for sites worried about analytics/ads/JS side effects; there were detailed discussions about inline event handlers, race conditions, and how/if to measure error impact before standardizing.

- Open questions remain on safely handling inline events, scroll/hover tracking, and activation sequencing (paint vs run activation handlers), so `prerender_until_script` is considered too early for Interop, with further experimentation via feature flags and an Origin Trial planned.

Minutes:

- Barry: Work with speculation rules team

- ... Hasn't formally been adopted into this charter, but have presented before

- ...

- ... Introduce API we've worked on at the time

- ... Not just a web API, part of Chrome UI

- ... Dev tools support

- ... Origin Trial (OT) and plans for API

- ... Good results from early adopters

- ... Talking today about what's happened since then

- ... What's changed since then?

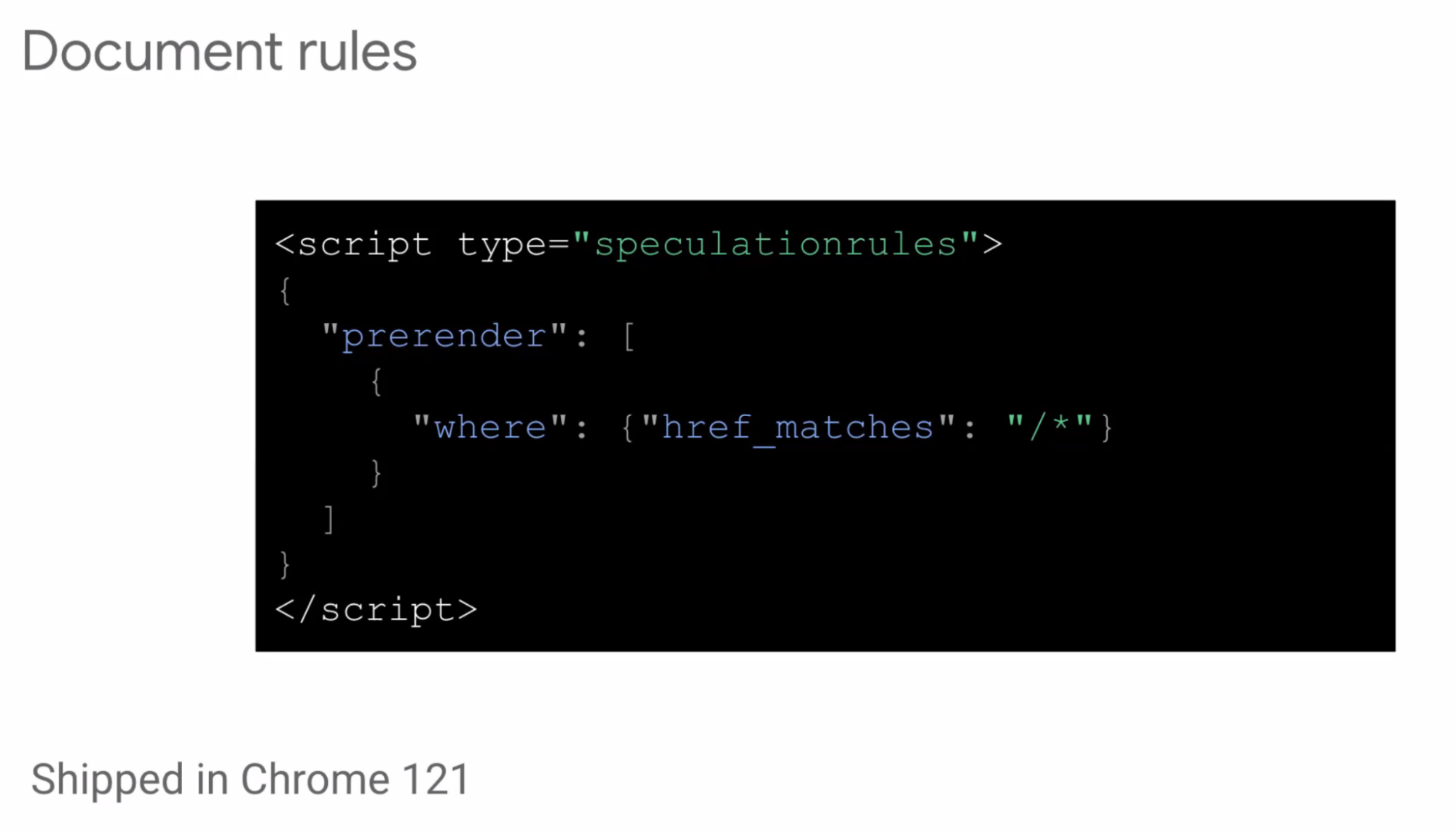

- ... Shipped document rules "where"

- ... More experiments going on

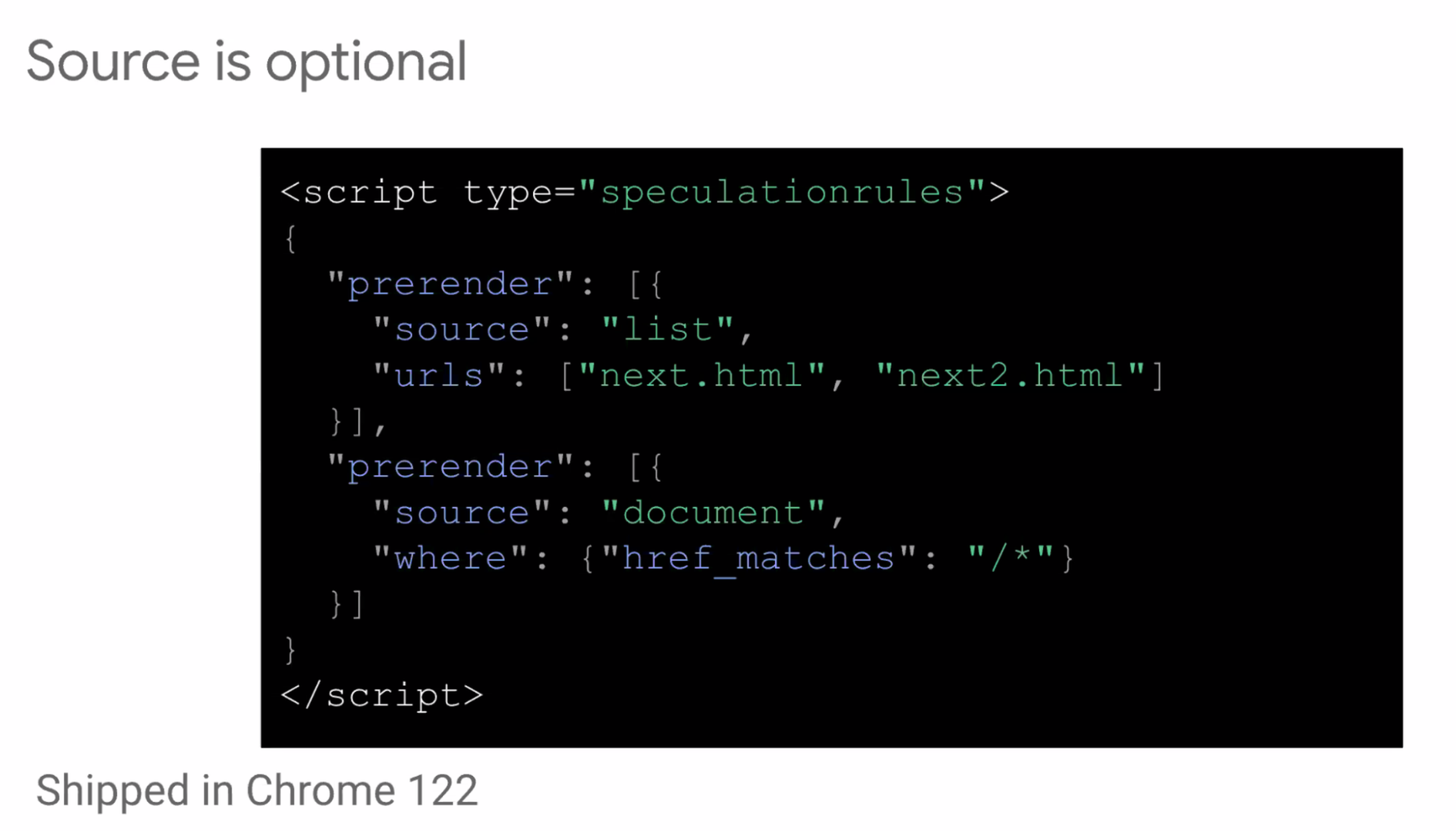

- ... Two types

- ... URL List rules

- ... Similar to link rel=prefetch syntax

- ... Document rules gives a where clause, matches URL

- ... URL list is useful if you know what the next page is

- ... Document rules is if you're uncertain and you want to wait to see how user will interact with the document

- ... Source is now optional

- ... Will be kept, may not make sense to remove

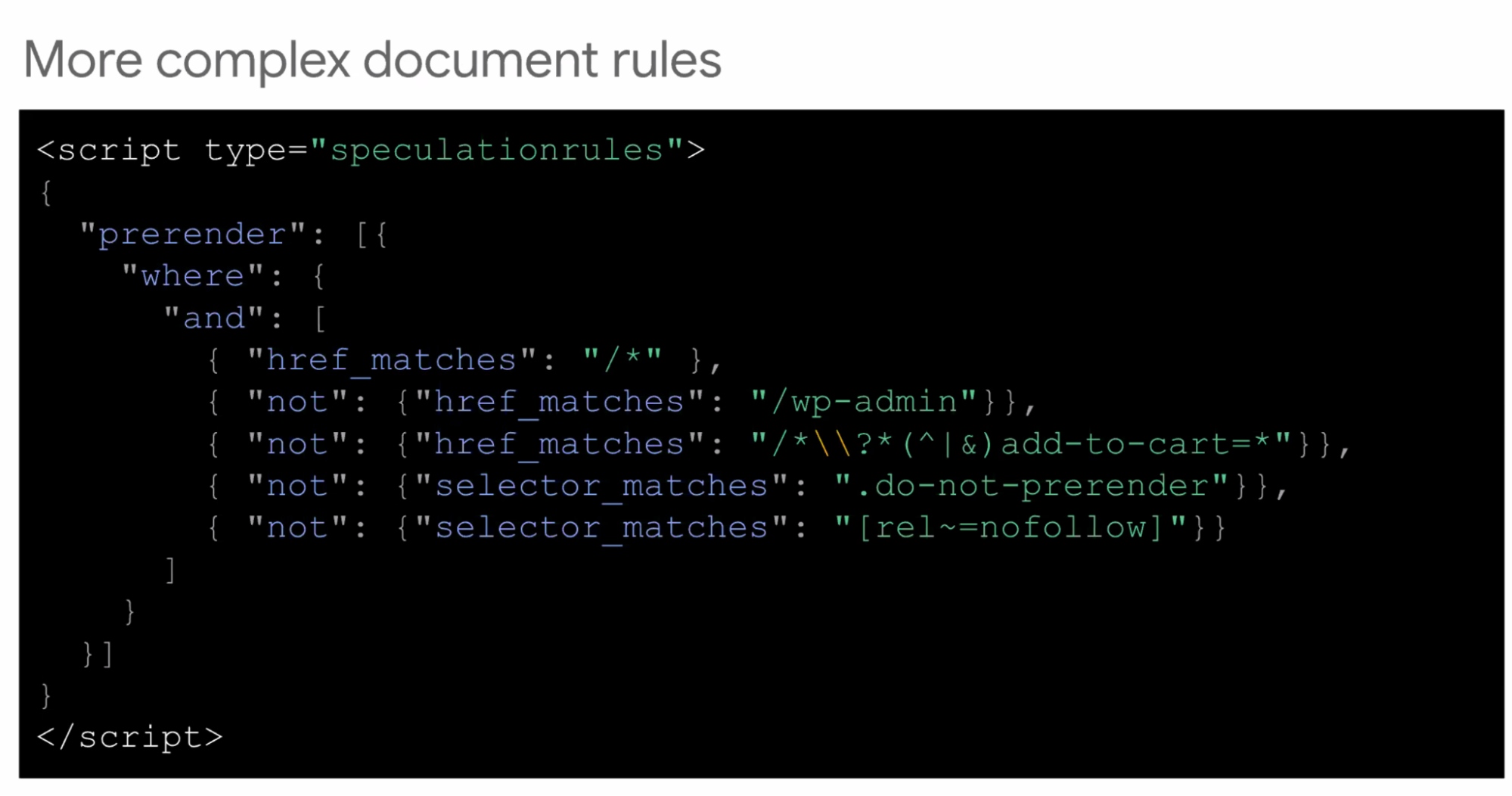

- ... You can have more complicated document rules

- ... and, or, not clauses

- ... Exclude things like wp-admin, add-to-cart

- ... Language available to you for selecting the most appropriate rules for you

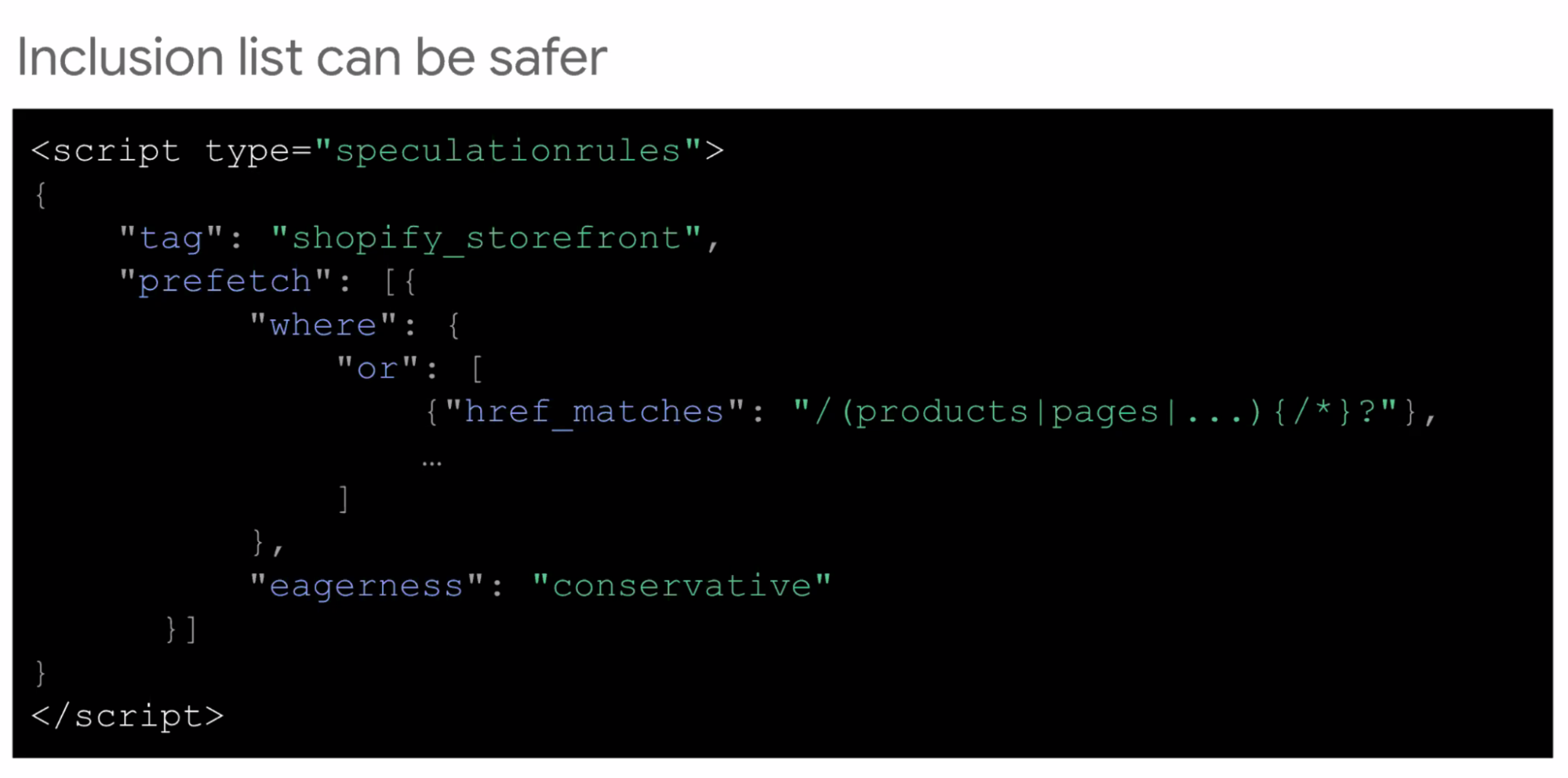

- ... Inclusion lists can be safer

- ... where/or clause

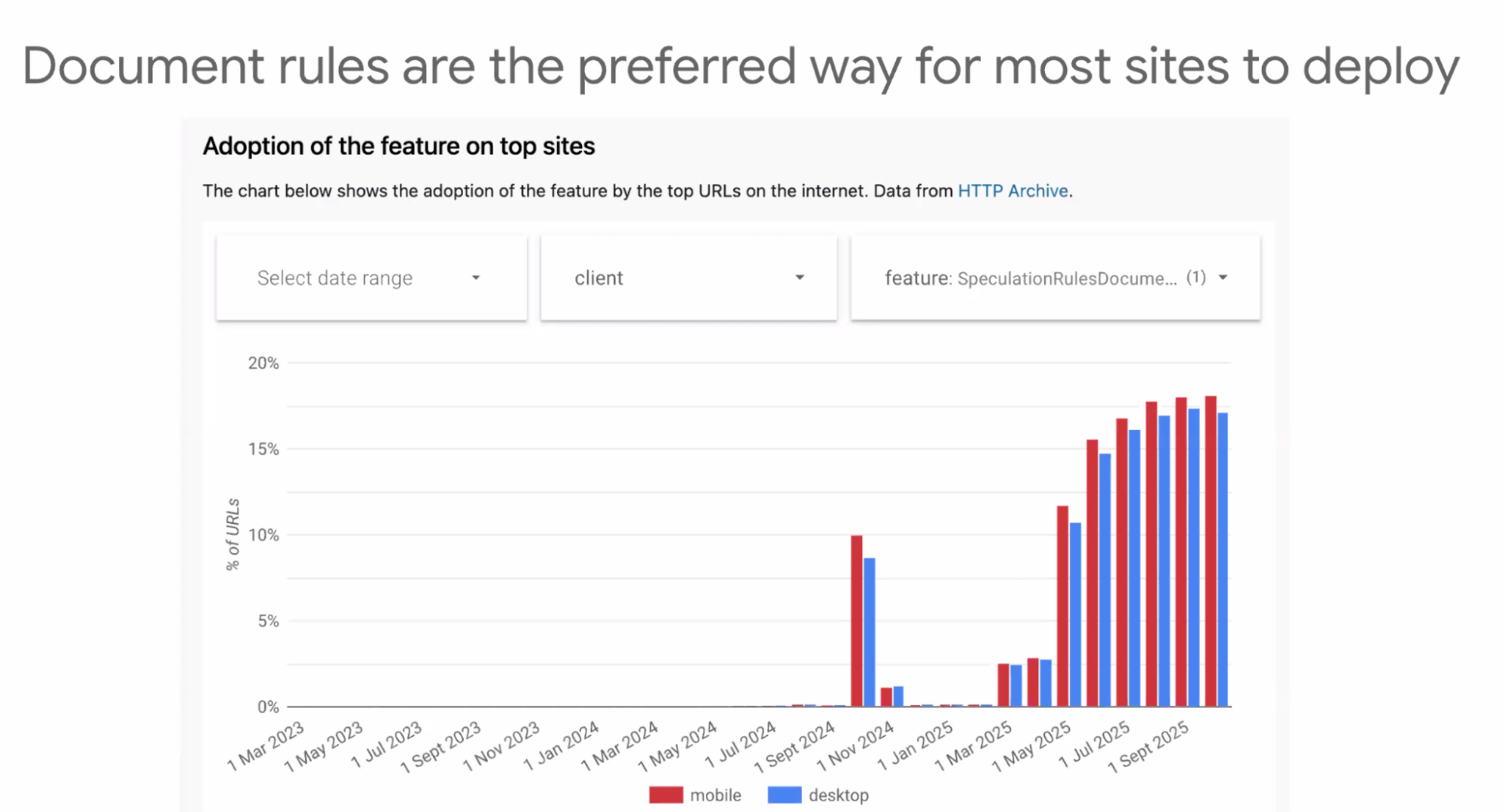

- ... Document rules are the preferred way for most sites to deploy

- ...

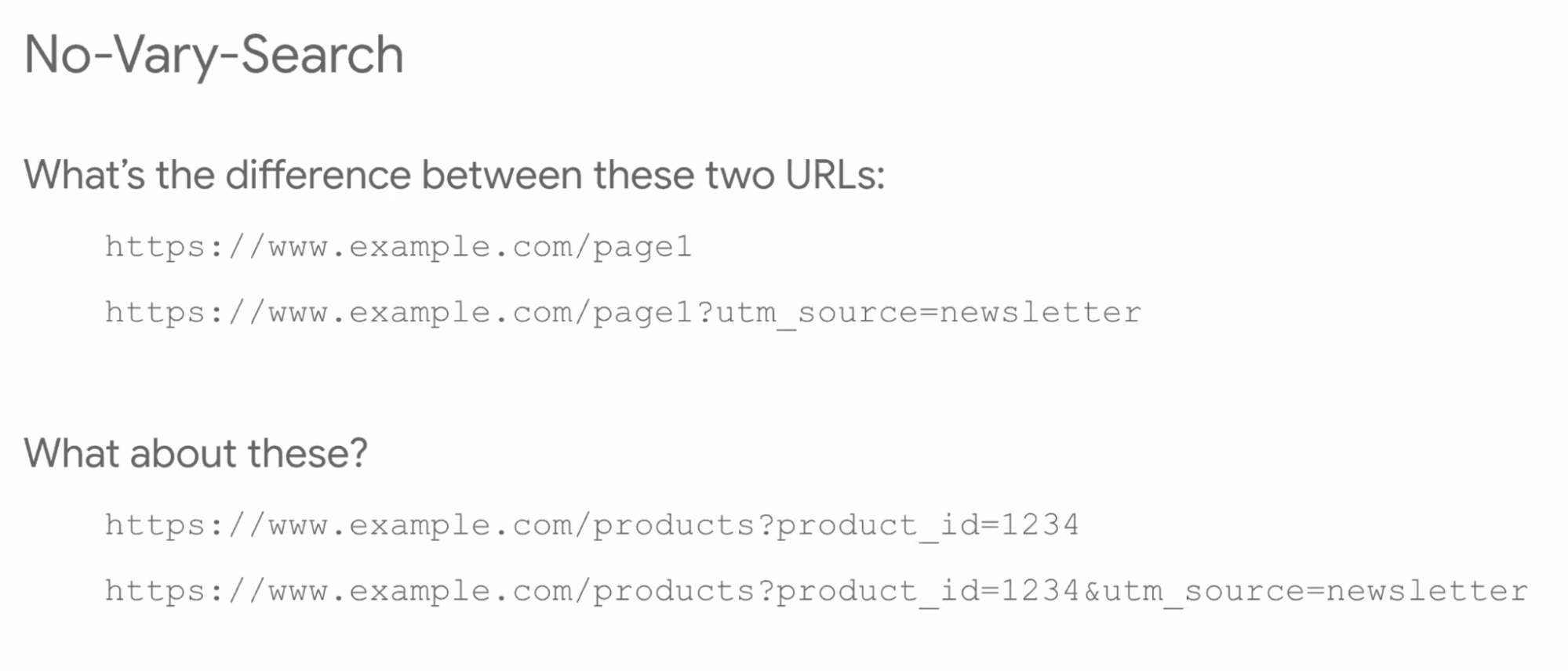

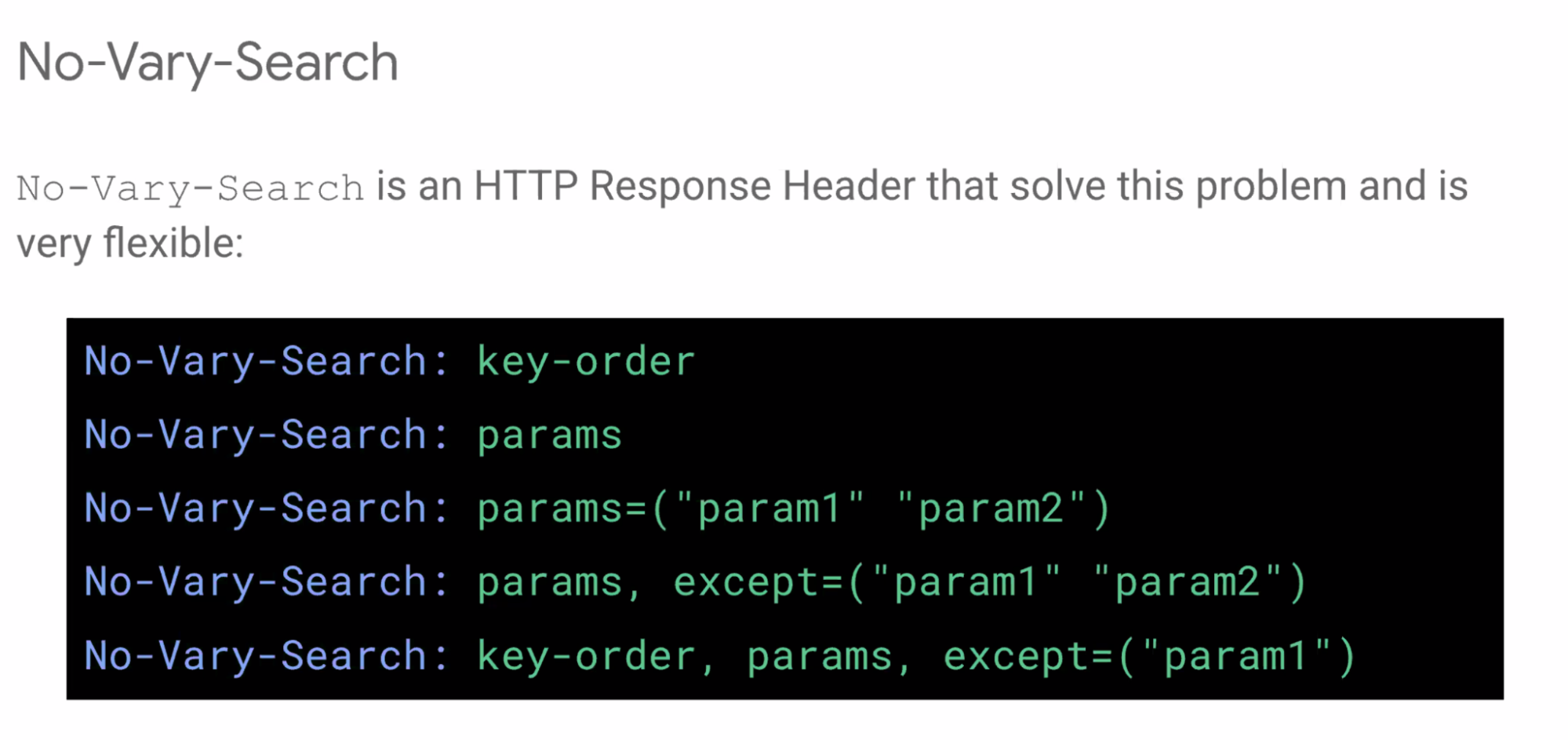

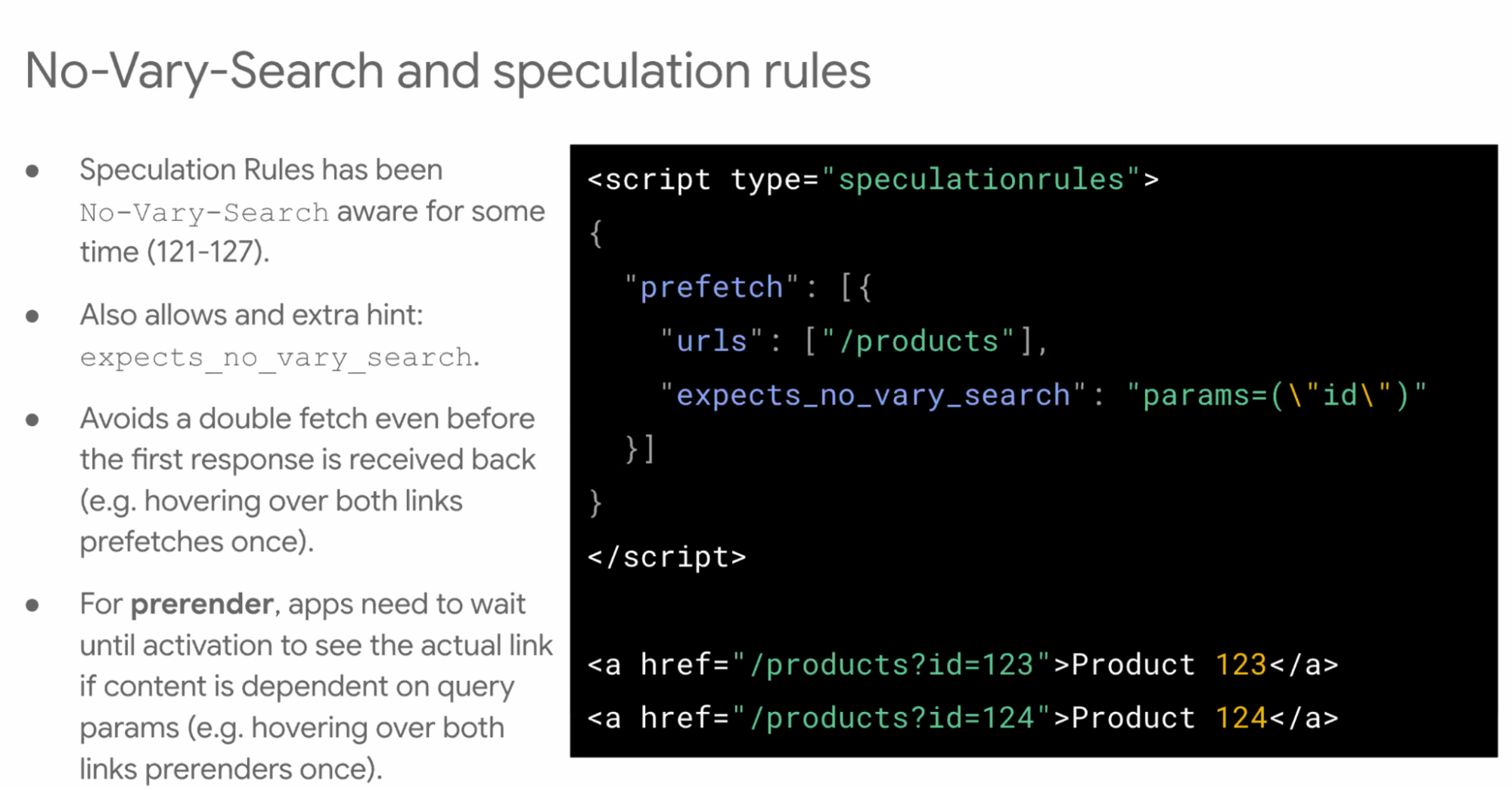

- ... No-Vary-Search

- ... What's the difference between these two URLs?

- ... In most cases, these two URLs are identical (content of the page is)

- ... Same HTML may come back. Some URL parameters matter (on the server side), some don't

- ... HTTP header to specify what matters

- ... Exclude certain params

- ... Speculation rules is No-Vary-Search aware

- ... Can even tell there's a No-Vary-Search expected in the response

- ... If the query does change client-side, then you need to wait until the activation there

- ... Added support relatively recently for HTTP cache

- ... CDNs could use this as a standard way of specifying

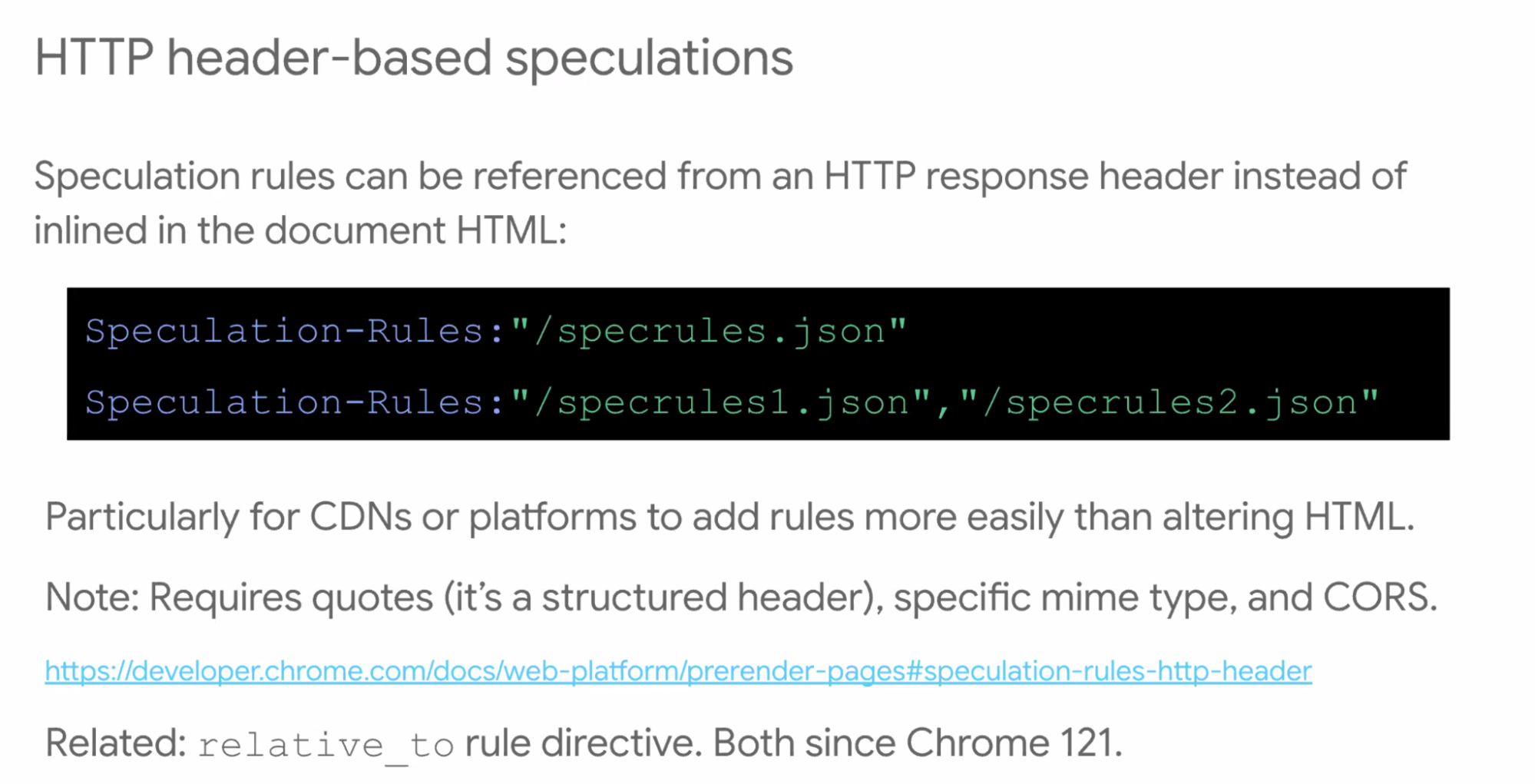

- ... Header-based speculation rules

- ... Can include in external JSON rather than inline in HTML

- ... Useful for CDNs or platforms to add more easily

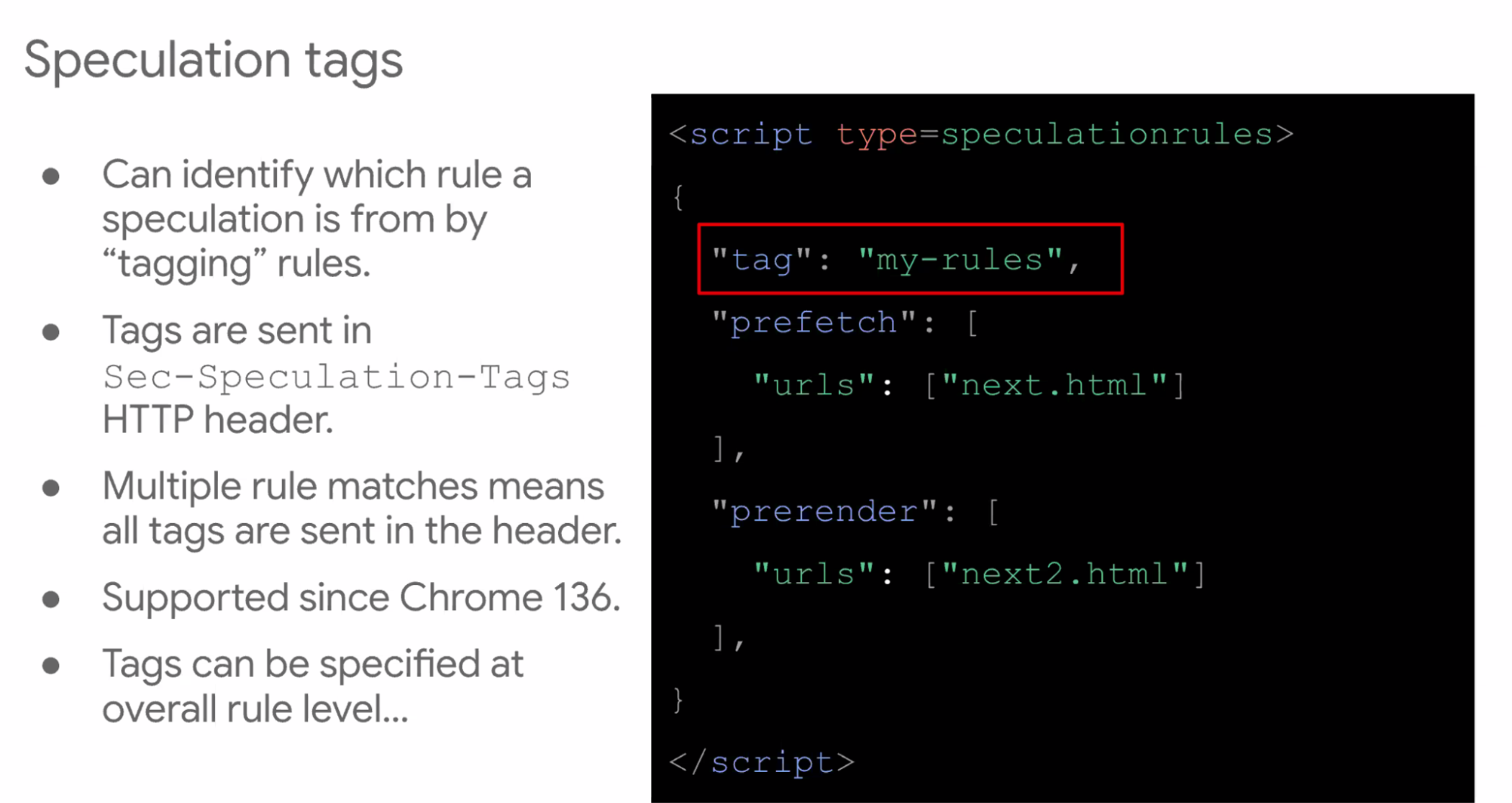

- ... Tag speculation rules

- ... Those tags are sent when sending request to the server

- ... Specified at overall rule level, or you can have separate tags. Useful for platform deploys.

- ... Useful for analytics, logging, a/b tests

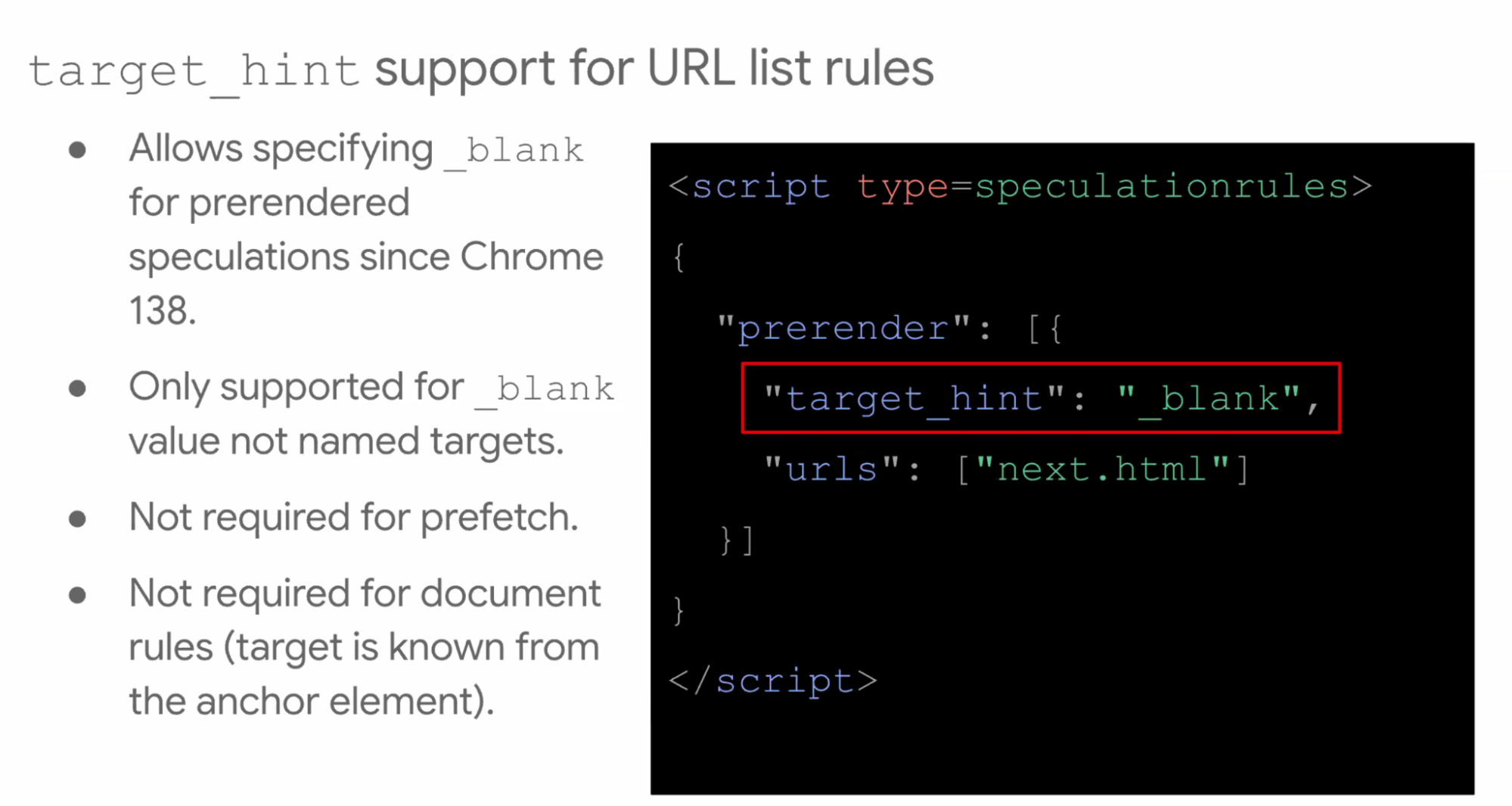

- ... target_hint support added

- ... If you wanted a real background tab with target=_blank, we allow you in your rule to specify that

- ... (don't support named targets)

- ... Isn't required for prefetch, more if you have a hard list of URLs

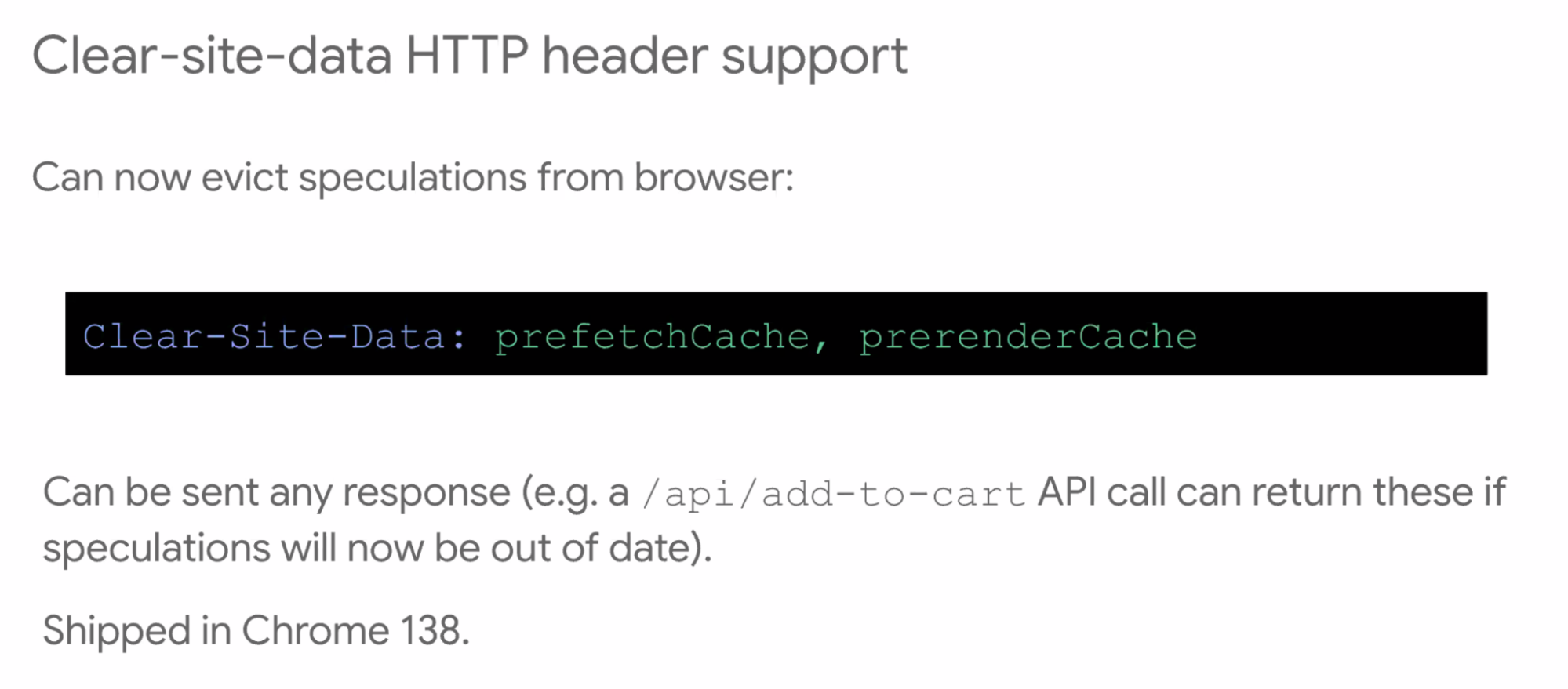

- ... clear-site-data allows you to clear those speculations

- ... Eagerness value you can specify

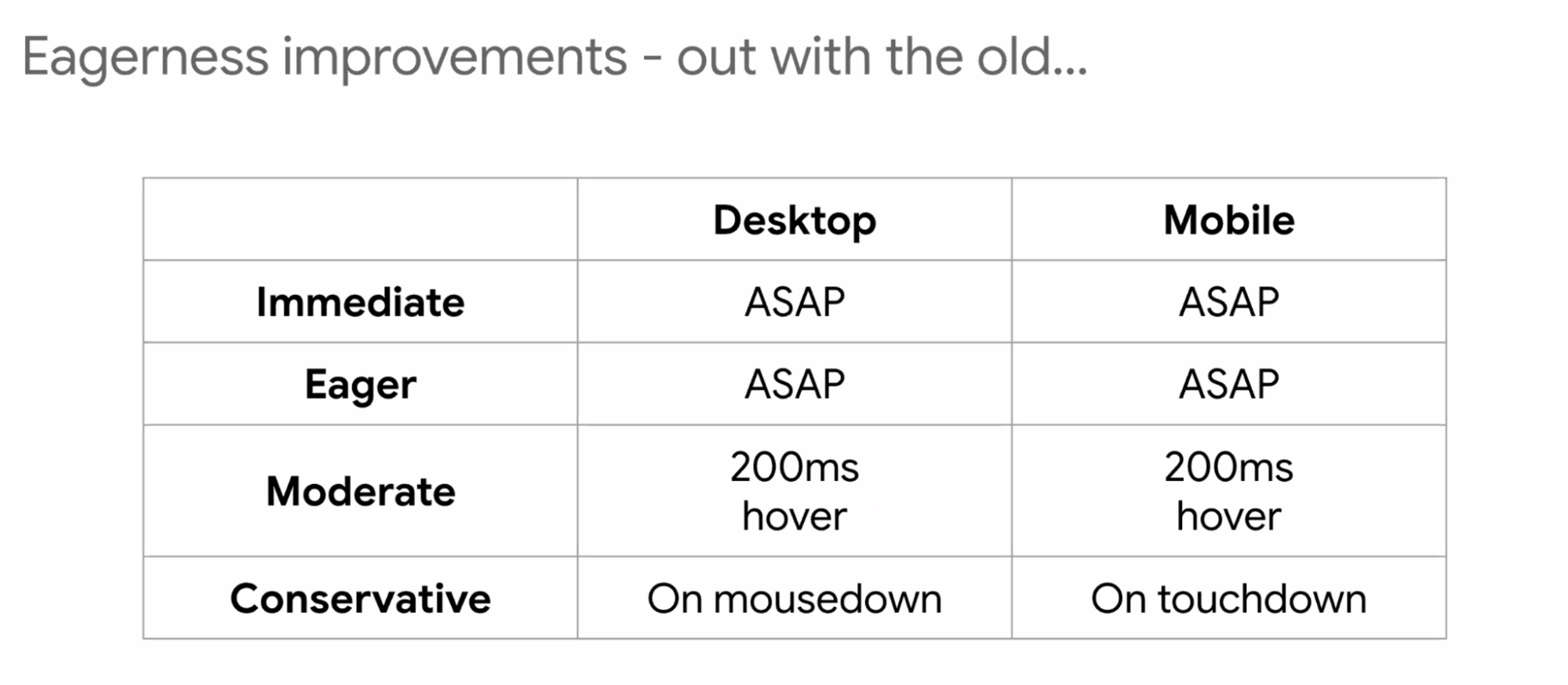

- ... Before:

- ... Immediate and eager used to mean same things, make network requests at low priority

- ... Moderate gives you the hover activity over a link, after 200ms we'll do that

- ... Conservative is when you start clicking (e.g. mouse down before mouse up)

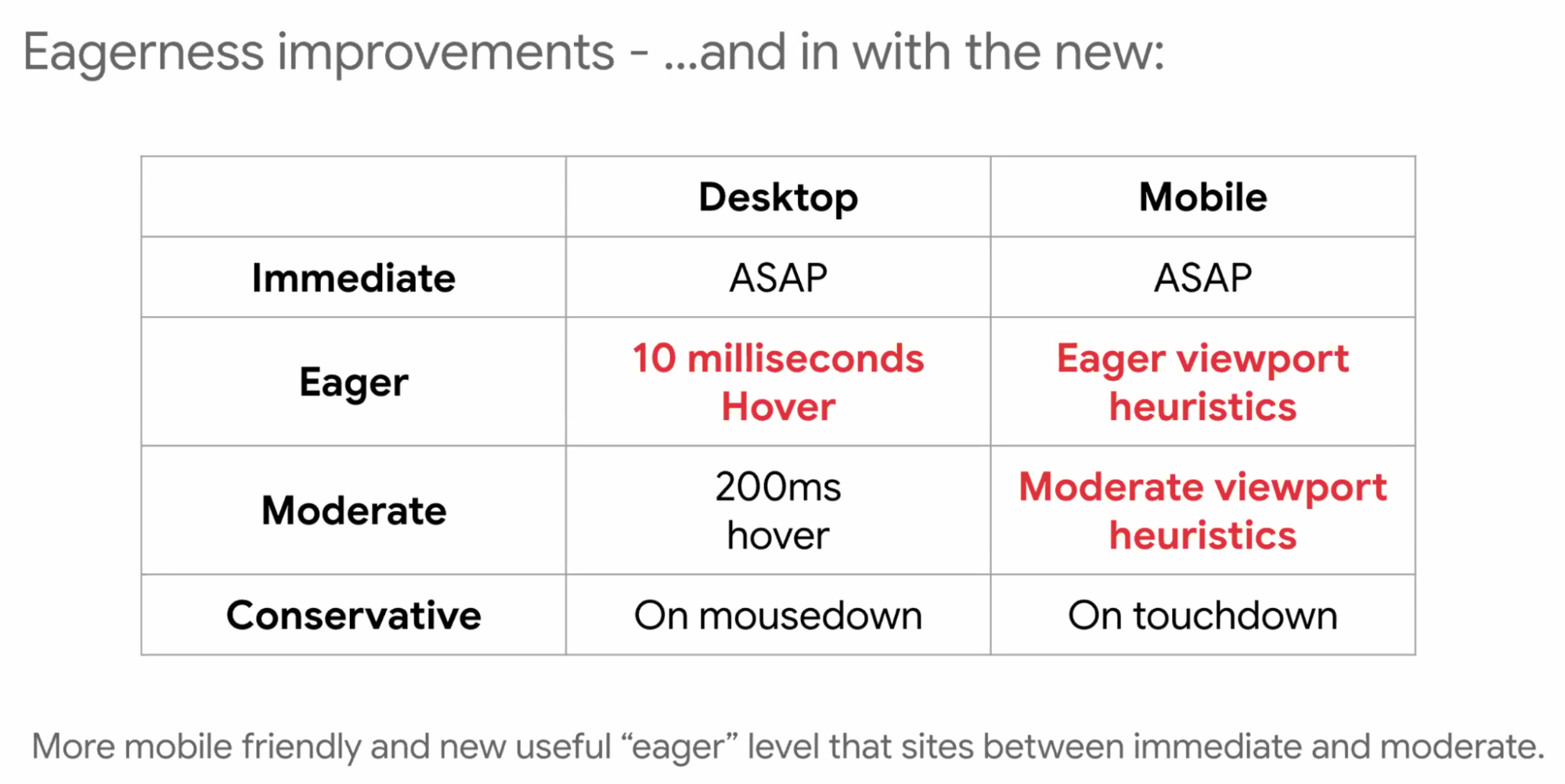

- ... Now we're moving ahead with:

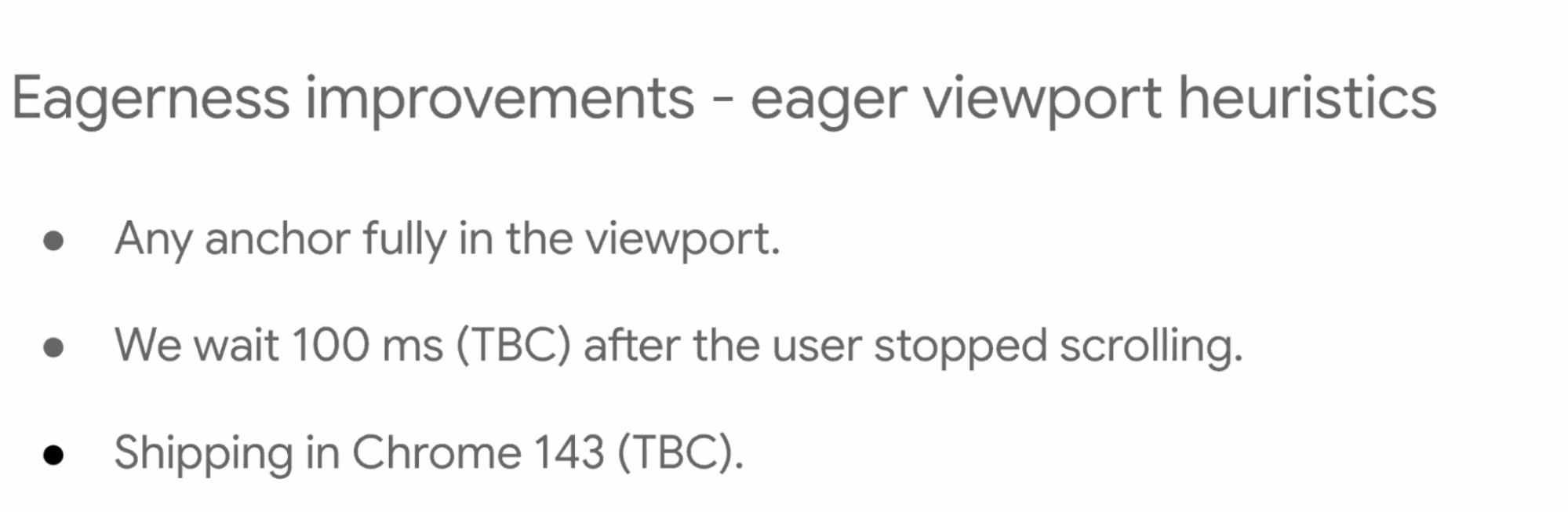

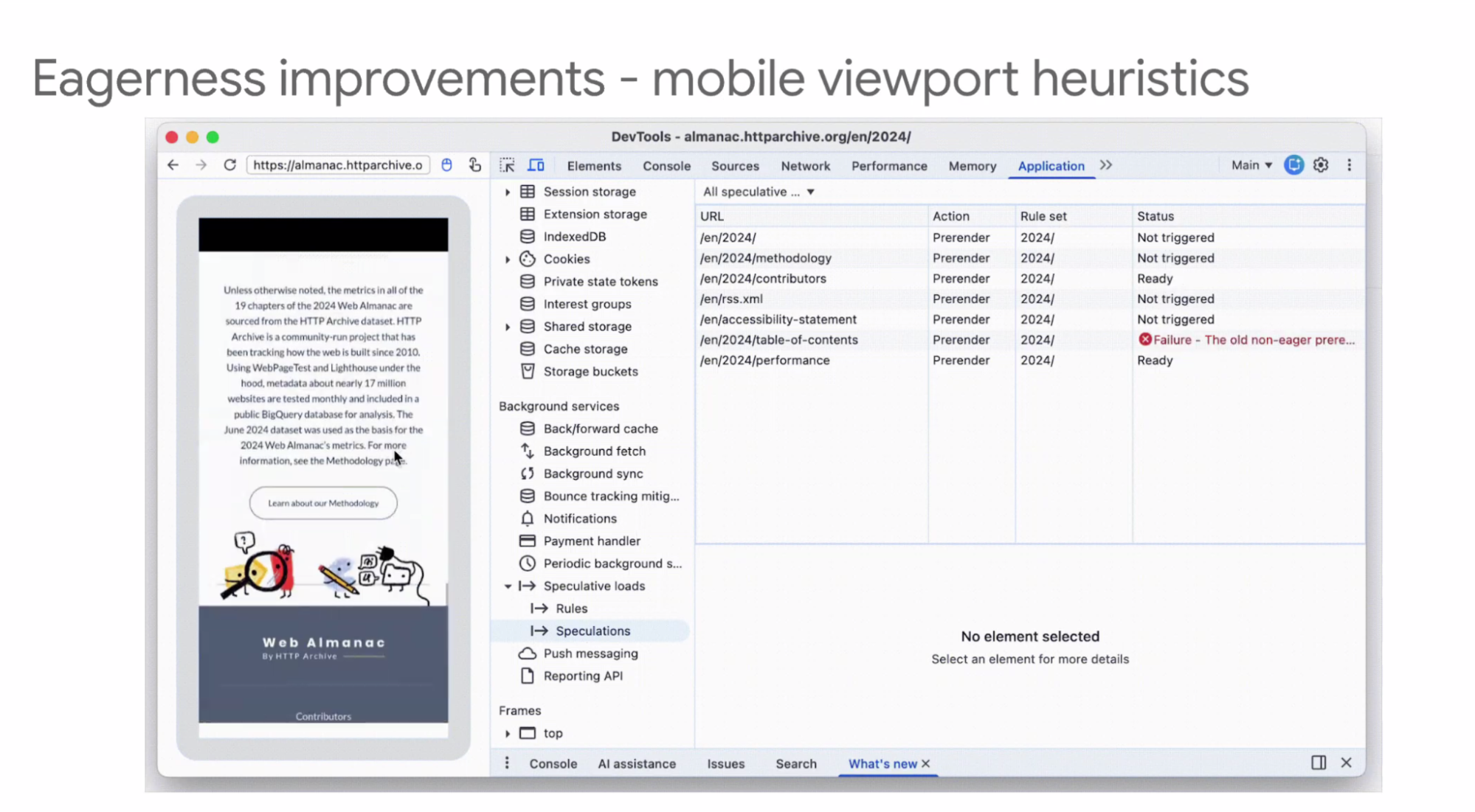

- ... On mobile we've added viewport heuristics since there's no mouse hover

- ... As your links scroll into viewport, we assume that's as close to hover as we can get

- ... Moderate viewport heuristics, anchor is within ~n pixels distance

- ... As people are scrolling you don't want to speculate loading

- ... Prefetch on eager thing, upgrade to prerender on something more

- (demo)

- ... From Akamai (Tim Verecke), rolling out eagerness changes

- ... From ~190ms header start to almost a second lead-time on mobile

- ... Speculations have a cost, from site owner, CDN, end-users

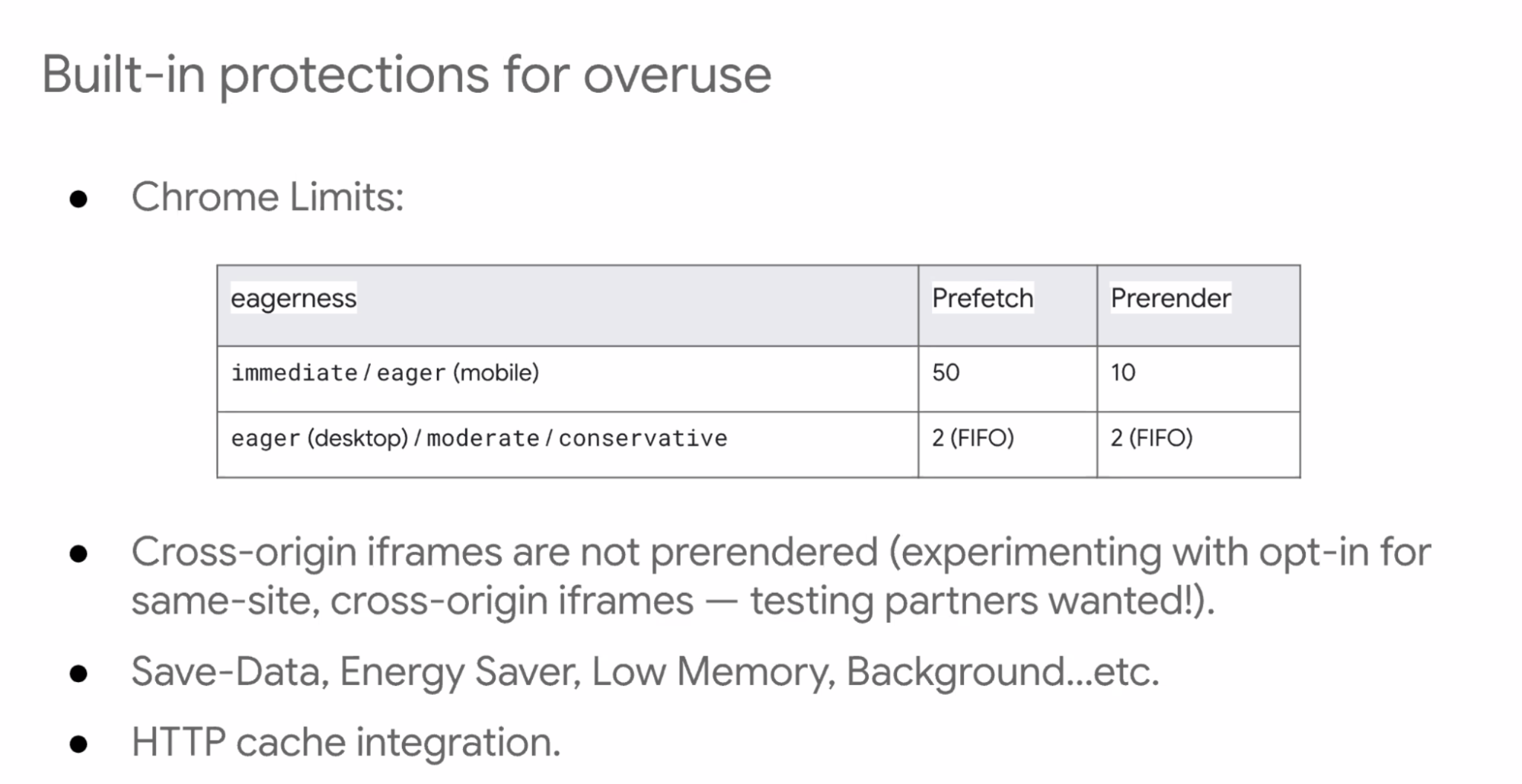

- ... Chrome has limits

- ... Cross-origin iframes, those aren't prerendered by default, there's a way to opt-in for same-site X-O iframes

- ... Lots of automatic built-in protections there

- ... Want people to use this in the right way

- ... Will store and check from HTTP cache, even if speculation isn't used, you may have already preloaded it into the cache

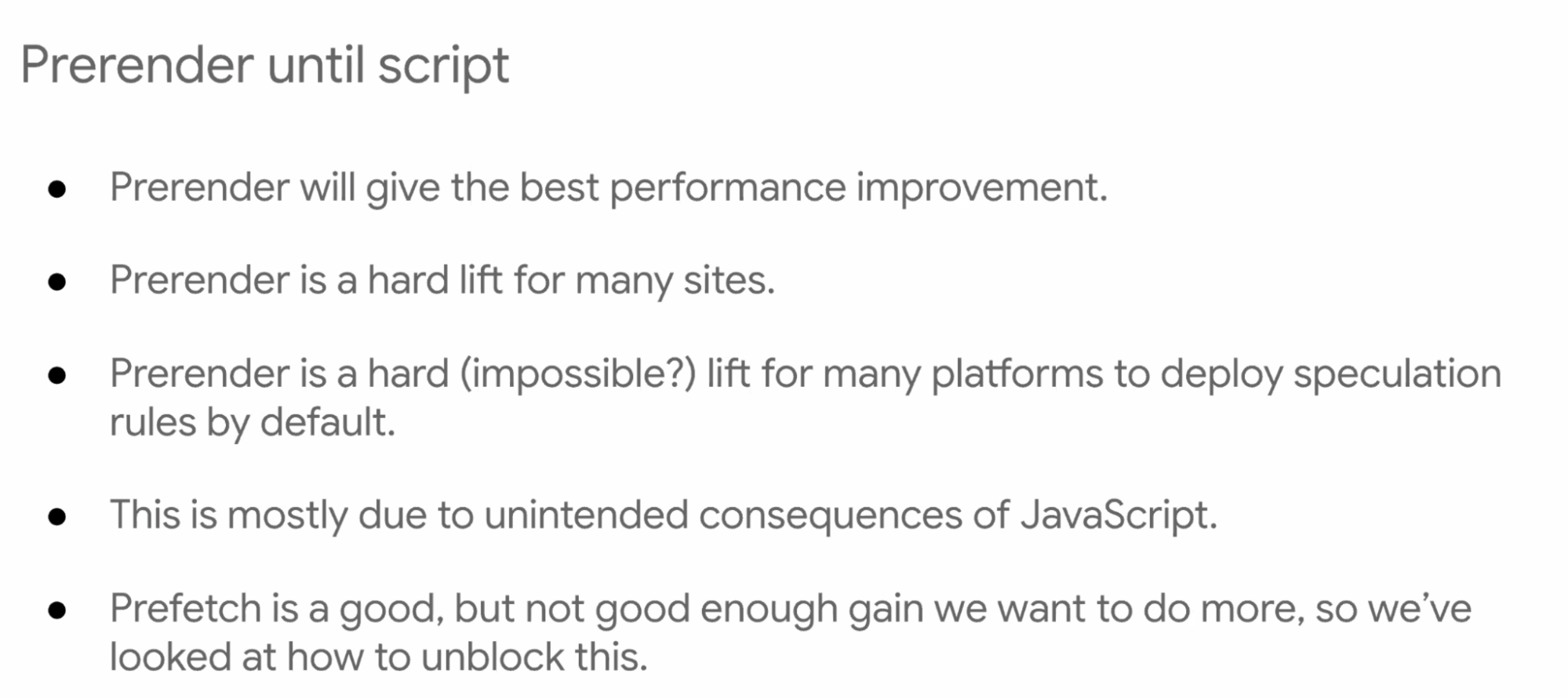

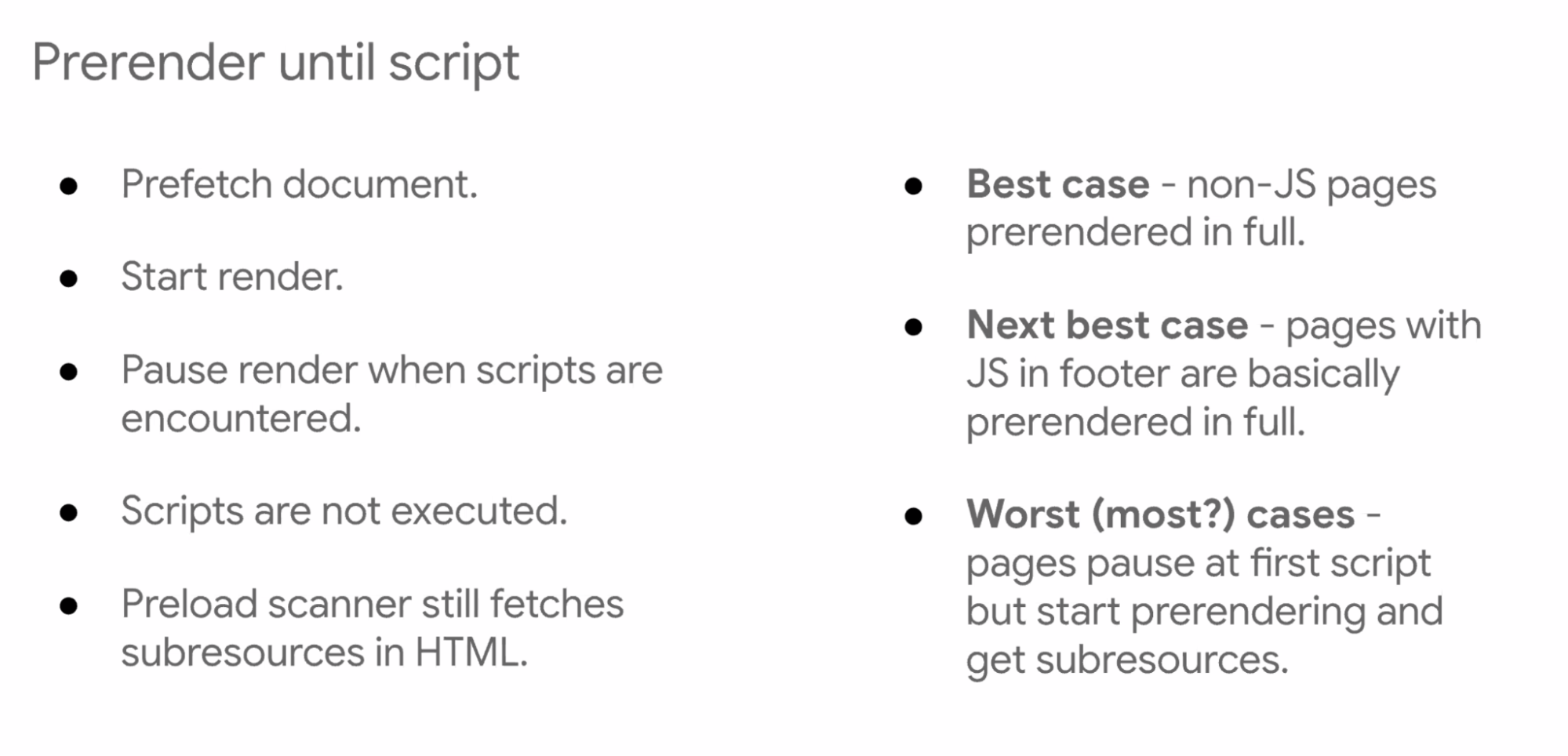

- ... prerender_until_script

- ... Sites are nervous/anxious about impact from analytics, ads, etc

- ... Prerender is hard lift for many platforms to deploy

- ... Some platforms need to be quite conservative

- ... Most of this is used to unintended consequences of JavaScript

- ...

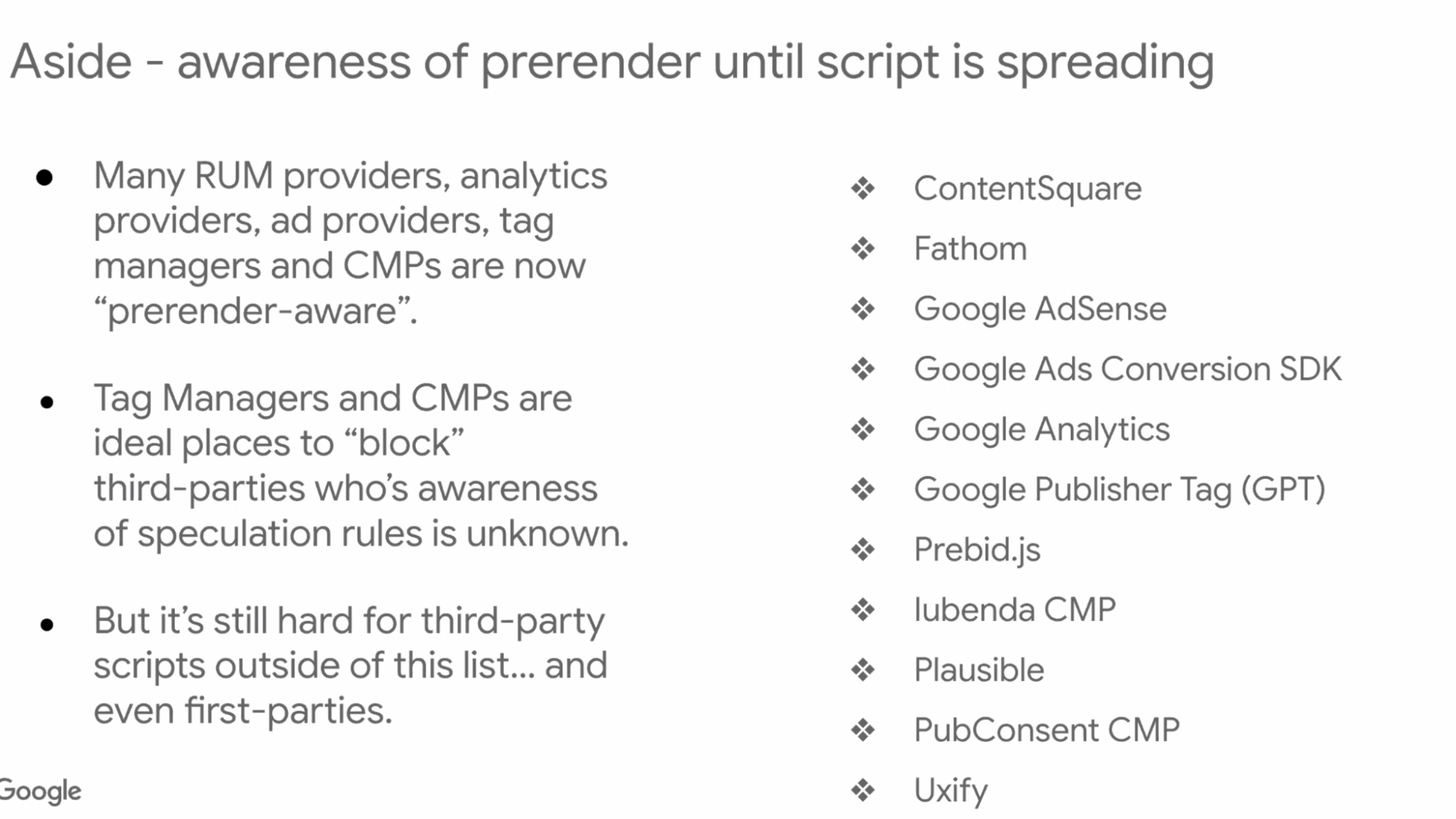

- ... Tried to improve awareness of this for third-parties to be prerendered aware

- ... Even with that, it can be hard for 3P and 1P scripts to be aware

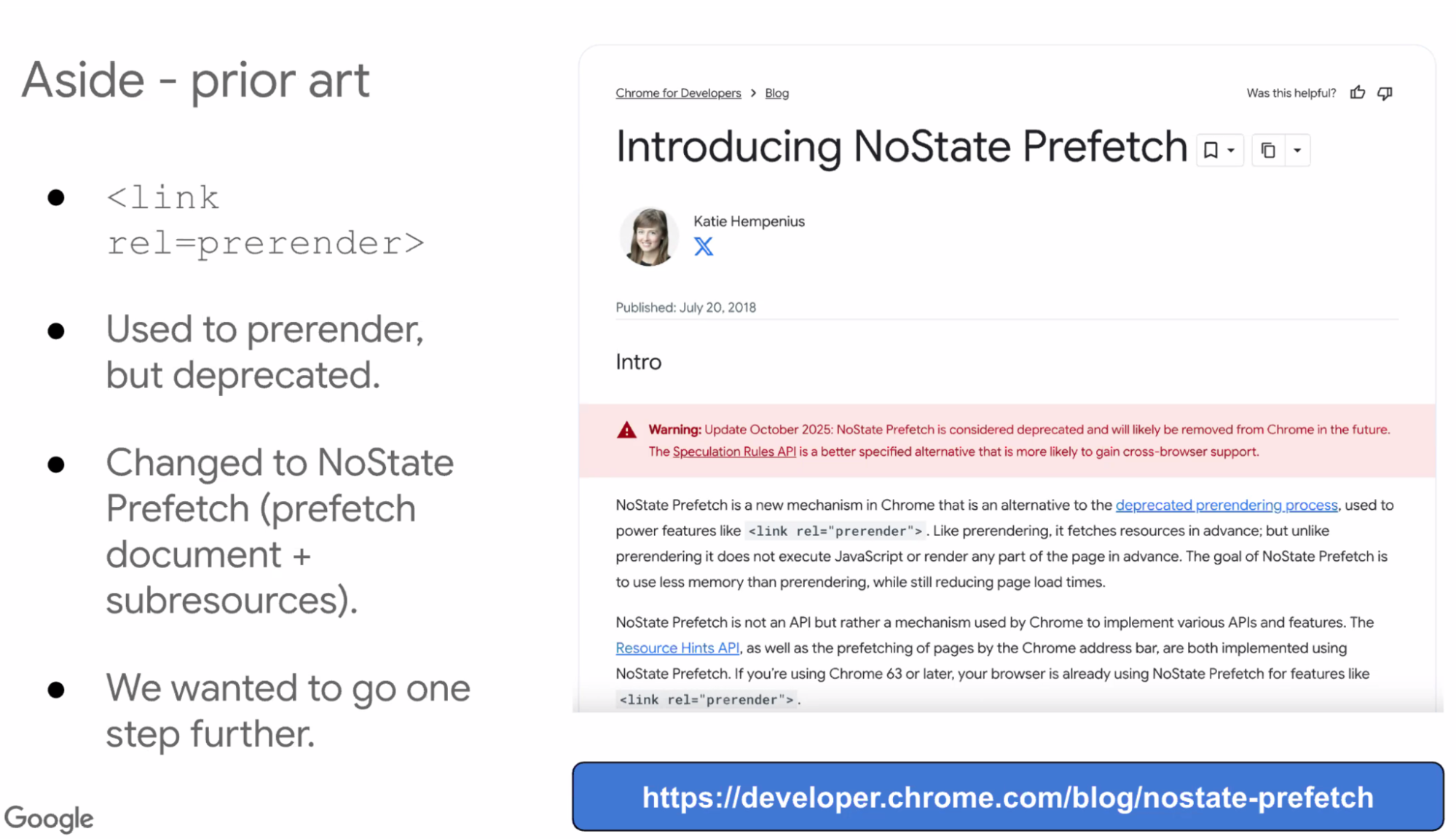

- ... We had a link rel=prerender, deprecated in 2018, changed to no-state prefetch

- ... Which prefetches document and all resources (via preload scanner)

- ... Thought it'd be a middle-ground between prefetch and prerender

- ... prerender_until_script

- ... Scripts are not executed

- ... This has interest from partners

- ... Want feedback

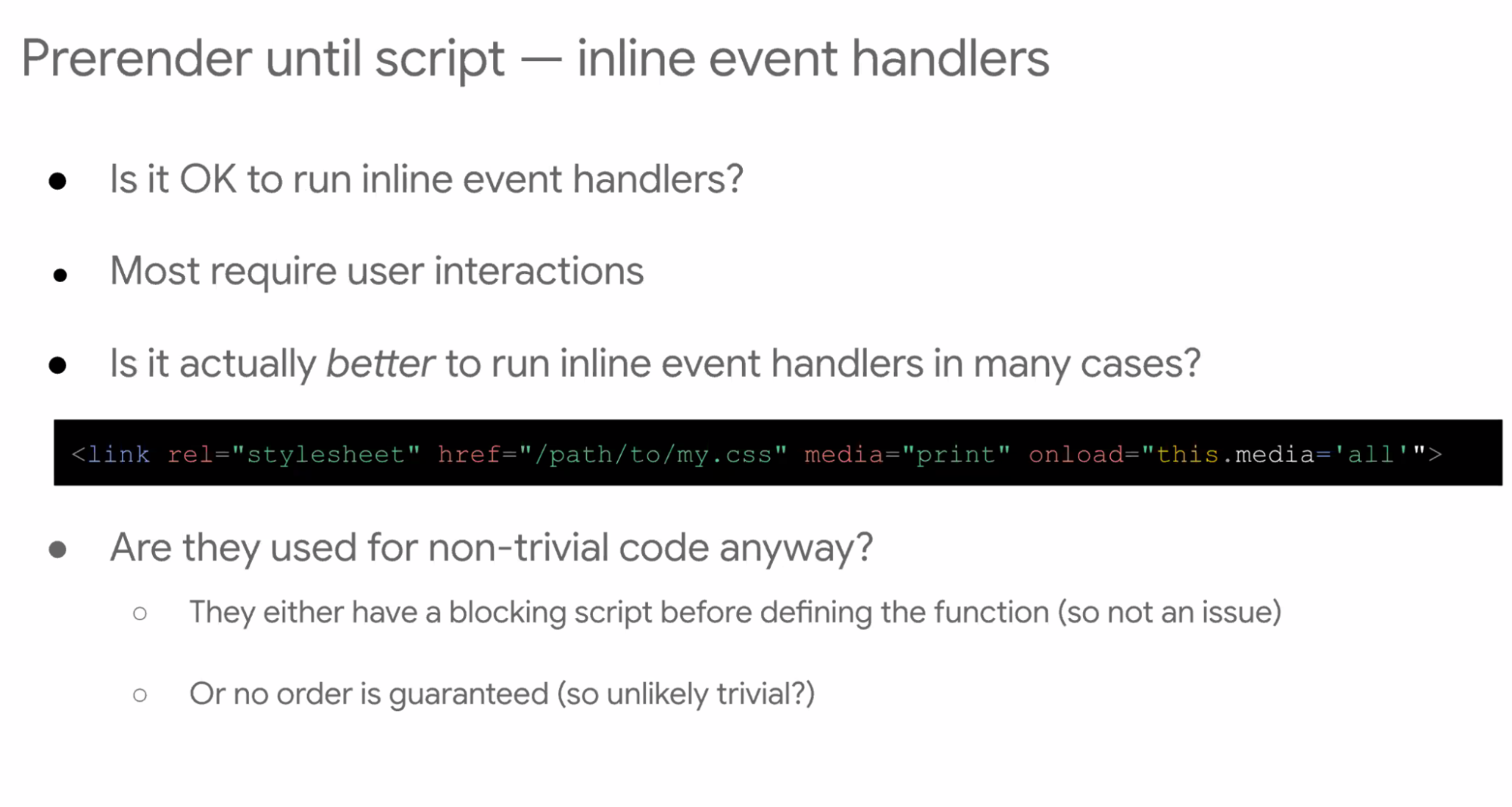

- ... Inline event handlers

- ... Is it OK to run them? Most do require user interaction

- ... In a lot of cases it'd be better to run, e.g. common async css hack. Once finished loading, we want CSS to be applied immediately

- ... Fewer cases I'm finding that need this paused

- ... Some more obscure features for cross-origin prefetch

- ... Google Search are first two preloaded automatically, uses Google anonymous proxy for privacy

- ... These features are mostly used by Google Search

- ... Breakout talking about this in more detail

- ... Adoption

- ... ~12% navigations in Chrome

- ... 12% of origins in HTTP Archive, mostly large platforms (WordPress and Shopify)

- ... Who's using this?

- ... Top section is rolled out by default

- ... Middle section are offering hover activity

- ... Bottom row is where they've rolled out full prerender

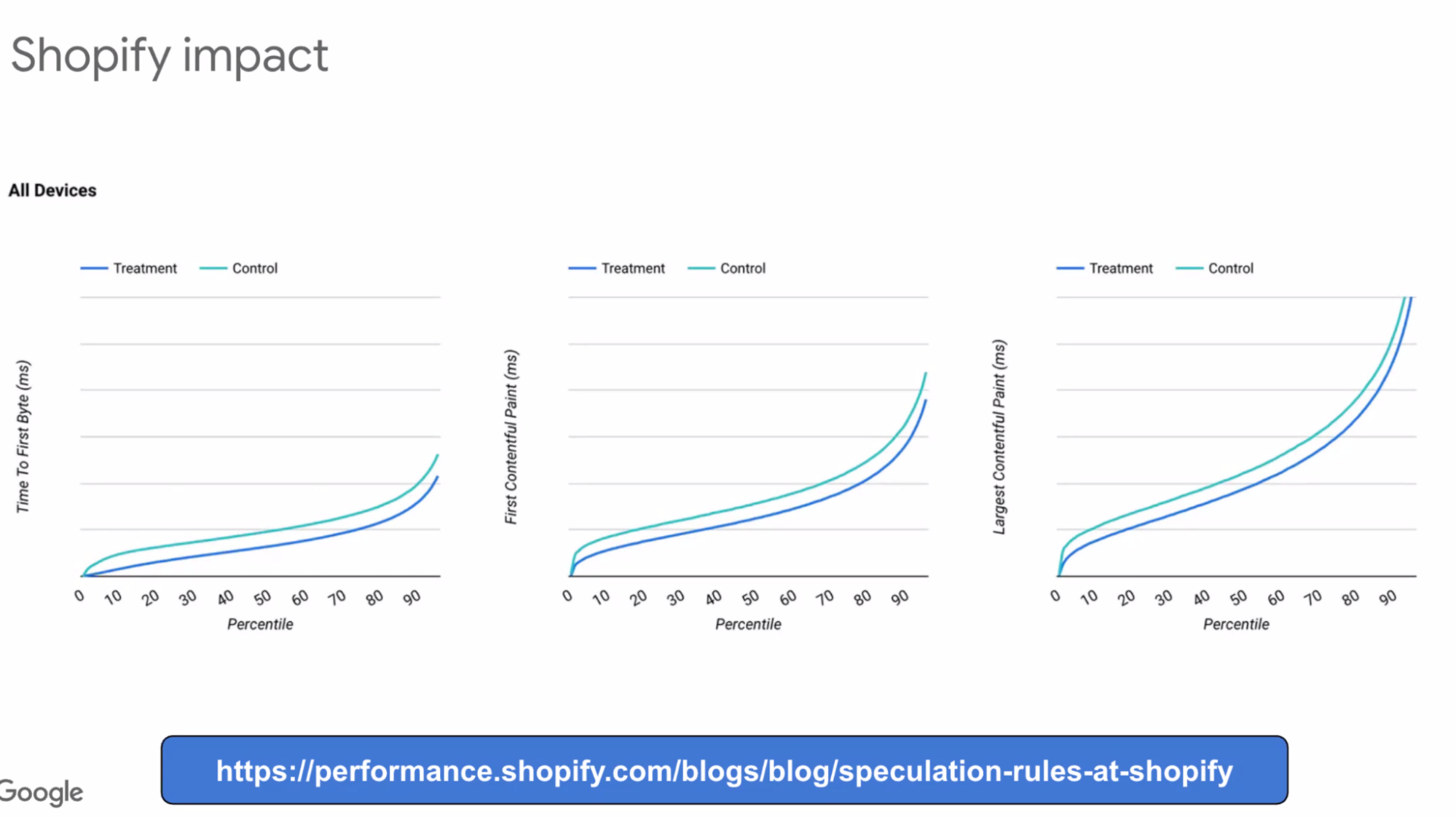

- ... Impact graphs

- ... Mousedown activated

- ... Improvements across the board across percentiles

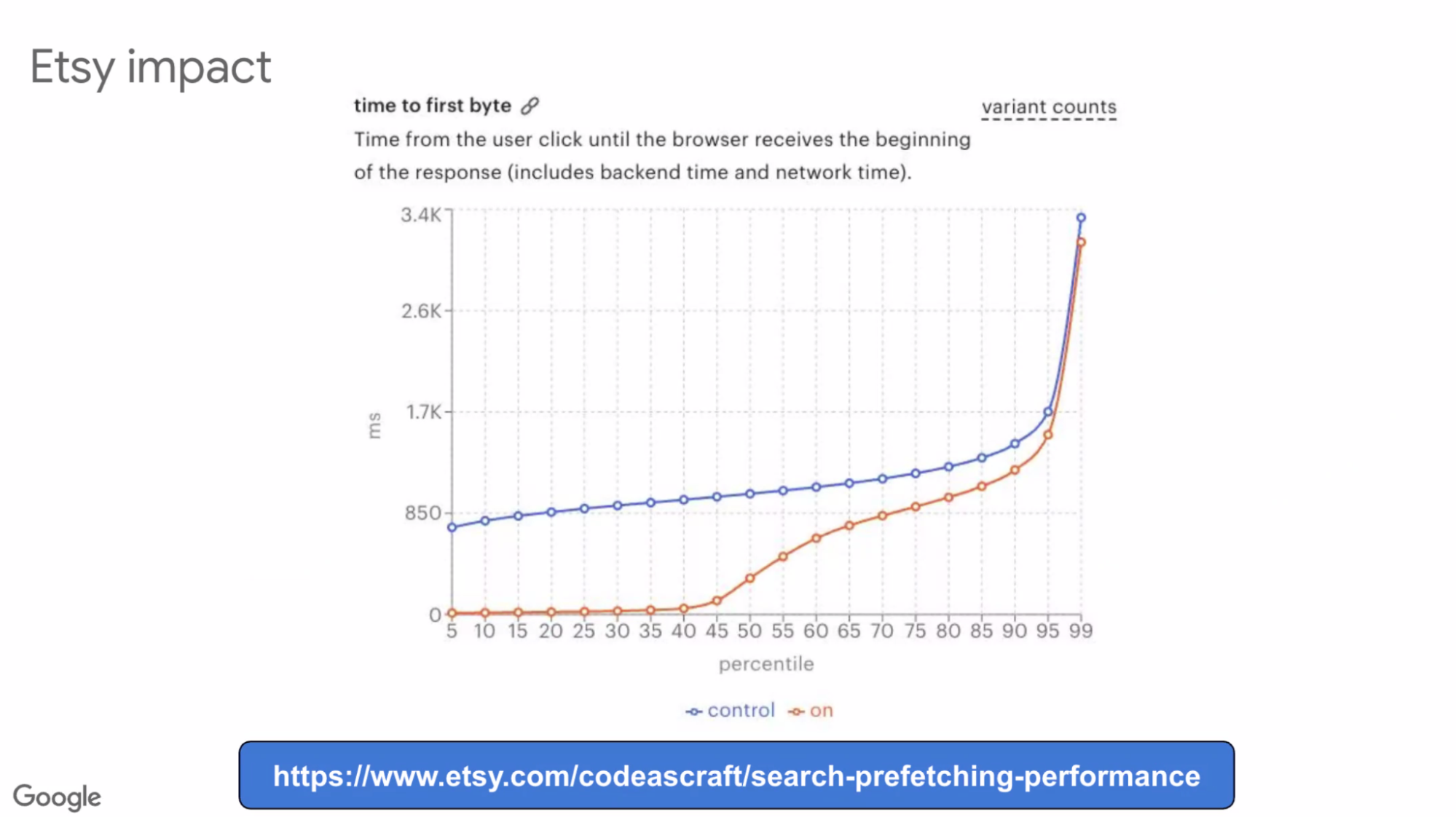

- ... Etsy is on hover

- ... Often has 800ms TTFB, up to 45th percentile that's down to ~0ms

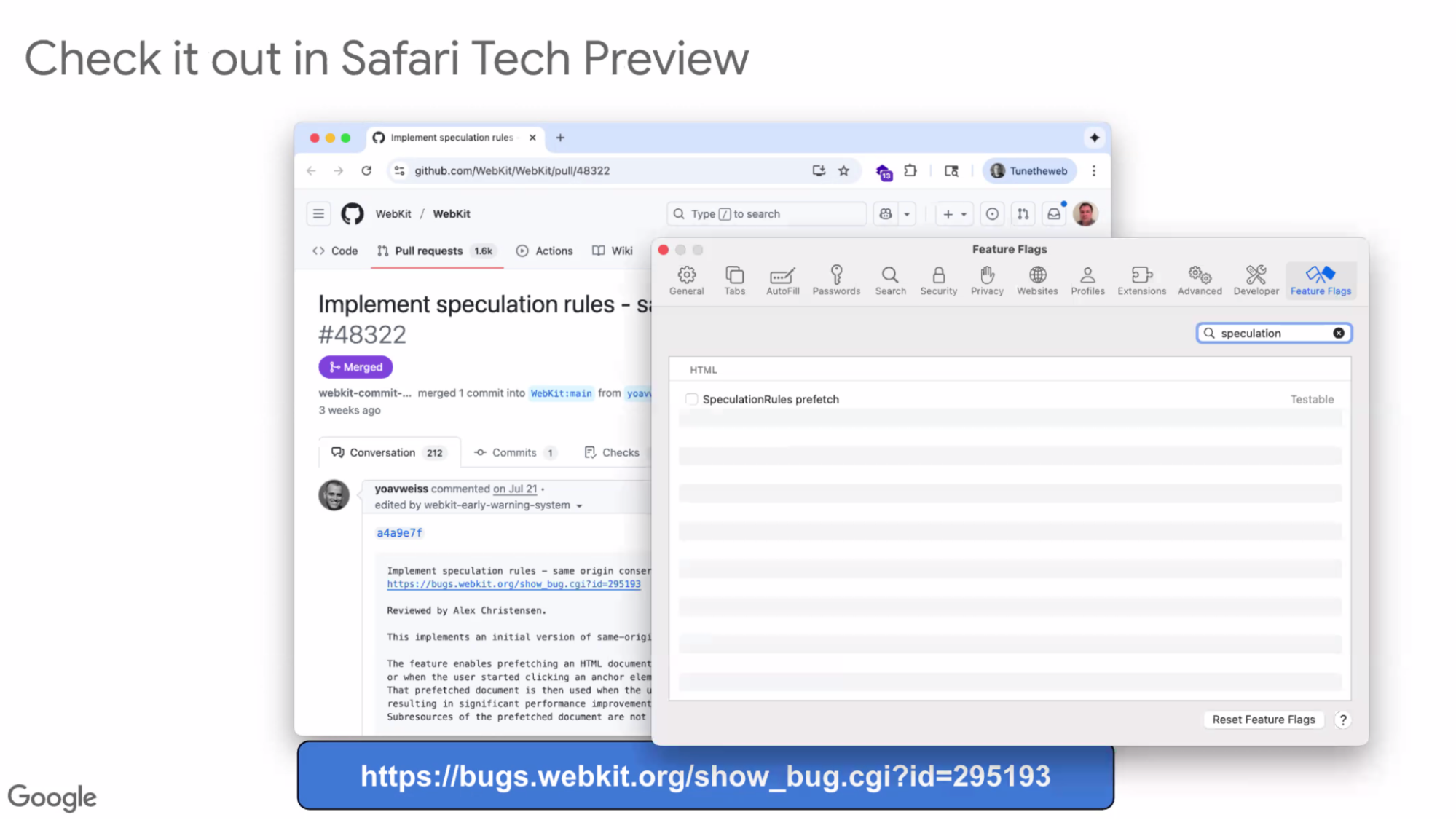

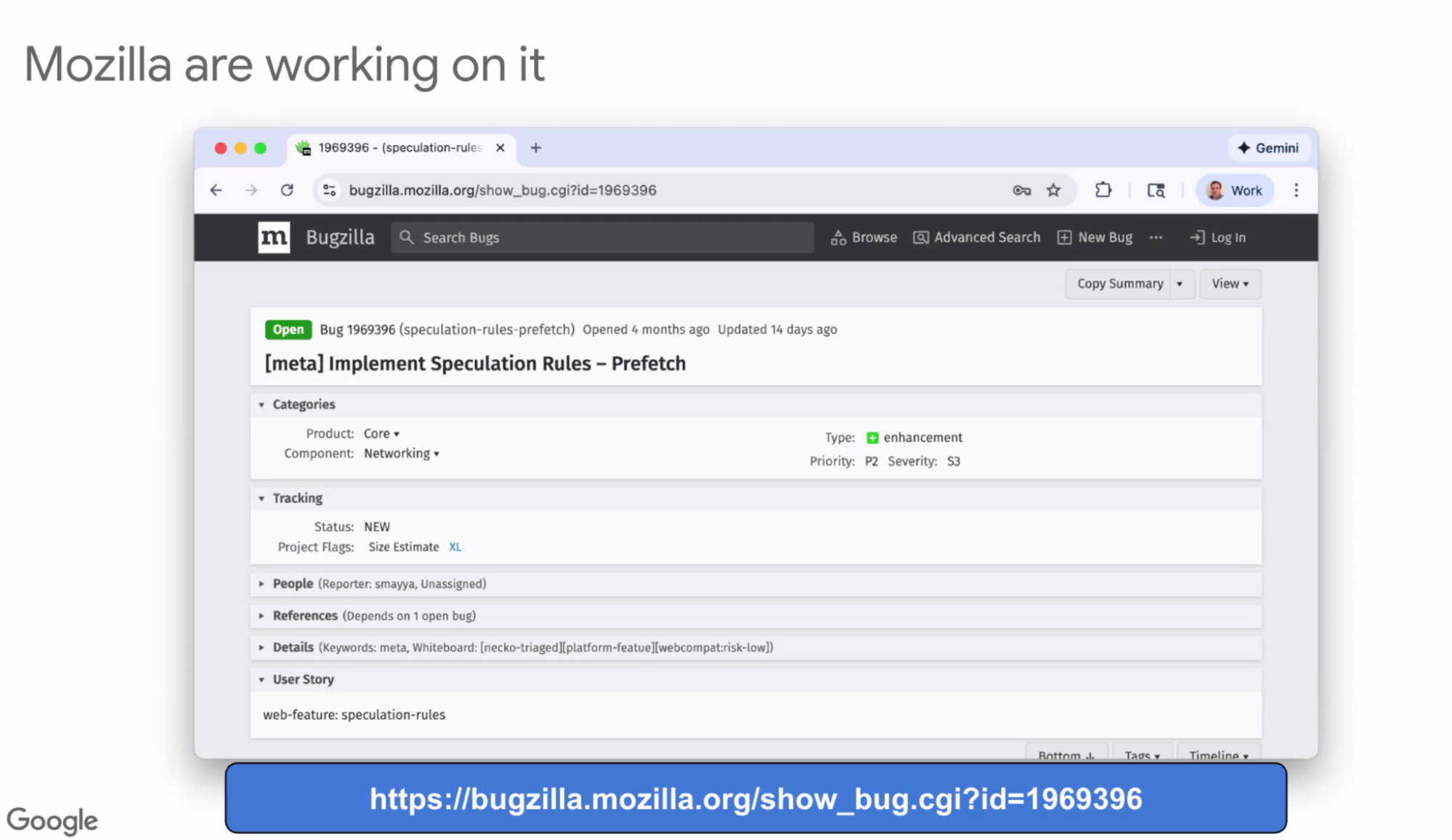

- ... Chromium-only at the moment

- ... Basis recently landed in WebKit

- ... First of many PRs

- ... Mozilla also working on it this and next quarter

- ... Ideally we'd have, if you're using prerender, and browsers don't support, would fall back to prefetch

- ... Ideally we'd get into Interop 2026

- ... Questions:

- ...

- ...

- nhiroki: Regarding same site X-O prerender, it's cross-site-cross-origin that is already supported

- Nic: Is there a way for a script to annotate that it’s fine with prerender for prerender-until-script?

- Barry: not at the moment. It adds some complexity but we talked about it, but not a lot of people were interested

- Nic: For an analytics script, it could be useful to be mark it

- Barry: you typically load those at the bottom of the page

- Nic: Some Akamai scripts insert themselves in the head but are prerender aware

- Barry: If there’s demand, let us know

- NoamH: Multiple ways to add the rules. Does the last one win? Are rules merged?

- Barry: Anything that matches works

- NoamH: Can there be a conflict if one excludes and one doesn’t

- Barry: The exclusion won’t work. Tags would allow you to see which rule worked

- NoamH: Cross-origin iframe prerender is enabled?

- Nhiroki: Still experimental using a feature flag

- Yoav: Would it be possible to somehow measure when these inline events refer to other scripts?

- ... Scenario that scares me is that inline event handler calls a function that should have been defined by a blocking script that is no longer

- Barry: Blocking script stopped, first thing run on activation, inline event handler isn't set up since it's above that

- Yoav: IMG tag that has online event, handler defined in script lower down

- ... A race that isn't lost in

- Kouhei: The onload function is blocking prerendering

- Yoav: When the image is actually loaded, onload will not fire if prerendering is blocked

- Kouhei: No guarantee, bug in the first case

- Yoav: Race isn't lost

- Barry: Cached image the race would be lost

- Michal: Onload events are async, no guarantee

- Yoav: Would it be web-safe to deploy? Can we measure their existence in the wild?

- Kouhei: Can't measure these ones

- Yoav: Can we measure that race condition? Inline event where it refers to something defined in an external script, you can measure this in terms of preload

- hiroshige: Measurement is hard, especially because it requires a deep analysis, catching of the error event may be helpful. Hard to measure. Those types of guarantee, trigger may effect other web platform changes. Slightly changing the timing of the event handler may have a hard trigger, internal changes may very slightly change task by one ahead, could trigger a bug report.

- ... This might be a risk. That kind of dependency may already be broken. Not just render and script changes, but other implementations and in the future.

- Yoav: With my deploying speculation rules as a platform as a hat on, if a website is written badly and it breaks that's their problem, if this is a flag I enable and it breaks, it becomes my problem. How big of a risk for race conditions?

- Michal: Async and defer script also gets postponed a lot longer than it would have been

- Barry: Continue prerendering then run later

- Michal: If it's a race, a race that was always won, and now defer could break it

- Barry: JS depending on JS

- Kouhei: Why don't we measure the amount of JavaScript errors, we can monitor while deploying

- ... Agree it's a possible scenario, change it's happening sounds low risk

- Yoav: You'll need platforms to participate in experiment to see those errors

- Michal: Platforms that opt into prerender_until_script

- Barry: Pausing inline event handlers is too difficult

- ... For us to update the code

- Michal: Can't measure errors until first inline script

- Yoav: Deploy prerender_until_script and see if there's a bump in the error rate

- Barry: Something that sites or platforms that turn on

- Yoav: I can see platforms experimenting before we determine whether it's safe or not

- Justin: Delivered as a script tag but it's actually JSON

- ... wanted to ask around scroll tracking

- Barry: Many signals where we slightly disable it

- Michal: prerender_until_quick, moment I click, now entire script has to run

- ... Moment where site looks interactive but it's not

- ... Do we swap first then run, or do we do any holding

- Barry: I think we swap first then run

- Lingqi: We have proposal to paint first then run the activation event handler

- Michal: In a perfect world, we'd wait some amount of time then get feedback

- Michal: Another question, hover on mobile. Order of events that fire, hover events the moment you touch before pointer down.

- ... Even tho it's in same task, opportunity

- Barry: Before we switched to mobile viewport things, clicking would trigger hover, but not same as multiple seconds you got on desktop

- ... Now in viewport, it's earlier any way

- ... Based on mouse-down

- Michal: It's Chrome's internal event that triggers hover

- ... Situations where a site's hover events are long-running, blocking INP

- ... On mobile it's left in there

- ... I wonder if that would affect our ability

- ... Depends on how it's implemented

- ... touchdown/mousedown hardware event, that will dispatch hover events/pointer over/pointer entered before pointer down

- ... Event dispatch could come later that it could

- Barry: Could be a micro improvement here

- Nhiroki: Experiment in feature flags, try on Chrome canary

- Barry: Origin Trial in January

- Yoav: On Interop, sounds too early for prerender_until_script, open questions that need to be figured out

Speculation Rules, link rel, preconnect

Recording

Agenda

AI Summary:

- The group proposed clearly separating “current-page” vs “next-page” preloading: `link rel=*` for the current page, and speculation rules (prefetch/prerender/preconnect) for anticipated navigations, with an eventual deprecation path for cross-page `link rel=prefetch`.

- A new speculation-rules `preconnect` action is being explored to restore cross-site preconnect in a world with network/state partitioning, while addressing privacy risks (TLS client certificates, partition keys, anonymization/proxy behavior).

- Participants discussed how to signal/observe preconnects (e.g., via headers, tags, or TLS-layer mechanisms like ALPS), noting current layering issues and the need for careful design.

- There was debate on whether cross-origin preconnects need full anonymization like prefetch, and how to balance that with complexity and performance, especially once a credentialed request reuses the connection.

- Developers would likely use speculation-rules preconnect as a more conservative, lightweight alternative or fallback to prefetch (e.g., preconnecting to a checkout domain), with explicit signals for dynamic or stateful resources.

Minutes:

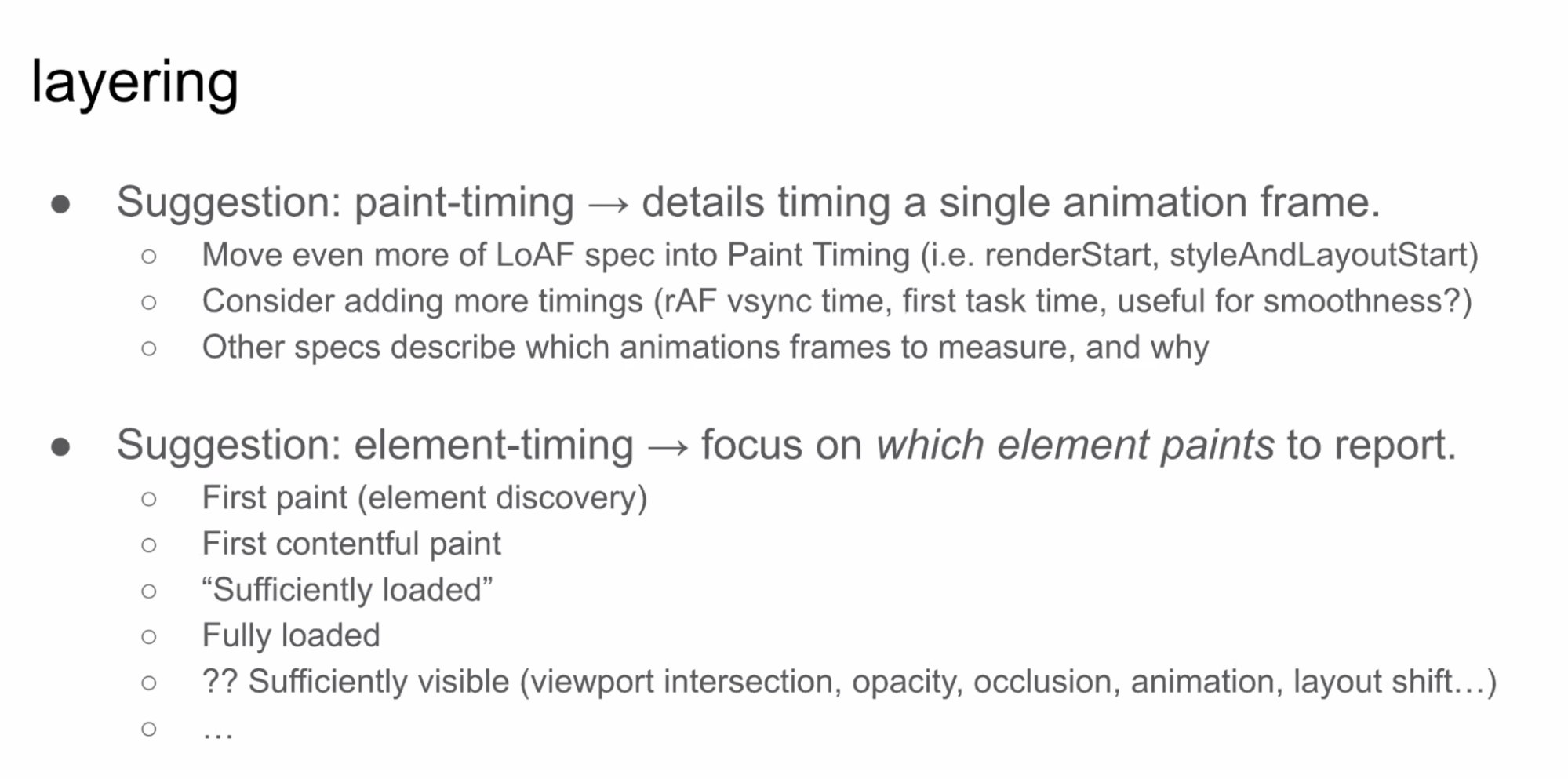

- Hiroshige: working on speculation rules. Want to talk about separating pre*

- … A few APIs to preload things. Either for the current page or for next page navigation

- … Different requirements around privacy

- … Different behavior and need a separate API for these two categories

- … Speculation rules prefetch and prefender work across site boundaries with privacy considerations covered

- … Adding more API in this category - speculation rules for preconnect

- … Want to propose separate API surfaces that make it explicit which category of preloading is needed by users

- … e.g. link rel=prefetch is mixing these two pages

- … Requires users to think about these categories and be explicitly aware of them

- … Separate API shape: link rel for the current page, speculation rules for the next page

- … Eventually would need to deprecate link rel APIs for the next page

- … Risk - requires users to move to the new API shape, but these APIs are already not necessarily working as expected, so suspect that the impact would be low

- … Would also require developers to be aware of these categories (added cost). But it’s better in the long term

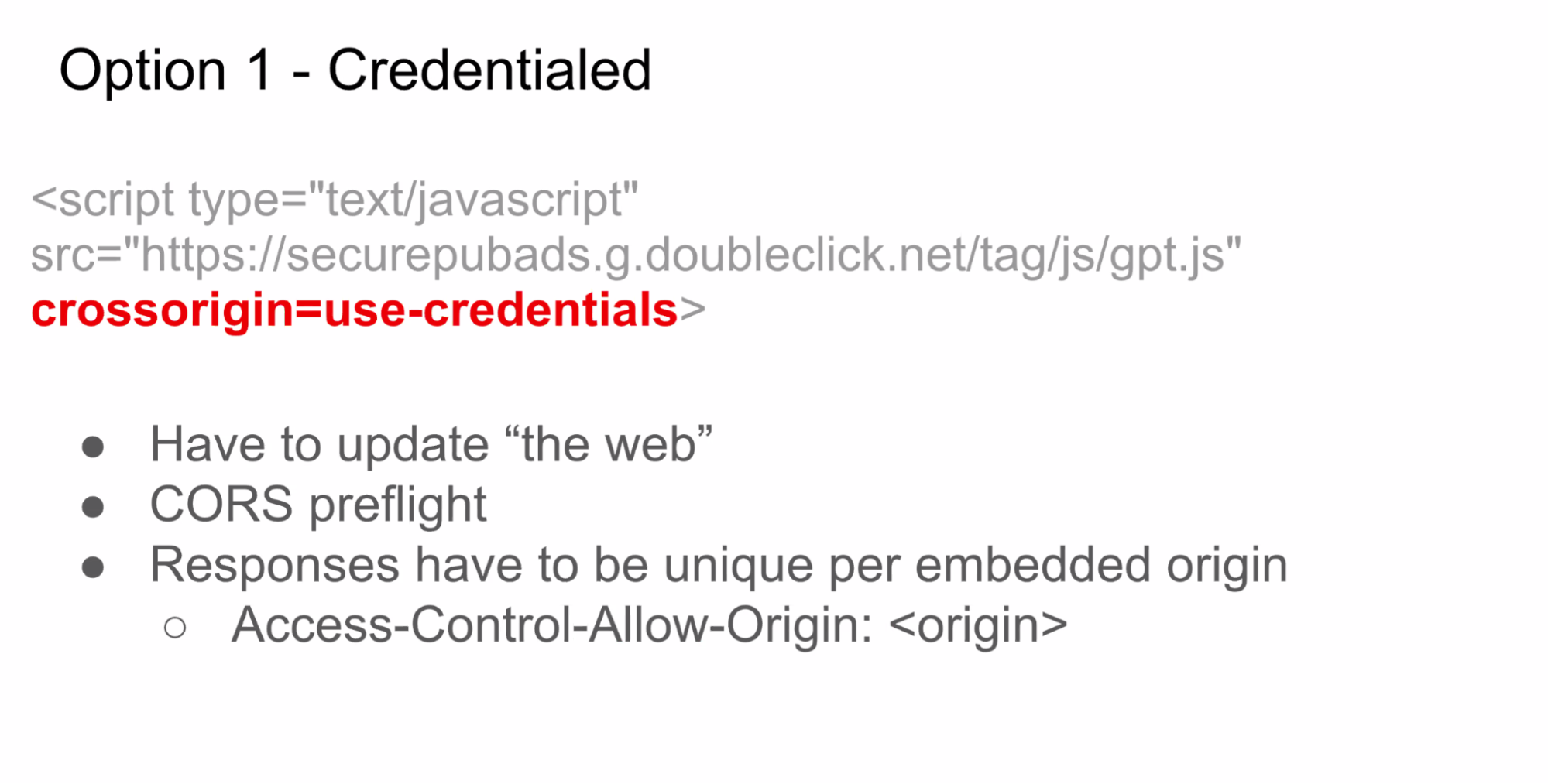

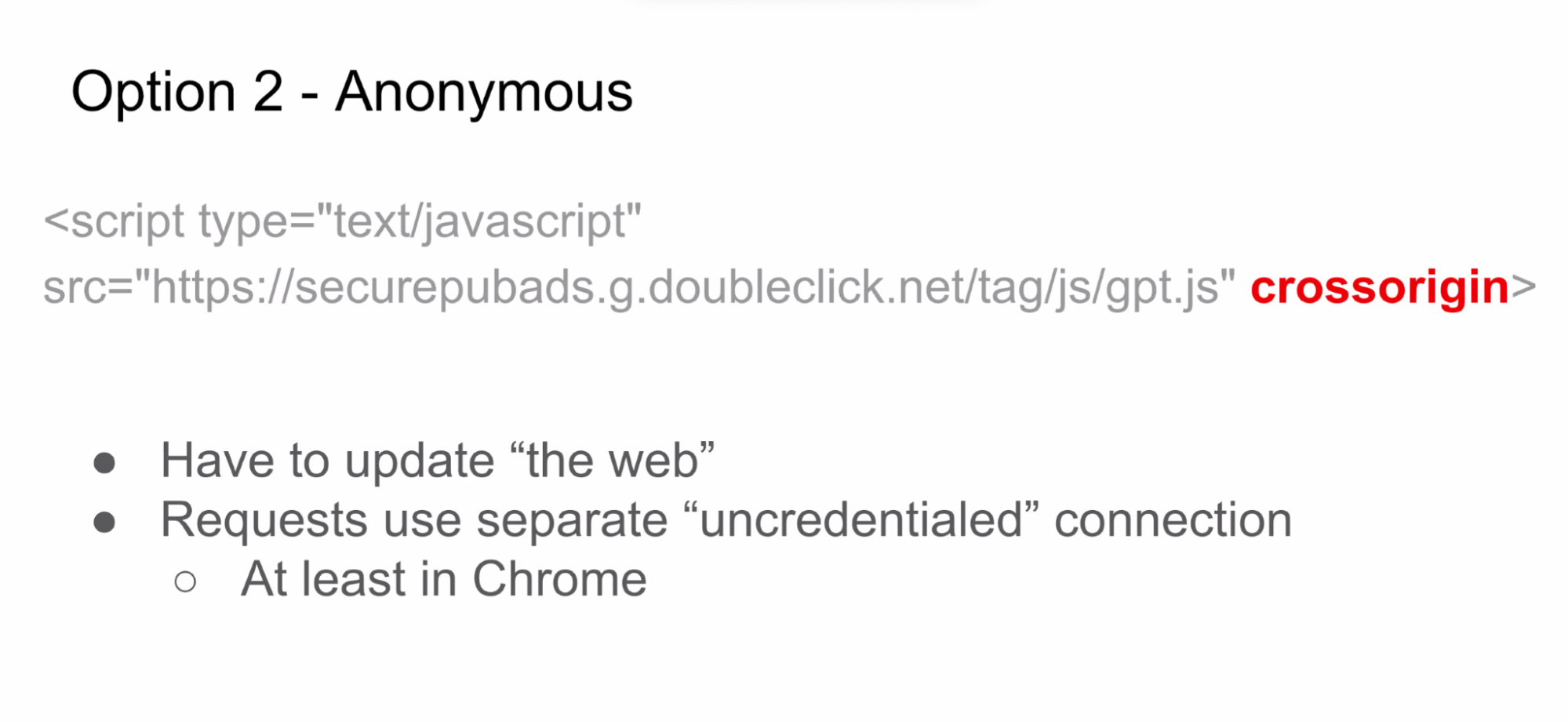

- Keita: Proposal to add a preconnect action to speculation rules

- … when we didn’t have network partitioning, it used to work for both subresources and documents

- … With network partitioning we need preconnect in speculation rules to have cross-site preconnect

- … Security considerations: used to work. Cross site tracking needs to be mitigated (e.g. eliminating credentials)

- … For preconnect the main vector is the TLS client certificates. (care less about cookies)

- … There’s also anonymization, preconnects would need to care about this

- … State partitioning - we’d need a different network partitioning key for these connections

- … In Chromium it’s 2.5 keys

- … In preconnect, we’d need to have the top-frame part of the key be the destination site

- … This may expose user browsing habits but that’s a broader concern

- … Wanted to ask if this is something useful in general and if there’s anything missing

- Ben Kelly: For anonymization, you’re going through a proxy. Did you measure the overhead of what happens if the entire resource then needs to be proxied?

- Keita: No numbers off the top of my head, but we’ll look into that

- NoamH: What happens with speculation rule tags?

- Keita: In the handshake there won’t be any way the server would know it’s a preconnect. No way to mark it right now

- Yoav: If that preconnect is used on request, could you add a HTTP header / tag?

- Ben: Reminds me we have a terrible layering inversion where some things are sent through TLS layer. Happens on preconnect. Might need to address how you'll handle that. Maybe in TLS layer. Could be a Client Hint.

- ... Opportunity to use ALPS

- ... When that flag is set, we don't do critical client hints negotiation, and things like that

- ... Needs to be addressed in your design

- Toyoshim: do we really need the anonymization of the IP address? The site can already fetch the cross-site. Preconnect for the cross-site is much cheaper than (what)?

- Keita: preconnect needs to be consistent with the existing pre*, so reasonable to have anonymization support

- Toyoshim: but using a proxy to connect to the cross-origin site makes sense, but using it for preconnect seems not reasonable?

- Ben: When you anonymize cross origin prefetch you're also not sending cookies, but with preconnect you could do anonymization and then send a credential request. That could be complex

- Eric: ALPS is complex. Separating between same-origin and cross-origin makes sense. In terms of separating the anonymization areas - preconnecting may not require it, but at the point you want to use the connection you’re in the same spot where you need it to be partitioned.

- Michal: what’s the use case for preconnect compared to prefetch? Could this be a graceful fallback to prefetch? Or do we want the developers to explicitly limit to preconnect?

- Keita: Could go either way. Depends. Preconnect is more lightweight

- Ben: Would benefit from some use cases. E.g. one domain for shopping and want to preconnect for a stateful checkout

- Bas: Could be a more conservative form of prefetch

- Michal: So dynamic resource that could change - a developer-explicit signal

Privacy-preserving prefetch and cross-origin prefetch

Recording

Agenda

AI Summary:

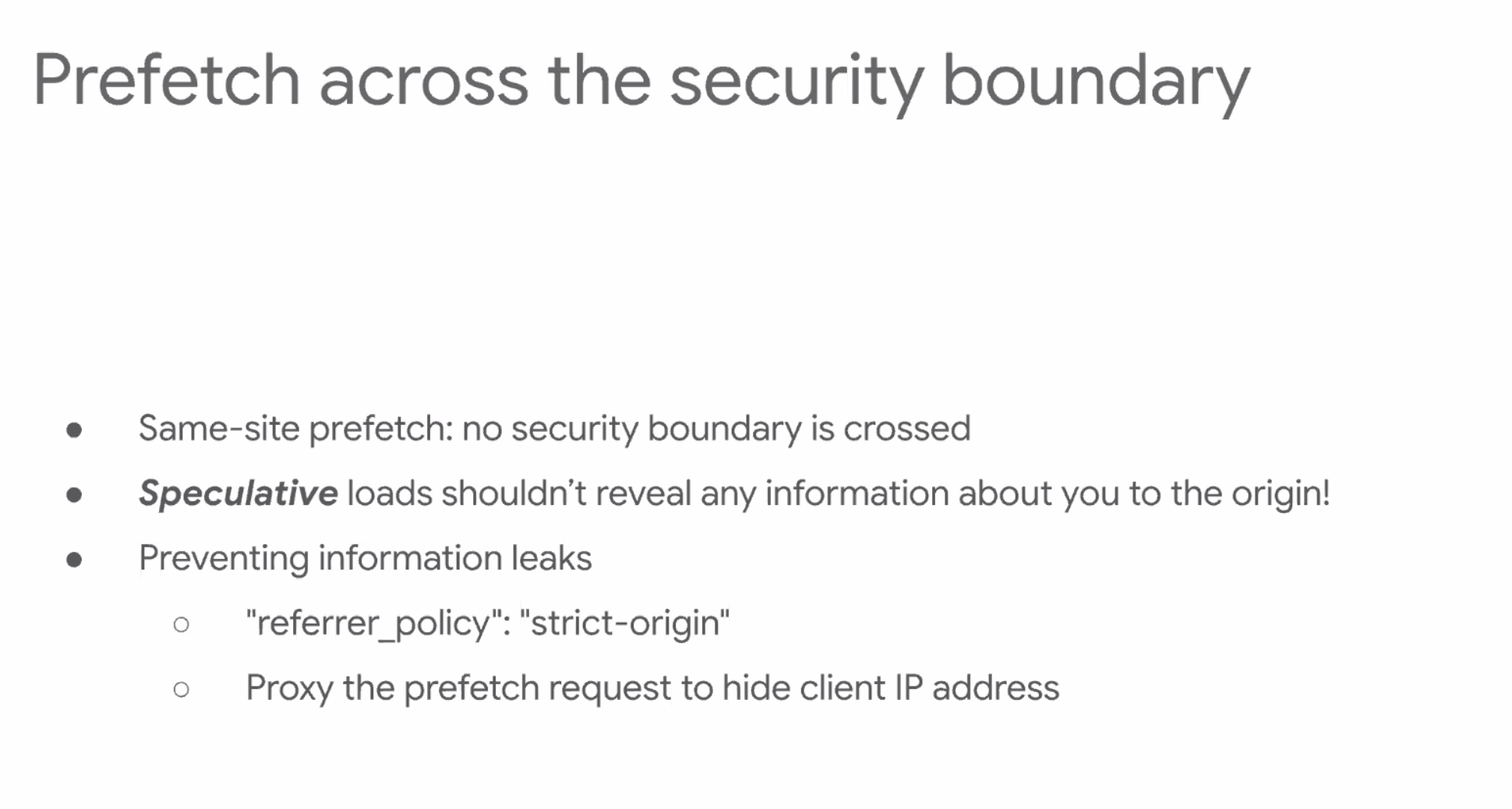

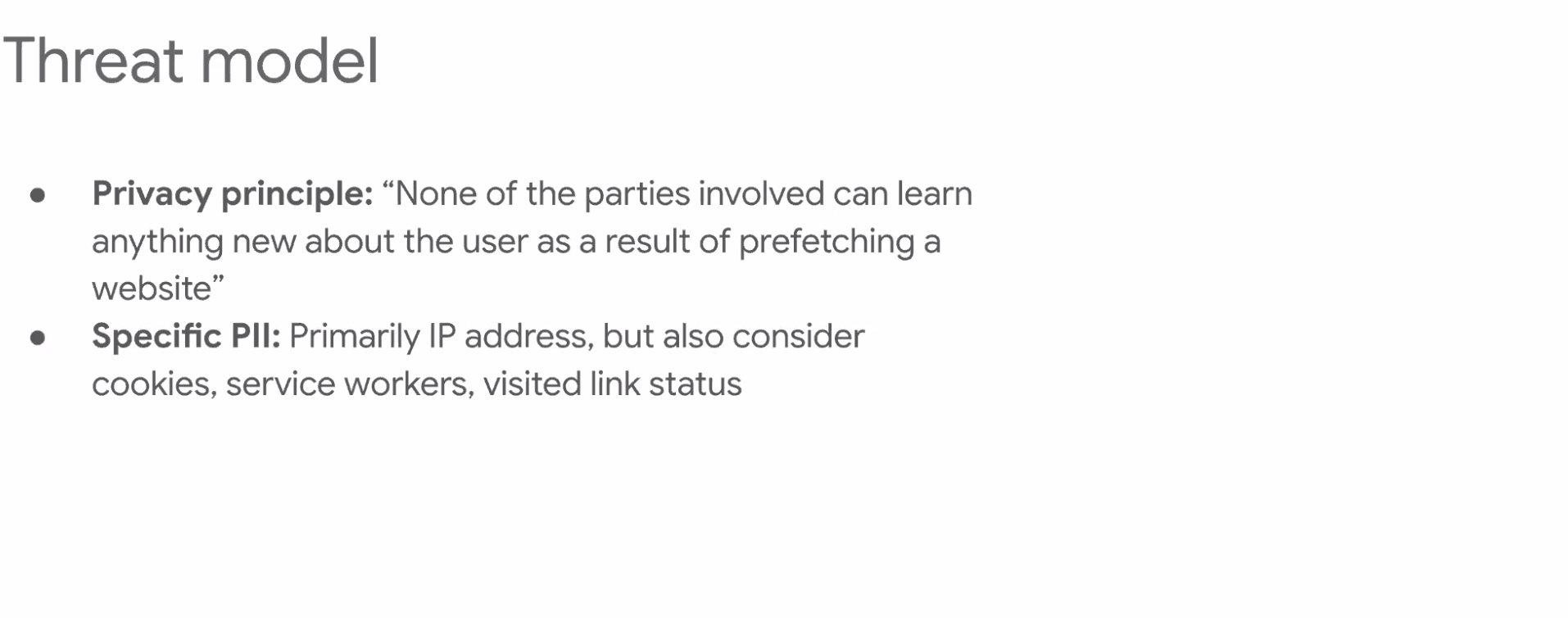

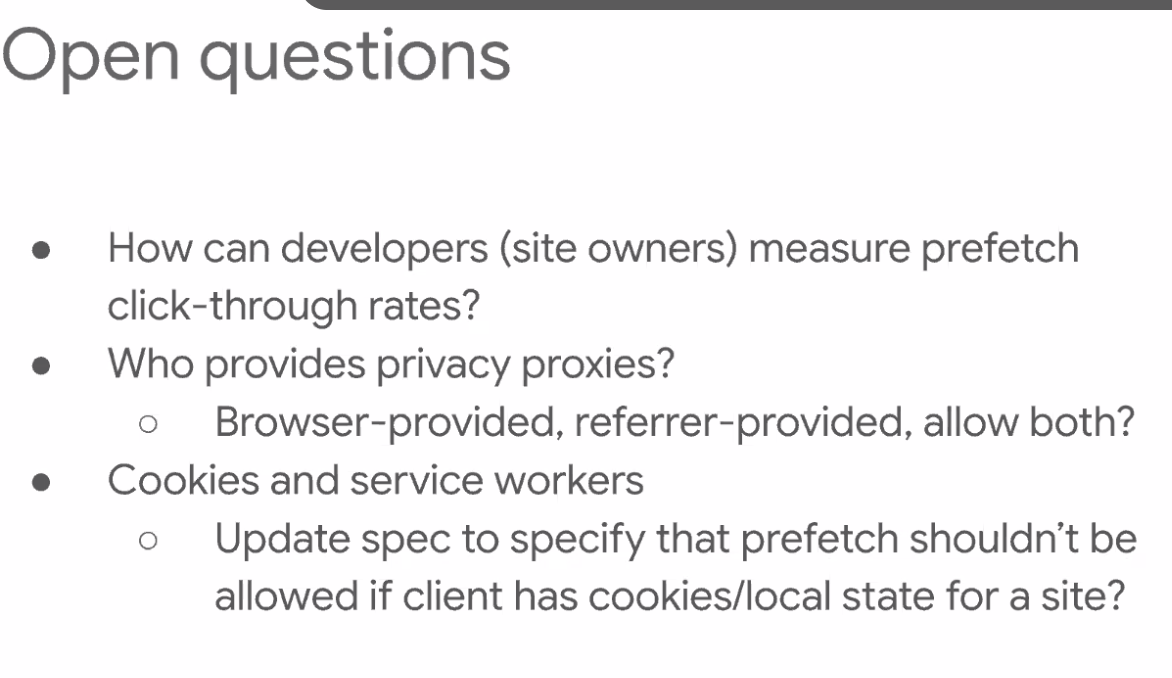

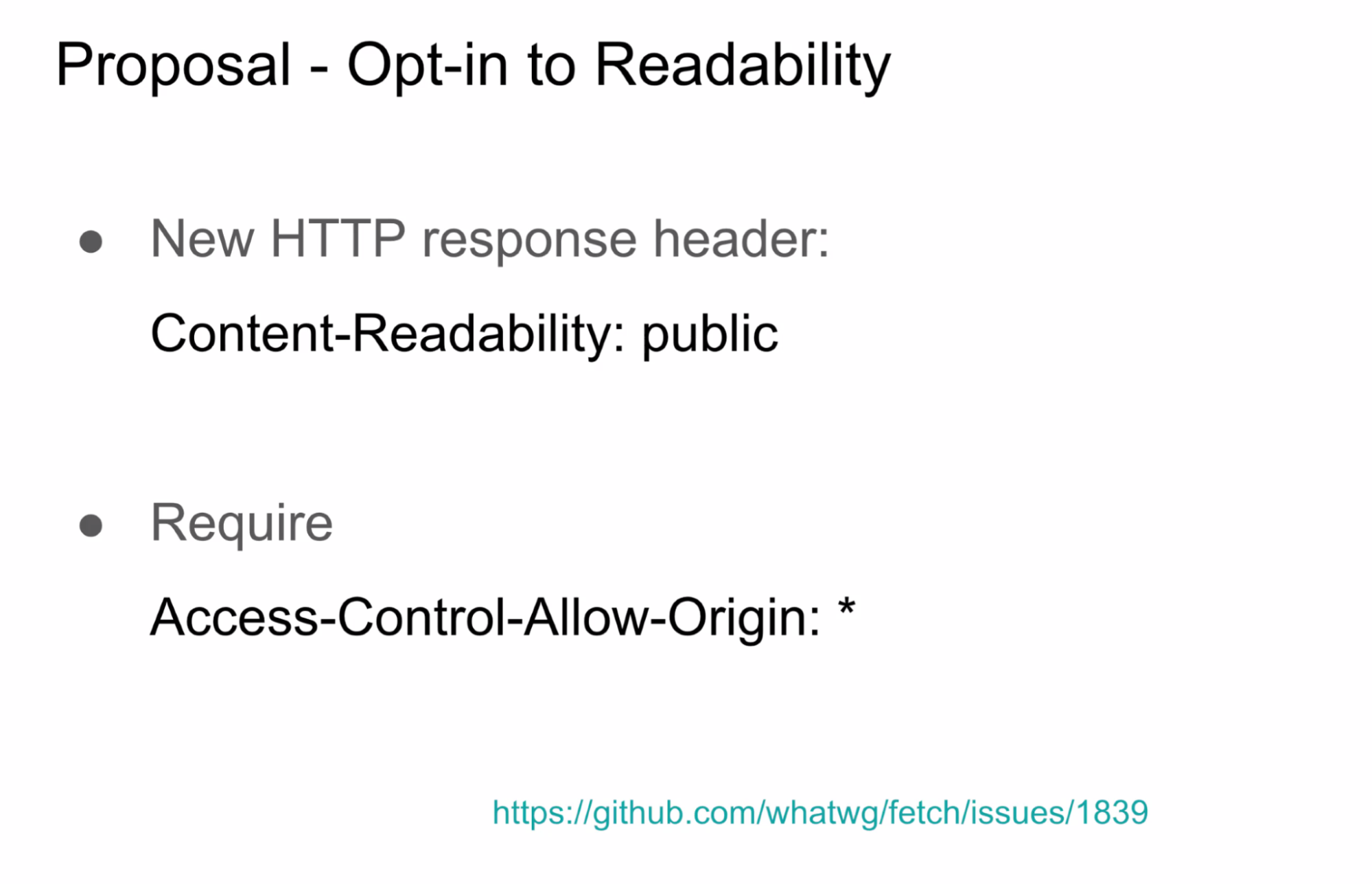

- Cross-site speculative prefetch should not reveal user-identifying information (IP, cookies, service workers, headers); existing specs mention “anonymized-client-ip” but don’t yet define a full anonymization model.

- Google’s Privacy-preserving Prefetch Proxy (P4) is an example of such anonymization in practice; discussion focused on defining a common threat model and deciding what else (beyond IP) must be stripped or partitioned.

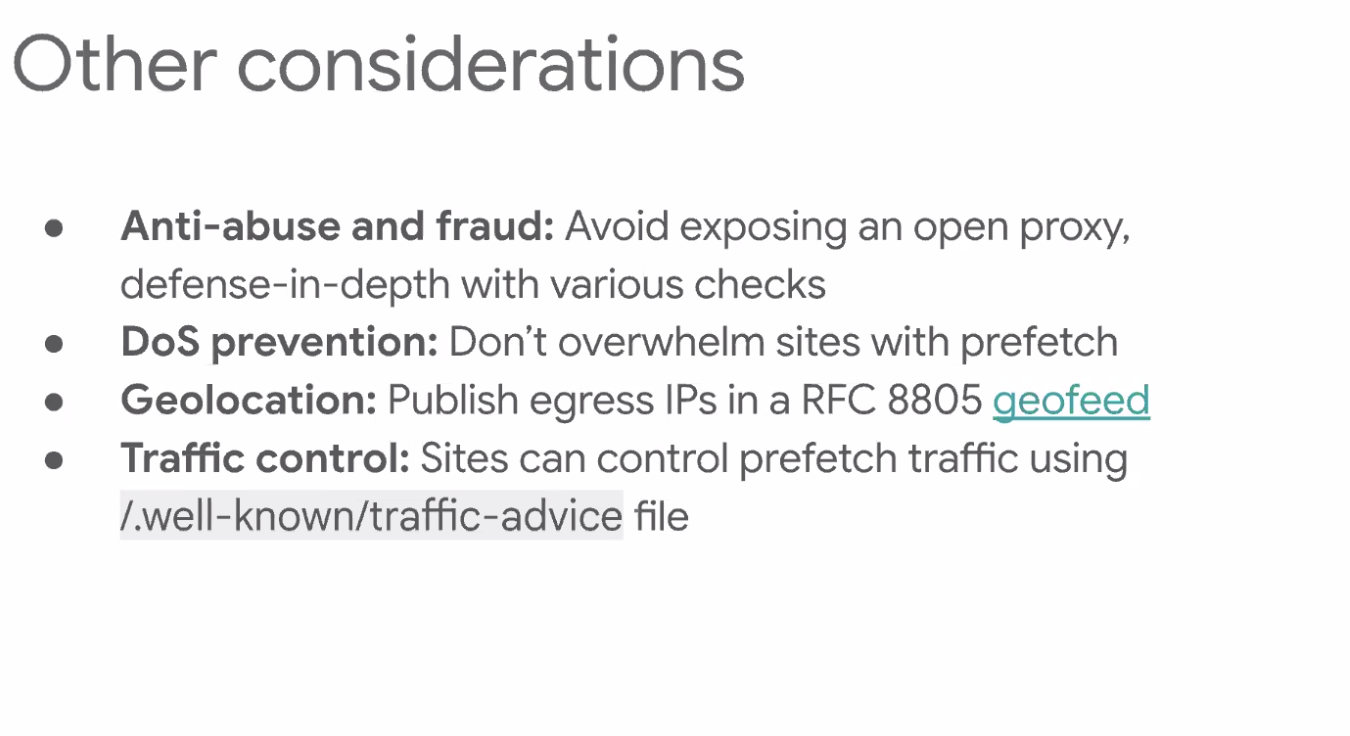

- The group debated “site-provided” (origin-owned) proxies vs browser-provided multi-hop proxies: origin proxies are easier to trust and pay their own costs, while browser-wide proxies raise competition, cost, and default-on privacy concerns (hence ideas like 2‑hop designs and token-based rate limiting).

- Concerns were raised about abuse, DoS, and tracking; most agreed these risks largely exist already, but mechanisms like `.well-known/traffic-advice` and PAT/token-based rate limiting could help responsible proxies limit traffic and amplification.

- There was also discussion of user trust and responsibility: with same-origin proxies, any response tampering is effectively the origin’s responsibility (protected by HTTPS tunnels), but UI/UX and attribution must avoid misleading users about where content really comes from.

Minutes:

- Robert: Cross origin and site prefetch - crossing site security boundary and a speculative load is not a direct load from the user, so should not reveal information about the user.

- … want to hide where the request is coming from, the IP address, and any cookies/service-workers that can identify a specific user

- … the spec states a need for anonymization but doesn’t talk about how it’s used

- … The HTML spec has “anonimzed-client-IP” but it doesn’t say a lot. Doesn’t talk about a lot of other things that should be anonymized

- … I’m maintaining Google’s Privacy-preserving Prefetch proxy (P4)

- … Other sites can also use it for users that have “extended preload” enabled

- … Want to build a common threat model

- … also want to strip identifying headers

- … Direct prefetch leaks all the above things

- …

- …

- … There’s a WICG issue for site provided proxy

- … Should the anonymized client IP also indicate that other identifying characteristics won’t be sent?

- … Privacy preserving preconnect could be a way to enable credentialed requests later on

- …

- Gouhui: for site-provided proxy, how can we trust that proxy?

- Robert: in the Mozilla proposal, having the proxy on the same-origin for the site, that’s easy to trust. An issue when it’s cross-origin.

- Eric: It has to be same-origin

- Robert: has the same information as the referrer

- Bas: That’s also true with a cross origin proxy, right? The site could already leak

- Yoav: You're making it easier, but Bas is saying it's already possible

- Eric: The client doesn’t need to do anything to cause that leak

- Bas: If there was a 3P setting up a malicious proxy, they can already do this

- ... I think the referrer provided proxy is interesting

- ... Unless you allow that, you are in a situation here services created by operators who also operate a browser are fundamentally advantaged, vs. 3Ps

- ... e.g. Google Search vs. someone else

- ... I think that is at conflict with equal and open web

- Yoav: I think there's also incentive and cost alignment if the referrer wants outgoing traffic to be anonymized, it has to pay the bill. Some browsers may not want to pay for all sites on internet.

- Ben: Right now P4 supports Google traffic, beyond there, it's a UA setting. Why is that?

- ... Capacity seems reasonable

- Barry: If you go to Google Search and we prefetch first (blue) link, going to Google Search proxy is fine

- ... If you want to use Google Search proxy, Google doesn't know anything about that. Chrome does.

- ... We don't allow that by default but you can opt in.

- ... Opt in rates are low

- Ben: Makes sense, but what I'm taking away there. Thread model of P4 proxy, doesn't have any double-blind scenario, they have a trusted environment

- Barry: We could have a browser proxy, any site to protect anything. A level removed here.

- ... Google.com has a proxy, only one to use it.

- ... Ideally allow bing.com to use their own proxy.

- ... Should browser have their own proxy?

- Eric: Making that on by default instead of opt in, is why Private Relay is a 2-hop proxy

- ... In order for it to be by default, 2-hop so Apple doesn't learn

- ... Masked proxy, multi-hop connect.

- Yoav: In same-origin proxy scenario, you don't need 2-hops

- Eric: Origin provided proxy, 1 hop is fine. Browser provided, 2 hops is needed.

- Barry: Is there any other interest in other sites providing their own proxy, e.g. bing.com or Shopify

- Ben: In scenario where origin is providing own proxy, does browser know if it's "good"

- ... Spamming end destination. All of those problems could manifest.

- Bas: For DOS'ing, I don't need a proxy for this

- Ben: Which of those considerations are those things this group needs to care about in order to enable this?

- Bas: Not malice but incompetence

- ... For malice all those things you can do already

- Yoav: If building, you could DOS all the sites you're linking to

- Ben: Could I put a tracking parameter?

- Yoav: Just do it on the website

- Barry: Once at proxy, no real way to understand what happens after that

- Eric: We use PAT for private relay stuff, you could do same thing here

- ... I'd need to fetch a token from proxy, provides natural rate limiting

- ... How much is this proxy allowing to amplify traffic

- Bas: If I'm colluding, could I do that anyway?

- Eric: Taking infinite tokens, I'm causing proxy to do that

- Yoav: DNS concern is around bots and a single IP creating a lot of traffic

- Eric: Bad actors can do that today

- Robert: Regarding bad actors, I think it's good to have .well-known/traffic-advice file. Standardize?

- Barry: Dependent on proxy being implemented correctly, and listening

- Robert: Good proxies could listen to that file if in spec

- Ben: When is that read? Is there some delay?

- Robert: Proxy is reading the traffic-advice files and caching with HTTP semantics

- Yoav: So destination origin controls how often it can update effectively

- Barry: My experience it's not used much

- Bas: Not used that much because cross-origin prefetch isn't used outside Google Search

- ... if other situations where speculation rules were used liberally

- Barry: I could see other sites using it

- Bas: Competition problem, where a smaller browser couldn't fit the bill for that

- Michal: Focus was on referring origin, and having proxy not DOS

- ... As a user I trust UA on the site the result wasn't tampered with, if a referring origin can opt into a proxy, is it delegating trust

- Barry: HTTPS

- Robert: Proxy opens a H2 connect tunnel

- Barry: HTTPS confidence

- Bas: Does it work for non-HTTPS?

- Barry: No

- Yoav: In terms of trust, even if we didn't have that, same-origin proxy it's still same origin

- ... If proxy would modify the response, the origin's responsibility because it's same origin

- Bas: But it could appear to user it's coming from 3P origin

- Yoav: You’re right, I’m wrong

- (voice-over: the first time)

- Robert: Giving a breakout on Wed at 8:30am

Scroll Performance

Recording

Agenda

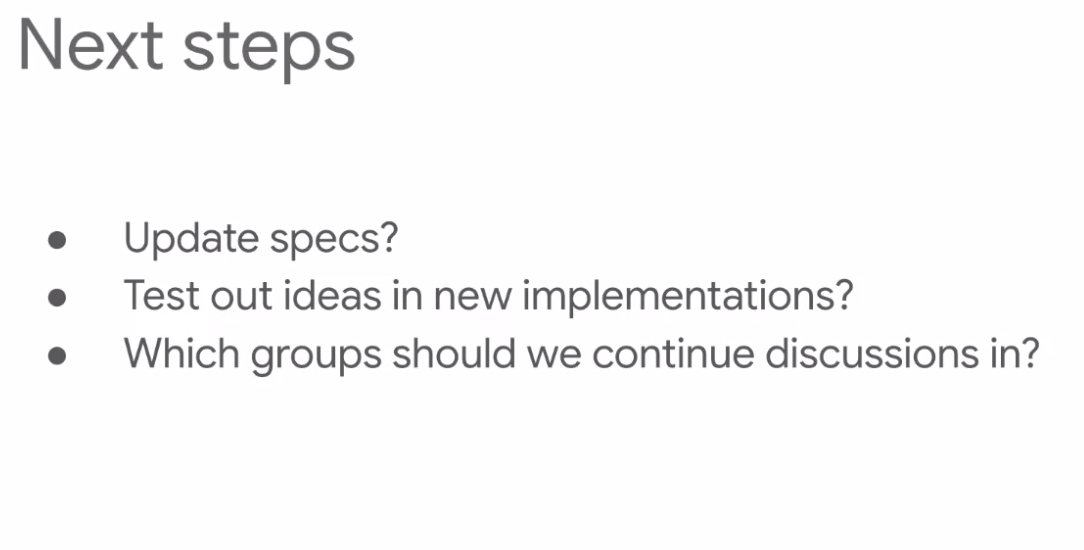

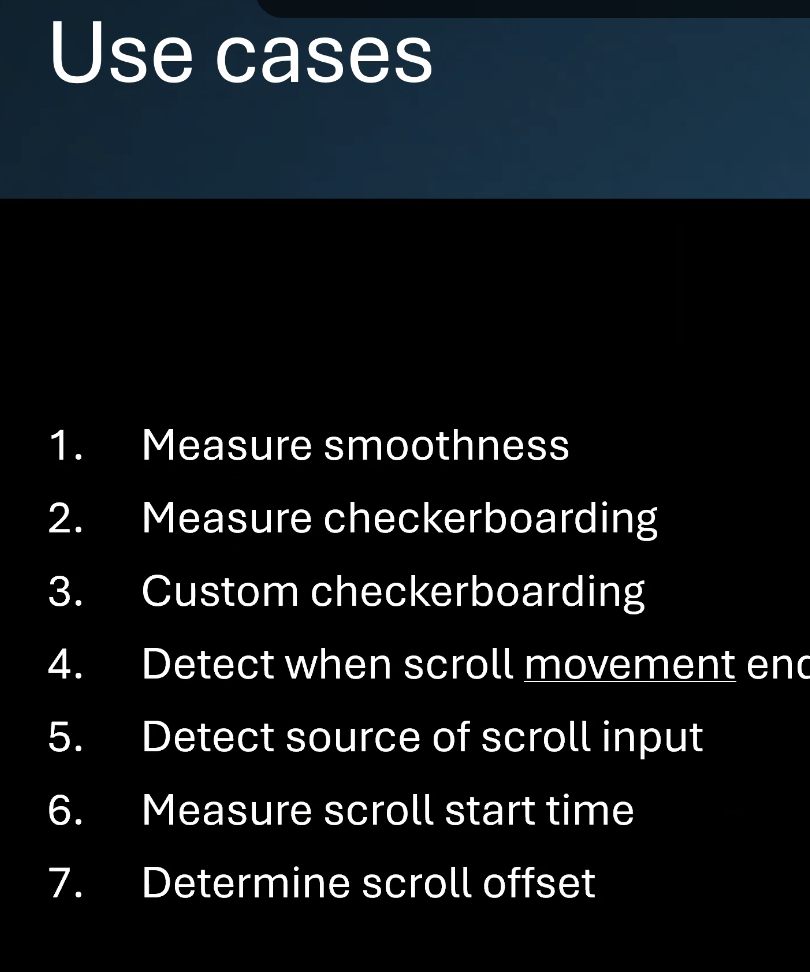

AI Summary:

- The discussion focused on improving web scrolling UX, especially for complex apps like Excel, by defining and measuring “good” scrolling in terms of both smoothness and interactivity.

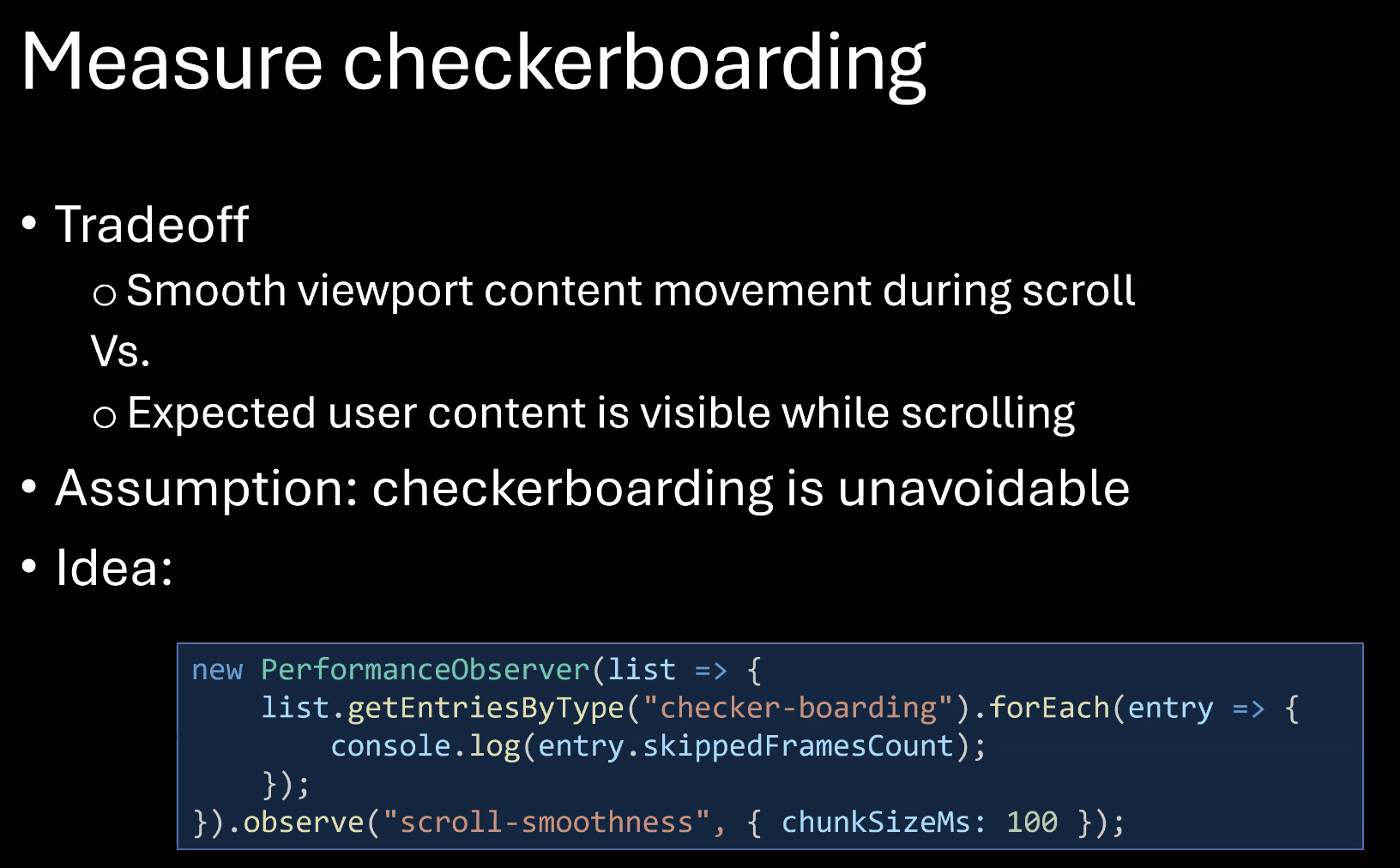

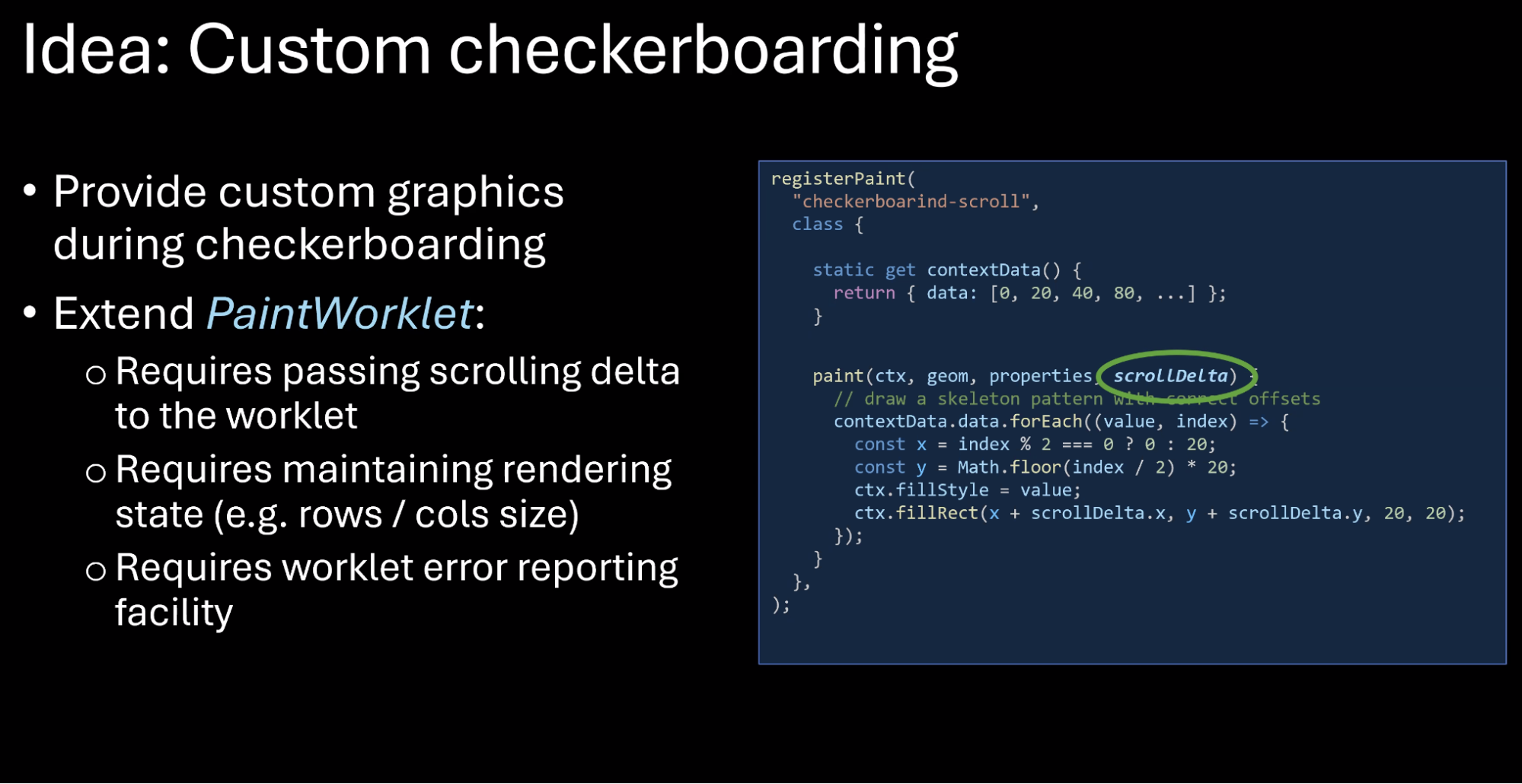

- Key use cases included: detecting and mitigating checkerboarding (missing content during fast scrolls), accurately detecting when scrolling ends (e.g., a lower-latency `scrollmoveend`/`scrollpause` event), knowing scroll start timing, and reading scroll offset without layout thrash.

- Measuring scroll smoothness and compositor jank (beyond main-thread LoAF metrics) was seen as valuable both for product quality and for performance regressions, with interest in compositor-driven metrics similar to native Android scrolling metrics.

- There was exploration of using or extending PaintWorklet (and possibly more declarative approaches) to render better “checkerboard” placeholders during scroll, though concerns were raised about complexity, limits on data access, and the risk of blocking compositor threads.

- Privacy and fingerprinting concerns were noted around exposing scroll input source (e.g., trackpad vs scrollbar), and participants agreed the proposals should be broken into smaller, independent pieces that can be evaluated and shipped incrementally.

Minutes:

- NoamH: Working on Excel and scrolling is something I’m working on

- … Lots of users don’t pay much attention to it, but it’s really hard to provide a good scroll experience

- … Mainly because there’s no definition of what’s “good” is

- … combination between smoothness and interactivity

- … Trying to get more info to make improvements

- …

- … will outline the usecases with a bunch of API proposal starting points

- … Scroll smoothness - researched in the past

- … Ongoing work done by microsoft

- … Checkerboarding - fast scrolling can result in user not seeing the content, due to compositing not sending the data fast enough

- … optimizing smoothness over content

- … There’s no way to avoid it, but we can measure it

- …Also maybe we can provide a better experience. Currently users are seeing the background color.

- …Could be achieved by extending the PaintWorklet API

- … Second use case, detect when scroll movement ends

- … scrollend event is async and not firing fast enough

- … can introduce scrollmoveend event to be faster

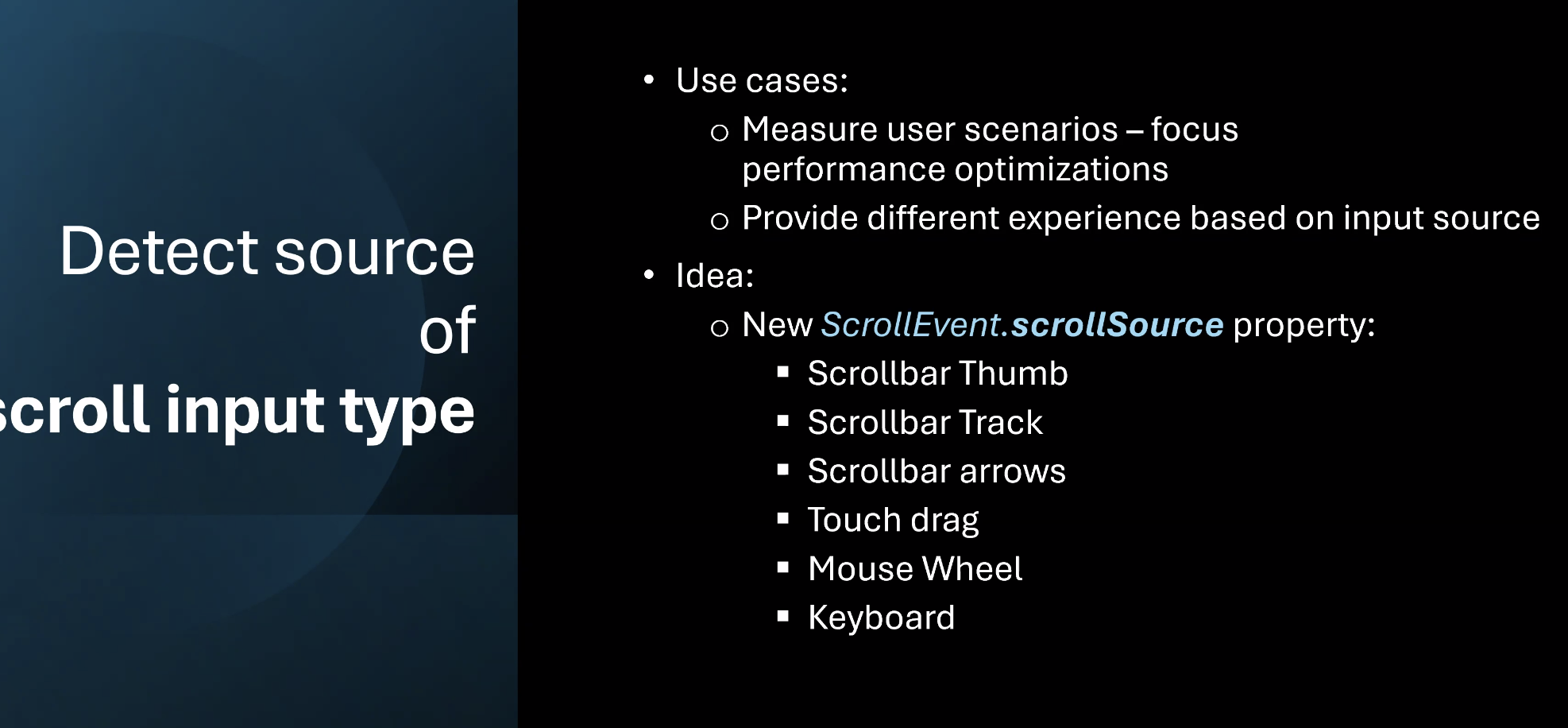

- … usecase - detect source of scroll input type

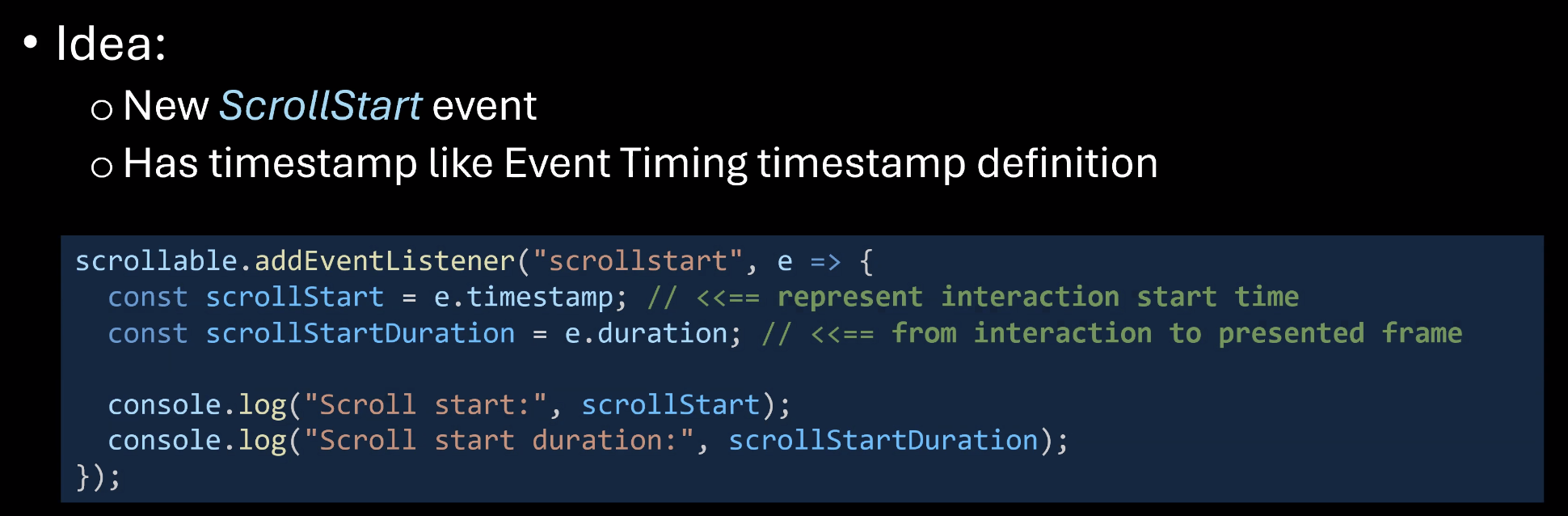

- … Measuring scroll start time

- … There could be a gap between the user’s scroll interaction and the first event. No way to measure it

- …

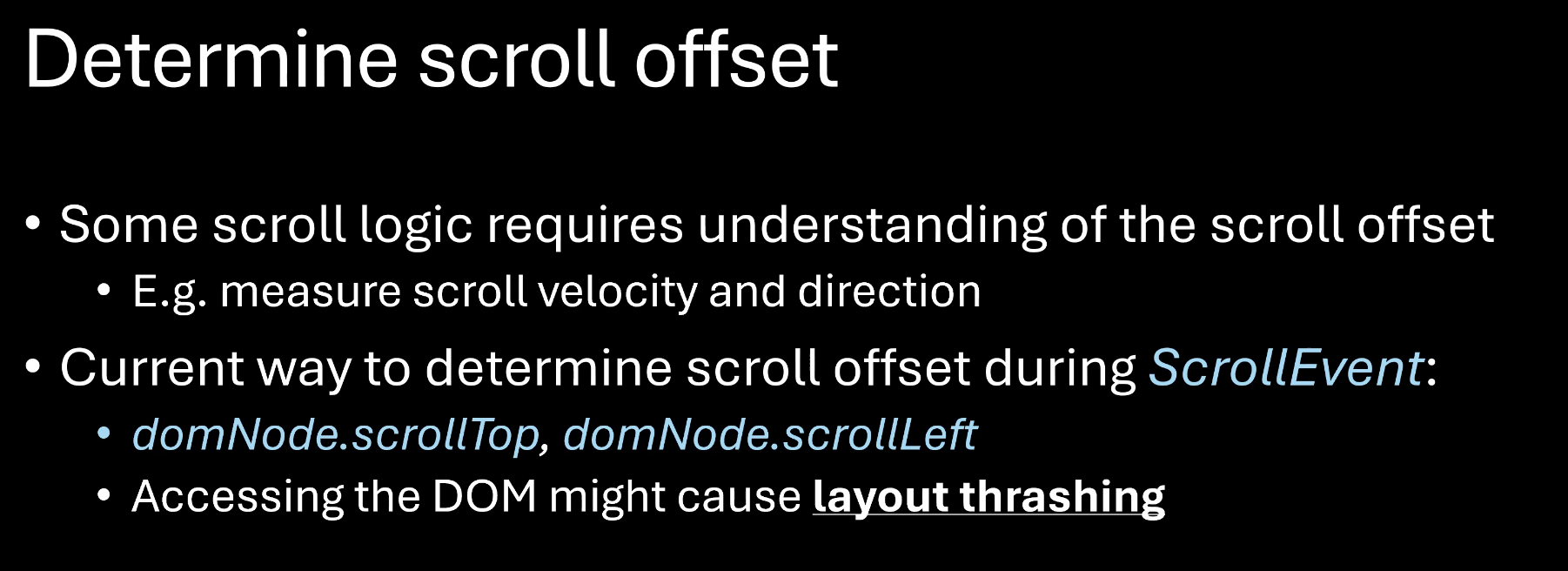

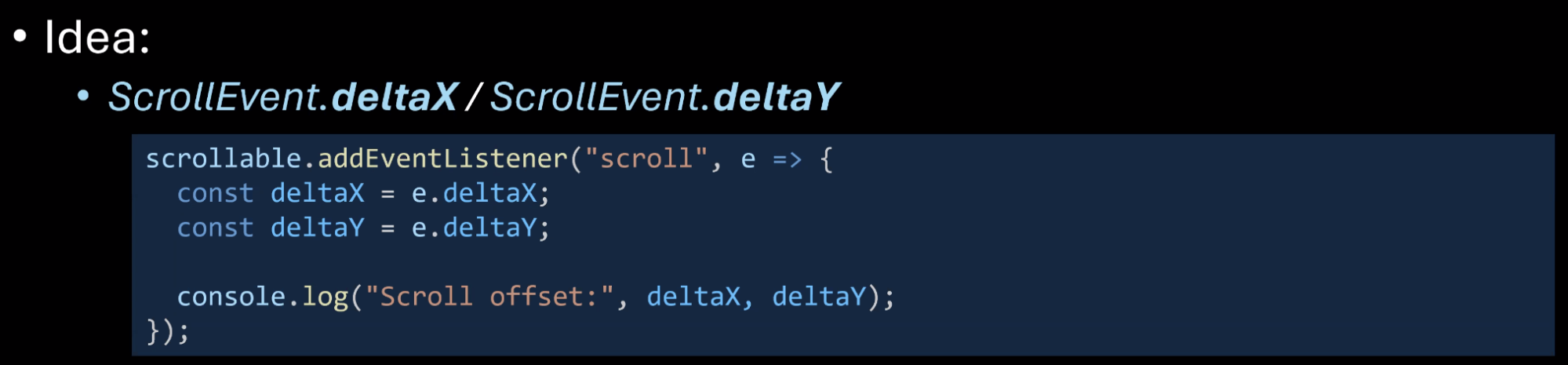

- … Determine scroll offset

- … Current methods can introduce layout thrashing, so..

- Benoit: Spec like this would be interesting for Facebook and other websites

- ... It feels like everyone tries to do this incorrectly with requestAnimationFrame

- ... Getting parity with metrics we can get on android, native scrolling metrics

- ... Two components, whether you're checkerboarding plus frames per second

- ... If drawing commands are too complex, getting feedback from that would be useful

- ... Did you consider looking into getting feedback from compositor for FPS?

- Noam: Considering, using rAF loop with all its drawbacks and problems

- ... Look at compositor could be more reliable

- Eric: Most makes sense. Source of scroll input, is there a fingerprinting vector. Accessibility devices. One of small amount of people using a specific style.

- ... Does knowing user grabbed scroll bar vs. swiped, is the use-case clear? Opportunities for fingerprinting higher

- Noam: Does provide more information for trackpad vs. scrollbar, privacy concern?

- Eric: Seems like it could be

- Yoav: Seven different proposals here, if you solved some of it would it give you some value? Or do you need all the things?

- Noam: Separate independent proposals, solved individually, incrementally, maybe some never solved or addressed (privacy issues or technical limitations), any one will solve use-cases for many users

- Michal: I love breaking it down into small chunks.

- ... On measuring smoothness, what could you do with it?

- ... Having a measurement would be useful to motivate other fixes

- ... Real-time measure of smoothness would be hard. Could sites adjust to be more smooth?

- ... In aggregate, invest engineering resources into optimize

- Noam: For why smoothness? Even with limited way of measuring smoothness with rAF loop, users are happier, more satisfied when interaction is smooth. But not satisfied when they see checkerboarding.

- ... We aggressively track many metrics, any time one regresses, we go to that engineer causing that regression

- ... Can potentially roll back that change

- Michal: Sold on value of measuring smoothness. Main-thread jank is somewhat measured by LoAF.

- Noam: While scrolling using LoAF observer to use hint, but it's not enough and sufficient indicator of checkerboarding

- Bas: Not an animation frame when janking up scrolling

- ... scrollmoveend, is that heuristics? Request browsers have a consistent end?

- ... Last scroll may not coincide with stopping of scroll

- Noam: Want to measure smoothness or other aspects, and respond to different things

- ... During scroll we pause fetching resources or other activities

- ... Not just scrolling as an interaction, from first scroll to last that's our movement

- Bas: Reducing latency to know when scroll is stopped

- Michal: Propose scrollpause

- Noam: OK to bikeshed on name

- Bas: Measuring smoothness vs. checkerboarding, compositor jank when scrolling is quite interesting

- ... Know there's interest in motionmark development group, having better ways of knowing when things aren't smooth just measurable from main thread

- ... Goes into any time of compositor driven animation

- .... Proposal somewhere

- Noam: Link in presentation

- Bas: Custom checkerboarding handling, interesting, having a paint worklet, has to work in compositor

- Noam: Already does

- Bas: Can, but doesn't have to

- Noam: Worklet driven by compositor thread, has some time to finish drawing activity then gives back

- Bas: Could be true in some implementations

- ... One of the reasons compositor smooth, is because you can't run javascript in compositor blocking manner

- Noam: Can perform limited set of drawing activities, could be abused with a long loop

- ... Compositor doesn't wait or little for worklet

- Yoav: Could something declarative, background color or image

- Noam: Can be done already, but won't be animated

- ... if we can declaratively say it can be moved, that would help

- ... Challenges for use-case in Excel, varying geometry of the grid, it would be expensive to regenerate images and swap them

- ... If you don't create images correctly, you can't get a smooth experience. Not just a single axis scroll, X and Y.

- Yoav: Even with SVG, can't do the same

- Benoit: Would like to see more motivations of using checkerboarding, difficult to use correctly, more supporting examples would be helpful. How would you even use it well for Excel? For a news feed?

- Bas: PaintWorklet is limited on what data it can access as well.

- Noam: Reduced experience, but we think we can do it. Could maybe be achieved with static image.

- Michal: Regarding checkerboarding, can you rAF loop in worker, to get a sense of compositor framerate. Main thread performance is biggest cause of checkerboarding. I suspect there's some implementation issues e.g. in Chrome

- ... Moment you show something else than checkerboarding, maybe the UA could solve this itself

- ... For checkerboarding I would push on implementations to find these situations and see if we can just make them go away before pushing on measurement

- Noam: We investigated, clean isolated repro cases, even without JavaScript

- Bas: Low-end devices with main thread

- Benoit: Having this metric would help with where to invest

- ... That's what we do on Android with native scrolling metrics

- Michal: If you can render in under 16ms, and constantly yielding, and it still checkerboards, what else can you do? Sounds like that's the situation

- Benoit: Don't target 16ms

Soft-Navs updates / InteractionContentfulPaint

Recording

Agenda

AI Summary:

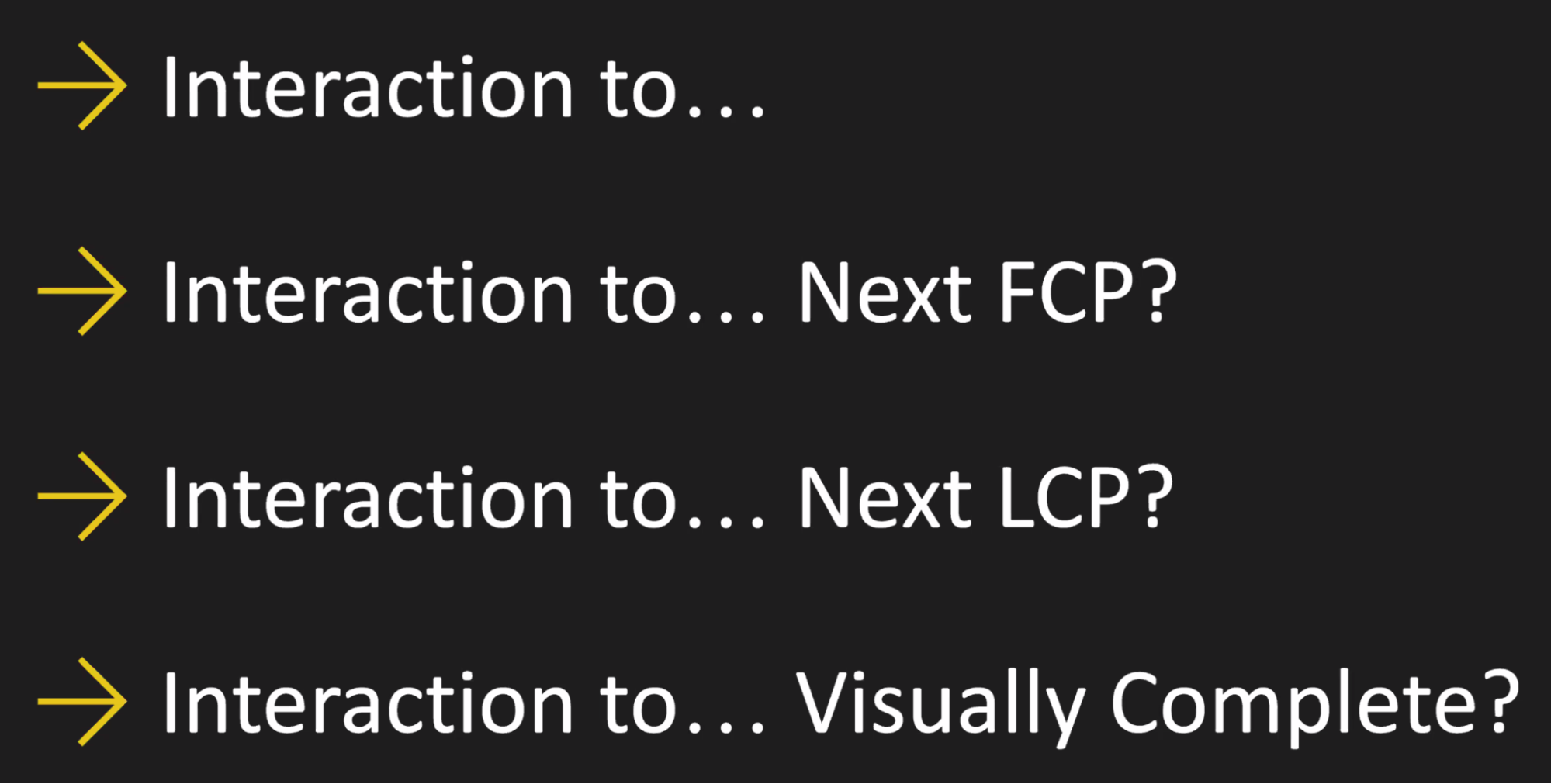

- The group discussed extending LCP/interaction metrics to “soft navigations” (SPA-like same-document navigations) via new PerformanceObserver entry types: `SoftNavigation` and `InteractionContentfulPaint` (ICP), plus a `navigationId` to slice timelines similar to cross-document navigations.

- Chromium’s Origin Trial shows that 20–50% of user journeys involve soft navigations that current LCP doesn’t measure, motivating heuristics that tie trusted user interactions, history (push/pop) changes, and DOM/paint updates into coherent soft-nav sessions.

- Under the hood, Chromium uses an “interaction context” (linked to Async Context and Container Timing work) to track which DOM regions and paints result from a given interaction, then reports ICPs and associated paints (including potential future metrics like total painted area, “visually complete,” and Speed-Index–like signals).

- There was extensive debate over heuristic vs explicit signaling: browsers need heuristics for defaults and CrUX-style measurements, but many participants also want explicit APIs (Navigation API hooks, container timing hints, “last paint done” signals) to reduce ambiguity and improve interoperability.

- RUM providers and developers raised ergonomics and performance concerns (large event streams, complex stitching in JS), leading to suggestions to: expose more raw but well-labeled data (e.g., both hardware and heuristic start times), improve PerformanceTimeline ergonomics, and possibly keep some higher-level “soft LCP/ICP” heuristics in JS libraries/specs outside core browser infra.

Minutes:

- Michal: <Demo of LCP candidates>

- … With interop, LCP is making it to all browsers

- … The page starts loading blank, and remembers what was painted and then not report on it again

- … Expose this data to performance timeline

- … As soon as we interact with the page, the page stops getting new LCP elements

- … interaction measurement is from the interaction to the next paint

- … When navigating as a result of interaction (SPA) LCP doesn’t work

- … To the user, it’s the same thing as a navigation

- … The page doesn’t start blanc so you can’t just ignore

- … <demo of a soft navigation paint tracking>

- … When an interaction happens and it updates the page and the URL, there’s a soft navigation

- … INP was made with thought on extending it later

- … It’s been a performance timeline feature request since forever

- … Range of intuitions of whether JavaScript is good, or causes issues

- ... All of those reasons are obvious and hopefully exciting

- ... Another motivation on the horizon

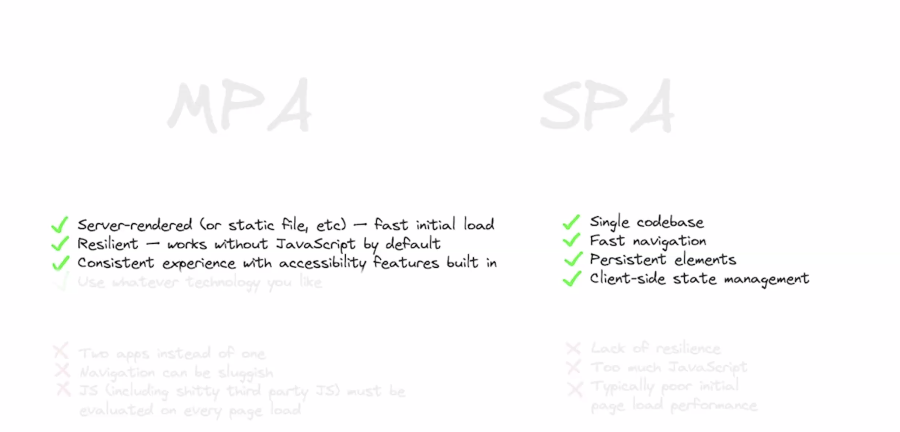

- ... The way we build sites is evolving to blur more and more the lines

- ... In terms of deploying sites, blurred from a technology perspective. From server-rendered MPA to client-rendered SPA

- ... Sampling of one talk of benefits of MPA vs SPA

- ... Frameworks like Astro are a server-rendering framework, but with a flip of the switch, it can do client rendering

- ... Dynamically update in the page. Developer develops MPA, but framework supports same-document navigations.

- ... Same with Turbo, HTMX

- ... Sites that used to be developed with client-rendered JavaScript, are now doing some server rendering

- ... Technology choice as a developer, you can pick and choose what navigation is the best

- ... Navigation is one of the biggest factors that should go into that decision, and there's no way right now to adequately measure

- … Also Speculation Rules that we talked about don't really work for soft navigations..

- ... Declarative partial updates session, possible to give power to all websites to update

- ... In Chromium we did an analysis to detect how much traffic out there goes unmeasured

- ... 15-30% estimate that we're not measuring

- ... Now that we've deployed some Soft Navigations code, we think it's at least 20-50% extra traffic out there

- ... Chromium is Origin Trial, closer to end of OT

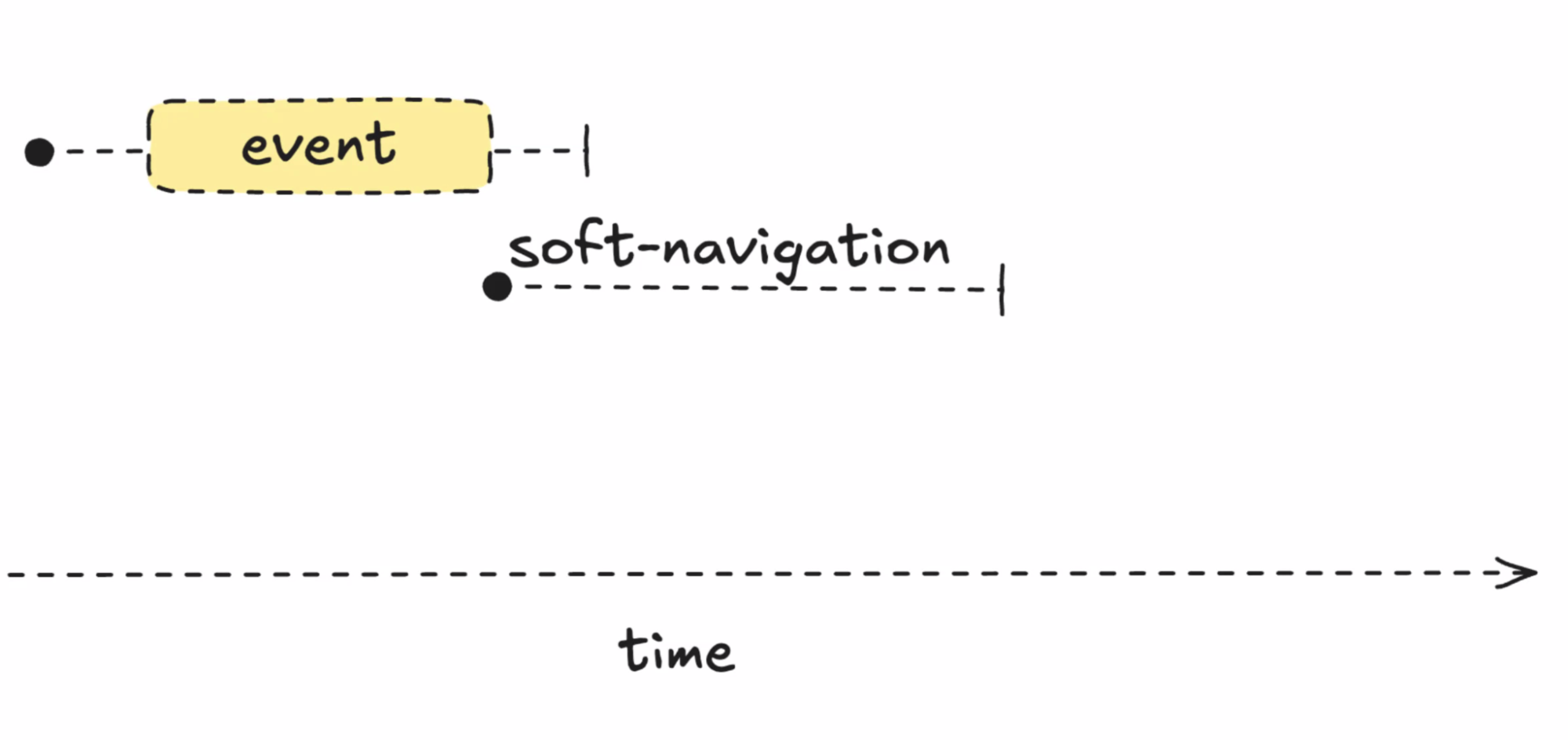

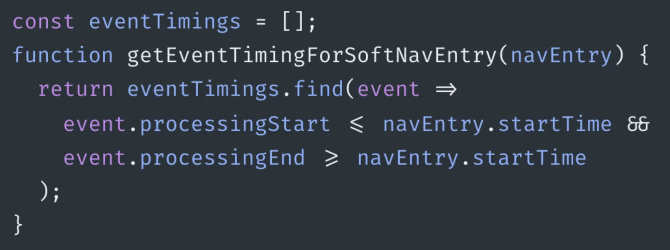

- ... Two new PerformanceObserver entry types

- ... Somewhat as easy as observing for new types

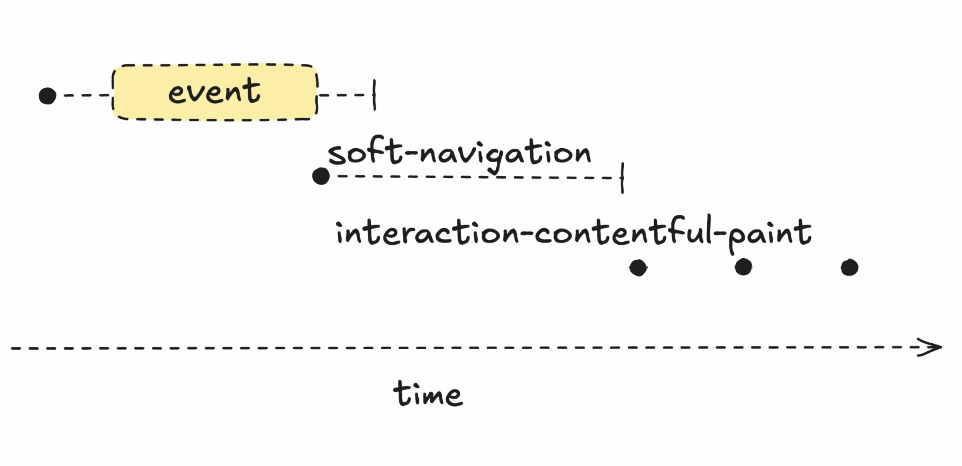

- ... How to model events. Start from hardware timestamp. New entry type for a soft-navigation.

- ... Eventually you meet all criteria of a soft navigation, we report this in the future about the past

- ... Tell you in the future, in the past, a soft navigation started at X timestamp

- ... If you have PaintTiming mixin enabled, you get paint and presentation time

- ... First Contentful Paint of that soft navigation

- ... Today in Chromium you can join the Event Timing with soft navigation, but the times align

- … Eventually you get a stream of new entries, all same metadata as LCP

- ... All have a timestamp, but it's just some time value

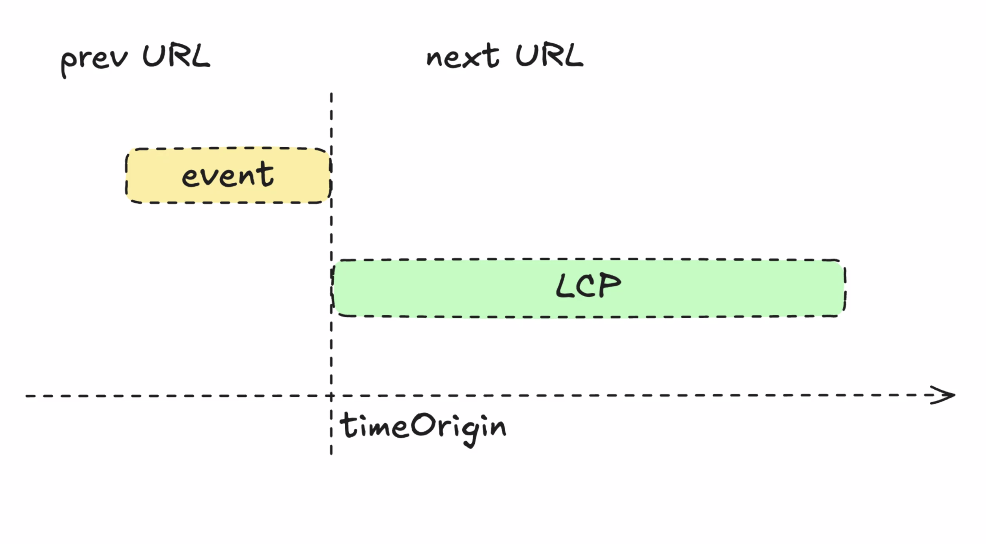

- ... Based on original document timeOrigin

- ... Interaction at 30s in, I'm going to have an INP at 31s. You shouldn't measure relative to timeOrigin, you measure to soft navigation (an alternative timeOrigin)

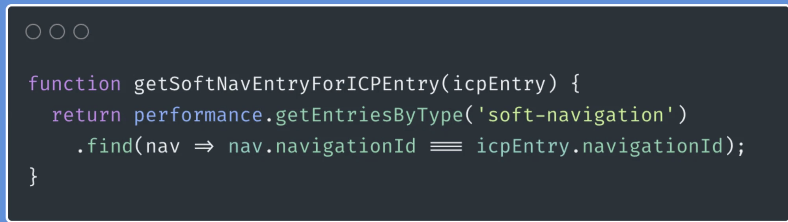

- ... Lookup SoftNav and compare to ICP entry

- ... Reason the timeOrigin is placed at the end of the event, is it's similar that way to cross-document navigations.

- ... Regular navigation on today's web, clicking a blue link and page is janky and slow, the new page won't even process until the page resolves that event

- ... Eventually the event is done running, a chance to run before unload event listeners

- ... That's the timeOrigin for the incoming page

- ... The time of the event goes to that URL

- ... The next URL gets the LCP

- ... For Prerending that's similar, but you need to compare against activationStart value

- ...

- … Also important for attribution purposes

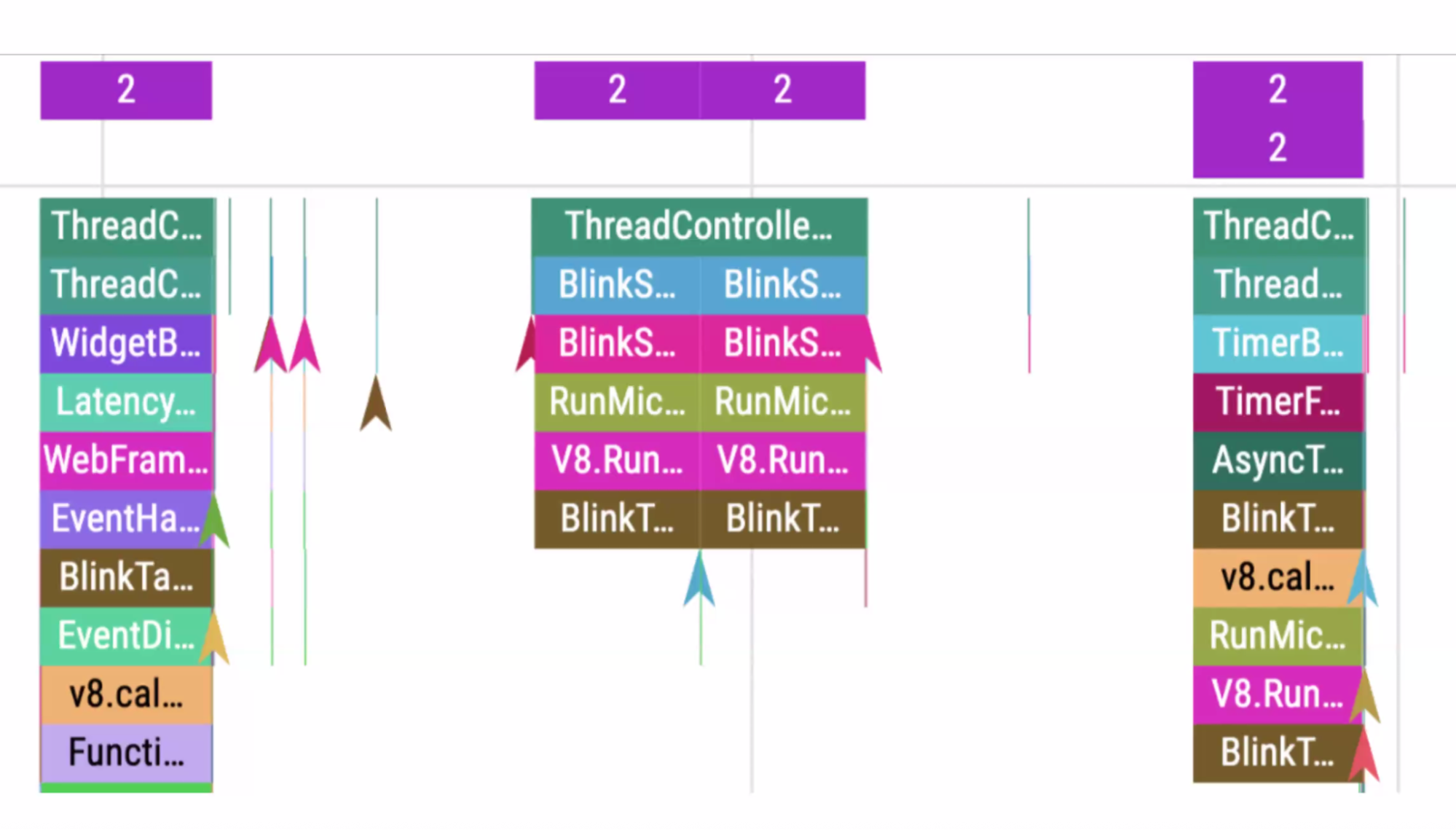

- ... How does this work under the covers?

- ... Starts with monitoring interactions

- ... Monitor a few event types, to constrain any risks with the implementation

- ... When you have an interaction happen, you now have an interaction context, wrapping the event listeners that fire

- ... We can build a body of evidence over time when it changes

- ... Example interaction where an interaction happened, a network event fired, then a deferred task to resolve it. Task ID is tracked throughout.

- ... Async Context is related

- ... We had an interaction, created a context, we then observe modifications to the document itself

- ... Changing images, DOM appending, etc -- we observe those entry points, and we a apply a label to the root nodes to note that this interaction is changing this part

- ... Difficult to mark the parts of the page that were modified

- ... So if it further modifies itself, all of that bubbles up to this interaction

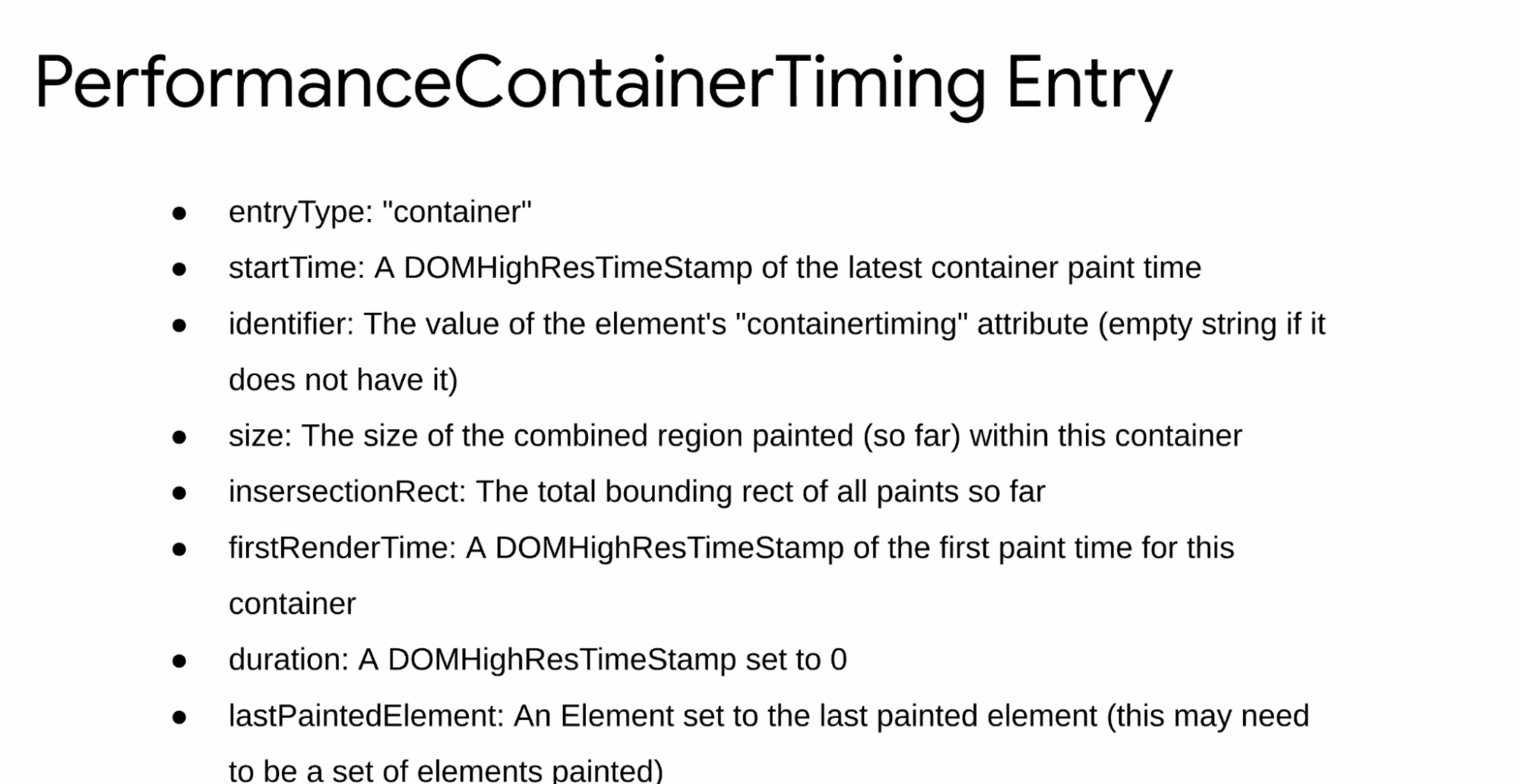

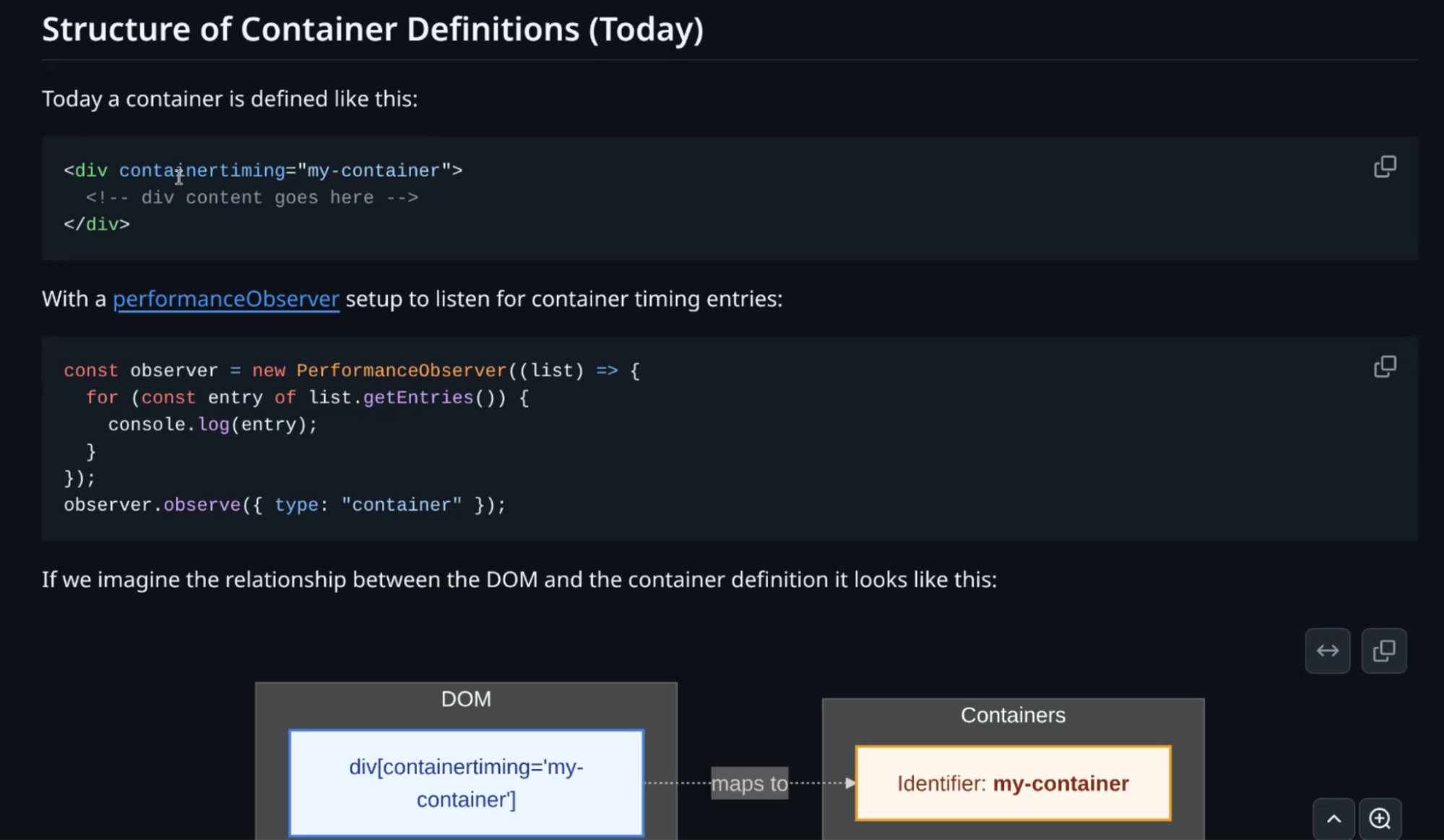

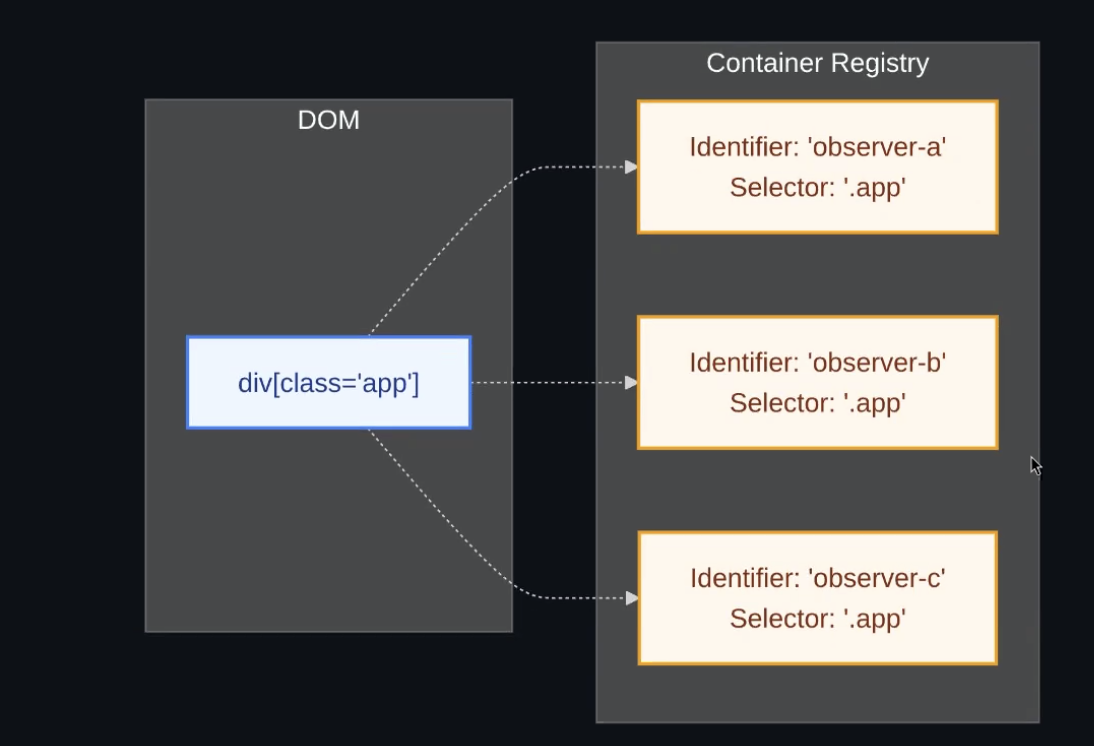

- ... Same problem description as Container Timing project

- ... We have these foundational features, coming to web platform anyway

- ... The only other thing we did is gluing together and adding heuristics

- ... Some of those that Chromium currently has

- ... We want to see trusted user interactions vs. programmatic navigations. e.g. autoplay to next video is not tracked

- ... Heard feedback from Origin Trial, some cases are useful

- ... We also require you push/pop history stack (replaceState is not sufficient, heard there are some valid use-cases)

- ... One of the big heuristics, we only report ICP entries if there is a history navigation

- … But technically, we could report every contentful paint after interaction

- ... WICG repo for this, proposed spec, entry itself doesn't offer much above base PerformanceEntry

- ... InteractionContentfulPaint is similar to LCP

- ... New navigationId property added to PerformanceEntry

- ... We also expose it to LayoutInstability API, EventTiming

- ... Once you're in a new URL, and we've emitted Soft Nav entry, it makes sense to slice/dice this new timeline

- ... This ID is exposed to a range of things

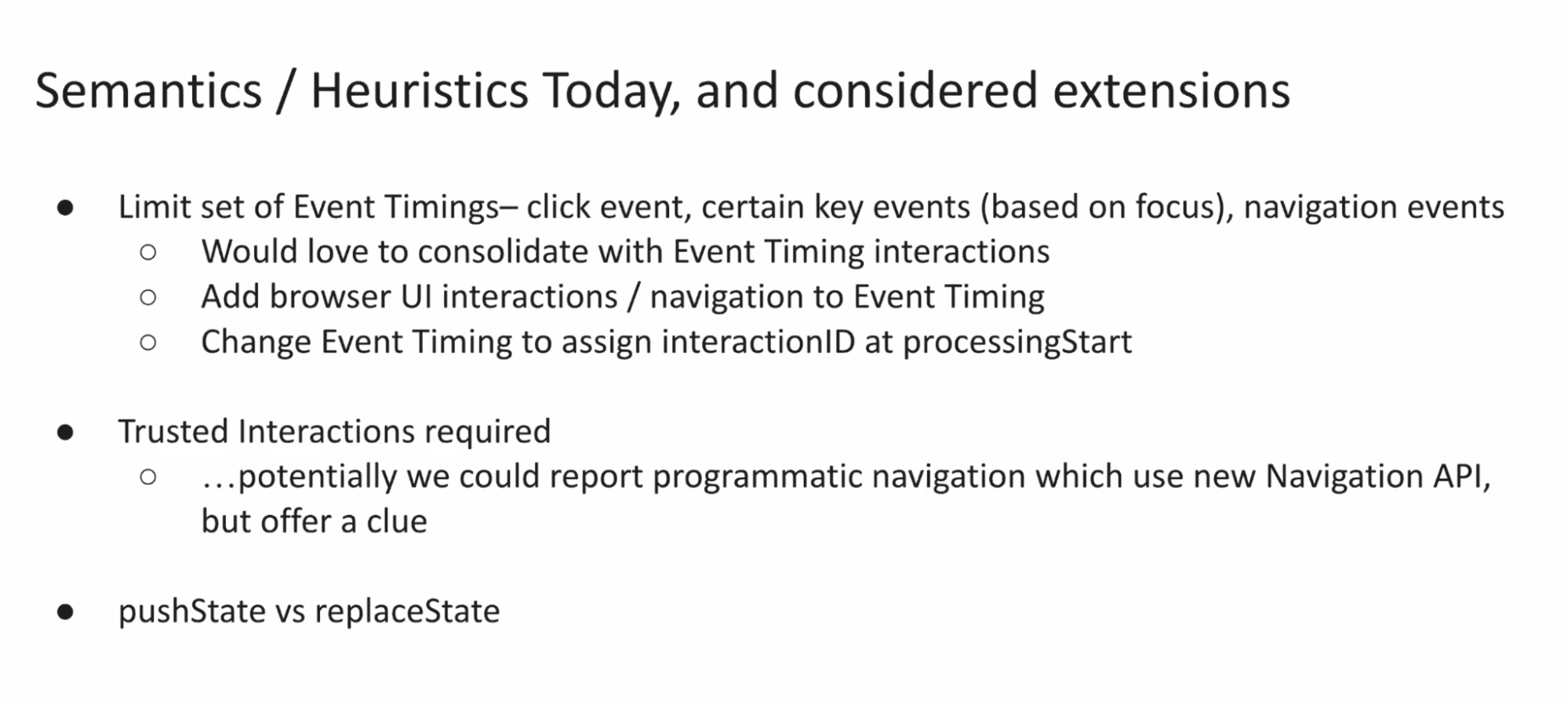

- ... Some of the heuristics we're considering changing

- ... We had limited to some events we're measuring, click, navigation and some keyboard events. But we should consolidate concept of interactions across all specs. EventTiming has a list of events.

- ... We'd have to expand EventTiming for some other events

- ... When I hit back-button in browser UI, I get an event fired on the page itself, so it's useful to report to EventTiming anyway to measure

- ... Trusted user interactions are required right now, but you also need a hook for initial scope to track Async Context. With new Navigation API, you can inform the browser

- ... With pushState sites could do effects then pushState, so it's too late.

- ... Navigation API you would call first.

- ... Some patterns of replaceState could be useful to report

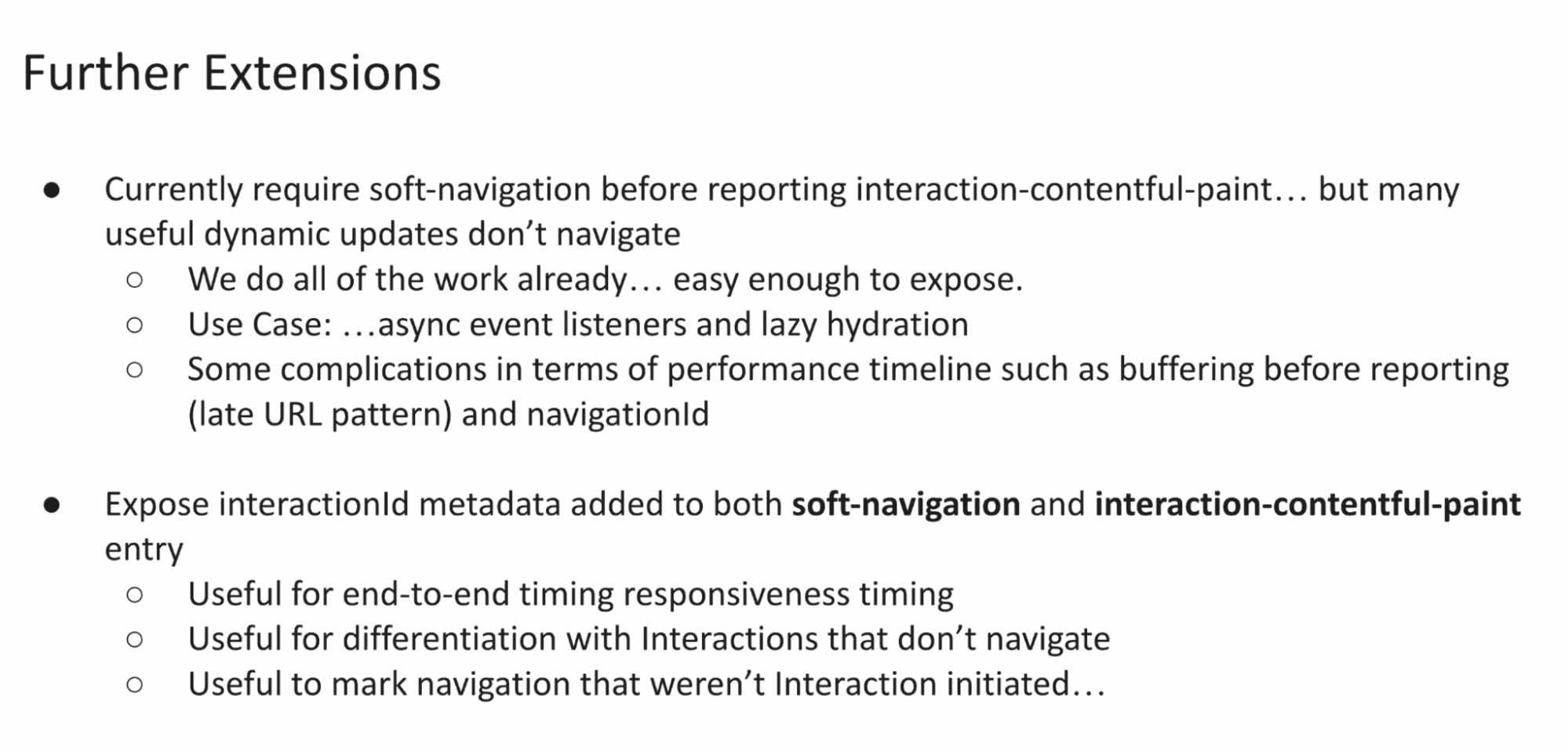

- ... In Chromium we require Soft Navigation before reporting ICP

- ... We could report interactions that don't navigate

- ... Feedback from OT, told us it'd be convenient to report metadata about interaction

- ... Soft Nav or ICP was triggered by an interactionId or event name or something on those events

- ...

- ... Future opportunities

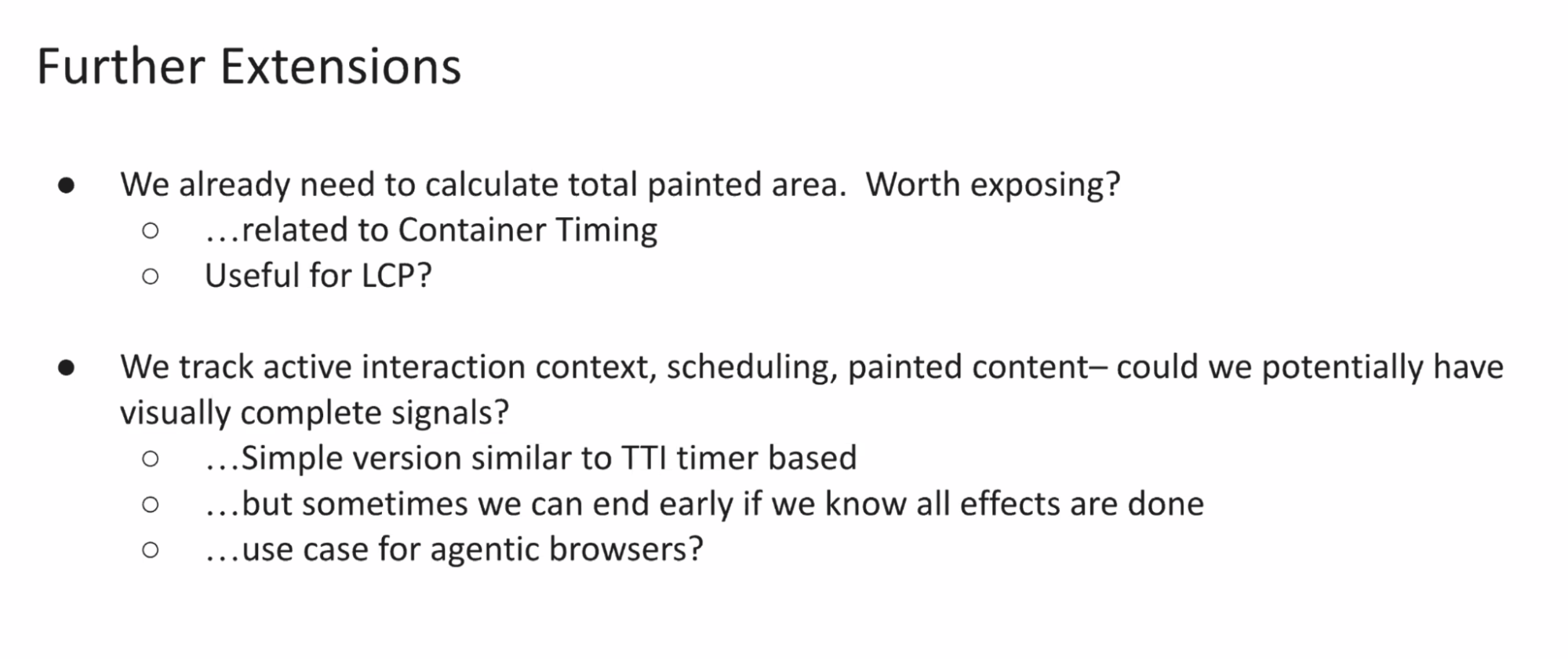

- ... We started calculating total painted area and how it changes over time

- ... We have the ability to do a Speed Index-type thing. Not exposed anywhere

- ... Container Timing may have this concept as well

- ... Knowing Visually Complete, LCP has some heuristics, but it's useful to know when it's all done

- ... Any time a new Paint and after some time it's settled, visually complete

- ... If any scheduling, network stuff, etc, may not be visually complete. Could declare it once all is done.

- ... One use-case is automation or agentic browsers, you need to know when the page is done interacting

- ... Generally speaking for interactions, async is a trend that's happening. As sites are loading, site authors are choosing to not execute JavaScript right away.

- ... Thin shim measuring all interactions, once JS bootstrapped, replay the event.

- Bas: You mentioned the total painted area. Why is it interesting for soft navigations?

- Michal: Part of solving this problem improved the way we’re doing bookkeeping, we needed to do this. So we could now expose this to LCP

- … When you know you only want the largest element, but for all the updated content you need to do extra bookkeeping.

- Toyoshim: Why do we need the start time to be heuristic based rather than exposing an API? This could be unreliable

- Michal: The feedback we got from an OT the heuristics can be hard to understand. The current version tries to minimize the heuristics baked into the timeline. Here you get both data points, so maybe we should report the hardware timestamp as well to the navigation entry. End-to-end attribution is very easy.

- … But there’s an advantage for RUM to do the slicing at the end of the event

- … Useful to know of the time you wasted before you started routing

- Barry: Why do we have heuristics at all? We only emit ICP for soft navigation

- Bas: The definition of a soft nav is heuristics driven. Even if specced, people can fall out of it

- Michal: Heuristic is the trigger that starts the context. I’m proposing to extend it to cover any interaction

- … For the navigation, it has to be pushState rather than replaceState, but we could remove them as well

- … Navigation event makes it easy to meet the criteria

- Bas: Even if ICP is exposed, soft nav will continue to be a heuristic event

- … High level description is easy, but there’s a lot of devil in the details

- Michal: Punt the hard parts to Container Timing and Async Context

- … Remaining heuristics are fairly slim

- Yoav: Theoretically we could go the route of EventTiming and INP

- ... All contentful paints of all interactions are being recorded. As well as extra information regarding pushState, to have to prevent listening to that

- ... Implement userland parts as a heuristic thing

- ... e.g. INP is not an API, it's JavaScript heuristics

- ... People want it to be spec'd

- ... These kind of heuristics could be spec'd as a non-browser spec in RUMCG

- Barry: Great to have scope and potential, and measure what you want. But interoperability concerns.

- Bas: Userland libraries doing heuristics

- Yoav: web-vitals.js reports navigations based on entry, ICP, etc

- ... It is a way to spin the heuristics out of infra

- Barry: Every event creates navigationId rather than soft one

- Yoav: But we'd lose navigationId part

- Bas: Async Context could allow you to track it

- Yoav: Stitch Async and Interaction Context

- Michal: Between moment event happens and navigation commits, you're in both previous and new route at the same time

- ... We debated a layout shift in middle, is it new layout or old one. Depends on context.

- ... For some things like ResourceTiming, the browser could more easily decide based on heuristics.

- ... Do we think it's useful convenience.

- Barry: It's painful

- Michal: Can we improve PerformanceTimline APIs better?

- ... Here's how we think about it from our site vs. how you think about it

- Alex: I like the discussion about moving away from heuristics

- ... Content authors saying Soft Nav, I'd like to reset all LCP and Shifts and stuff

- ... Rather than browser guessing

- Michal: Two ways to do that, Navigate API saying I'm going to begin doing some work

- ... As long as we don't restrict it to user interactions

- ... Other way to do it is just Container Timing, if you're dynamically updating page, you can apply Container Timing hints

- ... Don't need any heuristics

- ... Where useful to have a default

- ... Hard for site authors to know all of the parts, many things comes together

- ... Browser actually sees everything and you get a difference from what happened

- Barry: Existing SPAs, cutting off 99% value on day one

- ... Consistency of it, library "A" I measure these things, library "B" measures differently, looks terrible

- Yoav: Do we really need the website declare anything? I don't think we should move away completely to site says Soft Nav, we need those heuristics and ways for websites to override.

- ... Where do we put these heuristics?

- Michal: Chromium runs a program where we measure for sites, CrUX

- ... If you want to measure your own metrics with PerfTimeline, you'll have the most insights

- ... By default, we need heuristics to do that

- Nic: tracking visually complete is something we’re extremely interested in

- … This could be measuring soft navigation “page load time”

- … We’re trying to do that, but this can be significantly efficient

- Michal: Sometimes things never end. You can more eagerly decide when things have ended

- Nic: Our customers are using that with the less-precise heuristics we’re using.

- Yoav: Async Context do we have an end-time

- Justin: Hard to do, we know when context can't be held anymore

- ... We've had discussion in the past, context could be marked ended

- ... No good way to solve it at the moment

- …https://github.com/tc39/proposal-async-context/issues/52

- Michal: Finalization and garbage collection, timer-based. Delay relative to the actual end time.

- Nic: That's what we're doing exactly

- Noam: +1 on explicit API to indicate that the last paint happened

- ... Crucial for us, even when we don't use Soft Navs

- ... Open a large element, but it only starts loading real content later

- Barry: We follow LCP heuristics where we only emit larger paints

- Noam: Spinner to tell the user to wait

- Barry: Could want all paints of different sizes

- Michal: Stream of paints, each paint offers some extra value

- ... How container timing proposal roughly works

- ... Subset of page you care about is one virtual container

- Barry: Low-level APIs gives huge potential, but could be doing more processing. web-vitals can be a lot more work just to get Soft LCP.

- ... When measuring 20k interactions across a long-lived soft nav, that's a lot of work.

- Yoav: Processing overhead in JavaScript, raw API

- Michal: I think the PerformanceTimeline could be more ergonomic

- Yoav: Won't solve this now, but it might be interesting to determine how these two different paths may look like

- ... Hear Barry's concerns of this being a huge hassle to work through

- ... Or boomerang.js or other RUM providers

- Barry: Two APIs, ICP vs. Soft LCP

- Michal: Ergonomic questions I'm going to try to cover tomorrow in LCP talk

- ... One anecdote

- ... Google News has same-doc navigations

- ... Through extensive instrumentation they figured out all dynamic content updates

- ... Stitched it through together

- ... They then mark done, but it's when they put new HTML

- ... That's when browser finds new images, etc

- ... Moment they end is totally transparent, then you start to animate in opacity, load images, etc

- ... Measure hundreds of milliseconds with no work on behalf of the developer

- Benoit: We see this all the time, things aren't annotated correctly

- Michal: Facebook works relatively well to measure, you optimize on pointerdown, your click event does the thing to do the rendering, but pointerdown accelerates the interaction

- ... Because we only wrap click event, not pointerdown

Unload deprecation, permission-policy: beforeunload

Recording

Agenda

AI Summary:

- Chromium is deprecating `unload` (which blocks BFCache and is unreliable, especially on mobile/Safari) and has shipped `fetchLater` plus a `Permission-Policy: unload` rollout that’s already reduced BFCache blockers; very few sites are opting back in.

- `beforeunload` has legitimate uses (e.g., unsaved-form confirmation, informing the server about session/state teardown) but also slows navigations and is often abused for logging and lifecycle work.

- To mitigate third‑party abuse, there is a proposal for `Permission-Policy: beforeunload`, letting top-level pages block subframes from registering `beforeunload`; third‑party data should instead be persisted via visibility/page lifecycle events.

- The group compared `beforeunload` vs `pagehide`: `pagehide` doesn’t block BFCache, carries a `persisted` flag to distinguish BFCache vs real unload, and can run in parallel with navigation, making it better for most server/state signaling—though it’s not guaranteed to fire in all shutdown scenarios.

- There was agreement that: confirmation prompts for unsaved data remain a key reason to keep `beforeunload` (ideally used temporarily), while destructive/cleanup work should move to `pagehide`/visibility events, with a longer‑term goal of tightening defaults (e.g., permission-policy defaulting to `self`).

Minutes:

- Fergal: Worked on BFCache so want to get rid of unload

- … unload deprecation - last thing in the page lifecycle, unreliable on mobile and safari desktop

- … Blocks BFCache in Chromium

- … Failed to get vendor support for permission-policy: unload, so went for a deprecation

- … So we built fetchLater

- … Added permission-policy to Chromium with gradual rollout (per domain) of disabling the permission policy

- … Reduced BFCache blocking by a few percentage points

- … Some sites are disabling it to protect their BFCache

- … Less sites are enabling unload

- … The current plan is to wait for complaints, if any. (none so far)

- … Unusual rollout, as rolling to big sites first

- … ~10% per month

- … beforeunload is similarly problematic. It has legitimate uses (e.g. to save the user’s data)

- … But it slows down navigations, even if you do nothing (~20ms on Android in lab, no measurement in the wild)

- … Can install the handler temporarily, but a lot of people abuse these event and e.g. save logs in all the lifecycle events

- … There’s probably no legitimate use for a 3P to install a beforeunload

- … Proposal to add Permission-Policy: beforeunload to give the top level site the ability to prevent subframes from registering beforeunload

- … 3P data should be saved from visibility changes

- … No explainer yet, I’ll add a link later

- … Are there legitimate uses for beforeunload? Other things we need to provide?

- NoamH: Another usecase, reducing server state management

- … If the app maintains a state, it can use beforeunload to let the server know that it no longer needs to maintain that state

- Fergal: so as you navigate away from the page, the server can drop the state? You can do it from pagehide

- … It fires when you navigate away, so same timing as beforeunload

- Bas: difference between pagehide and beforeunload?

- Fergal: It won’t block BFCache. The event has a persisted flag on it that helps BFCache

- … If you’re putting something into BFCache, the unload handler makes it so that you’d get an unreliable page state on restore

- Bas: So a pagehide that tells you you’re going into BFCache, what do you do?

- Fergal: You can signal to the server that a navigation away happened.

- Bas: But you might come back from BFCache

- Barry: unload can do destructive things

- Yoav: Noam's use-case is server-side destructive

- Barry: pagehide is also not guaranteed

- ... e.g. on mobile can go to another tab

- Noam: We had a bug, where incorrect signal linking to server that it needs to discard state

- ... Increased COGS significantly

- ... If pagehide would've worked?

- Fergal: Could have had some bug, and ignored beforeunload stopping navigation.

- ... Every beforeunload has a pagehide before

- Bas: Destructive things from pagehide you can still do things

- ... For cases going into bfcache, you can choose what to do

- Fergal: pagehide has a little bit more information

- Yoav: What you could do is not going into bfcache, go into state. If going into bfcache, start a server on server-side to clear state.

- Michal: If I close the tab I'm not getting beforeunload?

- Fergal: You should get it

- Barry: If you're explicitly closing it, but if you background first, and browser later closes it, you won't

- Bas: Value of bfcache could be not enough to keep

- Fergal: Still destroy state on server in pagehide, and get back into good state on pageshow

- Takashi:From the viewpoint of navigation performance perspective, a big difference, beforeunload can block navigation, so we can't run navigation in parallel. For pagehide, we can just run in parallel.

- Fergal: For chrome, cross-site navigation, pagehide will run in different process.

- Michal: I thought main use-case was to confirm prompt

- Fergal: You have some unsaved data, it just tells you, stops you navigating away.

- ... You want to be able to stop the user before navigating away

- Michal: Confirmation prompt, has saved me. I don't like that it slows down every navigation.

- ... Pattern to temporarily register makes sense.

- Fergal: If you're going to save data in some nav event, you should do it on vis change

- Michal: Still support beforeunload on pages with forms

- Fergal: Some pages care about nav performance, they embed, 3P or related, those may be misguided to use unload/beforeunload, and they should be able to say "not allowed"

- Barry: Would default ever be?

- Fergal: Would be nice if it was "self" vs. "*". Maybe in a year's time.

RUMCG updates

Recording

Agenda

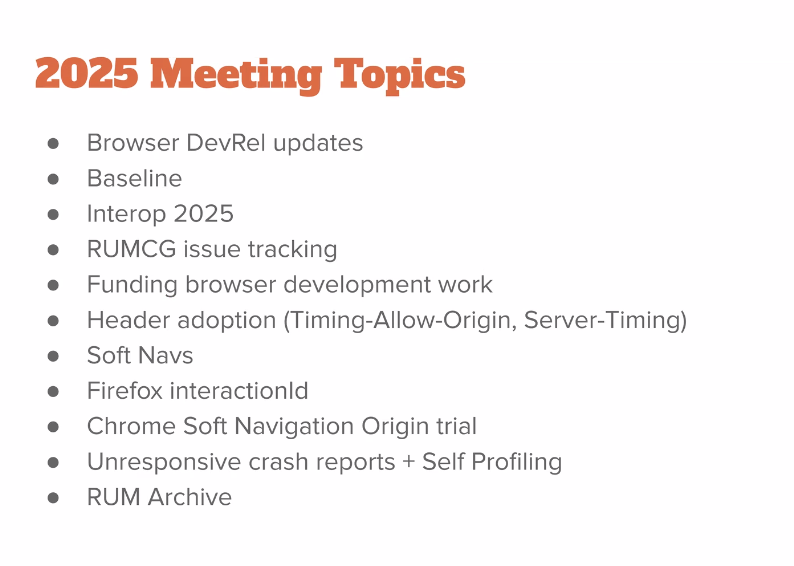

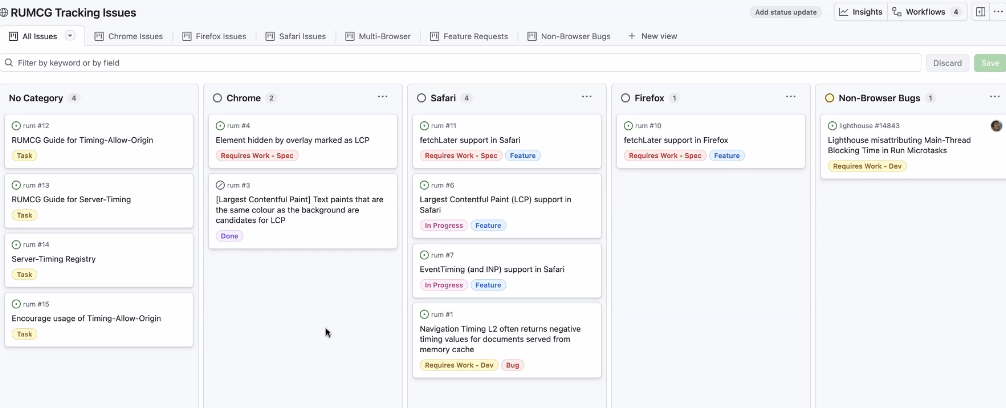

AI Summary:

- The RUM Community Group (RUMCG) is a year‑old, open W3C CG (no W3C membership required) focused on measurement and reliability, serving as a public coordination channel between RUM vendors and browser teams, and working on topics like TAO, Server-Timing (including a registry and guidance), and feature requests (e.g., `fetchLater`, LCP improvements).

- The group offers regular monthly meetings, public minutes/recordings, and is intended as a venue for follow-up on WebPerf proposals and for RUM providers (large and small) to collectively surface needs and potentially influence browser priorities.

- A major discussion topic was whether high-level metrics like INP (and more broadly CWV) should be standardized and/or browser-implemented vs. left as library-defined heuristics built on low-level primitives like EventTiming and LayoutShift.

- Browser and vendor perspectives differ: some argue for only standardizing primitives and keeping CWV-like metrics in JS/non‑normative guidance, while others want more alignment (to avoid divergent implementations between libraries, CrUX, and RUM tools) and even eventual browser-exposed metrics.

- The tentative conclusion was that RUMCG cannot publish normative specs, so the practical path is to add non‑normative “how to calculate the metric” guidance (e.g., INP definitions) into relevant WebPerf specs, giving RUM providers and browsers a common reference without forcing all UAs to ship the high-level metrics themselves.

Minutes:

- Nic: Lots of intersection between the groups.

- … It was formed after TPAC last year

- … It’s a CG, not a WG. 3 co-chairs and active for roughly a year

- … Wanted to share updates, why it exists, and look for opportunities for collaboration

- … The group meets once a month. Agenda docs, mailing list group for cal invites

- … Discussions happen in the webperf slack

- … One important difference - W3C membership is not required

- … It’s also more focused on measurements

- … Individuals can join without status, companies that can’t/won’t be a Members can as well

- … ~20-40 individuals joining every month

- …

- … It’s a useful communication channel to RUM folks, so had updates from browser teams (Chrome, Firefox)

- … interesting discussion about funding browser dev work - can RUM providers band together and fund browser dev work

- … Not a ton of action came out of it, as it’s complex. But it’s an interesting option

- … Good discussion on header adoption (TAO, Server-Timing)

- … Trying to do our work in public as much as we can

- …

- … Requests on things we want from browser (fetchLater, LCP), hoping to influence priority

- …Also tracking work happening in WebPerf

- … e.g. Resource initiator information, content type

- …

- … Trying to influence things like Server-Timing, so created a Registry that documents common-cases of Server-Timing seen in the wild

- … Also want to create guidance on Server-Timing, to propose well-known Server-Timing header names for common use-cases

- … Same for TAO

- … F2F meetup in Amsterdam before perf.now

- …

- … Could be a good venue for followup questions on use cases and API proposal shape.

- … Inversely the RUMCG could try to motivate the agenda of browser vendors and influence what goes into the platform

- NoamH: Is the CG focused on monitoring or also tries to define performance best practices?

- Nic: more on measurement. Less on best practices

- NoamH: performance only

- Nic: Performance and reliability

- Barry: Some RUM people also help their customers improve things. So there’s no clear cut on “measurement only” and they are interested in improvement

- Nic: Do we see any other ways of better coordination? I feel like I could’ve discussed it more

- NoamH: Recordings and minutes?

- Nic: Everything is linked from the RUM

- Barry: Google used to have closed-doors meetings with RUM providers. This is more public and helps smaller RUM providers

- NoamH: Is there a process for following up with the vendors?

- Nic: The issue list is more for the group itself to keep tabs on what it needs to do

- … Communicating to the group - anyone could hop over and discuss things with the CG

- Yoav: INP spec

- Michal: The hard parts are in event timing. INP as a metric is only special because of CWV

- Barry: different opinion. We’ve made it a “standard”. Different libraries should implement it in similar ways. Also CrUX needs to measure it

- … The doc is not a spec. We have a reference implementation

- … Fair ask - we want people to measure this the same way

- … Lots of complexity around the attribution object, etc

- … I think it was answered, but the ask makes sense

- Yoav: what would be the venue? CG, WG, IG?

- Bas: Need to define what the terms mean, but also do we need normative standards to define what’s outside the UA? Or should we move the algorithm definition into the UA?

- Barry: Low level APIs are great, but we need the high level API that just gives you INP

- … Ideally webvitals.js should not exist and the browsers should just emit these value

- Nic: Things that we are doing commonly. CLS vs LayoutShift is the same thing.

- Barry: Also LCP makes opinions in the browser—more so than the other two CWVs.

- Nic: Does that feel like a best-practice guide that the RUMCG can cover? Or based in?

- Barry: UserTiming can be used for anything. Though the downside of that is it’s used differently by different people. RUM CG have discussed how to standardize that

- …But for CWV there are rough edges that anyone that tried to measure CWV outside of web-vitals will have hit.

- Nic: There are differences between web-vitals.js and boomerang. A spec would’ve helped us

- Barry: Extensible web manifesto is about eventually paving the cow-path

- Bas: There’s an argument for doing low-level APIs, but when you standardize a high-level feature that becomes a de-facto standard. But then UAs need to adopt and invest resources in maintaining these defacto-standards.

- Barry: Two questions: do we need a standard? Does it need to be baked in?

- NoamH: What’s the argument for baking it in?

- Bas: that’s what we’re standardizing

- Yoav: not necessarily

- Michal: A non-normative note that defines INP on top of Event-timing would be 2 lines.

- … There will never be a 1:1 match between RUM and CrUX

- … The RUM provider gets e.g. LoAF data which is richer

- Barry: LCP has a lot more weirdness. When to stop, subparts and where do they split

- Michal: visibility changes were solved with EventTiming

- Barry: but not LCP

- Michal: Yeah, because there’s no LCP “end” event

- Bas: Wondering about apple’s opinion on CWV as a defacto standard

- Alex: CWVs has Google’s fingerprints all over it, but that’s become parts of the Interop project and we’re working on pieces of it

- Bas: Appetite about standardizing more parts of it?

- Alex: Skeptical due to distribution schedules, implementation changes being more complex

- … We can add the missing primitives, but standardizing the conglomeration of the primitives that not our level. I implement the primitives, yall can use them

- … If you standardize and measure the same things - great. If not - also great

- Nic: So what would be our recommendation?

- Bas: What’s the outcome we want? Should the CG work on having more normative standards for RUM providers?

- Barry: Can they produce normative standards?

- Nic: I’ll need to look

- Carine: CG can’t produce specs, IG can’t have non-Members join

- Barry: so maybe a non-normative section in the relevant specs

- Nic: Let’s resolve on that!

Day 2

Agenda

AI Summary:

- The Audio Working Group wants to expose the `Performance` interface (notably `performance.now()`, and likely `timeOrigin`) inside `AudioWorklet` to enable high‑precision timing for latency measurement, DSP load benchmarking, and real‑time processing decisions.

- Precision would follow existing Cross-Origin Isolation rules: reduced precision in non‑COI contexts, with the possibility of higher‑precision timers when the owning document is COI; this requires plumbing the COI “bit” into the worklet.

- Using `performance.now()` directly in the worklet avoids timing‑sensitive round‑trips via `postMessage`, although results may still be sent back to the main thread for analysis when feasible.

- For correlating worklet and main-thread timestamps or multi‑origin scenarios, `performance.timeOrigin` should also be exposed and clearly defined for `AudioWorklet` in the spec’s processing model.

- Security and privacy concerns are expected to be manageable but will need review by browser security teams; related discussions around animation smoothness/latency metrics suggest future extensions (e.g., worklet-side UserTiming-like primitives).

Minutes:

- Nic: Exposing the performance interface to the audio worklet

- Michael: From the Audio WG. Lot of desire to have high precision timers in audio worklets

- … The actual change to the spec is just to expose the interface in audio worklet

- … But there were concerns around privacy

- … There was also a request to understand what developers want this for

- … Want to use it monotonic clock, latency metric calculation and benchmarking tests for audio and DSP load

- Yoav: One of the questions that came up when we discussed, was around Cross Origin Isolation (COI), what's the precision required, and how can we get that in a AudioWorklet

- Michael: The precision is already reduced if it's not COI, in terms of requirements that seems fine

- ... If the AudioWorklet is running in COI context could we get a HR timer?

- Yoav: Theoretically yes

- ... Is that something feasible? Is there a use-case?

- Michael: Use-case for higher-precision in COI context

- ... As far as piping the bit, I'm not sure what would be involved in that

- Yoav: Is AudioWorklet owen by the document, that they can get that bit from? Ownership structure?

- Michael: Components of Web Audio that have a similar mechanism, where we provide similar reduced information if not COI

- NoamR: Owned by document

- ... Use-case is about measuring latency, reminds me a bit of measuring animation smoothness

- ... Currently the Performance APIs are not that great in measuring animation smoothness

- Yoav: Performance APIs in general or just performance.now()

- Michael: Just performance.now()

- Yoav: Pre-UserTiming just being able to grab timestamps

- ... Useful first step, last time we discussed this, discussion evolved into things like UserTiming

- ... Right now the model is measure things in Worklet, the postMessage/communicate to the main thread

- Michael: With performance.now(), after measuring, depending on the usecase, some of it could be posted back to the main thread

- ... Some could be used within AudioWorklet to change the signal processing itself

- ... Communicating back to main thread isn't as feasible if they have a timing requirement

- Yoav: First step is exposing performance.now(), if there are use-cases where having other APIs can help you do UserTiming/measure/mark in worklet, then grab them on main thread without having to communicate, that could be a future extension

- Michal: If you just send performance.now(), the worker is in a different origin, so you can't sync timestamps

- ... If you have performance.timeOrigin, you could sync

- Yoav: So we'd also need timeOrigin

- ... Seems reasonable to just expose

- ... Beyond just changing interface, you need to change the processing model

- NoamR: Wherever the integration is, in AudioWorklet, you need to get the bit and pass it along

- ... In the spec PR need to define what timeOrigin is

- Michael: Can we iterate on this?

- ... Are there ongoing security concerns?

- Yoav: No spec security concerns here, but you may need to sync with security teams to get approval

- NoamR: I would suggest to followup on animation smoothness thing, it's a measuring latency thing

- ... In terms of measuring latency in statistical way, how much latency did we have over the last second

- ... You want to measure periods of time where you have higher latency than others

Recording

Agenda

AI Summary:

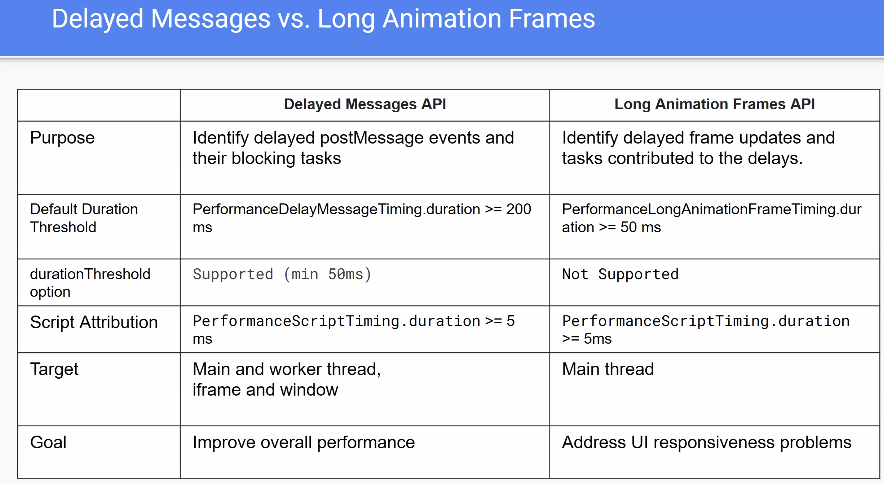

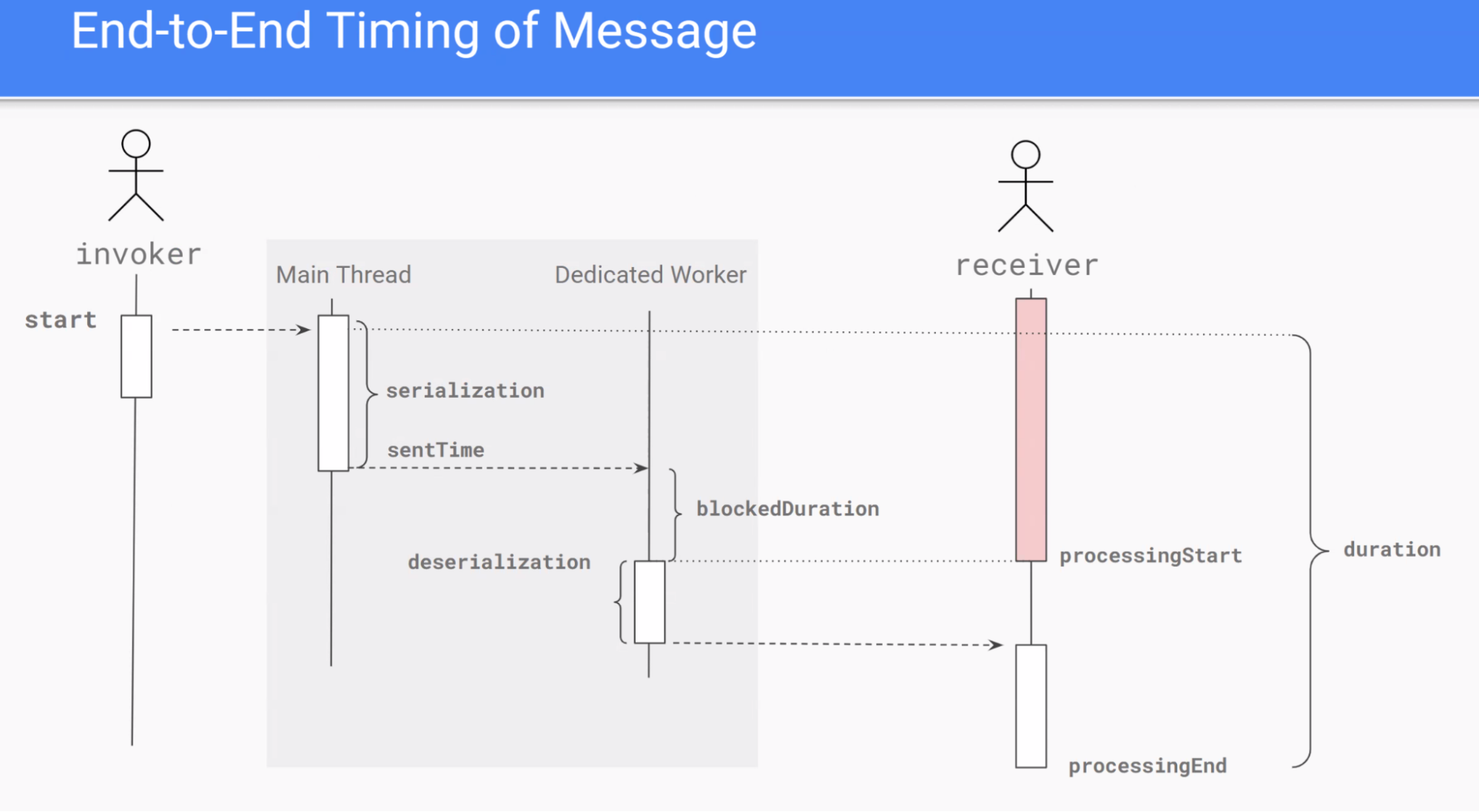

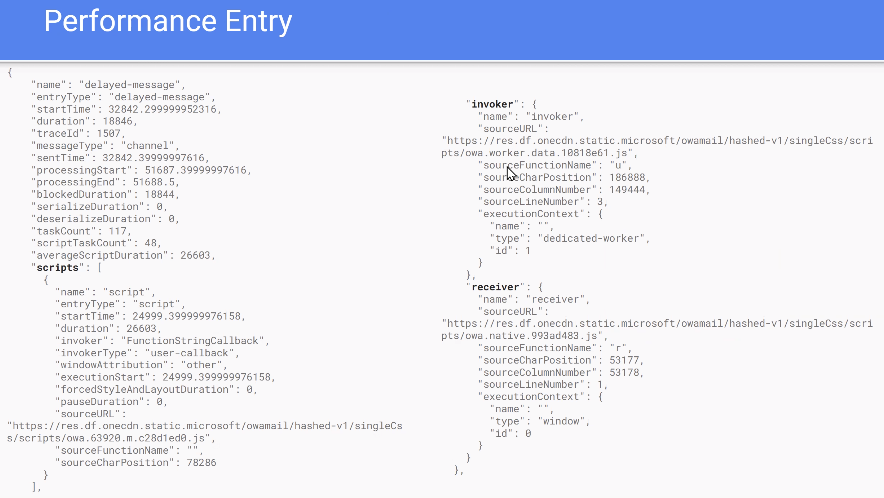

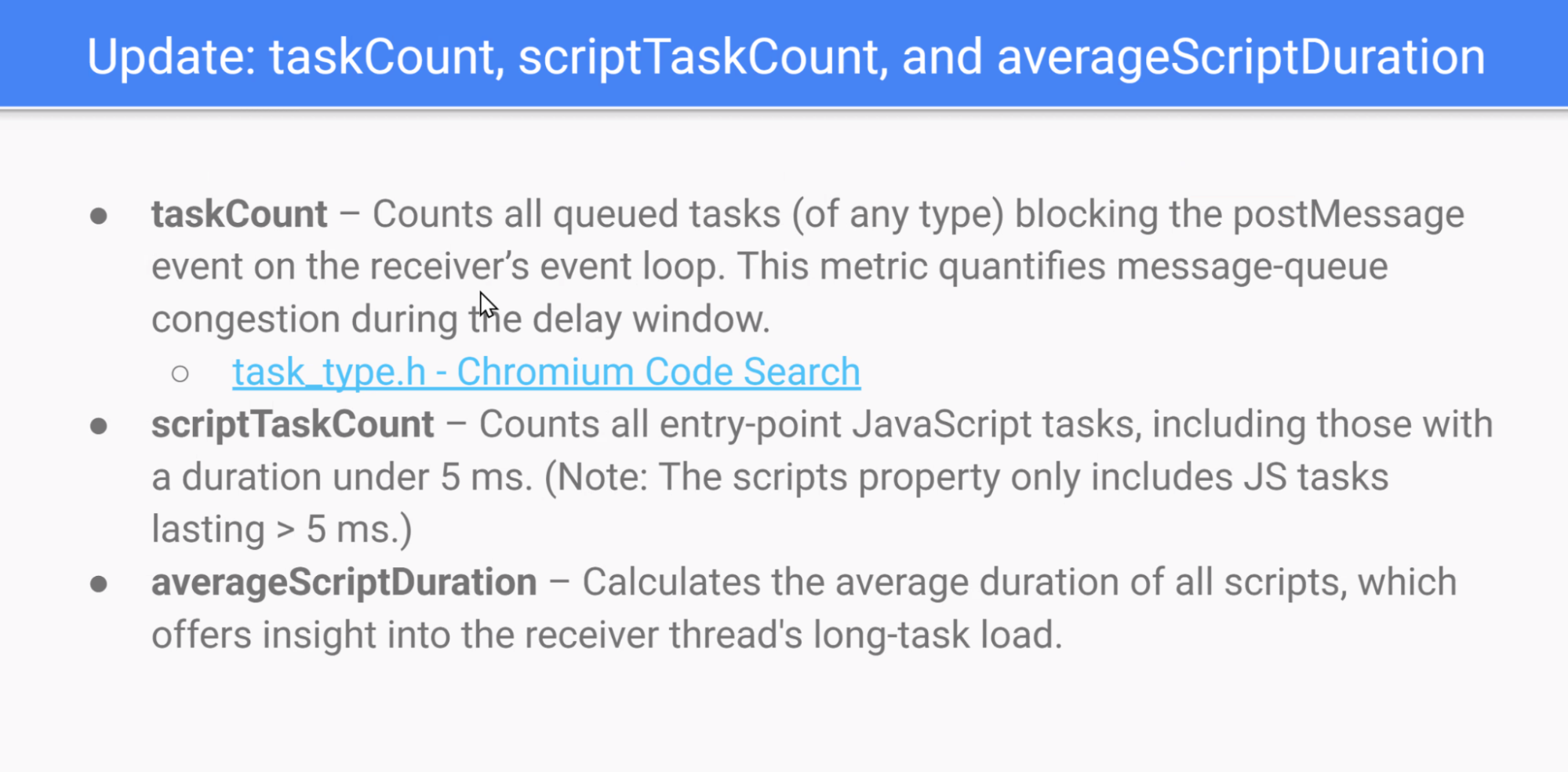

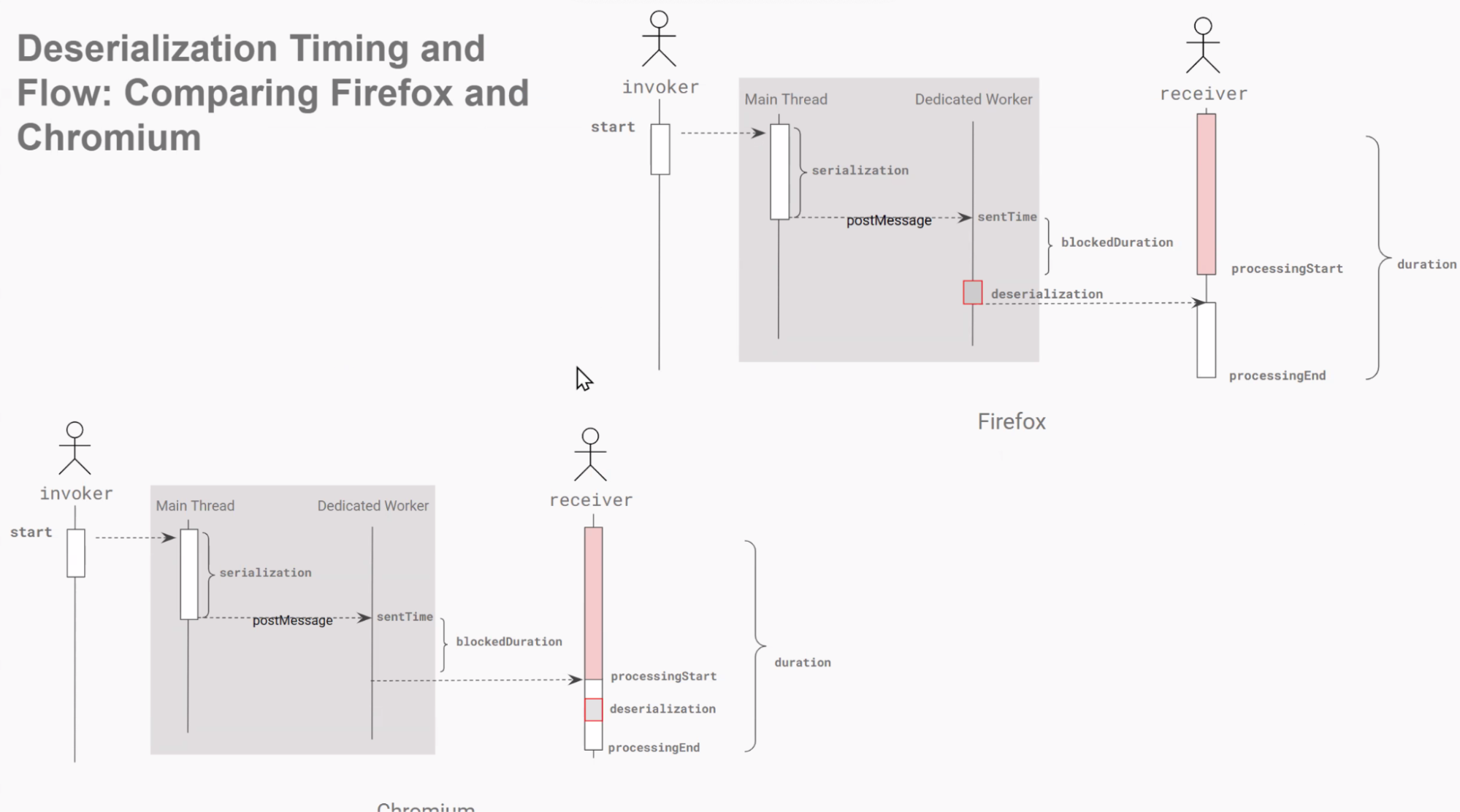

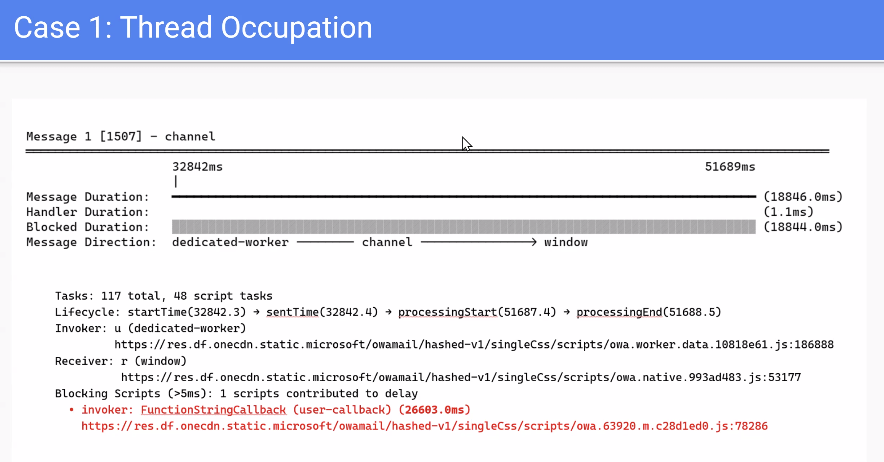

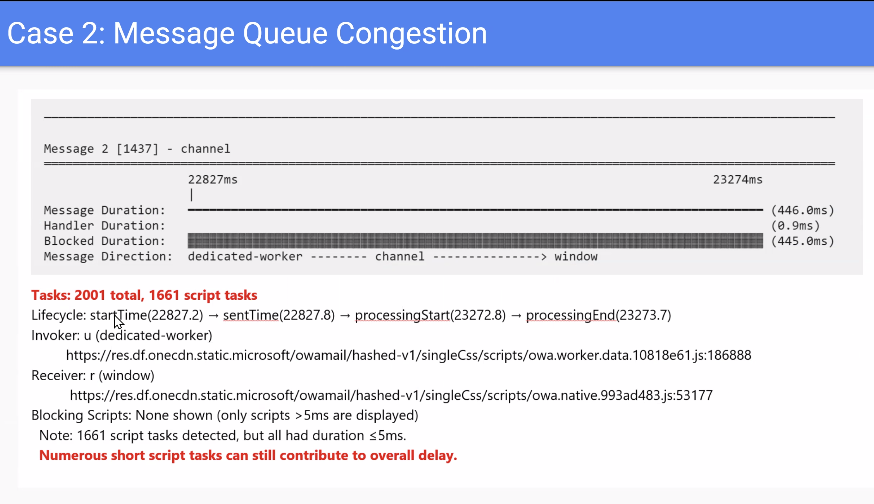

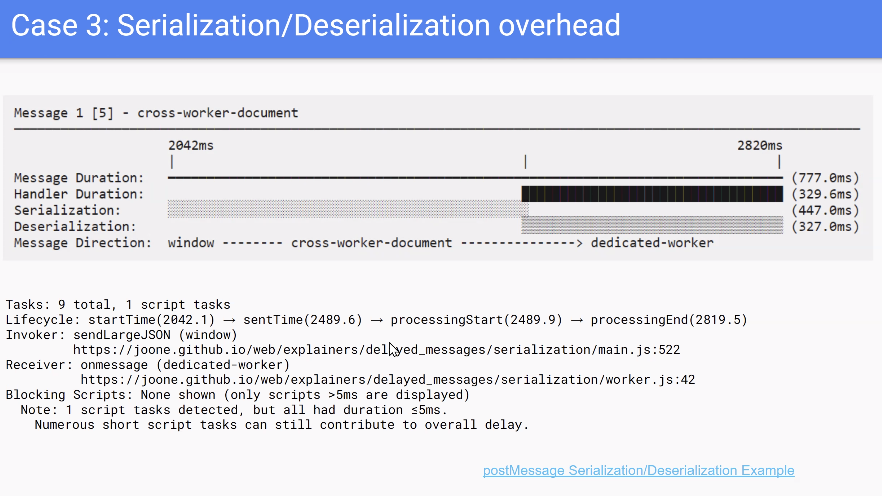

- Joone presented a proposed “delayed message” performance API for `postMessage`, aimed at measuring message delays (including in workers), attributing delays to scripts similarly to LoAF, and adding metrics like `taskCount`, `scriptTaskCount`, and average script duration.

- There was debate on how actionable some metrics are (e.g., task counts and averages, differences in “task” concepts across engines, and browser variance), and whether to expose raw totals instead so developers can derive their own metrics.